Most AI agents powered by Large Language Models (LLMs) struggle with a fundamental limitation: their fixed context windows. Once a conversation outgrows the window, earlier details such as user preferences, past decisions, or even basic facts like your name can be forgotten. This leads to repetitive, impersonal, and incoherent interactions, especially in long-running or multi-session applications.

MemoryOS targets this problem. Inspired by how operating systems manage memory by moving data between registers, RAM, and disk, it introduces a hierarchical, dynamic memory architecture for AI agents. The result is an agent that remembers who you are, what you’ve discussed, and how you prefer to interact across hours, days, or even weeks.

Backed by peer-reviewed research (accepted at EMNLP 2025) and open-sourced on GitHub, MemoryOS reports a 49.11% improvement in F1 and a 46.18% improvement in BLEU-1 on the LoCoMo benchmark relative to the baselines it was compared against. It’s not just theoretical; it’s engineered for real-world integration.

What Problem Does MemoryOS Solve?

Traditional LLM-based agents treat every conversation as isolated. Without persistent memory, they can’t:

- Recall your job title after you mention it once

- Remember you dislike certain topics or formats

- Track the evolution of a multi-day project discussion

- Personalize responses based on historical behavior

This isn’t just inconvenient—it breaks trust and limits utility in professional, educational, or customer-facing scenarios.

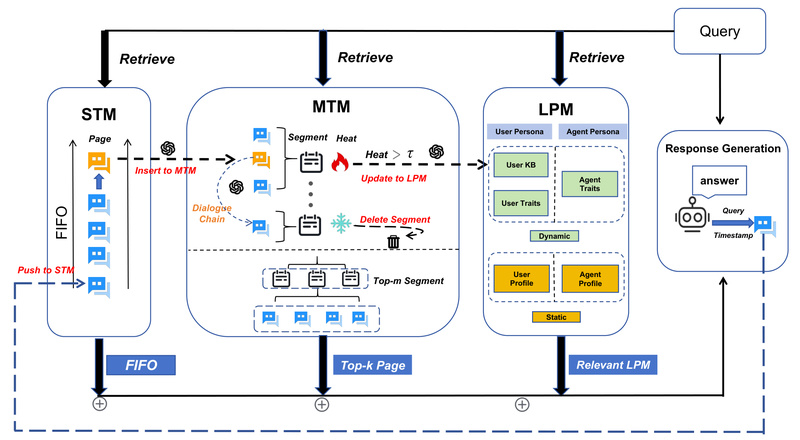

MemoryOS tackles this by implementing a three-tier memory hierarchy:

- Short-term memory: Holds the current conversation thread (like CPU cache).

- Mid-term memory: Stores summarized dialogue chains from recent sessions (like RAM).

- Long-term personal memory: Archives user profiles, preferences, and domain knowledge (like persistent storage).

Crucially, MemoryOS doesn’t just store data—it intelligently updates it. Short-to-mid transfers follow a dialogue-chain-based FIFO policy, while mid-to-long promotions use a segmented page strategy, ensuring only high-value, coherent information is retained long-term.
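To make the hierarchy concrete, here is a minimal Python sketch of a three-tier store. It is not MemoryOS's implementation: the class name, the capacities, and the per-topic "heat" counter used for promotion are all assumptions made for illustration, and the heat counter only stands in for the segmented page strategy, whose details this article does not spell out.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TieredMemory:
    """Toy three-tier store. All names and thresholds are hypothetical."""
    short_capacity: int = 3      # max turns kept in short-term memory
    heat_threshold: int = 2      # recalls needed before promotion
    short_term: deque = field(default_factory=deque)  # (topic, text) turns
    mid_term: dict = field(default_factory=dict)      # topic -> {"turns", "heat"}
    long_term: dict = field(default_factory=dict)     # topic -> promoted turns

    def add_turn(self, topic: str, text: str) -> None:
        self.short_term.append((topic, text))
        # FIFO: once short-term memory is full, the oldest turn moves to mid-term.
        while len(self.short_term) > self.short_capacity:
            old_topic, old_text = self.short_term.popleft()
            segment = self.mid_term.setdefault(old_topic, {"turns": [], "heat": 0})
            segment["turns"].append(old_text)

    def recall(self, topic: str) -> list:
        """Return known turns for a topic, oldest tier first."""
        long_hits = list(self.long_term.get(topic, []))
        mid_hits = []
        segment = self.mid_term.get(topic)
        if segment is not None:
            mid_hits = list(segment["turns"])
            segment["heat"] += 1
            # Promote segments that are recalled often enough.
            if segment["heat"] >= self.heat_threshold:
                self.long_term.setdefault(topic, []).extend(segment["turns"])
                del self.mid_term[topic]
        short_hits = [text for t, text in self.short_term if t == topic]
        return long_hits + mid_hits + short_hits


memory = TieredMemory(short_capacity=2)
memory.add_turn("job", "I work as a nurse")
memory.add_turn("food", "I dislike cilantro")
memory.add_turn("food", "I love ramen")   # pushes the "job" turn into mid-term
print(memory.recall("job"))
```

The point of the sketch is the data flow: new turns always enter the smallest tier, eviction is strictly oldest-first, and only content that keeps getting used earns a place in long-term storage.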

Key Features That Make MemoryOS Stand Out

1. Proven Performance on Real Benchmarks

On the LoCoMo benchmark, which is designed specifically to evaluate long-term memory in conversational agents, MemoryOS is reported to outperform standard RAG and context-window-only approaches. The gain is measured on a public benchmark, not simulated, and it shows up as better contextual coherence and personalization.

2. Plug-and-Play Architecture

MemoryOS is designed as a modular layer. You can swap in different:

- Storage backends (e.g., built-in file system or ChromaDB)

- Embedding models (BAAI/bge-m3, Qwen3-Embedding, all-MiniLM-L6-v2)

- Update and retrieval strategies

This flexibility means you’re not locked into one tech stack.
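One common way to get this kind of swappability is to code against a small interface instead of a concrete store. The sketch below shows the idea with two interchangeable backends; `StorageBackend`, `InMemoryBackend`, `JsonFileBackend`, and `remember_name` are hypothetical names for illustration and are not taken from the MemoryOS codebase.

```python
import json
import os
from typing import Optional, Protocol


class StorageBackend(Protocol):
    """Hypothetical interface that any backend must satisfy."""

    def save(self, key: str, value: str) -> None: ...

    def load(self, key: str) -> Optional[str]: ...


class InMemoryBackend:
    """Keeps everything in a dict; data is lost when the process exits."""

    def __init__(self) -> None:
        self._data = {}

    def save(self, key: str, value: str) -> None:
        self._data[key] = value

    def load(self, key: str) -> Optional[str]:
        return self._data.get(key)


class JsonFileBackend:
    """Persists the same key/value pairs to a single JSON file."""

    def __init__(self, path: str) -> None:
        self._path = path

    def _read(self) -> dict:
        if not os.path.exists(self._path):
            return {}
        with open(self._path, encoding="utf-8") as fh:
            return json.load(fh)

    def save(self, key: str, value: str) -> None:
        data = self._read()
        data[key] = value
        with open(self._path, "w", encoding="utf-8") as fh:
            json.dump(data, fh)

    def load(self, key: str) -> Optional[str]:
        return self._read().get(key)


def remember_name(store: StorageBackend, name: str) -> Optional[str]:
    # The caller never needs to know which backend it was given.
    store.save("user_name", name)
    return store.load("user_name")
```

Because `remember_name` depends only on the interface, moving from a file to a vector database would mean adding one more class with the same two methods.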

3. Universal LLM Compatibility

MemoryOS works with any LLM served through an OpenAI-compatible API, including GPT-4o, Claude, DeepSeek-R1, Qwen3, and locally hosted models via vLLM or LLaMA-Factory. Just provide your API key and base URL.
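In practice, "OpenAI API format" means every provider accepts the same chat-completions request shape, so only the base URL, key, and model name change. The standard-library sketch below builds such a request without sending it. The helper name `build_chat_request` is an assumption, and the URL, key, and model in the usage lines are placeholders, not values from MemoryOS.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: a locally hosted server and a made-up model name.
request = build_chat_request(
    "http://localhost:8000/v1", "sk-placeholder", "my-local-model",
    "What did we decide yesterday?",
)
print(request.full_url)
```

Pointing the same code at a hosted provider is then a configuration change, not a code change.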

4. MemoryOS-MCP: Ready for Agent Workflows

For developers building AI agents (e.g., in Cline, Cursor, or custom platforms), the MemoryOS Model Context Protocol (MCP) server exposes three simple tools:

- add_memory: Log interactions

- retrieve_memory: Fetch relevant history

- get_user_profile: Access synthesized user traits

This turns MemoryOS into a service your agent can call—no deep integration required.
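Under the hood, an MCP client invokes a tool by sending a JSON-RPC 2.0 `tools/call` message. The sketch below builds those messages for the three tools named above. The tool names come from this article, but the argument keys (`user_input`, `agent_response`, `query`) are assumptions for illustration; check the server's published tool schema for the real ones.

```python
import itertools
import json

# Each JSON-RPC request needs a unique id so responses can be matched to it.
_request_ids = itertools.count(1)


def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request for the given tool."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


# Argument keys below are hypothetical.
print(tool_call("add_memory", {"user_input": "I'm vegetarian",
                               "agent_response": "Noted."}))
print(tool_call("retrieve_memory", {"query": "dietary preferences"}))
print(tool_call("get_user_profile", {}))
```

Agent clients such as Cline or Cursor normally generate these messages for you; seeing the wire format mainly helps when debugging a connection.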

Ideal Use Cases for MemoryOS

MemoryOS shines in any scenario where continuity, personalization, and contextual depth matter:

- Personal AI Assistants: Remember your schedule, communication style, and life updates across weeks.

- Customer Support Bots: Recall past tickets, product preferences, and frustration points to deliver empathetic, efficient service.

- Research or Coding Companions: Track project milestones, codebase changes, or literature reviews over time—no more repeating setup instructions.

- Education Tutors: Adapt explanations based on a student’s historical misunderstandings or learning pace.

In short: if your agent needs to “know” the user beyond a single chat session, MemoryOS provides the memory infrastructure.

How to Get Started

MemoryOS offers multiple entry points depending on your use case:

For Quick Prototyping

Install via PyPI and run a demo in minutes:

pip install memoryos-pro

A few lines of Python code let you initialize memory, add interactions, and retrieve context-aware responses.

For Agent Integration

Use MemoryOS-MCP:

- Configure your LLM and storage settings in config.json

- Launch the MCP server

- Connect your agent client (e.g., Cline in VS Code) to call memory tools

For Scalable Deployments

- ChromaDB Support: Switch to vector database storage for large-scale retrieval.

- Docker: Run containerized instances with GPU support for production environments.

- Playground: Experiment via a web UI to explore memory behavior interactively.

All options share the same core architecture—so you can prototype locally and scale seamlessly.

Limitations and Considerations

While powerful, MemoryOS has practical constraints to consider:

- LLM Dependency: It requires an external LLM (via OpenAI-compatible API). You must manage API keys, costs, and rate limits.

- Embedding Model Sensitivity: Switching embedding models (e.g., from BGE-M3 to Qwen3-Embedding) requires a new data storage path. Vectors produced by different models are not comparable, so mixing them in one store leads to vector space mismatches.

- Storage Management: Long-term memory data is stored locally by default; you’ll need to handle persistence, backups, and privacy if deploying in regulated environments.

That said, these are common trade-offs in modular AI systems—not unique flaws. The documentation provides clear guidance for each scenario.
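One simple way to respect the embedding-model constraint is to derive the storage directory from the model name, so a model switch can never reuse old vectors by accident. This is a sketch of that convention, not something MemoryOS does for you; `storage_path_for` and the `memory_data` directory are hypothetical.

```python
import re
from pathlib import Path


def storage_path_for(base_dir: str, embedding_model: str) -> Path:
    """Map an embedding model name to its own storage directory."""
    # Replace characters that are awkward in directory names (such as "/").
    slug = re.sub(r"[^A-Za-z0-9._-]+", "_", embedding_model).strip("_").lower()
    return Path(base_dir) / slug


for model in ("BAAI/bge-m3", "all-MiniLM-L6-v2"):
    print(model, "->", storage_path_for("memory_data", model))
```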

Summary

MemoryOS isn’t just another memory wrapper—it’s a complete memory operating system for AI agents. By borrowing proven concepts from computer architecture and adapting them to LLM limitations, it delivers real, measurable gains in personalization and coherence.

For project and technical decision-makers, it offers a practical shortcut: instead of building fragile, ad-hoc memory logic, you integrate a benchmark-validated system that plugs into your existing LLM stack.

Whether you’re prototyping a personal assistant or deploying enterprise-grade agents, MemoryOS ensures your AI doesn’t just respond—it remembers.