In today’s world of intelligent systems, from autonomous robots to immersive AR experiences, depth perception is essential. Yet most cameras capture only 2D RGB images. MiDaS, a monocular depth estimation model, addresses this by estimating dense relative depth maps from a single image, without requiring stereo cameras, LiDAR, or other specialized sensors. Developed by Intel Labs and continuously refined since 2019, MiDaS has evolved into a robust, open-source toolkit used across research and industry.

MiDaS v3.1 marks a major leap forward, introducing a model zoo built on modern vision backbones—including BEiT, Swin, SwinV2, LeViT, and Next-ViT—enabling users to choose the ideal balance between accuracy, speed, and hardware constraints. Whether you’re building a mobile AR app, prototyping a robot navigation system, or generating 3D content from text, MiDaS offers plug-and-play depth estimation that works out-of-the-box across diverse environments—even on datasets it was never trained on.

Why MiDaS Stands Out

A Model Zoo for Every Use Case

Unlike one-size-fits-all depth estimators, MiDaS v3.1 provides a curated selection of models, each optimized for different scenarios:

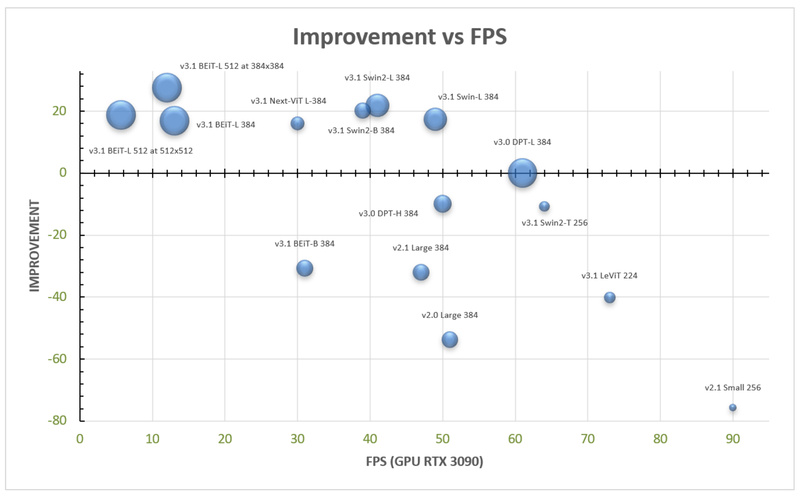

- dpt_beit_large_512: Highest accuracy (28% improvement over v3.0), ideal for offline, high-fidelity applications.

- dpt_swin2_large_384: Best trade-off between quality and inference speed—great for real-time desktop or edge systems.

- dpt_swin2_tiny_256 and dpt_levit_224: Lightweight models for resource-constrained devices, including mobile and embedded platforms.

This flexibility empowers technical decision-makers to align model choice with project constraints—without sacrificing robustness.
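The model choice described above can be captured in a small lookup. This is only an illustrative sketch: the priority labels and the `pick_model` helper are hypothetical names invented here, while the model identifiers are the ones listed in this article.

```python
# Model recommendations as listed in this article.
# The keys ("quality", "balanced", "embedded") are hypothetical labels.
MODEL_BY_PRIORITY = {
    "quality": "dpt_beit_large_512",    # highest accuracy, offline use
    "balanced": "dpt_swin2_large_384",  # quality/speed trade-off
    "embedded": "dpt_swin2_tiny_256",   # resource-constrained devices
}


def pick_model(priority):
    """Return the model identifier recommended for a given priority label."""
    try:
        return MODEL_BY_PRIORITY[priority]
    except KeyError:
        valid = ", ".join(sorted(MODEL_BY_PRIORITY))
        raise ValueError(f"unknown priority {priority!r}; expected one of: {valid}") from None
```

The returned string is intended to be used wherever a model type is requested, for example as the value of --model_type.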

Zero-Shot Generalization That Just Works

MiDaS is trained on 12 diverse datasets (including KITTI, NYU Depth V2, MegaDepth, and TartanAir) using multi-objective optimization. Crucially, it excels in zero-shot transfer: it performs reliably on scenes and domains it has never seen during training. This often removes the need for costly per-domain fine-tuning, a major pain point in deploying depth estimation systems in the real world.

Seamless Integration Across Stacks

MiDaS isn’t just a research artifact—it’s built for deployment:

- PyTorch Hub: Load pretrained models with a single line of code.

- Docker: Containerized inference with GPU support.

- OpenVINO: Optimized CPU inference for Intel hardware (via the legacy v2.1 small model).

- Mobile: iOS and Android support for on-device depth sensing.

- ROS: Ready for robotics integration (currently v2.1; DPT models coming soon).

This ecosystem readiness drastically reduces time-to-prototype and time-to-production.
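As a rough sketch of the PyTorch Hub route, the function below loads a model and its matching preprocessing, then resizes the prediction back to the input resolution. It assumes PyTorch and the hub repository's dependencies are installed and that the machine has network access on first use. The entry names used here (DPT_Large, MiDaS_small, transforms) come from earlier MiDaS releases; the v3.1 entry names and their transforms should be checked in the repository's hubconf.py. The `estimate_depth` helper is a hypothetical name.

```python
HUB_REPO = "intel-isl/MiDaS"  # GitHub repository resolved by PyTorch Hub


def estimate_depth(image_rgb, model_name="DPT_Large"):
    """Return a relative inverse-depth map for one image.

    image_rgb: H x W x 3 uint8 NumPy array in RGB channel order.
    Returns an H x W NumPy array; larger values mean closer surfaces.
    """
    # Imported lazily so this file can be imported without PyTorch installed.
    import torch

    model = torch.hub.load(HUB_REPO, model_name)
    model.eval()

    # The "transforms" entry point bundles the preprocessing for each model family.
    transforms = torch.hub.load(HUB_REPO, "transforms")
    if model_name == "MiDaS_small":
        transform = transforms.small_transform
    else:
        transform = transforms.dpt_transform

    batch = transform(image_rgb)
    with torch.no_grad():
        prediction = model(batch)
        # Resize the network output back to the original image size.
        prediction = torch.nn.functional.interpolate(
            prediction.unsqueeze(1),
            size=image_rgb.shape[:2],
            mode="bicubic",
            align_corners=False,
        ).squeeze()
    return prediction.cpu().numpy()
```

This sketch runs on the CPU; moving the model and batch to a GPU is a small extension.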

Where MiDaS Delivers Real Value

Robotics and Autonomous Systems

Robots navigating unknown environments need spatial awareness. MiDaS provides immediate depth cues from standard RGB cameras, enabling obstacle avoidance, terrain assessment, and path planning—even when metric depth isn’t strictly required.

AR/VR and 3D Scene Understanding

Augmented reality apps rely on understanding scene geometry. MiDaS delivers real-time depth maps that allow virtual objects to correctly occlude or interact with physical surfaces—using only a smartphone camera.
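The occlusion idea can be sketched as a per-pixel comparison. This is a minimal illustration and not part of MiDaS: `occlusion_mask` is a hypothetical helper, and it assumes the virtual object's depth has already been expressed in the same relative inverse-depth units as the MiDaS output (larger means closer), which a real AR pipeline would have to arrange.

```python
def occlusion_mask(scene_inv_depth, object_inv_depth):
    """Return a 2D list of booleans: True where the virtual object is visible.

    scene_inv_depth:  2D list of relative inverse depth for the real scene.
    object_inv_depth: 2D list of the same shape; None where the object is absent.
    Larger values mean closer to the camera (assumption stated in the text).
    """
    mask = []
    for scene_row, object_row in zip(scene_inv_depth, object_inv_depth):
        mask.append(
            [obj is not None and obj > scene for scene, obj in zip(scene_row, object_row)]
        )
    return mask


scene = [[0.2, 0.8], [0.5, 0.5]]
virtual = [[0.6, 0.6], [None, 0.5]]
visible = occlusion_mask(scene, virtual)
```

Pixels where the mask is False would show the camera image, so real surfaces appear in front of the virtual object there.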

Generative AI and 3D Content Creation

MiDaS powers cutting-edge generative models like LDM3D, which produces both RGB images and depth maps from text prompts. The depth maps used to train LDM3D were generated by MiDaS, proving its reliability as a foundational component in 3D generative pipelines.

Academic Prototyping and Rapid Experimentation

For researchers, MiDaS removes the barrier of collecting or labeling depth data. Its zero-shot robustness makes it an ideal starting point for experiments in vision, graphics, and embodied AI—accelerating validation cycles without domain-specific tuning.

Getting Started in Minutes

MiDaS is designed for simplicity:

- Set up the environment:

conda env create -f environment.yaml

conda activate midas-py310

- Download a model weight (e.g., dpt_beit_large_512 for best quality) into the weights folder.

- Run inference:

python run.py --model_type dpt_beit_large_512 --input_path input --output_path output

For live camera depth estimation:

python run.py --model_type dpt_swin2_tiny_256 --side

The --side flag displays RGB and depth side-by-side in real time.

Optional flags like --height and --square let you fine-tune input resolution—critical for optimizing speed on edge devices.
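For scripted runs, the commands above can be assembled programmatically. The sketch below only builds the argument list from the flags mentioned in this article; `build_midas_command` is a hypothetical helper, and it assumes --height takes an integer while --side and --square are plain switches.

```python
import shlex


def build_midas_command(model_type, input_path=None, output_path=None,
                        side=False, height=None, square=False):
    """Build an argument list for run.py using only flags named in this article."""
    cmd = ["python", "run.py", "--model_type", model_type]
    if input_path is not None:
        cmd += ["--input_path", str(input_path)]
    if output_path is not None:
        cmd += ["--output_path", str(output_path)]
    if side:
        cmd.append("--side")
    if height is not None:
        # Assumption: --height expects an integer number of pixels.
        cmd += ["--height", str(int(height))]
    if square:
        cmd.append("--square")
    return cmd


# Live-camera example: no input path, small model, reduced square resolution.
camera_cmd = build_midas_command("dpt_swin2_tiny_256", side=True, height=256, square=True)
print(shlex.join(camera_cmd))
```

The resulting list can be passed to `subprocess.run(camera_cmd, check=True)` from the repository root.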

Understanding Trade-offs and Limitations

While powerful, MiDaS has important constraints to consider:

- Relative Depth Only: MiDaS predicts relative depth (inverse depth up to an unknown scale and shift), not metric depth in meters. For metric depth, consider a model such as ZoeDepth, which builds on MiDaS-style relative depth and adds a metric binning stage.

- Resolution vs. Speed: Higher-resolution models (e.g., 512×512) offer superior detail but run at only ~5–12 FPS even on a high-end GPU (e.g., RTX 3090). Low-res models (256×256) can exceed 60 FPS on the same hardware.

- Square Inputs for Some Backbones: Models based on Swin, SwinV2, and LeViT require square inputs (--square flag), which may distort aspect ratios if not handled carefully.

- No Fine-Grained Control: MiDaS is a general-purpose estimator. If your application demands pixel-perfect accuracy in a narrow domain (e.g., surgical imaging), domain-specific training may still be necessary.
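One generic way to handle the square-input constraint without stretching the image is letterboxing: scale the image to fit inside the square, then pad the remainder. The sketch below computes only the geometry. It is not what the --square flag does, `letterbox_geometry` is a hypothetical helper, and padded borders can themselves influence predictions near the image edges.

```python
def letterbox_geometry(width, height, target):
    """Fit a width x height image into a target x target square, preserving aspect ratio.

    Returns the resized (width, height) and the padding (left, top, right, bottom)
    needed to reach the square size.
    """
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    pad_x = target - new_w
    pad_y = target - new_h
    left, top = pad_x // 2, pad_y // 2
    return {
        "size": (new_w, new_h),
        "pad": (left, top, pad_x - left, pad_y - top),
    }


geometry = letterbox_geometry(640, 480, 256)
```

After inference, the same padding values can be used to crop the depth map back to the original aspect ratio.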

These limitations are well-documented and manageable—especially when weighed against the alternative of building a depth system from scratch.
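To make the relative-depth limitation concrete: when a few true distances in the scene are known, a common workaround is to fit a scale and shift that map the relative prediction onto inverse metric depth by least squares. The sketch below is a minimal pure-Python version under that assumption. The helper names are hypothetical, the sample numbers are made up for illustration, and this is not the method used by ZoeDepth.

```python
def fit_scale_shift(pred, target_inv_depth):
    """Least-squares fit of target ~ scale * pred + shift.

    pred:             relative inverse-depth values at reference pixels.
    target_inv_depth: 1 / (true distance in meters) at the same pixels.
    """
    n = len(pred)
    if n < 2 or n != len(target_inv_depth):
        raise ValueError("need at least two matching reference points")
    mean_p = sum(pred) / n
    mean_t = sum(target_inv_depth) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    if var_p == 0:
        raise ValueError("reference predictions must not all be equal")
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target_inv_depth))
    scale = cov / var_p
    shift = mean_t - scale * mean_p
    return scale, shift


def to_metric_depth(pred_value, scale, shift):
    """Convert one relative inverse-depth value to meters using a fitted scale/shift."""
    inv_depth = scale * pred_value + shift
    if inv_depth <= 0:
        raise ValueError("aligned inverse depth is not positive")
    return 1.0 / inv_depth


# Illustrative values only: three reference pixels with known distances.
relative = [100.0, 200.0, 400.0]
true_meters = [4.0, 2.0, 1.0]
scale, shift = fit_scale_shift(relative, [1.0 / d for d in true_meters])
estimated = to_metric_depth(300.0, scale, shift)
```

The fit is only as good as the reference measurements, and it has to be repeated per image because the scale and shift are not consistent across frames.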

Summary

MiDaS v3.1 delivers state-of-the-art monocular depth estimation with unprecedented flexibility, robustness, and ease of use. By offering a spectrum of models—from ultra-accurate BEiT variants to lean LeViT architectures—it empowers developers and researchers to integrate depth perception into their projects without specialized hardware or extensive retraining. Its proven zero-shot performance across diverse scenes, coupled with support for PyTorch, Docker, mobile, and ROS, makes MiDaS not just a research tool, but a production-ready asset. If your work involves understanding 3D structure from 2D images, MiDaS is a compelling, community-backed solution worth adopting today.