Imagine needing realistic, physics-compliant character movement for a game, simulation, or robotics project—but without the months of trial, error, and low-level infrastructure work usually required. That’s where MimicKit comes in.

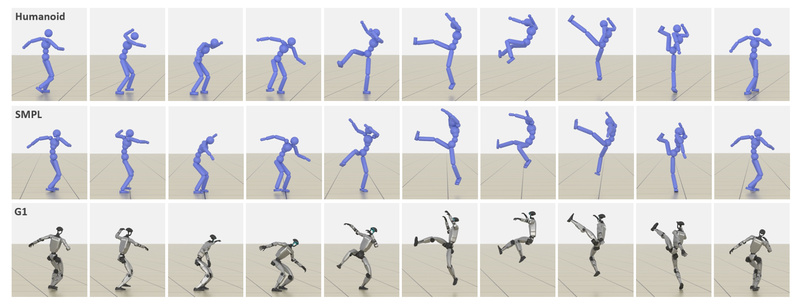

MimicKit is an open-source reinforcement learning (RL) framework purpose-built for motion imitation and control. It enables developers, researchers, and engineers to train motion controllers that replicate reference motion clips—from simple walks to complex acrobatic maneuvers—while respecting real-world physics. By combining established motion-imitation methods with proven RL algorithms, MimicKit offers a streamlined, modular path to building lifelike, dynamically stable characters across domains like gaming, animation, robotics, and virtual prototyping.

Unlike from-scratch implementations, MimicKit provides a standardized, lightweight codebase that reduces boilerplate, minimizes dependencies, and accelerates experimentation. Whether you’re replicating a character’s spin kick or teaching a robot to navigate uneven terrain using human-like gait patterns, MimicKit helps you get there faster and more reliably.

Why MimicKit Stands Out

Built-In Support for State-of-the-Art Motion Imitation Methods

MimicKit ships with ready-to-use implementations of four influential motion imitation techniques:

- DeepMimic: The foundational method that uses reference motions as a guide for learning complex physical skills via deep reinforcement learning.

- AMP (Adversarial Motion Priors): Enables learning from large, unstructured motion datasets while ensuring naturalistic and diverse behaviors.

- ASE (Adversarial Skill Embeddings): Facilitates reusable, composable skill representations that can be combined for complex behaviors.

- ADD (Adversarial Differential Discriminators): A newer technique in which a discriminator evaluates the difference between the simulated and reference motion, allowing accurate motion tracking with less reliance on manually tuned reward functions.

These methods are no longer confined to research papers—MimicKit makes them accessible and plug-and-play.
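To make the difference between tracking-based and adversarial imitation more concrete, here is a minimal sketch of the two reward styles. It is not MimicKit's code: the function names are hypothetical, the pose error is simplified to a plain squared difference over joint values (DeepMimic itself combines several weighted terms and uses rotation differences), and the adversarial reward follows the least-squares form described in the AMP paper.

```python
import math

def tracking_reward(sim_pose, ref_pose, scale=2.0):
    """DeepMimic-style pose term: exp(-scale * squared pose error).

    Assumption: poses are flat sequences of joint values, and the error
    is a plain squared difference rather than a rotation difference.
    """
    err = sum((s - r) ** 2 for s, r in zip(sim_pose, ref_pose))
    return math.exp(-scale * err)

def adversarial_style_reward(disc_score):
    """AMP-style reward from a least-squares discriminator score d:
    max(0, 1 - 0.25 * (d - 1)^2). A score of 1 means "looks like data"."""
    return max(0.0, 1.0 - 0.25 * (disc_score - 1.0) ** 2)
```

A tracking reward compares the character against one specific reference frame, while the adversarial reward only asks whether a transition looks like it came from the dataset, which is what lets AMP-style methods work with unstructured collections of clips.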

Proven Reinforcement Learning Algorithms

To power training, MimicKit integrates two widely adopted RL algorithms:

- PPO (Proximal Policy Optimization): A robust, on-policy method known for stable and efficient learning in continuous control tasks.

- AWR (Advantage-Weighted Regression): An off-policy algorithm that updates the policy with simple regression steps weighted by advantage, and can reuse previously collected experience for greater data efficiency.

This dual support gives users flexibility depending on whether they prefer the stability of on-policy updates on freshly simulated experience or the sample reuse of off-policy learning.
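The core of each algorithm fits in a few lines. The sketch below shows the per-sample PPO clipped surrogate and the AWR regression weight as they appear in the original papers. The function names, the default clip range, the temperature, and the weight cap are illustrative assumptions, not values taken from MimicKit.

```python
import math

def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO surrogate for one sample: min(r * A, clip(r, 1-eps, 1+eps) * A),
    where r is the new-to-old policy probability ratio."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)

def awr_weight(advantage, temperature=1.0, max_weight=20.0):
    """AWR regression weight for one sample: exp(A / temperature),
    capped to keep a few large advantages from dominating the update."""
    return min(math.exp(advantage / temperature), max_weight)
```

PPO limits how far a single update can move the policy, while AWR turns the policy update into a weighted supervised regression toward actions that turned out better than expected.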

Modular, Lightweight, and Simulator-Agnostic

MimicKit’s architecture is intentionally clean and modular. Environment, agent, and motion data are decoupled through standardized interfaces, making it easy to:

- Swap characters (e.g., from humanoid to quadruped)

- Change motion sources (single clip vs. full dataset)

- Adapt reward functions or observation spaces

It also supports two leading GPU-accelerated simulators: Isaac Gym and Isaac Lab. By abstracting the simulation backend behind a simple engine_name configuration, MimicKit lets you choose the best tool for your hardware and performance needs without code rewrites.
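One common way to hide a simulator behind a single configuration key is a small factory registry. The sketch below illustrates that pattern only. The registry, the factory functions, and the string values "isaac_gym" and "isaac_lab" are hypothetical stand-ins rather than MimicKit's actual classes or accepted values; only the engine_name key comes from the text above.

```python
from typing import Callable, Dict

# Hypothetical registry mapping an engine_name value to a factory.
_ENGINES: Dict[str, Callable[[dict], dict]] = {}

def register_engine(name: str):
    """Decorator that records a backend factory under the given name."""
    def decorator(factory):
        _ENGINES[name] = factory
        return factory
    return decorator

@register_engine("isaac_gym")
def _make_isaac_gym(config: dict) -> dict:
    # Stand-in for constructing a real Isaac Gym wrapper.
    return {"backend": "isaac_gym", "num_envs": config.get("num_envs", 1)}

@register_engine("isaac_lab")
def _make_isaac_lab(config: dict) -> dict:
    # Stand-in for constructing a real Isaac Lab wrapper.
    return {"backend": "isaac_lab", "num_envs": config.get("num_envs", 1)}

def build_engine(config: dict) -> dict:
    """Pick the backend named by config['engine_name']."""
    name = config["engine_name"]
    if name not in _ENGINES:
        raise ValueError(f"Unknown engine_name: {name!r}")
    return _ENGINES[name](config)
```

With this kind of design, environment code calls build_engine(config) and never imports a simulator directly, so switching backends is a one-line config change.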

Practical Applications and Ideal Scenarios

MimicKit solves real problems for teams working on dynamic character control:

- Game Development: Rapidly prototype NPCs with physically grounded locomotion, jumps, or combat moves—without relying solely on keyframed animation.

- Robotics Research: Train robot controllers that mimic human motion patterns for tasks like walking, carrying, or balancing, improving generalization and robustness.

- Virtual Humans & Simulation: Create avatars for VR training, digital twins, or film previsualization that move naturally under physics constraints.

- Academic Prototyping: Test new RL or imitation learning ideas against standardized baselines with minimal setup overhead.

By grounding motion in physics, MimicKit avoids the “floaty” or unstable behaviors common in purely kinematic systems. At the same time, its reliance on reference motion helps keep behaviors stylistically faithful to the source clips.

Getting Started: A Simple, Scriptable Workflow

Using MimicKit requires no deep framework expertise. The typical workflow is straightforward:

- Set up your environment: Install your preferred simulator (Isaac Gym or Isaac Lab) and MimicKit’s Python dependencies.

- Download motion assets: Reference motion clips (in .pkl format) and character models are provided in the data/ directory.

- Configure via YAML: Define your environment and agent using human-readable config files (e.g., deepmimic_humanoid_env.yaml).

- Train or test via CLI: Launch with a single command:

python mimickit/run.py --mode train --env_config data/envs/deepmimic_humanoid_env.yaml --agent_config data/agents/deepmimic_humanoid_ppo_agent.yaml --num_envs 4096 --visualize false

Testing a pretrained model is equally simple:

python mimickit/run.py --mode test --model_file data/models/deepmimic_humanoid_spinkick_model.pt --visualize true

Logs can be tracked via TensorBoard or Weights & Biases, and training scales across multiple GPUs using the --devices flag. For teams that prefer script-based configuration over CLI arguments, .txt argument files (e.g., in args/) offer a reproducible alternative.
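For sweeps or automated jobs, it can be convenient to assemble the training command programmatically. The helper below is a hypothetical convenience wrapper, not part of MimicKit. It only rebuilds the command shown above from the same flags, and running it is left to the caller (for example with subprocess.run).

```python
import shlex

def build_train_command(env_config, agent_config, num_envs=4096, visualize=False):
    """Return the training command from this article as an argument list."""
    return [
        "python", "mimickit/run.py",
        "--mode", "train",
        "--env_config", env_config,
        "--agent_config", agent_config,
        "--num_envs", str(num_envs),
        "--visualize", "true" if visualize else "false",
    ]

cmd = build_train_command(
    "data/envs/deepmimic_humanoid_env.yaml",
    "data/agents/deepmimic_humanoid_ppo_agent.yaml",
)
# Print a shell-safe version of the command for logging or copy-paste.
print(shlex.join(cmd))
```

Keeping the command as a list avoids shell-quoting mistakes when config paths contain spaces.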

Limitations and Practical Considerations

While powerful, MimicKit isn’t a universal solution. Decision-makers should be aware of the following constraints:

- Simulator dependency: MimicKit currently supports only Isaac Gym and Isaac Lab, both of which require NVIDIA GPUs and specific driver setups.

- Motion data format: Reference motions must follow MimicKit’s internal representation (3D exponential maps for multi-DoF joints, scalars for 1D joints) and be stored as .pkl files. Retargeting from other formats (e.g., BVH) requires preprocessing, though tools like GMR conversion scripts are included.

- Compute requirements: Large-scale training (e.g., 4096+ parallel environments) demands substantial GPU memory and is best suited for workstation or cloud setups.
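Since many motion formats store joint rotations as quaternions, preprocessing often involves converting them to exponential maps (rotation axis scaled by rotation angle). The function below is a generic sketch of that conversion. The (w, x, y, z) component order is an assumption, and the function is not taken from MimicKit, so check the convention your source data and MimicKit's tools actually use.

```python
import math

def quat_to_exp_map(w, x, y, z):
    """Convert a quaternion (assumed w-first) to an exponential map,
    i.e. the unit rotation axis multiplied by the rotation angle in radians."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    if w < 0.0:
        # q and -q encode the same rotation; pick the shorter one.
        w, x, y, z = -w, -x, -y, -z
    sin_half = math.sqrt(x * x + y * y + z * z)
    if sin_half < 1e-8:
        # Near-identity rotation: the axis is undefined, the map is zero.
        return (0.0, 0.0, 0.0)
    angle = 2.0 * math.atan2(sin_half, w)
    scale = angle / sin_half
    return (x * scale, y * scale, z * scale)
```

For example, a 90-degree rotation about the z axis maps to a vector pointing along z with length pi/2.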

These aren’t dealbreakers—but they do mean MimicKit fits best in environments where GPU-accelerated physics simulation is already part of the stack.

When to Choose MimicKit Over Alternatives

MimicKit excels when your priority is rapid, reliable prototyping of physics-based motion controllers using established imitation learning methods. Its clean codebase and minimal dependencies make it ideal for:

- Academic labs validating new control strategies

- Indie studios experimenting with procedural animation

- Robotics teams bootstrapping humanoid controllers

For projects requiring deeper customization (e.g., novel reward shaping, non-standard kinematic trees) or integration with non-NVIDIA simulators, the more feature-rich ProtoMotions framework (from the same authors) may be a better fit. But for most teams seeking a balance of simplicity, performance, and research-grade methods, MimicKit hits the sweet spot.

Summary

MimicKit lowers the barrier to creating intelligent, physics-aware characters by packaging cutting-edge motion imitation research into a lightweight, easy-to-use framework. With built-in support for DeepMimic, AMP, ASE, and ADD—and seamless integration with high-performance simulators like Isaac Gym—it enables faster iteration, more realistic behaviors, and greater reproducibility. If your work involves teaching virtual or physical agents to move like humans, MimicKit is a compelling starting point that avoids reinventing the wheel.