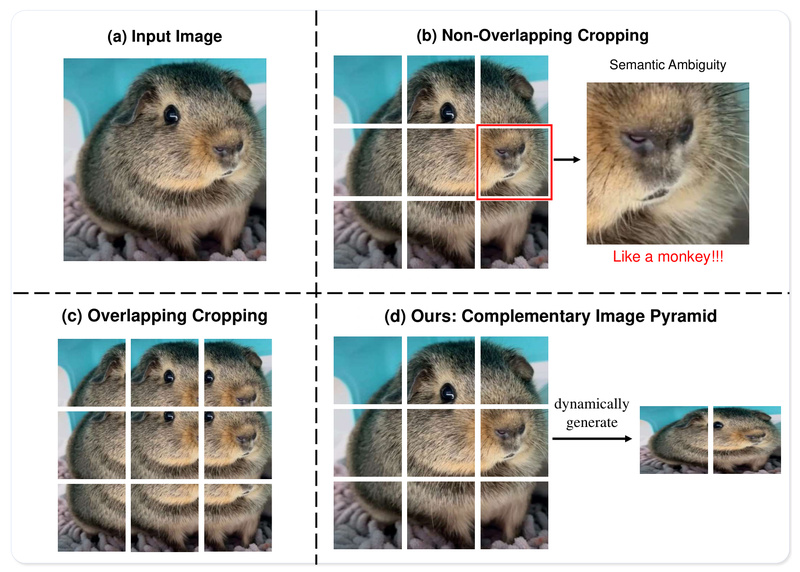

When it comes to deploying multimodal large language models (MLLMs) in real-world applications—especially on cost-sensitive or edge devices—lightweight models are often the only practical option. Yet, these efficient models frequently struggle with high-resolution images. The problem? Standard sliding-window cropping strategies break up visual scenes, cutting off small text, disconnected symbols, or irregularly shaped objects. This leads to what the Mini-Monkey team calls the semantic sawtooth effect: a jarring loss of context and coherence that cripples understanding.

Mini-Monkey directly tackles this issue. Built as a lightweight yet high-performing MLLM, it introduces a simple but powerful architectural enhancement—Complementary Image Pyramid (CIP)—that ensures semantic continuity across image scales without blowing up computational costs. The result? A 2B-parameter model that outperforms much larger 8B counterparts on document understanding benchmarks like OCRBench, all while remaining practical to train and deploy.

Why the “Semantic Sawtooth Effect” Matters in Practice

Most high-resolution MLLMs process images by dividing them into fixed-size crops. While this approach scales to higher resolutions, it often severs critical visual elements at crop boundaries—particularly problematic for dense, structured inputs like invoices, academic papers, or schematics.

For example:

- A receipt might have a product name split across two crops.

- A scientific diagram could have labels partially cut off.

- Handwritten notes with irregular layouts become fragmented.

Lightweight models, which lack the representational capacity of their larger siblings, suffer most from this fragmentation. Their performance drops sharply because they can’t infer missing context. Mini-Monkey identifies this as a structural flaw in current high-resolution pipelines—not just a data or training issue—and proposes a plug-and-play fix that works across model families.

Core Innovations: CIP and SCM

Complementary Image Pyramid (CIP)

Instead of relying solely on a single high-res crop layout, Mini-Monkey dynamically constructs a multi-scale image pyramid. This pyramid includes both the original high-resolution crops and coarser, downsampled versions that retain global structure.

By feeding these complementary views into the vision encoder, the model gains:

- Local detail from high-res crops (e.g., individual characters in fine print).

- Global context from lower-res overviews (e.g., table structure, document layout).

This dual perspective dramatically reduces semantic discontinuity, enabling accurate recognition of small or fragmented visual elements.

Scale Compression Mechanism (SCM)

Adding extra image scales could easily inflate token count and memory usage. To prevent this, Mini-Monkey employs SCM, which selectively compresses redundant visual tokens from lower-resolution layers. This keeps the total input length manageable—critical for maintaining inference speed and GPU memory efficiency.

Importantly, both CIP and SCM are modular. They’ve been validated across diverse backbones like MiniCPM-V-2, InternVL2, and LLaVA-OneVision, in both fine-tuned and training-free settings.

Where Mini-Monkey Excels: Ideal Use Cases

Mini-Monkey shines in scenarios where high-resolution integrity and text-rich understanding are non-negotiable:

- Document Intelligence: Parsing receipts, forms, contracts, and academic PDFs without OCR post-processing.

- OCR-Heavy Applications: Achieving state-of-the-art results on OCRBench—its 2B version scores 802, surpassing InternVL2-8B by 12 points.

- Edge Deployments: Thanks to its 1.8B–2B scale (based on internlm2-chat-1.8b), it runs efficiently on modest hardware, making it viable for on-device or cloud-cost-sensitive use.

- General Multimodal QA: Strong performance across MME, SeedBench_IMG, and MathVista-MiniTest shows it’s not just a document specialist.

If your project involves extracting structured meaning from complex visual layouts—especially where commercial OCR engines fail or add latency—Mini-Monkey offers a compelling alternative.

Getting Started: Easy Integration Paths

Adopting Mini-Monkey is straightforward, whether you’re prototyping or deploying at scale.

Environment Setup

conda create -n monkey python=3.9 conda activate monkey git clone https://github.com/Yuliang-Liu/Monkey.git cd Monkey pip install -r requirements.txt

Install compatible FlashAttention (e.g., for CUDA 11.7 + PyTorch 2.0) as noted in the repo.

Inference in One Line

Once you’ve downloaded the pre-trained weights:

python ./inference.py --model_path Mini-Monkey --image_path your_image.jpg --question "What does this document say?"

Demo and Customization

The repo includes both basic (demo.py) and chat-enhanced (demo_chat.py) interfaces. You can run it offline with local weights or online by pulling from Hugging Face.

Training (If Needed)

Full training scripts are provided (finetune_ds_debug.sh, etc.). Notably, full training requires only eight RTX 3090 GPUs—remarkably accessible for a high-performing MLLM.

Practical Limitations to Consider

While Mini-Monkey is highly capable in its niche, it’s not a universal replacement for massive models:

- License: The code and weights are for non-commercial use only. Commercial applications require direct inquiry.

- Base Model Dependency: Built on internlm2-chat-1.8b, so its language capabilities are bounded by that foundation.

- Training Hardware: Although inference is lightweight, training from scratch still demands multi-GPU setups (8×RTX 3090 as reported).

- Scope: Optimized for high-res understanding and document tasks—not necessarily superior in open-ended image generation or abstract reasoning.

That said, for its target domain—high-fidelity, resolution-sensitive multimodal understanding—it sets a new bar for efficiency and accuracy.

Summary

Mini-Monkey solves a real, overlooked problem in lightweight multimodal AI: the fragmentation of meaning when processing high-resolution images. Through its Complementary Image Pyramid and Scale Compression Mechanism, it delivers robust, context-aware understanding without excessive compute overhead. With top-tier performance on document and OCR benchmarks, easy integration, and modest resource requirements, it’s an excellent choice for developers and researchers building practical, vision-language applications under real-world constraints.