Multimodal Large Language Models (MLLMs) promise to transform how machines understand images, videos, and text, but most top-performing models come with steep computational costs, closed-source licenses, or impractical latency. MiniCPM-V 4.5, an open-source 8B-parameter model from OpenBMB, changes this equation. On the OpenCompass average it outscores proprietary systems like GPT-4o-latest and much larger open-source models like Qwen2.5-VL 72B, while still running efficiently on consumer devices, including iPhones and laptops.

What makes MiniCPM-V 4.5 stand out isn’t just its benchmark scores—it’s how it bridges the gap between cutting-edge capability and real-world deployability. Whether you’re building a mobile document scanner, analyzing surveillance footage, or deploying a privacy-aware vision assistant on the edge, MiniCPM-V 4.5 offers a rare combination of accuracy, efficiency, and ease of integration.

Performance That Defies Scale

MiniCPM-V 4.5 achieves an average score of 77.0 on OpenCompass, a comprehensive evaluation across eight major vision-language benchmarks. This places it ahead of GPT-4o-latest, Gemini-2.0 Pro, and even the much larger Qwen2.5-VL 72B—all with only 8 billion parameters.

On video understanding, the advantage shows up most clearly in efficiency. On the Video-MME benchmark, MiniCPM-V 4.5 scores 73.5. That essentially matches GLM-4.1V (10.3B, 73.6) and is ahead of Qwen2.5-VL-7B (71.6). Crucially, it gets there with far lower resource consumption: 28GB of GPU memory versus 60GB for Qwen2.5-VL-7B, and 0.26 hours of total inference time versus 3 hours. That makes it over 11x faster on the same hardware.

This isn’t just an incremental improvement; it’s a redefinition of what’s possible in efficient multimodal AI.
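The resource figures above are easy to sanity-check. This minimal Python sketch recomputes the ratios from the two reported data points only (28GB and 0.26 h versus 60GB and 3 h). The dictionary and function names are illustrative, and no new measurements are involved.

```python
# Video-MME resource figures as reported in the paragraph above.
MINICPM_V45 = {"gpu_mem_gb": 28.0, "time_h": 0.26}
QWEN25_VL_7B = {"gpu_mem_gb": 60.0, "time_h": 3.0}


def relative_cost(model: dict, baseline: dict) -> dict:
    """Express a model's resource use relative to a baseline."""
    mem_fraction = model["gpu_mem_gb"] / baseline["gpu_mem_gb"]
    time_fraction = model["time_h"] / baseline["time_h"]
    return {
        "memory_share_pct": round(100 * mem_fraction, 1),         # share of baseline memory
        "memory_saving_pct": round(100 * (1 - mem_fraction), 1),  # reduction vs. baseline
        "time_share_pct": round(100 * time_fraction, 1),          # share of baseline time
        "speedup_x": round(1 / time_fraction, 1),                 # baseline time / model time
    }


print(relative_cost(MINICPM_V45, QWEN25_VL_7B))
# {'memory_share_pct': 46.7, 'memory_saving_pct': 53.3, 'time_share_pct': 8.7, 'speedup_x': 11.5}
```

The 46.7% figure is the share of the baseline's memory. The actual saving is therefore about 53%, and the two numbers are easy to conflate.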

Efficiency Designed for Real Constraints

Many MLLMs claim “efficiency,” but few deliver in practice. MiniCPM-V 4.5 was built from the ground up to operate within real-world limits:

- 46.7% of the GPU memory of Qwen2.5-VL 7B on video tasks (28GB vs. 60GB), a reduction of roughly 53%

- 8.7% of the inference time (0.26 h vs. 3 h), over 11x faster

- Supports int4, AWQ, and GGUF quantization, enabling deployment on devices with as little as 8GB of RAM

- Runs smoothly on Apple Silicon via MPS and on iOS via a dedicated app

For developers managing cloud costs or building on-device applications, these gains translate directly into lower operational expenses, better user experiences, and the ability to run sophisticated vision models without specialized hardware.
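The "as little as 8GB of RAM" point in the list above is easier to judge with a rough weight-size estimate. The sketch below makes two simplifying assumptions. First, it treats the model as exactly 8×10⁹ parameters. Second, it counts only the weights. Real 4-bit files (int4, AWQ, or GGUF variants) are somewhat larger because they also store quantization scales. The KV cache, activations, and image or video tokens also need memory on top of the weights.

```python
# Weight-only memory estimate for an ~8B-parameter model at two precisions.
PARAMS = 8e9  # assumption: the "8B" from the text, taken as exactly 8e9


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Bytes needed for the weights alone (params * bits / 8), expressed in GiB."""
    return params * bits_per_weight / 8 / 2**30


for label, bits in (("bf16", 16), ("4-bit (int4/AWQ)", 4)):
    print(f"{label:>16}: {weight_gib(PARAMS, bits):.1f} GiB")
```

At 16 bits, the weights alone (about 14.9 GiB) exceed an 8GB device. At 4 bits they take about 3.7 GiB, which leaves headroom. This is why quantization is what makes the small-device deployments listed above practical.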

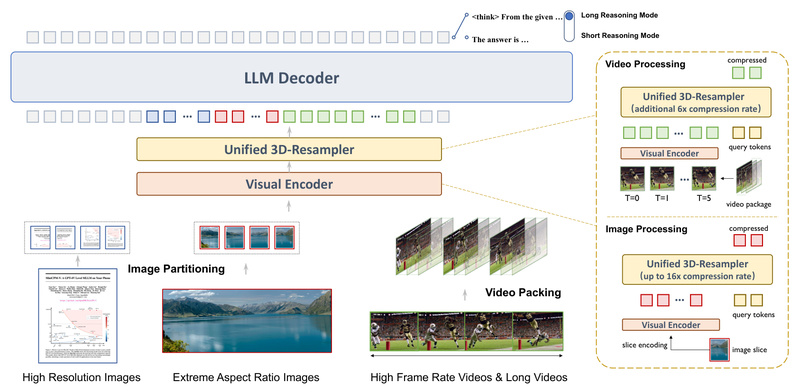

Handling Real-World Visual Inputs with Finesse

Unlike many MLLMs that struggle with practical visual data, MiniCPM-V 4.5 excels at tasks that matter:

- High-resolution document parsing: Processes images up to 1.8 million pixels (e.g., 1344×1344) with 4x fewer visual tokens than typical models

- Handwritten OCR and complex tables: Leads on OCRBench, outperforming GPT-4o-latest and Gemini 2.5

- Multi-image reasoning: Seamlessly compares or reasons across multiple images in a single query

- Long and high-FPS video understanding: Uses a novel 3D-Resampler that jointly compresses 6 video frames (448×448 each) into just 64 tokens, a 96x compression rate for video tokens. This enables analysis of long videos or high-frame-rate streams (up to 10 FPS) without inflating the context length

This makes MiniCPM-V 4.5 well suited to real applications such as scanning invoices, extracting data from medical forms, monitoring live video feeds, or comparing product designs across images.
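The pixel and token figures in the list above reduce to simple arithmetic. The sketch below reproduces them under a few stated assumptions:

- The vision encoder uses a 14-pixel patch size. This is the value that makes six 448×448 frames come out to exactly 96x.
- The 96x figure compares raw patch embeddings with output tokens.
- A hypothetical `video_tokens` helper packs frames into groups of 6 and pads the last group.

The model's actual frame sampling and frame caps may differ.

```python
# Token arithmetic behind the 3D-Resampler figures in the list above.
FRAME_SIDE = 448       # per-frame resolution for the 6-frame group
PATCH = 14             # assumption: vision-encoder patch size (reproduces the 96x figure)
FRAMES_PER_GROUP = 6   # frames compressed jointly by the 3D-Resampler
TOKENS_PER_GROUP = 64  # tokens produced per group


def compression_ratio() -> float:
    """Raw patch embeddings for one 6-frame group divided by the 64 output tokens."""
    patches_per_frame = (FRAME_SIDE // PATCH) ** 2      # 32 * 32 = 1024
    raw_patches = FRAMES_PER_GROUP * patches_per_frame  # 6 * 1024 = 6144
    return raw_patches / TOKENS_PER_GROUP               # 6144 / 64 = 96.0


def video_tokens(duration_s: float, fps: float) -> int:
    """Hypothetical token budget: frames packed into groups of 6, last group padded."""
    frames = int(duration_s * fps)
    groups = -(-frames // FRAMES_PER_GROUP)  # ceiling division
    return groups * TOKENS_PER_GROUP


print(1344 * 1344)           # 1806336 pixels, i.e. about 1.8 million
print(compression_ratio())   # 96.0
print(video_tokens(60, 10))  # one minute at 10 FPS: 600 frames -> 100 groups -> 6400
```

For comparison, assume a conventional pipeline that spends about 256 tokens per frame. It would need roughly 1,536 tokens for the same six frames, or 153,600 tokens for that minute of 10 FPS video.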

Controllable Reasoning: Fast for Daily Use, Deep for Complex Tasks

One of MiniCPM-V 4.5’s most practical innovations is its hybrid reasoning mode, which users can toggle at inference time:

- Fast mode: Optimized for quick, accurate responses to routine queries (e.g., “What’s in this photo?”)

- Deep mode: Activates extended reasoning chains for complex problems (e.g., “Explain the physics in this slow-motion video”)

This switchable design addresses a common pain point: most models force a trade-off between speed and depth. MiniCPM-V 4.5 offers both modes in one model, so developers can match its behavior to each request without deploying multiple models.
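In application code, the fast/deep toggle becomes a per-request decision. The Transformers example on the MiniCPM-V 4.5 model card passes a boolean `enable_thinking` to `model.chat`. Treat that name as an assumption and confirm it against the current model card.

The sketch below does not load the model. It shows one hypothetical routing policy: a keyword heuristic plus an explicit override, producing an options object that you would map onto your own inference stack. `ChatOptions`, `choose_options`, `DEEP_HINTS`, and the token budgets are all illustrative.

```python
from dataclasses import dataclass

# Hypothetical keyword heuristic for spotting requests that deserve deep reasoning.
DEEP_HINTS = ("why", "explain", "prove", "step by step", "calculate", "compare")


@dataclass
class ChatOptions:
    enable_thinking: bool  # assumption: name of the model card's fast/deep toggle
    max_new_tokens: int    # hypothetical budget; deep mode needs room for reasoning


def choose_options(question: str, force_deep: bool = False) -> ChatOptions:
    """Route routine questions to fast mode and analytical ones to deep mode."""
    q = question.lower()
    deep = force_deep or any(hint in q for hint in DEEP_HINTS)
    return ChatOptions(enable_thinking=deep, max_new_tokens=2048 if deep else 512)


print(choose_options("What's in this photo?"))
print(choose_options("Explain the physics in this slow-motion video"))
```

A keyword list is deliberately crude. The explicit `force_deep` override lets a UI expose a "think harder" button, so users are not relying on the heuristic alone.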

Easy Deployment Across Environments

MiniCPM-V 4.5 integrates smoothly into diverse workflows:

- On-device: Officially supported by llama.cpp and Ollama, with ready-to-use GGUF and int4 models

- Mobile: Runs on iPhone and iPad via an optimized iOS app

- Cloud and server: Deployable with vLLM or SGLang for high-throughput inference

- Fine-tuning: Compatible with LLaMA-Factory, Transformers, and SWIFT, enabling domain adaptation with LoRA or full training

This flexibility means you can prototype on your laptop, deploy to edge devices, and scale to enterprise servers—all with the same model family.
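For the server path, both vLLM and SGLang can expose an OpenAI-compatible `/v1/chat/completions` endpoint, so a client mostly needs to build the request body. The sketch below does only that. It inlines an image as a base64 data URL in an `image_url` content part. Two names are assumptions: the model id `openbmb/MiniCPM-V-4_5` depends on how the server was launched, and `build_chat_request` is a hypothetical helper.

```python
import base64
import json

MODEL_ID = "openbmb/MiniCPM-V-4_5"  # assumption: the model name the server was launched with


def build_chat_request(image_bytes: bytes, question: str, mime: str = "image/png") -> dict:
    """Build an OpenAI-style chat completions body with one inline image."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": data_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
        "max_tokens": 512,
        "temperature": 0.0,  # deterministic output suits extraction-style tasks
    }


body = build_chat_request(b"\x89PNG placeholder bytes", "Extract the invoice total.")
print(json.dumps(body)[:120])
```

Suppose you run a local vLLM server, started for example with `vllm serve openbmb/MiniCPM-V-4_5 --trust-remote-code` (the exact flags depend on your vLLM version). You would POST this body as JSON to `http://localhost:8000/v1/chat/completions`.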

Limitations to Consider

While MiniCPM-V 4.5 sets a new bar for efficient multimodal AI, it’s not without limits:

- Like many autoregressive models, it can repeat responses under certain input conditions

- In multi-turn video or image chats, context management requires careful prompt engineering

- The web demo may experience latency on international networks (local deployment is recommended for performance-critical use)

These are manageable with standard engineering practices—and far outweighed by the model’s capabilities and efficiency.
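Of the limitations above, multi-turn context management is the one most directly handled in application code. One generic technique, not something the model itself provides, works as follows:

- Keep the most recent turns intact.
- In older turns, replace each image with a short text placeholder, since images dominate the token budget.

The sketch below assumes a hypothetical message format of `{"role": ..., "content": [...]}`, where string parts are text and any other object is treated as an image. `trim_history` and `keep_turns` are illustrative names.

```python
from typing import Any

# Hypothetical message shape: {"role": str, "content": list of str (text) or image objects}
Message = dict[str, Any]


def trim_history(msgs: list[Message], keep_turns: int = 2) -> list[Message]:
    """Keep the last `keep_turns` user/assistant pairs intact; strip images from older turns.

    Older turns keep their text so the conversation stays coherent, but their
    images are replaced by a placeholder to save visual tokens. The input list
    is not modified.
    """
    cutoff = max(0, len(msgs) - 2 * keep_turns)
    trimmed: list[Message] = []
    for i, msg in enumerate(msgs):
        if i >= cutoff:
            trimmed.append(msg)  # recent turn: pass through unchanged
            continue
        parts = [p if isinstance(p, str) else "[earlier image omitted]" for p in msg["content"]]
        trimmed.append({"role": msg["role"], "content": parts})
    return trimmed
```

How many turns to keep, and whether older text should be summarized instead of kept verbatim, depends on the application. Validate either choice against your own conversations.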

Summary

MiniCPM-V 4.5 proves that small models can outperform much larger ones when architecture, data, and training are co-optimized for real-world tasks. By delivering GPT-4o-level vision intelligence in an 8B open-source package—with support for high-res images, long videos, OCR, and switchable reasoning—it empowers developers to build powerful, private, and cost-effective multimodal applications without compromise.

If you’ve been waiting for a truly deployable, high-performance MLLM that works on your hardware and respects your constraints, MiniCPM-V 4.5 is worth your serious consideration.