In today’s data-driven world, organizations often face a fundamental dilemma: they want to build powerful, generalizable AI models, but their data is locked in silos due to privacy regulations, legal restrictions, or competitive concerns. Centralizing data isn’t always an option—and sometimes it’s outright prohibited. This is where NVIDIA FLARE (Federated Learning Application Runtime Environment) steps in.

NVIDIA FLARE is an open-source, Python-based SDK that brings federated learning (FL) from simulation to real-world deployment. It allows data scientists, ML engineers, and platform developers to collaboratively train machine learning models across distributed data sources—without ever sharing or transferring the raw data itself. By keeping data local and only exchanging model updates, FLARE enables secure, compliant, and privacy-aware AI development.

Unlike monolithic frameworks, FLARE is lightweight, modular, and framework-agnostic. Whether you’re using PyTorch, TensorFlow, XGBoost, or even NumPy, you can adapt your existing workflows to a federated setting with minimal code changes. This flexibility, combined with built-in support for advanced privacy techniques and real-world deployment tools, makes FLARE a practical choice for cross-organizational collaboration in sensitive domains like healthcare, finance, and industrial AI.

Why Federated Learning Matters—And Why FLARE Delivers

Traditional machine learning requires aggregating datasets in one place—a non-starter when data resides across hospitals, banks, or autonomous vehicle fleets governed by strict data sovereignty laws. Federated learning solves this by letting models learn from decentralized data while preserving privacy.

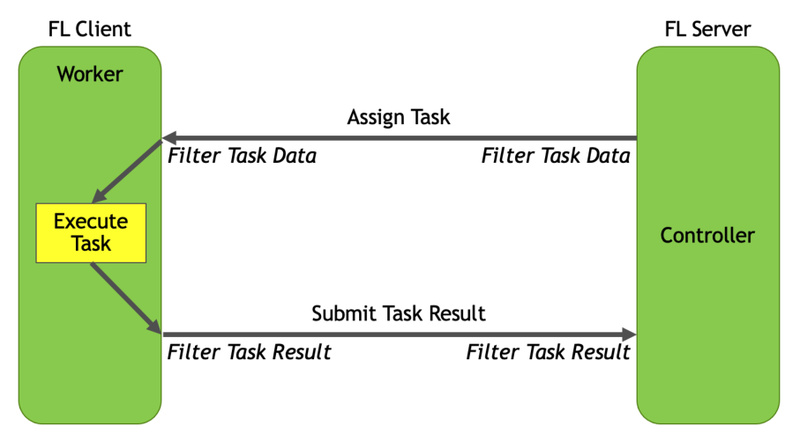

But implementing FL from scratch is complex: you need to manage communication protocols, orchestrate training rounds, enforce security policies, and integrate privacy-preserving mechanisms. NVIDIA FLARE removes this friction. It’s not just a research toy—it’s designed to bridge the gap between academic simulation and production-grade deployment.

Key Features That Solve Real Collaboration Challenges

Framework Flexibility Without Rewrites

FLARE doesn’t force you into a specific deep learning stack. Its plug-and-play architecture works with PyTorch, TensorFlow, scikit-learn, XGBoost, and more. If you already have a training pipeline, you can federate it using the FLARE Client API with only minor modifications, so there is no need to rebuild it from scratch.

Built-In Algorithms for Common and Advanced FL Scenarios

Out of the box, FLARE supports industry-standard federated algorithms like FedAvg, FedProx, FedOpt, SCAFFOLD, and Ditto. Whether you’re running standard horizontal FL (same features, different users) or more complex vertical FL (different features, same users), FLARE provides the tools to implement them correctly and efficiently.
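To make the core idea behind FedAvg concrete, here is a minimal NumPy sketch of the aggregation step: each site reports its locally trained weights along with the number of examples it trained on, and the server takes an example-weighted average. This is an illustration of the algorithm only, not FLARE's own implementation; the `fedavg` function and the site data are hypothetical.

```python
import numpy as np

def fedavg(client_updates):
    """Example-weighted average of client weights.

    client_updates: list of (weights_dict, num_examples) tuples, where
    every weights_dict maps the same layer names to NumPy arrays.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("no training examples reported")
    keys = client_updates[0][0].keys()
    # Each client's contribution is scaled by its share of the total data.
    return {
        k: sum(w[k] * (n / total) for w, n in client_updates)
        for k in keys
    }

# Hypothetical updates from two sites with different dataset sizes.
site_a = ({"w": np.array([1.0, 2.0]), "b": np.array([0.0])}, 100)
site_b = ({"w": np.array([3.0, 4.0]), "b": np.array([1.0])}, 300)

global_weights = fedavg([site_a, site_b])
print(global_weights)
```

Because site B holds three times as much data, the averaged weights land closer to site B's values. Only the weight arrays and example counts cross the network; the training records never leave either site.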

Privacy That Meets Regulatory Standards

Data privacy isn’t an afterthought—it’s core to FLARE’s design. The SDK integrates:

- Differential privacy (adding calibrated noise to model updates)

- Homomorphic encryption (computing on encrypted data)

- Private Set Intersection (PSI) (for secure record matching in vertical FL)

These features help organizations work toward compliance with GDPR, HIPAA, and other data protection frameworks while still enabling collaborative AI.
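As a rough illustration of what "adding calibrated noise to model updates" can look like, the sketch below clips an update to a maximum L2 norm and then adds Gaussian noise scaled to that norm. It is a generic Gaussian-mechanism example under assumed parameter names (`clip_norm`, `noise_multiplier`), not FLARE's privacy filter API, and it does not compute the resulting privacy budget, which would require a separate accounting step.

```python
import numpy as np

def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise."""
    norm = np.linalg.norm(update)
    # Scale down only if the update exceeds the clipping bound.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = update * scale
    # Noise standard deviation is proportional to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise

rng = np.random.default_rng(0)
raw_update = np.array([3.0, 4.0])  # L2 norm is 5.0

# With no noise, only the clipping is visible.
clipped_only = privatize_update(raw_update, clip_norm=1.0,
                                noise_multiplier=0.0, rng=rng)
# With noise enabled, the site would send this instead of the raw update.
noisy = privatize_update(raw_update, clip_norm=1.0,
                         noise_multiplier=1.1, rng=rng)
print(clipped_only, noisy)
```

Clipping bounds how much any single site's update can influence the global model, and the noise hides the rest. A larger `noise_multiplier` gives stronger privacy at the cost of slower or noisier convergence.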

From Prototype to Production with Confidence

FLARE includes a simulator mode for rapid prototyping, which is ideal for testing algorithms without spinning up real clients. Once validated, you can deploy the same workflow in a proof-of-concept (POC) or full production environment, whether on-premises or in the cloud. Built-in fault tolerance and system resiliency mechanisms let training continue even if some clients drop out, which is critical for real-world robustness.
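The dropout behavior described above can be pictured with a small, self-contained simulation. In this sketch, a round aggregates whatever updates arrive and only commits a new global model if a minimum number of sites responded. The `run_round` function, the `min_clients` threshold, and the simulated sites are all hypothetical; FLARE's actual controllers handle this inside the runtime.

```python
import numpy as np

def run_round(global_w, clients, min_clients):
    """Run one round; skip unreachable sites and require a quorum."""
    results = []
    for name, train_fn in clients.items():
        try:
            results.append(train_fn(global_w))
        except ConnectionError:
            continue  # treat the site as dropped for this round
    if len(results) < min_clients:
        # Not enough responses: keep the previous global model.
        return global_w, len(results)
    return np.mean(results, axis=0), len(results)

def healthy(w):
    return w + 1.0  # stand-in for local training

def offline(w):
    raise ConnectionError("site unreachable")

w0 = np.zeros(2)

# Two of three sites respond, so the round completes.
w1, n_ok = run_round(
    w0, {"site-1": healthy, "site-2": offline, "site-3": healthy},
    min_clients=2,
)

# Nobody responds, so the global model is left unchanged.
w_same, n_few = run_round(w0, {"site-2": offline}, min_clients=2)
print(w1, n_ok, w_same, n_few)
```

The quorum check is the key design choice: it trades a stalled round for protection against a global model that reflects only one or two sites.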

End-to-End Workflow Management

Beyond training, FLARE supports the entire federated lifecycle:

- Federated data statistics (e.g., compute global dataset summaries without centralizing data)

- Cross-site validation (evaluate model performance across all participants)

- A web dashboard for monitoring jobs, managing participants, and tracking progress

This holistic approach ensures FL isn’t just about training—it’s about governance, validation, and operational control.
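Federated data statistics rest on a simple observation: many global summaries can be rebuilt from small per-site aggregates. The sketch below shows the idea for mean and variance, assuming each site shares only its count, sum, and sum of squares. The function names and data are hypothetical and do not reflect FLARE's statistics API.

```python
import numpy as np

def local_summary(values):
    """Computed at each site; only these three numbers are shared."""
    v = np.asarray(values, dtype=float)
    return {"count": v.size, "sum": v.sum(), "sum_sq": (v ** 2).sum()}

def global_stats(summaries):
    """Computed at the server from the per-site summaries alone."""
    n = sum(s["count"] for s in summaries)
    total = sum(s["sum"] for s in summaries)
    total_sq = sum(s["sum_sq"] for s in summaries)
    mean = total / n
    var = total_sq / n - mean ** 2  # population variance
    return {"count": n, "mean": mean, "var": var}

# Hypothetical feature values held at two sites.
site_a = [1.0, 2.0, 3.0]
site_b = [4.0, 5.0]

stats = global_stats([local_summary(site_a), local_summary(site_b)])
print(stats)
```

The result matches what you would get by pooling all five values, yet no individual record is transmitted. For very small sites, even aggregates like these can leak information, so a real deployment may also enforce minimum counts or add noise.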

Ideal Use Cases: Where FLARE Truly Shines

NVIDIA FLARE excels in multi-party settings where data cannot, or must not, be centralized:

- Healthcare research: Hospitals collaborating on a joint model for predicting disease outcomes (e.g., the published COVID-19 analysis use case) without sharing patient records.

- Cross-enterprise analytics: Financial institutions jointly detecting fraud patterns while keeping customer transaction data private.

- Industrial IoT: Manufacturers pooling sensor data from different factories to improve predictive maintenance models, respecting intellectual property boundaries.

If your project involves regulated data, competitive sensitivity, or logistical barriers to data sharing, FLARE provides a viable path forward.

Getting Started Is Surprisingly Simple

Installation is a one-liner:

pip install nvflare

New users can:

- Run the tutorials and take the NVIDIA Deep Learning Institute (DLI) courses

- Use POC mode to simulate a multi-client FL environment on a single machine

- Gradually transition to real distributed deployments using the same codebase

The documentation includes curated learning paths, making it accessible even to teams new to federated learning—provided they have foundational ML knowledge.

Limitations and Practical Considerations

While powerful, FLARE is not a magic “push-button” solution. A few realities to keep in mind:

- Basic federated learning understanding is required. FLARE simplifies implementation but doesn’t eliminate the need to grasp FL concepts like client heterogeneity, communication overhead, and convergence behavior.

- Coordination among participants is essential. Real-world deployment requires aligned infrastructure, network access, and governance agreements—not just software.

- Advanced privacy features carry computational costs. Homomorphic encryption, for instance, can significantly slow down training. Teams should benchmark trade-offs between privacy strength and performance.

These aren’t flaws—they’re inherent to the federated paradigm. FLARE’s strength lies in making these trade-offs explicit, manageable, and customizable.

Summary

NVIDIA FLARE is more than a federated learning library—it’s a complete runtime environment that turns theoretical FL into deployable, secure, and scalable solutions. By supporting popular ML frameworks, offering built-in privacy tools, and enabling seamless transitions from simulation to production, it directly addresses the core pain points of modern AI teams: data silos, regulatory compliance, and the need for collaborative yet private model development.

For data scientists and platform engineers working in regulated or multi-stakeholder environments, FLARE is more than a convenience: it is often one of the few practical ways to build AI that respects data boundaries while still delivering real-world impact.