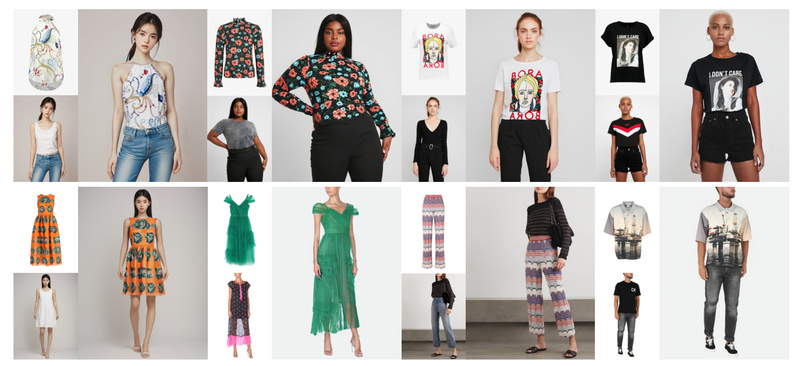

OOTDiffusion represents a significant leap forward in image-based virtual try-on (VTON) technology. Built on the foundation of pretrained latent diffusion models, it delivers photorealistic and highly controllable garment transfer results—without relying on the traditional and often error-prone garment warping step that plagues many existing VTON systems. Whether you’re building an e-commerce fitting room, a digital styling assistant, or an AI-powered fashion design tool, OOTDiffusion offers a streamlined, robust solution that works with arbitrary human and clothing images out of the box.

Why OOTDiffusion Stands Out

No Garment Warping, No Alignment Guesswork

Traditional VTON pipelines typically involve a warping module that attempts to deform a clothing item to match the target human pose. This step is not only computationally expensive but also prone to geometric distortions and texture artifacts—especially under complex poses or diverse body shapes. OOTDiffusion eliminates this bottleneck entirely. Instead, it fuses garment features directly into the self-attention layers of a denoising UNet through a novel mechanism called outfitting fusion. This ensures precise, context-aware alignment between the garment and the human body during the diffusion process itself.

Controllability via Outfitting Dropout

One of OOTDiffusion’s most compelling innovations is its built-in controllability. By introducing outfitting dropout during training, the model learns to generate try-on results with variable garment influence. At inference time, users can fine-tune the strength of the garment appearance using classifier-free guidance (CFG)—adjusting a single --scale parameter (e.g., --scale 2.0) to balance realism and garment fidelity. This level of control is rare in VTON systems and invaluable for product teams needing consistent, tunable outputs.

Leverages Pretrained Diffusion Priors

OOTDiffusion doesn’t train a diffusion model from scratch. Instead, it adapts powerful, general-purpose latent diffusion architectures—already pretrained on massive image datasets—to the specialized task of virtual try-on. This transfer learning strategy not only accelerates convergence but also inherits rich semantic and textural priors, resulting in outputs with remarkable detail, lighting consistency, and natural fabric draping.

Ideal Use Cases

OOTDiffusion excels in scenarios where realism, flexibility, and ease of integration matter:

- E-commerce virtual fitting rooms: Enable shoppers to visualize how any garment looks on their own uploaded photo—or on diverse model avatars—without requiring 3D scans or complex pose normalization.

- Fashion tech prototyping: Rapidly iterate on garment designs by overlaying digital fabrics onto real human images across a range of body types and poses.

- Content creation pipelines: Generate high-quality try-on visuals for marketing campaigns, social media previews, or AI-assisted styling apps using only 2D inputs.

Critically, OOTDiffusion supports both half-body (via VITON-HD) and full-body (via Dress Code) garments, making it suitable for tops, bottoms, and dresses alike—provided the correct garment category is specified (0 for upperbody, 1 for lowerbody, 2 for dress).

Problems It Solves

Product and engineering teams working on VTON often face recurring challenges:

- Misaligned garments: Warping-based methods frequently fail when poses are extreme or body proportions vary.

- Unnatural textures: Seams, folds, and fabric patterns often appear distorted or inconsistent with lighting.

- Rigid preprocessing: Many systems require tightly cropped inputs, canonical poses, or manual segmentation.

OOTDiffusion directly addresses these pain points:

- Its outfitting fusion mechanism aligns garments semantically, not just geometrically.

- Outputs preserve fine-grained fabric details and lighting coherence thanks to diffusion priors.

- The pipeline only requires a full-body human image and a standalone garment image—no pose normalization or manual alignment needed.

Getting Started

Setting up OOTDiffusion is straightforward for teams already familiar with Python and PyTorch:

- Clone the repository:

git clone https://github.com/levihsu/OOTDiffusion

- Create a Python 3.10 environment and install dependencies:

conda create -n ootd python=3.10 conda activate ootd pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pip install -r requirements.txt

- Download required checkpoints from Hugging Face—including

ootd,humanparsing,openpose, and theclip-vit-large-patch14model—into thecheckpoints/directory. - Run inference:

- For half-body try-on:

python run_ootd.py --model_path <human-image> --cloth_path <garment-image> --scale 2.0 --sample 4

- For full-body (specify category):

python run_ootd.py --model_path <human-image> --cloth_path <garment-image> --model_type dc --category 2 --scale 2.0 --sample 4

- For half-body try-on:

The codebase includes ONNX support for human parsing and a Gradio demo, easing deployment and testing. Note that official testing has been limited to Ubuntu 22.04, and Windows or macOS support is not guaranteed.

Limitations and Considerations

While OOTDiffusion delivers state-of-the-art results, adopters should be aware of several constraints:

- Training code is not yet released, limiting fine-tuning or domain adaptation.

- Linux-only validation: The system is confirmed to work on Ubuntu 22.04; compatibility with other OSes is unverified.

- Garment category must be specified for full-body mode—automatic detection is not included.

- Reliance on external models (CLIP, OpenPose, human parsing) means additional checkpoint management and disk space (~several GBs).

These factors may affect integration timelines in production systems but are manageable for research, prototyping, or controlled commercial deployments.

Summary

OOTDiffusion redefines virtual try-on by replacing fragile warping modules with intelligent, diffusion-based feature fusion. It offers unmatched realism, built-in controllability, and compatibility with arbitrary inputs—making it a compelling choice for any project requiring high-quality, scalable garment visualization. For teams prioritizing visual fidelity and engineering simplicity, OOTDiffusion is a powerful addition to the modern computer vision toolkit.