Spatio-temporal predictive learning aims to forecast future states—like video frames, weather maps, or traffic patterns—based solely on past observations, typically in an unsupervised manner. While this field has seen rapid innovation, comparing methods has remained challenging due to fragmented codebases, inconsistent evaluation protocols, and varying data preprocessing pipelines. Enter OpenSTL: a unified, open-source benchmark that standardizes the development, training, and evaluation of spatio-temporal forecasting models across diverse real-world and synthetic domains.

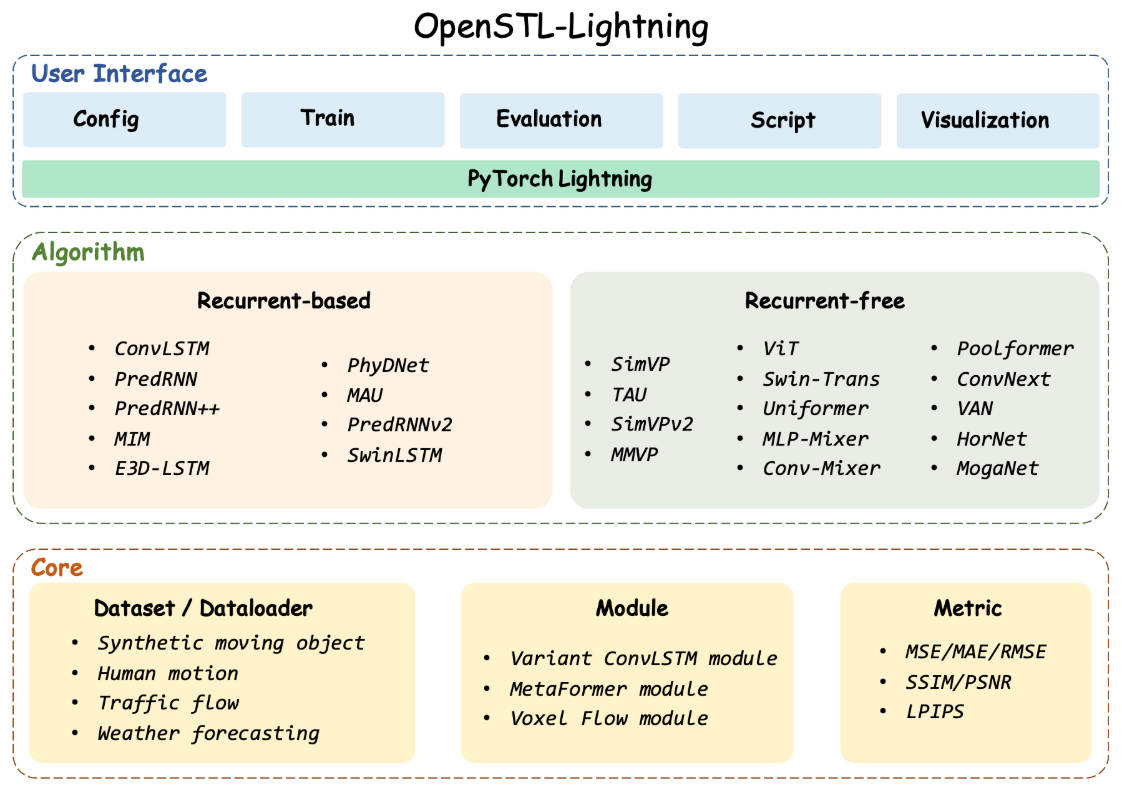

Built with reproducibility and extensibility in mind, OpenSTL categorizes approaches into two main families—recurrent-based (e.g., ConvLSTM, PredRNN) and recurrent-free (e.g., SimVP)—and demonstrates that the latter often strikes a superior balance between speed and accuracy. By offering a clean, modular architecture and support for dozens of state-of-the-art methods and datasets, OpenSTL empowers engineers and researchers to prototype, validate, and deploy predictive models with confidence.

Why Standardization Matters in Spatio-Temporal Learning

Before OpenSTL, evaluating whether Model A truly outperforms Model B required significant effort: reimplementing both from scratch, aligning data formats, and tuning hyperparameters under identical conditions. This lack of standardization led to unreliable comparisons and slowed progress.

OpenSTL solves this by providing:

- Consistent data loaders across 10+ datasets

- Unified training and evaluation APIs

- Reference implementations of 15+ established methods

- Standardized metrics (e.g., MSE, SSIM, LPIPS)

This eliminates “apples-to-oranges” comparisons and allows fair, reproducible benchmarking—critical for both academic research and industrial deployment.
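To make the idea of shared metrics concrete, here is a minimal sketch in plain Python. It assumes predictions and targets have been flattened into lists of pixel values. The `METRICS` registry and the `evaluate` helper are hypothetical names used for illustration, not OpenSTL's API. MAE is included only as a second simple metric; SSIM and LPIPS need image libraries and are left out.

```python
from typing import Callable, Dict, Sequence

Metric = Callable[[Sequence[float], Sequence[float]], float]


def _check_lengths(pred: Sequence[float], true: Sequence[float]) -> None:
    # Both sequences must describe the same number of pixels.
    if len(pred) != len(true) or len(true) == 0:
        raise ValueError("pred and true must be non-empty and equally long")


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean squared error over all elements."""
    _check_lengths(pred, true)
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error over all elements."""
    _check_lengths(pred, true)
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


# Hypothetical registry: every model is scored by the same functions.
METRICS: Dict[str, Metric] = {"mse": mse, "mae": mae}


def evaluate(
    predictions: Dict[str, Sequence[float]],
    target: Sequence[float],
    metric_names: Sequence[str] = ("mse", "mae"),
) -> Dict[str, Dict[str, float]]:
    """Score each model's prediction against one shared target."""
    return {
        model: {name: METRICS[name](pred, target) for name in metric_names}
        for model, pred in predictions.items()
    }
```

Because every model passes through the same `evaluate` call with the same target, differences in the scores reflect the models rather than differences in how each metric was implemented.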

Modular Architecture for Rapid Experimentation

OpenSTL’s codebase is structured into three intuitive layers:

- Core Layer: Handles training loops, logging, and metrics via PyTorch Lightning (recommended) or native PyTorch.

- Algorithm Layer: Separates methods (training logic) from models (network architectures) and modules (reusable building blocks like attention layers).

- User Interface Layer: Offers simple command-line tools (tools/train.py, tools/test.sh) for launching experiments with minimal configuration.

This separation enables users to:

- Swap backbone architectures (e.g., replace gSTA with Swin Transformer in SimVP)

- Plug in custom training strategies (e.g., curriculum learning, multi-step forecasting)

- Add new datasets without modifying core logic
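One way to picture the split between methods and models is a small registry pattern. The sketch below is purely illustrative: `MODEL_REGISTRY`, `register_model`, and `ForecastMethod` are hypothetical names, and the "models" are toy functions rather than neural networks. It shows only that the rollout logic can stay fixed while the model underneath is swapped by name.

```python
from typing import Callable, Dict, List

Frame = List[float]
Model = Callable[[List[Frame]], Frame]  # maps past frames to one future frame

# Hypothetical registry of interchangeable models, keyed by name.
MODEL_REGISTRY: Dict[str, Model] = {}


def register_model(name: str) -> Callable[[Model], Model]:
    """Decorator that stores a model function under the given name."""
    def decorator(fn: Model) -> Model:
        MODEL_REGISTRY[name] = fn
        return fn
    return decorator


@register_model("last_frame")
def last_frame(past: List[Frame]) -> Frame:
    # Toy model: repeat the most recent frame.
    return list(past[-1])


@register_model("mean_frame")
def mean_frame(past: List[Frame]) -> Frame:
    # Toy model: average the input frames element by element.
    n = len(past)
    return [sum(values) / n for values in zip(*past)]


class ForecastMethod:
    """Multi-step rollout logic that does not depend on which model is used."""

    def __init__(self, model_name: str) -> None:
        self.model = MODEL_REGISTRY[model_name]

    def rollout(self, past: List[Frame], steps: int) -> List[Frame]:
        history = [list(frame) for frame in past]
        outputs: List[Frame] = []
        for _ in range(steps):
            nxt = self.model(history)
            outputs.append(nxt)
            # Slide the window: drop the oldest frame, append the prediction.
            history = history[1:] + [nxt]
        return outputs
```

Switching from `ForecastMethod("last_frame")` to `ForecastMethod("mean_frame")` changes the model without touching the rollout code, which is the kind of swap the layered design is meant to make cheap.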

For example, training SimVP with a gSTA backbone on Moving MNIST requires just two commands:

bash tools/prepare_data/download_mmnist.sh

python tools/train.py -d mmnist --lr 1e-3 -c configs/mmnist/simvp/SimVP_gSTA.py --ex_name mmnist_simvp_gsta
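When launching many runs, it can help to assemble the same commands programmatically. The sketch below only builds argument lists from the flags shown in the commands above and does not execute anything. `build_train_command` is a hypothetical helper, not part of OpenSTL.

```python
import shlex
from typing import List


def build_train_command(dataset: str, config: str, ex_name: str, lr: str) -> List[str]:
    """Return the training command as an argument list (hypothetical helper).

    The learning rate is kept as a string so it is passed through unchanged.
    """
    return [
        "python", "tools/train.py",
        "-d", dataset,
        "--lr", lr,
        "-c", config,
        "--ex_name", ex_name,
    ]


# Values copied from the Moving MNIST example in the text.
download_cmd = ["bash", "tools/prepare_data/download_mmnist.sh"]
train_cmd = build_train_command(
    dataset="mmnist",
    config="configs/mmnist/simvp/SimVP_gSTA.py",
    ex_name="mmnist_simvp_gsta",
    lr="1e-3",
)

# Print shell-ready strings for inspection.
print(shlex.join(download_cmd))
print(shlex.join(train_cmd))
```

To actually run a command, each list could be passed to `subprocess.run(cmd, check=True)` from the repository root. Keeping the arguments as a list avoids shell-quoting mistakes when experiment names or paths contain unusual characters.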

Supported Methods: From Classic RNNs to Modern MetaFormers

OpenSTL implements a comprehensive suite of spatio-temporal models, grouped by architectural philosophy:

Recurrent-Based Models

- ConvLSTM (NeurIPS 2015)

- PredRNN / PredRNN++ / PredRNN.V2

- E3D-LSTM (ICLR 2019)

- MIM (CVPR 2019), MAU (NeurIPS 2021)

Recurrent-Free Models

- SimVP (CVPR 2022) and SimVP.V2 — simpler, faster, and often more accurate

- TAU (CVPR 2023), MMVP (ICCV 2023), WaST (AAAI 2024)

Crucially, OpenSTL extends SimVP with 12+ MetaFormer backbones, including:

- Vision Transformer (ViT), Swin Transformer

- ConvNeXt, PoolFormer, UniFormer

- MLP-Mixer, ConvMixer, VAN, HorNet, MogaNet

This makes it easy to test whether a lightweight CNN or a powerful vision transformer works best for your specific forecasting task.
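The MetaFormer idea behind these backbones is that a block keeps a fixed skeleton (token mixing with a residual connection, then a per-token channel step with a residual connection) while the token mixer itself is interchangeable. The toy sketch below illustrates that skeleton on a 1D list of scalar tokens. It is not OpenSTL code: normalization is omitted, the channel MLP is replaced by a simple scaling, and all function names are hypothetical.

```python
from typing import Callable, List

Tokens = List[float]
TokenMixer = Callable[[Tokens], Tokens]


def identity_mixer(x: Tokens) -> Tokens:
    # No interaction between tokens at all.
    return list(x)


def pooling_mixer(x: Tokens) -> Tokens:
    """Average each token with its immediate neighbours, then subtract the input.

    This mirrors the pooling-minus-identity mixer used by PoolFormer,
    reduced here to one dimension with a window of at most three tokens.
    """
    out = []
    for i in range(len(x)):
        window = x[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window) - x[i])
    return out


def channel_step(x: Tokens) -> Tokens:
    # Stand-in for the per-token channel MLP: a fixed scaling.
    return [0.5 * v for v in x]


def metaformer_block(x: Tokens, mixer: TokenMixer) -> Tokens:
    """Fixed skeleton: residual token mixing, then a residual channel step."""
    mixed = [a + b for a, b in zip(x, mixer(x))]
    return [a + b for a, b in zip(mixed, channel_step(mixed))]
```

Calling `metaformer_block(tokens, pooling_mixer)` versus `metaformer_block(tokens, identity_mixer)` changes only the mixing function, which is the same kind of substitution that separates one backbone family from another.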

Real-World Applications Covered

OpenSTL isn’t limited to toy datasets. It supports benchmarks across five key domains:

1. Synthetic Motion

- Moving MNIST, Moving FMNIST: ideal for debugging and rapid iteration

2. Human Motion Forecasting

- Human3.6M, KTH Action: predict future frames of human poses and actions

3. Autonomous Driving & Robotics

- BAIR Robot Pushing, KittiCaltech Pedestrian: forecast object trajectories in dynamic scenes

4. Urban Traffic Prediction

- TaxiBJ: model crowd inflow and outflow across Beijing, derived from taxi GPS trajectories; useful for smart city planning

5. Weather Forecasting

- WeatherBench: predict temperature, humidity, wind, and cloud cover from historical climate reanalysis data

Each domain includes dataset download scripts and pre-configured training setups, which shortens onboarding.

Getting Started with Custom Data

Beyond built-in datasets, OpenSTL provides a detailed tutorial.ipynb in the examples/ directory showing how to:

- Format your video or spatio-temporal tensor data

- Create a custom dataset class

- Train and evaluate on your own sequences

- Visualize predictions side-by-side with ground truth
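As a rough illustration of the custom dataset step, the sketch below slices one long frame sequence into (input, target) pairs with a sliding window. It is written in plain Python and makes several assumptions: the class name `SlidingWindowDataset` and its parameters are hypothetical, frames are nested lists rather than tensors, and a real implementation would more likely subclass `torch.utils.data.Dataset` and follow the conventions shown in the tutorial notebook.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]  # one H x W grid


class SlidingWindowDataset:
    """Yield (past frames, future frames) pairs from one long sequence."""

    def __init__(self, frames: Sequence[Frame], n_input: int, n_output: int) -> None:
        if n_input < 1 or n_output < 1:
            raise ValueError("n_input and n_output must be positive")
        if len(frames) < n_input + n_output:
            raise ValueError("sequence is shorter than one input/output window")
        self.frames = list(frames)
        self.n_input = n_input
        self.n_output = n_output

    def __len__(self) -> int:
        # Number of window start positions that fit in the sequence.
        return len(self.frames) - (self.n_input + self.n_output) + 1

    def __getitem__(self, idx: int) -> Tuple[List[Frame], List[Frame]]:
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        split = idx + self.n_input
        past = self.frames[idx:split]
        future = self.frames[split:split + self.n_output]
        return past, future
```

With six frames, `n_input=3`, and `n_output=2`, the dataset exposes two samples: frames 0 to 2 predicting frames 3 to 4, and frames 1 to 3 predicting frames 4 to 5.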

This lowers the barrier for applied teams working with proprietary sensor data, medical imaging sequences, or financial time-series grids.

Limitations and Practical Considerations

While powerful, OpenSTL has a few constraints to consider:

- Python ≤ 3.10.8: Newer Python versions (e.g., 3.11+) are not supported due to dependency conflicts (e.g., xarray==0.19.0).

- Dependency Complexity: Installing via environment.yml is strongly recommended to avoid version mismatches.

- Partial Benchmark Coverage: Datasets like BAIR and Kinetics-400 are included for visualization but lack full benchmark results as of v1.0.0.

Users should verify hardware compatibility (GPU memory requirements vary by model size) and check the documentation for dataset-specific preprocessing steps.
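The Python version ceiling mentioned above can be checked before installing anything. This is a small sketch using only the standard library. `is_supported_python` and `MAX_SUPPORTED` are hypothetical names, and since the text states no minimum version, only the upper bound is tested.

```python
import sys
from typing import Optional, Tuple

# Upper bound taken from the constraint stated in the text (Python <= 3.10.8).
MAX_SUPPORTED: Tuple[int, int, int] = (3, 10, 8)


def is_supported_python(version: Optional[Tuple[int, int, int]] = None) -> bool:
    """Return True if the (major, minor, micro) version is within the bound.

    Defaults to the interpreter running this script.
    """
    if version is None:
        version = (sys.version_info[0], sys.version_info[1], sys.version_info[2])
    return tuple(version) <= MAX_SUPPORTED


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if is_supported_python():
        print(f"Python {current} is within the stated bound.")
    else:
        print(f"Python {current} is newer than the stated bound; consider a dedicated environment.")
```

Running the check inside the environment created from environment.yml is a quick way to confirm that the interpreter matches what the dependencies expect.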

Summary

OpenSTL addresses a critical pain point in spatio-temporal forecasting: the absence of a standardized, reproducible benchmark. By unifying data, models, and evaluation under a single, modular framework, it enables faster prototyping, fairer comparisons, and more reliable technology selection. Whether you’re predicting pedestrian paths for autonomous vehicles, modeling storm systems, or optimizing city traffic, OpenSTL reduces experimentation risk and accelerates progress. With support for both legacy recurrent models and modern recurrent-free architectures—and seamless integration of MetaFormer backbones—it’s a smart foundation for your next predictive learning project.