Modern software development faces persistent bottlenecks: slow iteration cycles, coordination overhead in large teams, opaque AI-assisted coding workflows, and limited…

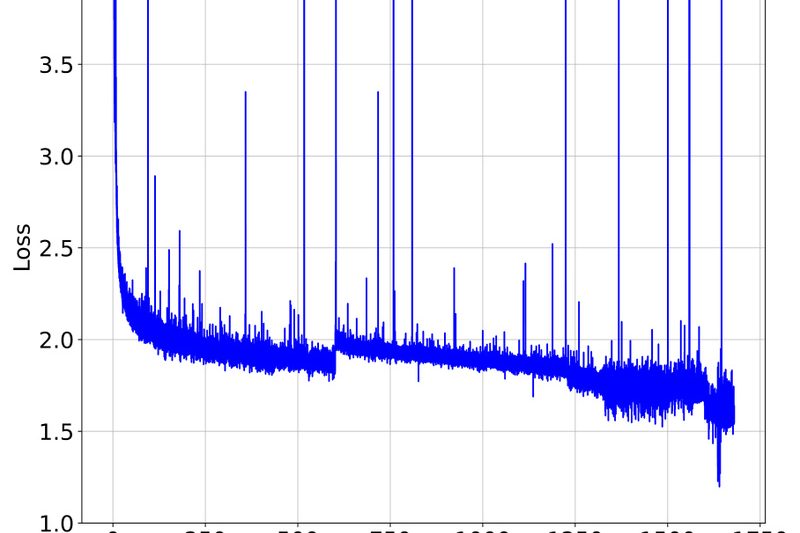

YuLan: A Transparent, Bilingual Open-Source LLM Built from Scratch for Reproducible AI Research

YuLan is an open-source large language model (LLM) series developed by the Gaoling School of Artificial Intelligence (GSAI) at Renmin…

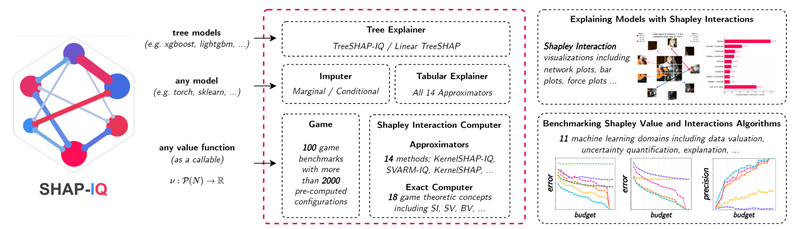

shapiq: Go Beyond Feature Importance with Shapley Interactions for Model Explainability

In the world of explainable AI, understanding which features matter is only half the story. What if two seemingly unimportant…

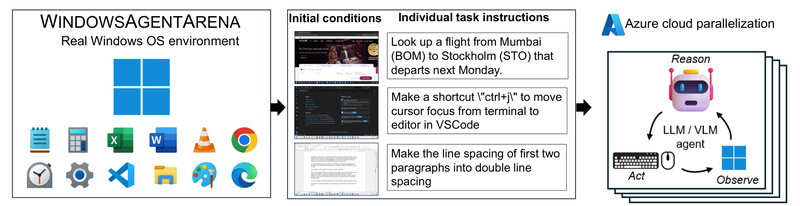

Windows Agent Arena: Benchmark Multimodal AI Agents in Real Windows Environments at Scale

Evaluating AI agents that interact with desktop operating systems has long been hampered by artificial or limited test environments. Most…

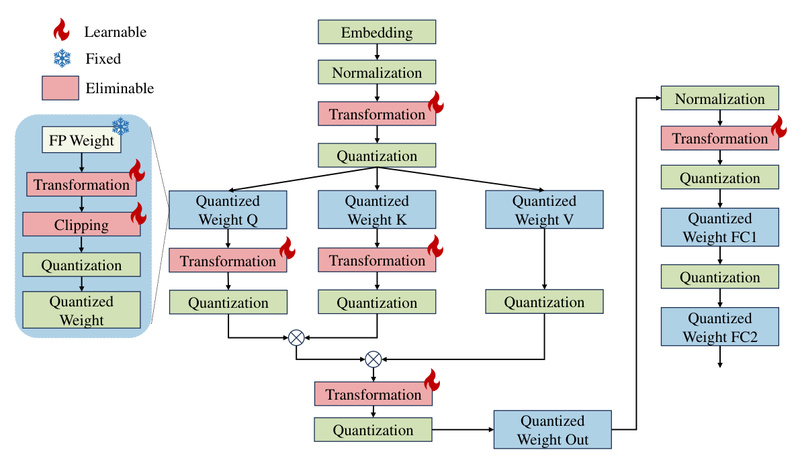

OmniQuant: Near-Lossless LLM Quantization for Real-World Deployment on GPUs and Mobile Devices

Deploying large language models (LLMs) in real-world applications remains a major engineering challenge. While models like LLaMA-2, Falcon, and Mixtral…

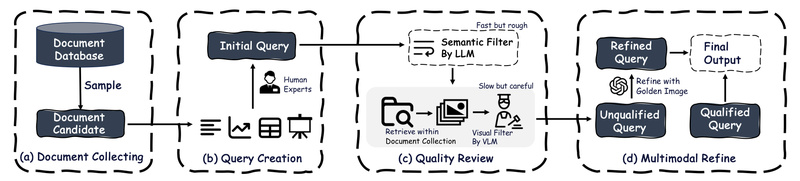

ViDoRAG: Multi-Agent RAG for Visually Rich Documents with Dynamic Reasoning and Hybrid Retrieval

Traditional Retrieval-Augmented Generation (RAG) systems excel at answering questions using text-based documents—but they often stumble when faced with visually rich…

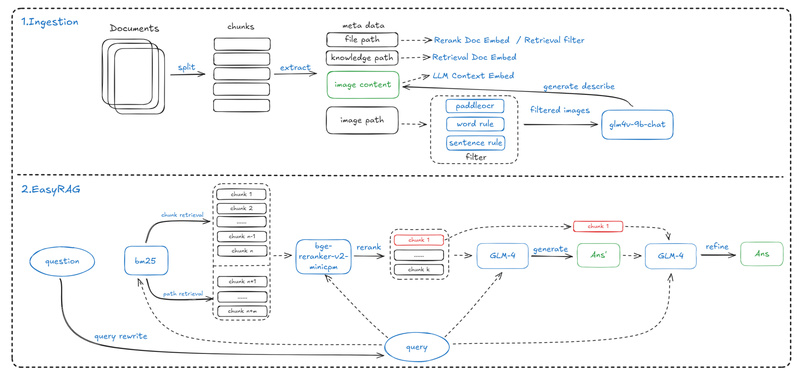

EasyRAG: A Lightweight, High-Accuracy RAG Framework for Resource-Constrained Network Operations and Enterprise QA

In today’s fast-paced IT and enterprise environments, teams increasingly rely on retrieval-augmented generation (RAG) systems to provide accurate, context-aware answers…

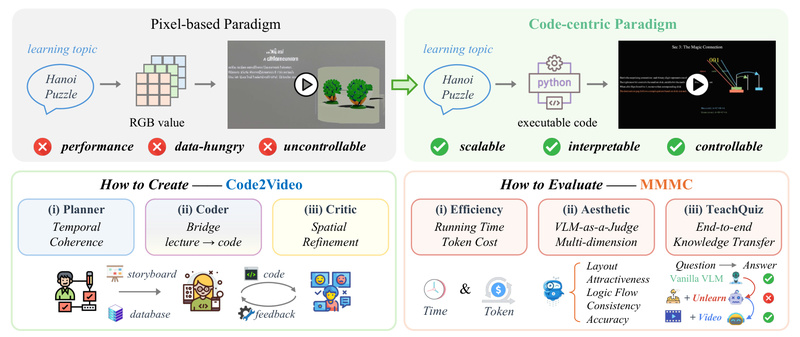

Code2Video: Generate Accurate, Structured Educational Videos Using Executable Code

Traditional AI-powered video generators—especially those based on diffusion or pixel-level synthesis—struggle when it comes to creating high-quality educational content. While…

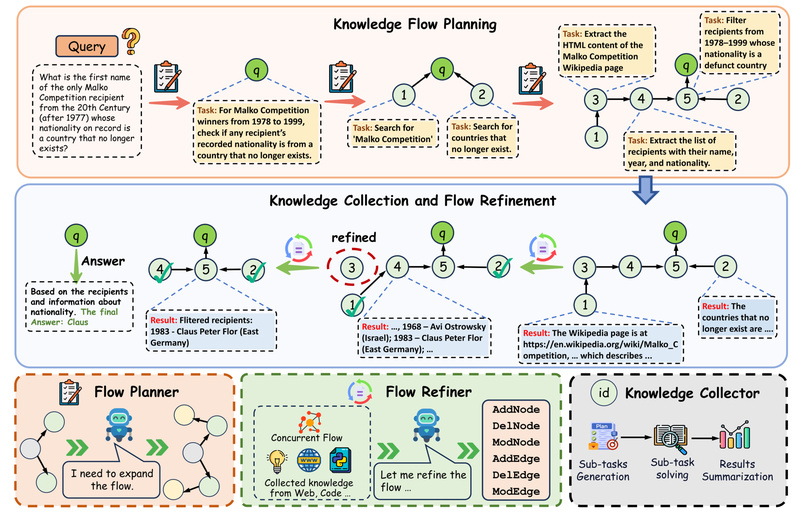

FlowSearch: Dynamic Knowledge Flows for Multi-Agent Deep Research Automation

Deep research—whether in scientific discovery, engineering design, or AI innovation—is rarely linear. It demands navigating complex dependencies, synthesizing cross-disciplinary insights,…

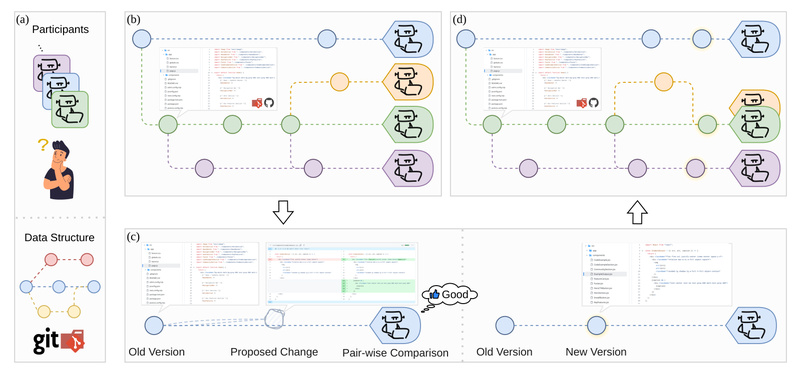

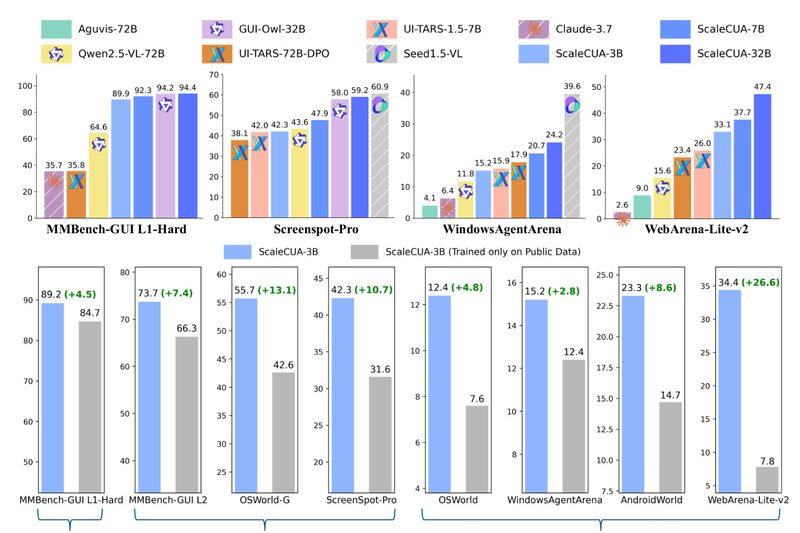

ScaleCUA: Cross-Platform GUI Automation Powered by Large-Scale Open Data

Building reliable computer use agents (CUAs)—systems that can autonomously interact with graphical user interfaces (GUIs)—has long been hindered by a…