For teams building real-world AI applications that combine vision and language—whether it’s parsing scanned documents, analyzing instructional videos, or creating…

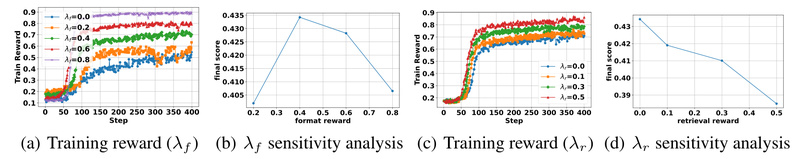

Search-R1: Train LLMs to Reason and Search Like Human Researchers Using Open-Source Reinforcement Learning

In the rapidly evolving landscape of large language models (LLMs), a critical limitation persists: despite their impressive fluency, LLMs often…

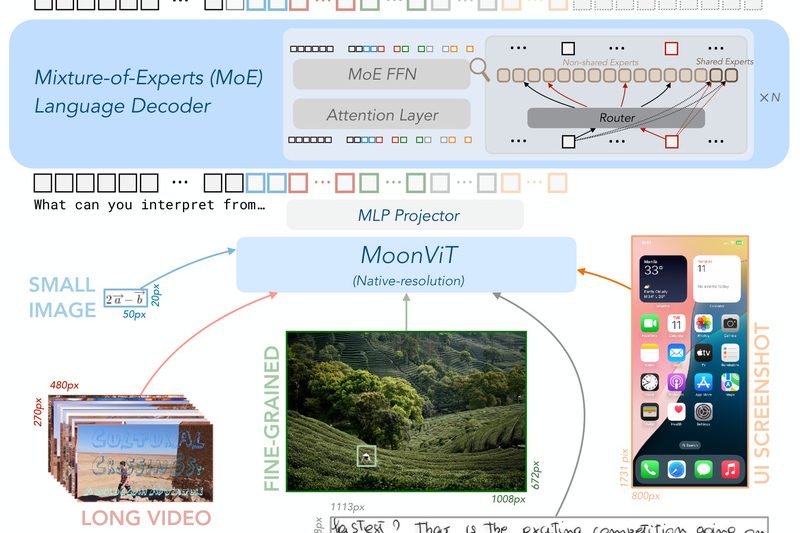

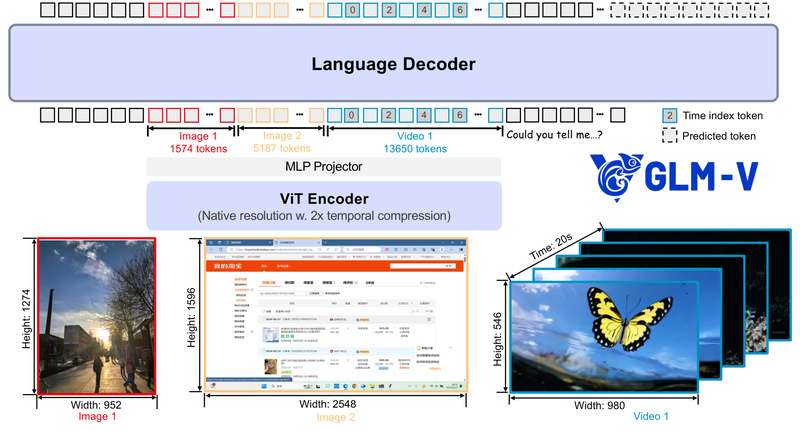

GLM-V: Open-Source Vision-Language Models for Real-World Multimodal Reasoning, GUI Agents, and Long-Context Document Understanding

If your team is building AI applications that need to see, reason, and act—like desktop assistants that interpret screenshots, UI…

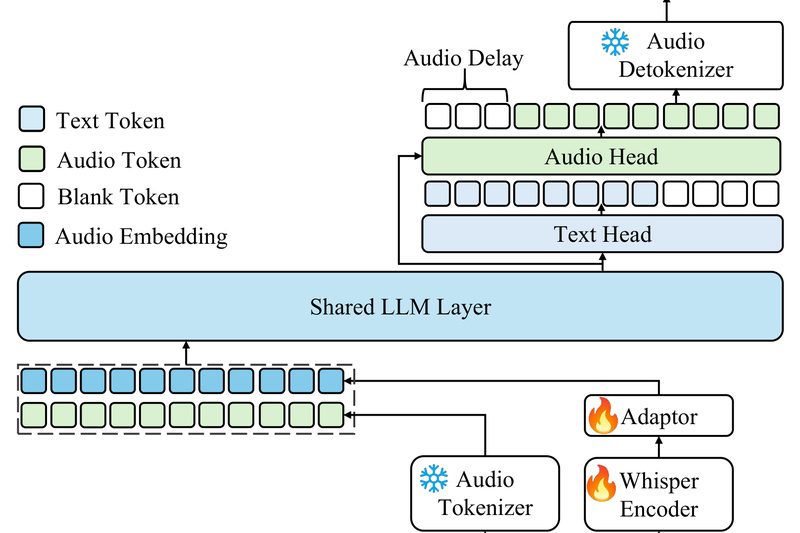

Kimi-Audio: A Unified, Open-Source Foundation Model for Speech, Sound, and Spoken Dialogue

Building voice-enabled applications today often means stitching together separate models for speech recognition, sound classification, audio captioning, and spoken response…

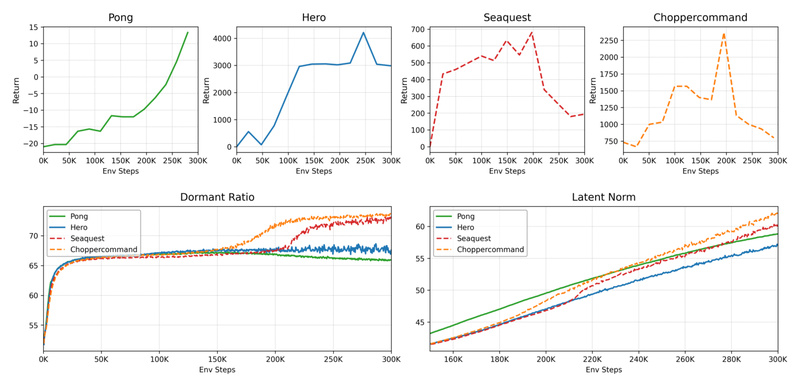

LightZero: One Lightweight Framework for MCTS + Deep Reinforcement Learning Across Games, Control, and Multi-Task Planning

If you’re evaluating tools for building intelligent agents that combine planning and learning—whether for games, robotics, scientific discovery, or general…

Step-Audio 2: Open-Source Multimodal LLM for Paralinguistic-Aware, Tool-Enhanced Speech Understanding and Conversation

Step-Audio 2 is an open-source, end-to-end multimodal large language model (MLLM) purpose-built for real-world audio understanding and natural speech conversation.…

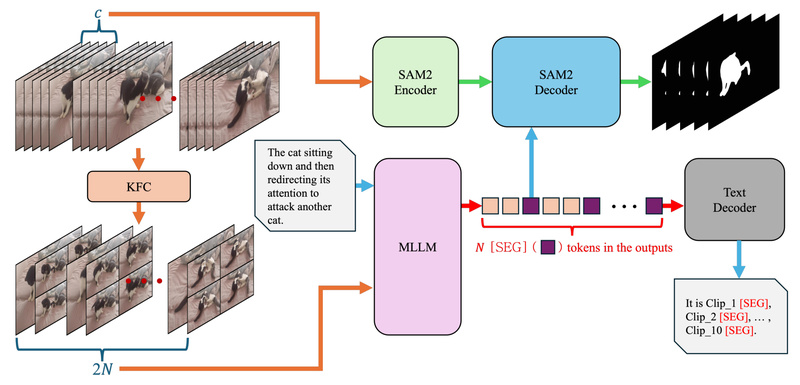

Sa2VA: Unified Vision-Language Model for Accurate Referring Video Object Segmentation from Natural Language

Sa2VA represents a significant leap forward in multimodal AI by seamlessly integrating the strengths of SAM2—Meta’s state-of-the-art video object segmentation…

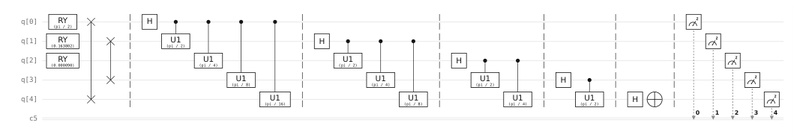

Classiq: Accelerate Quantum Algorithm Development with High-Level Abstraction and Automated Circuit Synthesis

Quantum computing holds immense promise—but building, optimizing, and executing quantum circuits remains a formidable challenge for most developers, researchers, and…

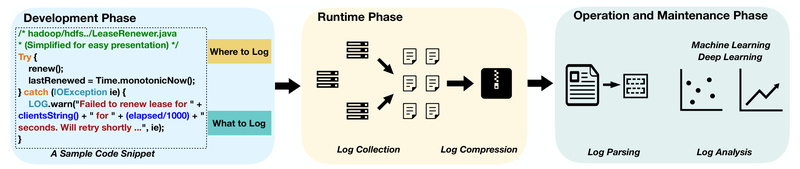

Loghub: Real-World System Log Datasets to Power AI-Driven Log Analytics and Research

In the world of software systems—whether they’re cloud-native applications, distributed infrastructures, or legacy enterprise platforms—logs are the lifeblood of observability.…

Mini-Omni2: Unified Vision, Speech, and Text Interaction Without External ASR/TTS Pipelines

In today’s open-source AI landscape, building truly multimodal applications often means stitching together separate models for vision, speech recognition (ASR),…