MegActor represents a significant leap forward in portrait animation by directly leveraging raw driving videos—rather than simplified proxies like facial…

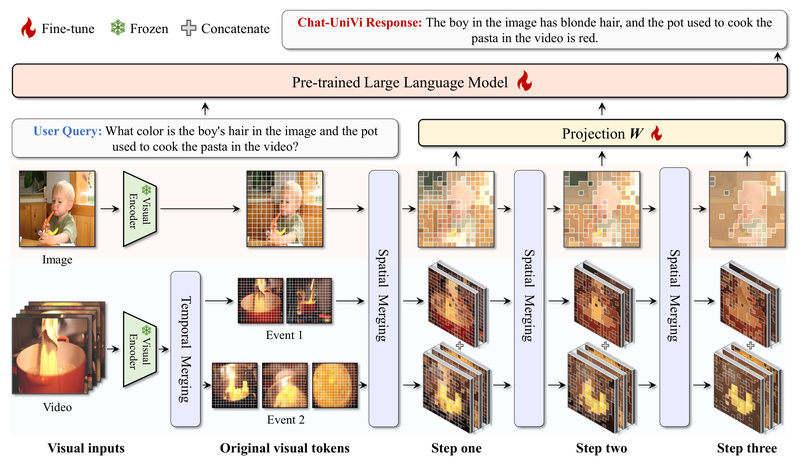

Chat-UniVi: One Unified Model for Image and Video Understanding—No More Separate Systems Needed

In today’s AI landscape, multimodal systems that understand both images and videos are increasingly essential—but most solutions force you to…

TF-ICON: Training-Free Cross-Domain Image Composition Without Retraining or Fine-Tuning

Image composition—seamlessly inserting a user-provided object into a new visual context—is a long-standing challenge in computer vision and generative AI.…

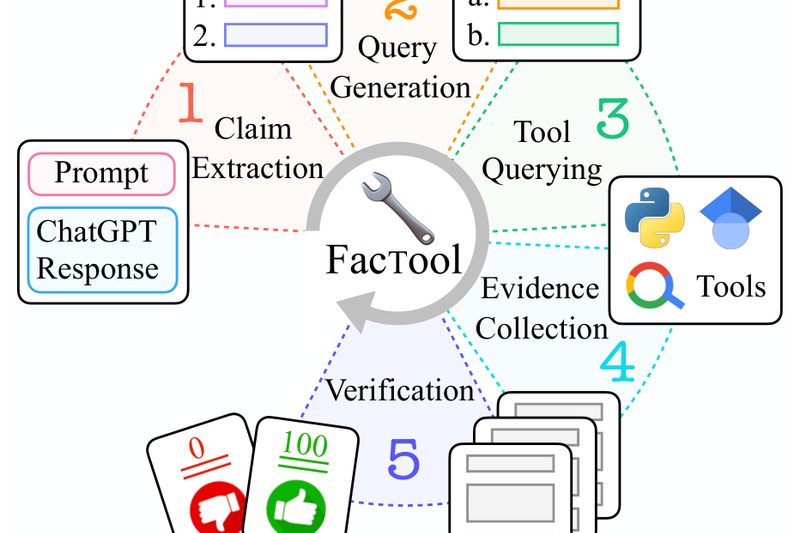

FacTool: Automatically Detect Factual Errors in LLM Outputs Across Code, Math, QA, and Scientific Writing

Large language models (LLMs) like ChatGPT and GPT-4 have transformed how we generate text, write code, solve math problems, and…

HQTrack: High-Quality Video Object Tracking and Segmentation Without Complex Tricks

HQTrack is a powerful and practical framework designed to solve a persistent challenge in computer vision: accurately tracking and segmenting…

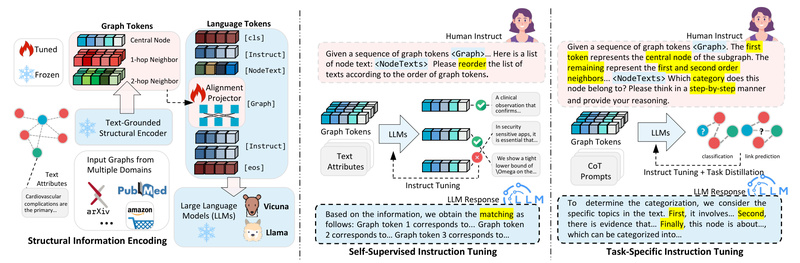

GraphGPT: Enable LLMs to Understand Graphs Without Task-Specific Labels

In today’s data-driven world, much of the most valuable information isn’t stored in tables or plain text—it lives in relationships.…

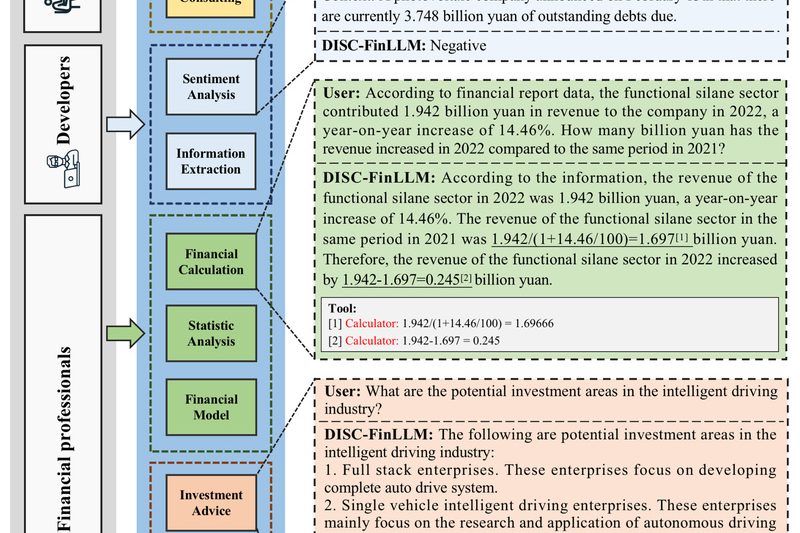

DISC-FinLLM: A Specialized Chinese Financial LLM for Accurate, Context-Aware Financial Intelligence

If you’re building AI-powered tools for the Chinese financial sector—whether for banking, fintech, investment research, or regulatory compliance—you’ve likely run…

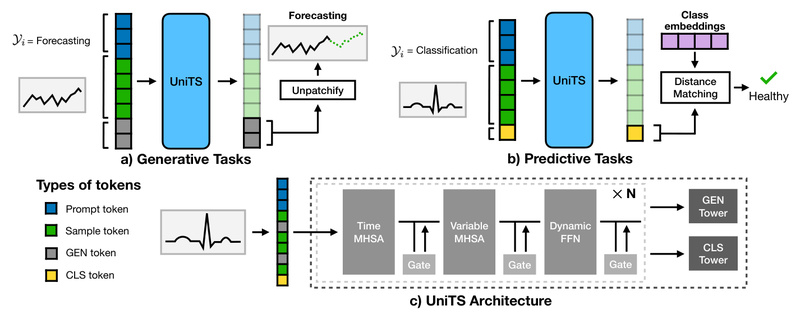

UniTS: One Model to Rule All Time Series Tasks – Forecasting, Classification, Imputation & Anomaly Detection Unified

Time series data is everywhere—in financial markets, wearable health monitors, industrial sensors, and smart infrastructure. Yet, despite its ubiquity, building…

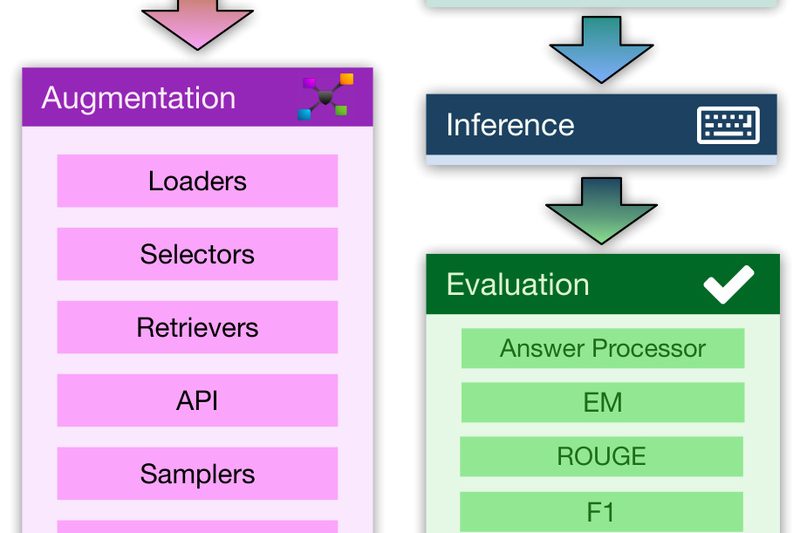

RAG Foundry (RAG-FiT): Build, Train, and Evaluate Domain-Specific RAG Systems Without the Complexity

Building effective Retrieval-Augmented Generation (RAG) systems is notoriously difficult. Practitioners must juggle data preparation, retrieval integration, prompt engineering, model fine-tuning,…

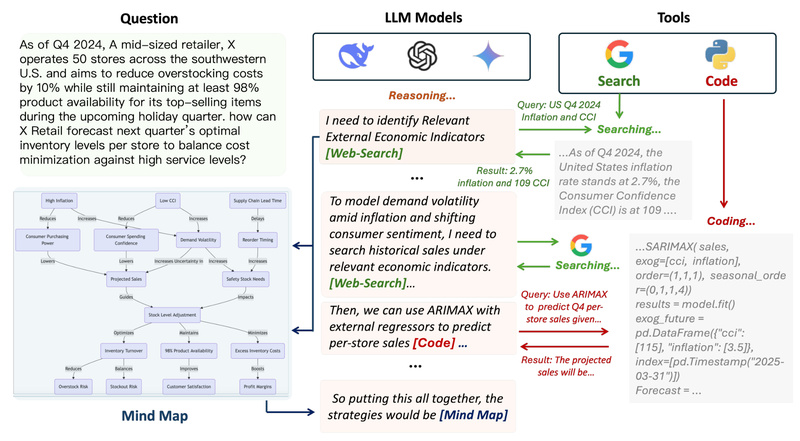

Agentic Reasoning: Supercharge LLM Reasoning with Tool-Augmented Deep Research and Structured Memory

Large language models (LLMs) have made remarkable strides in generating coherent, context-aware responses. Yet, when it comes to complex, multi-step…