In today’s fast-moving technical and research environments, teams need reliable, up-to-date answers to complex questions—without the black-box limitations or high costs of commercial APIs. Enter PokeeResearch, an open-source, 7-billion-parameter deep research agent that autonomously searches the web, reads and analyzes content, and synthesizes well-cited, factually grounded responses. Built for real-world knowledge-intensive workflows, PokeeResearch is not just another question-answering model—it’s a robust, self-correcting research engine trained via reinforcement learning from AI feedback (RLAIF), delivering state-of-the-art performance among 7B-scale agents across 10 major benchmarks.

Unlike typical tool-augmented LLMs, which often break down on ambiguous queries or brittle tool interactions, PokeeResearch uses a chain-of-thought-driven, multi-call reasoning scaffold that supports iterative search, self-verification, and adaptive recovery from tool errors. This makes it well suited to tasks where accuracy, citation integrity, and resilience matter, such as technical due diligence, competitive intelligence, or rapid literature synthesis.

And because it’s open-sourced under the Apache 2.0 license, teams retain full control over deployment, customization, and integration—without vendor lock-in.

Key Strengths That Set PokeeResearch Apart

State-of-the-Art Performance in a 7B Footprint

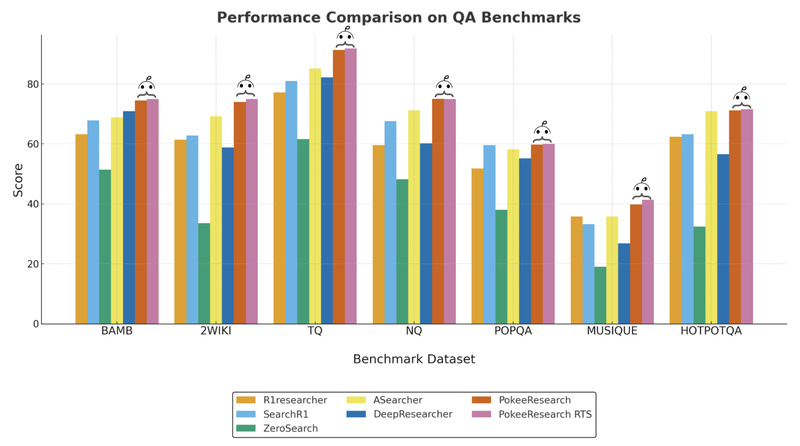

PokeeResearch-7B consistently outperforms other 7B-scale deep research agents across diverse benchmarks, including GAIA, HotpotQA, MuSiQue, 2Wiki, and BrowseComp. For example, it achieves 91.3% accuracy on TriviaQA (TQ) and 74.5% on Bamboogle, well ahead of alternatives such as DeepResearcher and ASearcher. Its enhanced “Research Threads Synthesis” (RTS) variant pushes performance further, reaching 41.3% on GAIA, a notoriously challenging benchmark that requires multi-step reasoning and tool use over real-world web data.

This performance isn’t accidental—it stems from a deliberate architecture focused on efficiency, alignment, and robustness within a compact model size.

Reinforcement Learning from AI Feedback (RLAIF)

One of PokeeResearch’s core innovations is its annotation-free RLAIF training framework. Instead of relying on costly human labels, it uses LLM-generated reward signals to optimize for three critical dimensions:

- Factual accuracy: Does the answer match verified ground truth?

- Citation faithfulness: Are sources correctly attributed and relevant?

- Instruction adherence: Does the response fully address the user’s query?

This ensures the agent doesn’t just “sound smart”—it delivers research-grade outputs that are verifiable and trustworthy.
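To make the three dimensions concrete, here is a minimal sketch of how per-dimension judge scores could be folded into a single scalar reward for RL training. The `JudgeScores` fields, the weights, and the rule that a factually wrong answer earns nothing are illustrative assumptions, not PokeeResearch's published reward; in an actual RLAIF pipeline the scores would come from an LLM judge rather than being passed in by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JudgeScores:
    """Scores in [0, 1] that a hypothetical LLM judge assigns to one answer."""
    factual_accuracy: float       # does the answer match the ground truth?
    citation_faithfulness: float  # are the cited sources correct and relevant?
    instruction_adherence: float  # does it address the whole query?


# Hypothetical weights; a real training setup would tune or replace these.
WEIGHTS = {
    "factual_accuracy": 0.6,
    "citation_faithfulness": 0.25,
    "instruction_adherence": 0.15,
}


def combined_reward(scores: JudgeScores, accuracy_gate: float = 0.5) -> float:
    """Fold the three judge scores into one scalar reward in [0, 1].

    Answers the judge deems factually wrong (below accuracy_gate) earn zero,
    so well-cited or well-formatted but wrong answers are not rewarded.
    """
    for name in WEIGHTS:
        value = getattr(scores, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    if scores.factual_accuracy < accuracy_gate:
        return 0.0
    return sum(weight * getattr(scores, name) for name, weight in WEIGHTS.items())


if __name__ == "__main__":
    print(combined_reward(JudgeScores(1.0, 1.0, 1.0)))  # fully correct, cited, on-task
    print(combined_reward(JudgeScores(0.0, 1.0, 1.0)))  # wrong answer, gated to 0.0
```

Gating on accuracy is one way to keep a policy from learning to produce polished, well-cited answers that are wrong; a plain weighted sum would still give such answers partial credit.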

Robust Multi-Turn Reasoning with Self-Recovery

PokeeResearch decomposes complex questions into sub-queries, performs iterative web searches, reads and cross-references content, and synthesizes answers—all while monitoring its own progress. If a tool call fails (e.g., a webpage doesn’t load or a search returns irrelevant results), the agent can detect the failure and adapt its strategy, such as refining the query or trying an alternative source.

This combination of self-verification and error recovery is still uncommon among open-source agents, and it is critical for handling noisy, real-world web data.
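The search, read, and verify cycle described above can be sketched as a small loop. Everything here is hypothetical: the tool functions are stubs standing in for Serper search and Jina extraction, and the recovery policy (skip a page that fails to load, refine the query when nothing relevant turns up) is hard-coded, whereas PokeeResearch makes these decisions through model-generated reasoning.

```python
from typing import Callable, Optional


class ToolError(Exception):
    """Raised when a tool call fails, e.g. a page does not load."""


def research(
    question: str,
    search: Callable[[str], list[str]],
    read: Callable[[str], str],
    is_relevant: Callable[[str, str], bool],
    refine: Callable[[str], str],
    max_rounds: int = 3,
) -> Optional[tuple[str, str]]:
    """Return (url, text) for the first relevant page found, or None.

    Hypothetical recovery policy: if a page fails to load, try the next URL;
    if no result is relevant, refine the query and search again.
    """
    query = question
    for _ in range(max_rounds):
        try:
            urls = search(query)
        except ToolError:
            urls = []  # a failed search counts as an empty result
        for url in urls:
            try:
                text = read(url)
            except ToolError:
                continue  # fall back to the next candidate source
            if is_relevant(question, text):
                return url, text  # verified evidence: stop here
        query = refine(query)  # nothing usable this round: adjust the query
    return None


# Demo stubs standing in for real search and extraction tools.
_DEMO_PAGES = {
    "https://a.example": None,  # simulates a page that fails to load
    "https://b.example": "PokeeResearch is a 7B deep research agent.",
}


def fake_search(query: str) -> list[str]:
    return list(_DEMO_PAGES) if "7B" in query else []


def fake_read(url: str) -> str:
    text = _DEMO_PAGES[url]
    if text is None:
        raise ToolError(f"failed to load {url}")
    return text


if __name__ == "__main__":
    result = research(
        "What is PokeeResearch?",
        fake_search,
        fake_read,
        is_relevant=lambda q, t: "PokeeResearch" in t,
        refine=lambda q: q + " 7B",
    )
    print(result)
```

In the demo, the first search returns nothing, the refined query finds two URLs, the first fails to load, and the loop recovers by reading the second.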

Fully Open and Customizable

Unlike proprietary APIs from OpenAI, Perplexity, or Google (Gemini), PokeeResearch is open source under the Apache 2.0 license, allowing teams to:

- Audit the reasoning logic

- Modify tool integrations

- Fine-tune for domain-specific tasks

- Deploy on-premises or in private clouds

This transparency is invaluable for regulated industries, academic research, or any use case where explainability and control are non-negotiable.
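As an illustration of what modifying tool integrations can look like, the sketch below defines a hypothetical `SearchBackend` protocol so that a web-search tool and an internal document index become interchangeable. None of these names come from the PokeeResearch codebase; they show the design pattern, not the project's actual tool API, and the keyword-count ranking is a deliberately naive stand-in for real retrieval.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class SearchHit:
    url: str
    snippet: str


class SearchBackend(Protocol):
    """Anything with a search(query, k) method can serve as the search tool."""

    def search(self, query: str, k: int = 5) -> list[SearchHit]: ...


class InternalDocsBackend:
    """Hypothetical backend over a private, pre-approved document set."""

    def __init__(self, docs: dict[str, str]) -> None:
        self.docs = docs  # maps a document URL or ID to its text

    def search(self, query: str, k: int = 5) -> list[SearchHit]:
        terms = query.lower().split()
        scored = []
        for url, text in self.docs.items():
            score = sum(text.lower().count(term) for term in terms)
            if score > 0:
                scored.append((score, url, text))
        # Best match first; ties broken by URL for deterministic output.
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [SearchHit(url, text[:200]) for _, url, text in scored[:k]]


def gather_evidence(backend: SearchBackend, query: str) -> list[str]:
    """Agent-side code depends only on the protocol, not a concrete backend."""
    return [hit.url for hit in backend.search(query, k=3)]
```

Because `gather_evidence` only relies on the protocol, swapping in a web-search backend or a vector index requires no change to the agent-side code.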

Ideal Use Cases for Technical Teams and Researchers

PokeeResearch shines in scenarios where you need automated, citation-rich, and up-to-date answers—but don’t want to pay premium API fees or sacrifice control. Consider these practical applications:

- Technical Due Diligence: Quickly assess a startup’s claims by automatically gathering and cross-referencing technical documentation, GitHub activity, and expert commentary.

- Competitive Intelligence: Monitor competitors’ product launches, feature updates, or patent filings through synthesized web research reports.

- Rapid Literature Reviews: Automate the first-pass analysis of recent papers, blog posts, or documentation on emerging topics like Mamba architectures or LoRA variants.

- Internal Research Assistants: Deploy a private agent that answers engineering or R&D questions using your team’s approved sources (when extended with custom retrieval).

- Automated Q&A Over Current Events: Build systems that answer time-sensitive questions (e.g., “What are the latest FDA guidelines on AI in medical devices?”) with fresh, cited evidence.

Because PokeeResearch generates full research trajectories—including search queries, retrieved snippets, and reasoning steps—teams can audit, validate, and improve its outputs over time.
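Because a trajectory is structured data, simple automated checks become possible. The sketch below assumes a hypothetical trajectory format (a list of steps, each with a kind and content, plus a final answer whose citations are written as `[n]` markers pointing at the n-th page read); PokeeResearch's actual saved format may differ. It flags citations that do not correspond to any page the agent actually read.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Step:
    kind: str     # "search", "read", or "reason" (hypothetical labels)
    content: str  # query text, source URL, or reasoning text


@dataclass
class Trajectory:
    question: str
    steps: list[Step] = field(default_factory=list)
    answer: str = ""


def read_sources(traj: Trajectory) -> list[str]:
    """URLs the agent actually read, in order; [1] refers to the first."""
    return [step.content for step in traj.steps if step.kind == "read"]


def dangling_citations(traj: Trajectory) -> list[int]:
    """Citation numbers in the answer that match no source the agent read."""
    n_sources = len(read_sources(traj))
    cited = sorted({int(m) for m in re.findall(r"\[(\d+)\]", traj.answer)})
    return [c for c in cited if not 1 <= c <= n_sources]
```

A check like this catches only structural problems; verifying that a cited page actually supports the claim still needs a human reviewer or a separate judge model.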

How to Get Started

Deploying PokeeResearch assumes basic DevOps familiarity. Here’s the high-level flow:

- Set up the environment: A prebuilt Docker image handles dependencies (CUDA 12.8, Transformers, SGLang, etc.). The setup has been tested on an A100 80GB GPU; smaller GPUs may work but have not been validated.

- Configure API keys: You’ll need free or paid keys for:

  - Serper (web search)

  - Jina (web content extraction)

  - Gemini (content summarization and evaluation)

  - Hugging Face (to download the model)

- Run evaluations or launch the agent:

  - Use `bash run.sh` to reproduce benchmark results

  - Launch the CLI app (`python cli_app.py`) for scriptable queries

  - Use the Gradio GUI (`python gradio_app.py`) for an interactive interface

- Synthesize reports: Saved research threads in `val_results/` can be refined into final reports using the `run_rts.sh` script.
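Before running any of these scripts, it can help to fail fast on missing credentials. The sketch below checks the environment for one variable per service. `HF_TOKEN` is the variable `huggingface_hub` reads; the other three names are assumptions, so align them with whatever the repository's README or configuration actually expects.

```python
import os
from typing import Mapping

# Hypothetical variable names except HF_TOKEN (read by huggingface_hub);
# rename the others to match the repository's own configuration.
REQUIRED_KEYS = {
    "SERPER_API_KEY": "Serper (web search)",
    "JINA_API_KEY": "Jina (web content extraction)",
    "GEMINI_API_KEY": "Gemini (summarization and evaluation)",
    "HF_TOKEN": "Hugging Face (model download)",
}


def missing_keys(env: Mapping[str, str]) -> list[str]:
    """Return the required variables that are unset or blank."""
    return [name for name in REQUIRED_KEYS if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_keys(os.environ)
    if missing:
        for name in missing:
            print(f"missing {name}: needed for {REQUIRED_KEYS[name]}")
    else:
        print("all API keys present")
```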

The project supports both local inference and vLLM serving for higher throughput, making it adaptable to different infrastructure needs.
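If you serve the weights with vLLM, its OpenAI-compatible server (started with `vllm serve <model>`, listening on port 8000 by default) accepts standard chat-completion requests. The sketch below builds such a request with only the standard library. The model ID is a placeholder, and this queries the bare model: the search-and-read tool loop is orchestrated by the project's own agent code, not by the server.

```python
import json
import urllib.request

# Placeholder: substitute the model ID you actually passed to `vllm serve`.
MODEL_ID = "your-org/your-model-id"
BASE_URL = "http://localhost:8000/v1"  # vLLM's default host and port


def build_chat_request(prompt: str, model: str = MODEL_ID,
                       base_url: str = BASE_URL,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 120.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from `send` makes the payload easy to inspect or log, which fits the document's emphasis on auditability.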

Important Considerations and Limitations

While powerful, PokeeResearch isn’t a zero-friction plug-and-play tool. Teams should be aware of:

- Third-party API dependencies: Each search or content read consumes credits from Serper, Jina, or Gemini. Budget accordingly.

- Hardware requirements: Officially tested on A100 80GB GPUs. Smaller GPUs may work but haven’t been validated.

- Internet connectivity: Requires live web access—no offline mode.

- Technical setup: Not a consumer app. Best suited for teams comfortable with Docker, API keys, and basic scripting.
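Because every tool call is billed, a back-of-the-envelope estimate helps with budgeting. All per-call prices and per-question call counts below are placeholders, not real Serper, Jina, or Gemini pricing; substitute current rates from each provider and call counts measured from your own saved trajectories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolUsage:
    calls_per_question: float  # average calls observed per research question
    cost_per_call_usd: float   # provider price per call (placeholder values)


def estimate_cost(questions: int, usage: dict[str, ToolUsage]) -> dict[str, float]:
    """Estimated USD spend per provider, plus a 'total' entry."""
    costs = {
        name: questions * u.calls_per_question * u.cost_per_call_usd
        for name, u in usage.items()
    }
    costs["total"] = sum(costs.values())
    return costs


# Placeholder numbers for illustration only; not real pricing.
EXAMPLE_USAGE = {
    "serper": ToolUsage(calls_per_question=8, cost_per_call_usd=0.001),
    "jina": ToolUsage(calls_per_question=5, cost_per_call_usd=0.002),
    "gemini": ToolUsage(calls_per_question=5, cost_per_call_usd=0.004),
}

if __name__ == "__main__":
    for name, usd in estimate_cost(1000, EXAMPLE_USAGE).items():
        print(f"{name:>7}: ${usd:,.2f}")
```

With these placeholder numbers, 1,000 questions come to 8 + 10 + 20 = 38 USD; the point is the structure of the estimate (questions × calls per question × price per call), not the figures.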

That said, for organizations already managing LLM pipelines or research automation systems, PokeeResearch’s balance of performance, openness, and robustness offers a compelling alternative to expensive, opaque commercial APIs.

Summary

PokeeResearch raises the bar for open-source deep research agents. By combining RL-optimized alignment, self-correcting reasoning, and transparent, citation-aware synthesis, it delivers research-grade answers without per-query fees for the model itself (search, extraction, and Gemini calls are still billed), while giving teams full control over the stack. If your work involves complex, evidence-based reasoning over dynamic web content, and you value accuracy, auditability, and cost efficiency, PokeeResearch is a strong candidate for integration into your technical workflow.