Deploying high-performance computer vision models on resource-constrained devices such as smartphones, embedded sensors, and IoT gateways remains a significant engineering challenge. Developers usually face a trade-off: sacrifice detection accuracy for speed, or accept inference times too long for a real-time user experience. PP-PicoDet, an anchor-free, ultra-lightweight object detector developed by the PaddlePaddle team, targets this gap: it reports state-of-the-art (SOTA) accuracy among mobile-class detectors while keeping inference fast on ARM CPUs and other mobile hardware.

Designed specifically for on-device deployment, PP-PicoDet pushes real-time object detection further under tight computational budgets. Its PicoDet-S variant has under 1 million parameters, and the authors report inference speeds exceeding 120 FPS on a mobile ARM CPU at 320×320 input. It outperforms popular lightweight contenders such as YOLOX-Nano and NanoDet in both accuracy and latency. If your project demands efficient, reliable, production-ready object detection on the edge, PP-PicoDet deserves serious consideration.
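Frame-rate, latency, and parameter-count figures like these are easy to misread, so a quick back-of-the-envelope conversion helps when checking them against your own budget. The sketch below is plain arithmetic, not a benchmark: the helper names are hypothetical, and the bytes-per-parameter values (4 for FP32, 1 for INT8) are assumptions about how the weights are stored.

```python
def frame_latency_ms(fps: float) -> float:
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def speedup_from_latency_reduction(reduction: float) -> float:
    """Throughput multiplier implied by a fractional latency reduction.

    A 55% latency reduction (reduction=0.55) leaves 45% of the original
    time per frame, so throughput rises by a factor of 1 / 0.45.
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def weight_size_mb(num_params: float, bytes_per_param: int = 4) -> float:
    """Raw weight storage in megabytes (assumes 4 bytes per FP32 parameter)."""
    return num_params * bytes_per_param / 1e6


print(f"120 FPS -> {frame_latency_ms(120):.2f} ms per frame")
print(f"55% lower latency -> {speedup_from_latency_reduction(0.55):.2f}x throughput")
print(f"0.99M params -> {weight_size_mb(0.99e6):.2f} MB as FP32, "
      f"{weight_size_mb(0.99e6, 1):.2f} MB as INT8")
```

One practical consequence: a detector running at 120 FPS uses roughly 8 ms of each frame, which leaves most of a 33 ms budget (a 30 FPS camera) for pre-processing, post-processing, and application logic.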

Why PP-PicoDet Stands Out

PP-PicoDet isn’t just another lightweight detector—it’s the result of systematic architectural and training optimizations tailored for mobile efficiency without compromising accuracy. Its core innovations include:

- Anchor-free design: Eliminates the computational overhead of anchor boxes, simplifying the detection head and reducing memory usage—ideal for edge deployment.

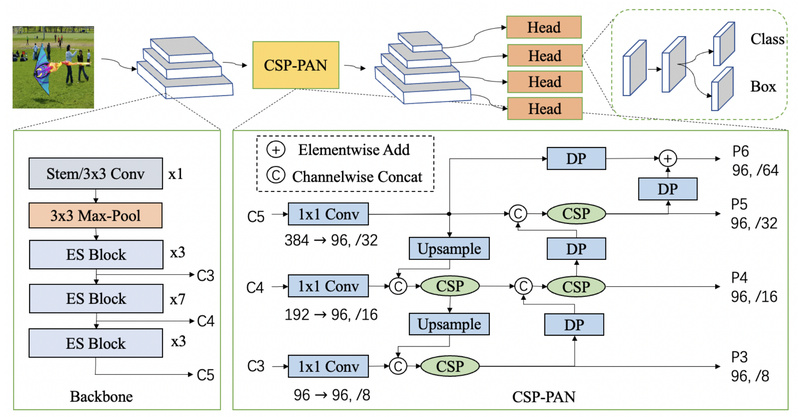

- Optimized backbone and neck: Pairs a lightweight feature extractor (ESNet in the original paper, LCNet in later PaddleDetection releases) with a streamlined neck (CSP-PAN originally, LC-PAN in later releases) to enhance multi-scale feature fusion while minimizing FLOPs.

- Improved label assignment and loss functions: The original paper uses SimOTA dynamic label assignment together with Varifocal Loss and GIoU loss, a refined training strategy that stabilizes convergence and boosts mAP, especially for small objects.

- Extreme parameter efficiency: The smallest variant in the original paper, PicoDet-S, has only 0.99 million parameters yet achieves 30.6% mAP on COCO, an absolute gain of 4.8 points over YOLOX-Nano while cutting mobile CPU inference latency by 55%.

- Scalable performance: PicoDet-L (3.3M parameters) reaches 40.9% mAP, outperforming YOLOv5s by 3.7% mAP and running 44% faster.

These improvements collectively place PP-PicoDet in a useful sweet spot: competitive accuracy for its size at true edge-device speed.
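To make the anchor-free idea concrete, the sketch below decodes one prediction the way a GFL-style head does: each feature-map cell predicts four distances (left, top, right, bottom) to the box edges, with each distance expressed as a distribution over discrete bins. This is an illustration under stated assumptions, not PaddleDetection's implementation: the function names are hypothetical, and the bin count (8) and the half-cell center offset are assumed values.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_distance(bin_logits):
    """Expected bin index under the predicted distribution (in stride units)."""
    probs = softmax(bin_logits)
    return sum(index * p for index, p in enumerate(probs))


def decode_box(col, row, stride, side_logits):
    """Turn one cell's prediction into (x1, y1, x2, y2) in input pixels.

    side_logits holds four lists of bin logits, ordered left, top, right,
    bottom. No anchor boxes are involved: the only reference is the cell
    center, assumed here to sit at a half-cell offset.
    """
    cx = (col + 0.5) * stride
    cy = (row + 0.5) * stride
    left, top, right, bottom = (
        expected_distance(logits) * stride for logits in side_logits
    )
    return (cx - left, cy - top, cx + right, cy + bottom)


# A cell at column 2, row 3 on a stride-8 feature map, with a flat
# (maximally uncertain) distribution over 8 bins on every side.
flat = [0.0] * 8
print(decode_box(col=2, row=3, stride=8, side_logits=[flat] * 4))
```

Because every cell regresses its box directly, there is no per-anchor loop and no anchor-size hyperparameter to tune, which is where the head's savings in compute and memory come from.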

Ideal Use Cases

PP-PicoDet excels wherever real-time detection must run locally—without relying on cloud connectivity or powerful GPUs. Key application scenarios include:

- Mobile applications: Camera-based assistants, AR filters, or retail scanning tools that require instant object recognition.

- Smart city infrastructure: Roadside systems like trash detection on municipal vehicles (as demonstrated in PaddleDetection’s official case studies), where low-latency inference enables immediate action.

- Industrial IoT and embedded vision: Defect inspection on production lines, warehouse inventory tracking, or agricultural monitoring via drones—all running on low-power ARM or RISC-V chips.

- Autonomous machines: Service robots, delivery drones, or security cameras that need to detect people, vehicles, or obstacles with minimal delay.

Because PP-PicoDet is optimized for CPU inference (and supports Paddle Lite), it’s particularly well-suited for Android and embedded Linux devices that lack dedicated AI accelerators.

Getting Started with PP-PicoDet

Integrating PP-PicoDet into your project is straightforward, thanks to PaddleDetection’s modular design and developer-friendly tooling:

- Access pre-trained models: PaddleDetection provides ready-to-use checkpoints for PicoDet-S, PicoDet-L, and other variants, all trained on COCO and optimized for mobile inference.

- Use intuitive configuration files: Each model comes with YAML-based config files that define architecture, training parameters, and data pipelines—easy to customize for your dataset.

- Fine-tune on custom data: With just a few commands, you can retrain PP-PicoDet on your own labeled images using standard COCO format.

- Deploy efficiently: Export models to Paddle Lite for mobile/edge deployment, or use Paddle Inference for server-side CPU/GPU inference. The toolkit also supports one-click model invocation via Python APIs, lowering the barrier for rapid prototyping.
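For the fine-tuning step above, the main preparation work is getting your labels into COCO format. The sketch below builds a minimal COCO-style annotation file using only the standard library. The field names follow the public COCO detection format; the helper name, file names, and class names are hypothetical placeholders.

```python
import json


def make_coco_dataset(images, boxes, class_names):
    """Build a minimal COCO detection dict.

    images: list of (file_name, width, height)
    boxes:  list of (file_name, class_name, x, y, w, h), where (x, y) is
            the top-left corner in pixels and (w, h) is the box size.
    """
    categories = [{"id": i, "name": n} for i, n in enumerate(class_names, start=1)]
    category_id = {c["name"]: c["id"] for c in categories}

    coco_images = [
        {"id": i, "file_name": name, "width": w, "height": h}
        for i, (name, w, h) in enumerate(images, start=1)
    ]
    image_id = {img["file_name"]: img["id"] for img in coco_images}

    annotations = []
    for ann_id, (name, cls, x, y, w, h) in enumerate(boxes, start=1):
        annotations.append({
            "id": ann_id,
            "image_id": image_id[name],
            "category_id": category_id[cls],
            "bbox": [x, y, w, h],  # COCO boxes are [x, y, width, height]
            "area": w * h,
            "iscrowd": 0,
        })

    return {"images": coco_images, "annotations": annotations, "categories": categories}


dataset = make_coco_dataset(
    images=[("frame_0001.jpg", 640, 480)],
    boxes=[("frame_0001.jpg", "bottle", 100, 120, 50, 80)],
    class_names=["bottle", "can"],
)
print(json.dumps(dataset, indent=2))
```

A common pitfall is supplying boxes as corner pairs (x1, y1, x2, y2); COCO expects the top-left corner plus width and height, so convert before writing the file.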

Notably, PaddleDetection now integrates with PaddleX, enabling end-to-end workflows—from data labeling to deployment—with unified APIs across vision tasks, including instance segmentation and keypoint detection.
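Tying the workflow together, the fine-tuning and export steps can be sketched as two command lines, assuming you run them from the root of a PaddleDetection checkout. The `tools/train.py` and `tools/export_model.py` scripts and the `-c`, `-o`, `--eval`, and `--output_dir` flags come from PaddleDetection; the config file name, weights path, and `num_classes` value below are placeholders that should be checked against your own checkout and dataset. The helpers only build the argument lists, so nothing is executed until you pass them to `subprocess.run`.

```python
import shlex

# Assumed config path; list configs/picodet/ in your checkout to confirm.
CONFIG = "configs/picodet/picodet_s_320_coco_lcnet.yml"


def train_command(config, overrides=None, evaluate=True):
    """Argument list for fine-tuning, with optional `-o key=value` overrides."""
    cmd = ["python", "tools/train.py", "-c", config]
    if evaluate:
        cmd.append("--eval")  # run validation during training
    if overrides:
        # -o goes last because it accepts a variable number of values.
        cmd += ["-o"] + [f"{key}={value}" for key, value in overrides.items()]
    return cmd


def export_command(config, weights, output_dir):
    """Argument list for exporting trained weights to an inference model."""
    return [
        "python", "tools/export_model.py",
        "-c", config,
        "--output_dir", output_dir,
        "-o", f"weights={weights}",
    ]


train = train_command(CONFIG, overrides={"num_classes": 3})
export = export_command(
    CONFIG,
    weights="output/picodet_s_320_coco_lcnet/best_model",  # placeholder path
    output_dir="inference_model",
)
print(shlex.join(train))
print(shlex.join(export))

# To actually run a step:
#   import subprocess
#   subprocess.run(train, check=True)
```

Keeping the commands as lists rather than shell strings avoids quoting problems when dataset paths contain spaces.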

Limitations and Considerations

While PP-PicoDet sets a new benchmark for lightweight detection, it’s important to recognize its boundaries:

- Accuracy ceiling: Though excellent for its size, PP-PicoDet doesn’t match the absolute accuracy of large server-grade detectors like PP-YOLOE+ (53.3% mAP) or RT-DETR. It’s optimized for efficiency-driven scenarios, not maximum accuracy.

- Task scope: It targets 2D bounding box detection only. For specialized tasks like 3D object detection, instance segmentation, or pose estimation, you’ll need other models in the PaddleDetection suite (e.g., PP-TinyPose or Mask-RT-DETR).

- Ecosystem dependency: PP-PicoDet is built on PaddlePaddle, not PyTorch or TensorFlow. Teams heavily invested in other frameworks may face adaptation costs, although ONNX export is supported for interoperability.

If your priority is pushing detection accuracy to the limit on a server, PP-PicoDet may not be the best fit. But if you’re building for the edge—where latency, power, and model size matter—its trade-offs are exceptionally well balanced.

Summary

PP-PicoDet delivers real-time object detection with competitive accuracy on mobile and embedded devices, a combination that was previously hard to achieve. By combining a lean anchor-free architecture, efficient backbone design, and robust training strategies, it outperforms prior lightweight models such as YOLOX-Nano and NanoDet in both speed and accuracy. Whether you’re building a smart camera, an autonomous robot, or a city-scale monitoring system, PP-PicoDet offers a production-ready solution that reduces development time and hardware costs. For technical decision-makers evaluating on-device vision pipelines, it’s a compelling choice worth exploring through its open-source repository and pre-trained assets.