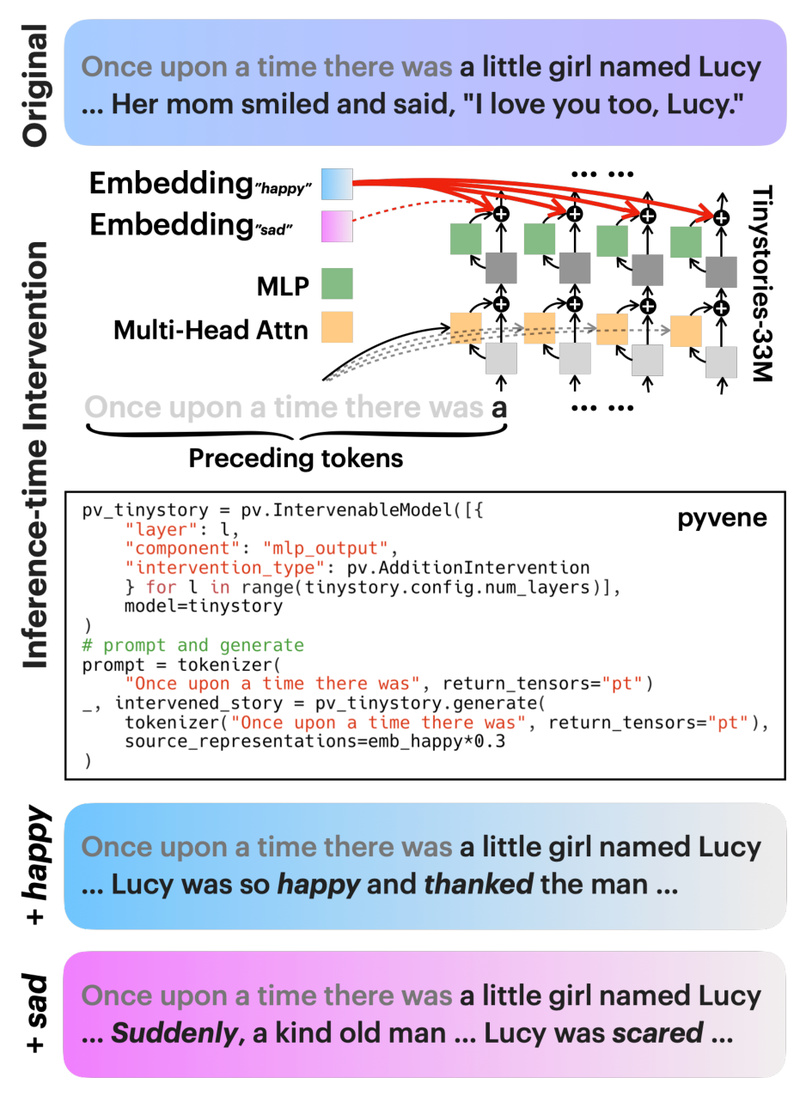

Imagine being able to precisely edit, steer, or probe a trained PyTorch model—without touching its source code or retraining it from scratch. That’s the power pyvene brings to your workflow. Developed by Stanford NLP, pyvene is an open-source Python library designed to make interventions on internal model states simple, composable, and broadly applicable across architectures. Whether you’re debugging unexpected behavior, testing hypotheses about how a model makes decisions, or adjusting outputs on the fly, pyvene gives you surgical control over activations, neurons, or entire layers—right out of the box.

Unlike approaches that require custom model subclasses or invasive modifications, pyvene treats interventions as first-class, declarative operations. This means you can define, share, and reproduce them as easily as saving a JSON file.

Why Interventions Matter in Real Projects

In practical AI development, understanding and modifying model behavior isn’t just academic—it’s essential. Consider these common scenarios:

- Model editing: Correcting factual errors in a language model (e.g., updating a person’s job title across all generations) without full fine-tuning.

- Bias mitigation: Steering outputs away from harmful stereotypes by intervening on specific internal representations during decoding.

- Interpretability: Testing whether a particular layer or neuron group is causally responsible for a model’s decision through controlled perturbations.

- Robustness evaluation: Measuring how sensitive a vision model is to noise injected into intermediate features.
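The interpretability scenario above is often tested with an interchange intervention (also called activation patching). You run the model on a source input, copy some of its hidden units into a run on a base input, and check whether the output changes. Below is a minimal, dependency-free sketch in plain Python. It assumes a made-up two-layer network, and the weights and the `forward`/`interchange` helpers are hypothetical; none of this is pyvene's API.

```python
# Hypothetical toy network in plain Python; weights and inputs are made up.

def relu(v):
    return [max(0.0, x) for x in v]


def matvec(w, v):
    return [sum(wi * xi for wi, xi in zip(row, v)) for row in w]


W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]  # 2 inputs -> 3 hidden units
W2 = [[1.0, 2.0, 0.5]]                      # 3 hidden units -> 1 output


def forward(x, patch=None):
    """Run the toy model; `patch` maps hidden-unit index -> value to write in."""
    h = relu(matvec(W1, x))
    if patch:
        h = [patch.get(i, hi) for i, hi in enumerate(h)]
    return matvec(W2, h)[0], h


def interchange(base, source, units):
    """Overwrite `units` of the base run's hidden state with the source run's values."""
    _, h_src = forward(source)
    patched, _ = forward(base, patch={i: h_src[i] for i in units})
    return patched


base, source = [1.0, 0.0], [0.0, 1.0]
y_base, _ = forward(base)
y_source, _ = forward(source)
print(y_base, y_source, interchange(base, source, units=[1]))  # 1.5 2.0 3.5
```

Patching no units leaves the base output (1.5), and patching every hidden unit reproduces the source output exactly (2.0). Patching only unit 1 changes the output to 3.5, so in this toy the output causally depends on that unit for this input pair.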

These tasks typically require deep knowledge of model internals and significant engineering effort. pyvene abstracts away much of that complexity, letting practitioners focus on what to intervene on rather than how to hook into the model.

Key Features That Enable Practical Flexibility

1. Declarative, Serializable Interventions

Interventions in pyvene can be declared as plain Python dictionaries. This design choice has powerful implications:

- You can save interventions to disk and reload them later.

- You can share them via Hugging Face or version control, enabling reproducible research and collaboration.

- Complex intervention logic (e.g., “replace activations in layers 5 and 7 with values from a reference input”) is expressed concisely.
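A declarative spec is just data, so the Python standard library is enough to save it, reload it, and diff it. The minimal sketch below assumes a hypothetical spec for the "layers 5 and 7" example. The key names are illustrative only, and pyvene's own configuration schema and save/load helpers may differ.

```python
import json
import os
import tempfile

# Hypothetical intervention spec; key names are illustrative, not pyvene's schema.
spec = {
    "source": "reference_input",
    "interventions": [
        {"layer": 5, "component": "block_output", "type": "interchange"},
        {"layer": 7, "component": "block_output", "type": "interchange"},
    ],
}


def save_spec(spec: dict, path: str) -> None:
    """Write the spec as stable, human-readable JSON (sorted keys diff cleanly)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(spec, f, indent=2, sort_keys=True)


def load_spec(path: str) -> dict:
    """Read a spec back from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


path = os.path.join(tempfile.mkdtemp(), "intervention.json")
save_spec(spec, path)
restored = load_spec(path)
print(restored == spec)  # True
```

Sorting keys and indenting keeps the file stable across saves, so any change to an experiment shows up as a readable diff in version control.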

2. Composable and Granular Control

pyvene supports a wide range of intervention patterns:

- Multi-location: Apply changes to multiple layers or modules simultaneously.

- Neuron-level precision: Target arbitrary subsets of features or channels.

- Temporal control: In autoregressive models, intervene at specific decoding steps (e.g., only while generating the third token).

- Sequential or parallel execution: Chain interventions or run them concurrently based on your experimental design.
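These patterns compose naturally when each intervention is a small spec that names a location, a set of units, an operation, and optionally a decoding step. The dependency-free toy sketch below assumes a stand-in "model" whose layers just add 1 to every feature. The `Intervention` dataclass and the `apply`/`run` helpers are hypothetical and only mimic the idea; they are not pyvene's implementation. Interventions registered for the same location run in list order, that is, sequentially.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

Vector = List[float]


@dataclass
class Intervention:
    """Hypothetical spec: where to intervene and what to do there."""
    layer: int                    # which layer's output to edit
    units: Sequence[int]          # which neurons (feature indices) to edit
    fn: Callable[[float], float]  # transformation applied to each selected value
    step: Optional[int] = None    # decoding step to fire at; None means every step


def apply(h: Vector, layer: int, step: int, interventions: List[Intervention]) -> Vector:
    """Apply, in list order, every intervention registered for this layer and step."""
    h = list(h)  # copy so the caller's activations are left untouched
    for iv in interventions:
        if iv.layer == layer and (iv.step is None or iv.step == step):
            for u in iv.units:
                h[u] = iv.fn(h[u])
    return h


def run(x: Vector, n_layers: int, step: int, interventions: List[Intervention]) -> Vector:
    """Toy stand-in for a model: each layer adds 1.0 to every feature."""
    h = list(x)
    for layer in range(n_layers):
        h = [v + 1.0 for v in h]
        h = apply(h, layer, step, interventions)
    return h


ivs = [
    Intervention(layer=0, units=[0], fn=lambda v: 0.0),         # zero neuron 0 after layer 0
    Intervention(layer=1, units=[1, 2], fn=lambda v: 2.0 * v),  # double neurons 1 and 2 after layer 1
    Intervention(layer=1, units=[2], fn=lambda v: -v, step=2),  # extra edit only at decoding step 2
]

x = [0.0, 0.0, 0.0]
print(run(x, n_layers=2, step=0, interventions=ivs))  # [1.0, 4.0, 4.0]
print(run(x, n_layers=2, step=2, interventions=ivs))  # [1.0, 4.0, -4.0]
print(run(x, n_layers=2, step=0, interventions=[]))   # [2.0, 2.0, 2.0]
```

At step 0 the step-gated edit is skipped, so neuron 2 ends at 4.0. At step 2 it also fires after the doubling and negates that value.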

3. Architecture-Agnostic Compatibility

One of pyvene’s standout strengths is its broad compatibility across model families. It works with:

- Transformers (e.g., BERT, Llama)

- CNNs (e.g., ResNet)

- RNNs

- State-space models like Mamba

You don’t need to subclass your model or rewrite forward passes. Just load a standard PyTorch model, define your intervention, and run inference—the library handles the rest.

Getting Started in Minutes

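Using pyvene is intentionally straightforward. Install it from PyPI:

```bash
pip install pyvene
```

Then load a pre-trained PyTorch model, declare the intervention as a dictionary, and wrap the model with `pv.IntervenableModel`. The example below follows the dictionary-config style shown in the pyvene README (exact keys can vary between releases). It replaces the output of GPT-2's layer 10 with a zero vector at token position 3:

```python
import torch
import pyvene as pv
from transformers import AutoModelForCausalLM, AutoTokenizer

# Any pre-trained PyTorch model works; GPT-2 is small enough to try locally.
tokenizer = AutoTokenizer.from_pretrained("gpt2")
model = AutoModelForCausalLM.from_pretrained("gpt2")

# Declarative intervention: overwrite layer 10's block output with zeros.
pv_model = pv.IntervenableModel({
    "layer": 10,
    "component": "block_output",
    "source_representation": torch.zeros(model.config.n_embd),
}, model=model)

inputs = tokenizer("The capital of Spain is", return_tensors="pt")

# Intervene at token position 3 and also return the unmodified output.
original_output, intervened_output = pv_model(
    base=inputs,
    unit_locations={"base": 3},
    output_original_output=True,
)
```

Here `unit_locations` selects the token position(s) to intervene on. `output_original_output=True` returns the unmodified output alongside the intervened one, so you can compare the two directly.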

The official documentation includes detailed tutorials for common use cases—from causal mediation analysis to knowledge localization—so you don’t need to reverse-engineer internals to get started.

Limitations and Practical Considerations

While powerful, pyvene isn’t a silver bullet:

- It is not a tool for training full models. Its focus is on inference-time interventions and on lightweight, trainable interventions attached to an existing model.

- Trainable interventions (e.g., learned projections) are supported but require careful tuning and understanding of gradient flow through the intervention points.

- As an actively developed library, APIs may evolve—though the core design prioritizes stability for practitioners.
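To build intuition for training an intervention, here is a stdlib-only toy. The model's weights stay frozen, and gradient descent learns only the intervention's own parameter. That parameter is a scalar steering offset along a fixed direction, a deliberately simpler stand-in for a learned projection. The weights, target, and learning rate are all made up, and the gradient is derived by hand.

```python
# Frozen toy "model": output = dot(w, h), where h is a hidden activation.
w = [1.0, 2.0]   # frozen readout weights (never updated)
h = [1.0, 1.0]   # hidden activation produced by the frozen model
d = [0.0, 1.0]   # fixed steering direction at the intervention point
target = 5.0     # desired output after the intervention


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intervened_output(alpha):
    """Intervention h' = h + alpha * d, followed by the frozen readout."""
    h_new = [hi + alpha * di for hi, di in zip(h, d)]
    return dot(w, h_new)


alpha, lr = 0.0, 0.1
for _ in range(50):
    y = intervened_output(alpha)
    # loss = (y - target)^2; by the chain rule, d(loss)/d(alpha) = 2 * (y - target) * dot(w, d)
    grad = 2.0 * (y - target) * dot(w, d)
    alpha -= lr * grad  # only the intervention parameter is updated

print(round(alpha, 6), round(intervened_output(alpha), 6))  # 1.0 5.0
```

In practice an autodiff library such as PyTorch computes this gradient through the frozen network. The care mentioned in the trainable-interventions bullet lies in making sure gradients reach the intervention's parameters while the base weights stay fixed.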

That said, for teams needing to diagnose, modify, or control trained models without maintaining forked model codebases, pyvene significantly lowers the barrier to entry.

Summary

pyvene bridges the gap between theoretical intervention frameworks and real-world model debugging, editing, and steering. By providing a lightweight, serialization-friendly, and architecture-agnostic interface, it empowers engineers and researchers to act on model internals with minimal overhead. If your work involves understanding or altering how PyTorch models behave post-training—whether for safety, accuracy, or interpretability—pyvene is worth integrating into your toolkit today.