Generating high-quality, long-form videos with diffusion models remains one of the most computationally demanding tasks in generative AI. Standard attention mechanisms scale quadratically—O(n²)—with sequence length, quickly becoming infeasible as video duration increases. Radial Attention addresses this bottleneck head-on by introducing a physics-inspired, sparse attention pattern that reduces complexity to O(n log n) while preserving visual fidelity. Developed by researchers from MIT, NVIDIA, and other leading institutions, Radial Attention enables existing video diffusion models like Wan2.1-14B and HunyuanVideo to generate videos up to 4× longer with dramatically lower training and inference costs—making high-resolution, extended-duration video generation practical even on a single GPU.

Why Radial Attention Works: The Physics of Attention Decay

At the heart of Radial Attention is a key empirical observation: Spatiotemporal Energy Decay. In video diffusion models, post-softmax attention scores naturally diminish as the spatial and temporal distance between tokens increases—mirroring how physical signals or waves lose intensity over space and time. Instead of fighting this phenomenon, Radial Attention leverages it.

The mechanism uses a static, deterministic attention mask that enforces two principles:

- Spatial locality: Each token attends primarily to nearby pixels within the same frame.

- Temporal decay: As the number of frames between two tokens grows, the width of their attention window shrinks exponentially.

This results in a radial sparsity pattern—dense near the diagonal (close in time and space) and increasingly sparse farther away—translating observed energy decay into efficient compute allocation.

Key Strengths That Solve Real Engineering Problems

O(n log n) Complexity Without Sacrificing Expressiveness

Unlike linear attention approximations that trade off model capacity for speed, Radial Attention maintains the full expressive power of dense attention for nearby tokens while drastically cutting redundant long-range computations. The result is near-identical video quality at a fraction of the cost.

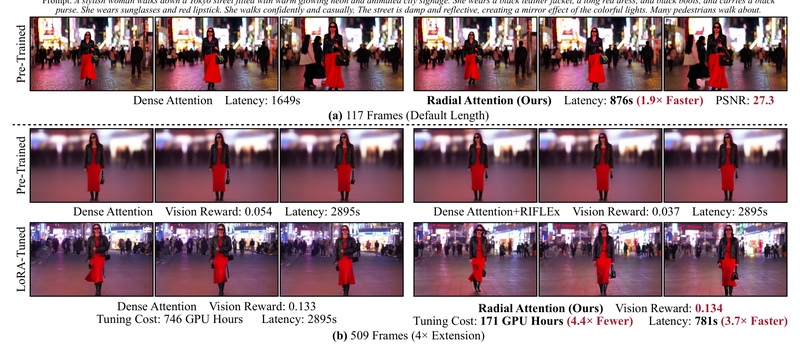

Up to 3.7× Faster Inference and 4.4× Lower Tuning Costs

When generating 4× longer videos (e.g., extending from 128 to 512 frames), Radial Attention:

- Accelerates inference by up to 3.7× compared to dense attention

- Reduces fine-tuning costs by up to 4.4× versus full-model retraining

- Cuts total attention computation by 9× in 500-frame 720p generation

These gains are not theoretical—they’re validated across multiple state-of-the-art models, including Wan2.1-14B, HunyuanVideo, and Mochi-1.

Seamless Integration with Modern Acceleration Stacks

Radial Attention is designed for real-world deployment:

- LoRA-compatible: Extend video length with lightweight, efficient fine-tuning—no full retraining needed.

- Accelerator-ready: Works with SageAttention (v1 and v2) for FP8/INT4 matmul optimizations and FlashInfer for hardware-friendly kernel execution.

- Multi-GPU support: Leverages Ulysses sequence parallelism from xDiT for distributed inference.

- Toolchain integration: Already supported in ComfyUI via the ComfyUI-nunchaku extension.

In practice, this means you can generate high-fidelity videos in as little as 33 seconds on an H100 or 90 seconds on a consumer-grade 4090 GPU when combined with Lightx2v LoRA.

Ideal Use Cases for Technical Teams

Radial Attention shines in scenarios where video length, cost, and latency are critical constraints:

- Media & Entertainment: Generate multi-minute cinematic clips or social media content without cloud-scale GPU budgets.

- Simulation & Training: Produce long-horizon synthetic videos for robotics, autonomous driving, or VR training environments.

- AI-Powered Content Platforms: Offer users the ability to create extended AI videos on-demand while controlling infrastructure costs.

- Research Prototyping: Rapidly experiment with long-context video models without waiting days for a single training run.

If your team is already using Wan2.1-14B or HunyuanVideo and needs to push beyond their native video lengths, Radial Attention provides the most efficient path forward.

How to Get Started—Without Rewriting Your Entire Pipeline

Adopting Radial Attention requires minimal changes to existing workflows:

- Environment Setup: Use Python 3.12, PyTorch 2.5.1, and CUDA 12.4. Install dependencies including FlashAttention and the latest Diffusers from Hugging Face.

- Optional Acceleration: For maximum speed, compile SageAttention and FlashInfer as described in the repo.

- Run Inference: Execute pre-configured scripts:

bash scripts/wan_t2v_inference.shfor Wan2.1-14Bbash scripts/hunyuan_t2v_inference.shfor HunyuanVideo

Because Radial Attention operates as a drop-in replacement for the attention module in supported models, you don’t need to redesign your pipeline. Fine-tuning for longer videos only requires applying a lightweight LoRA—no access to original training data or massive compute clusters needed.

Current Limitations and Considerations

While powerful, Radial Attention has boundaries to keep in mind:

- Model Support: Officially supports Wan2.1-14B and HunyuanVideo; Mochi-1 integration is listed as in-progress.

- Static Sparsity: The attention mask is fixed based on temporal distance, which may not adapt to scene cuts or non-stationary content.

- Dependency on Optimized Backends: Peak performance relies on FlashInfer or SageAttention—vanilla PyTorch runs will be slower.

- LoRA Checkpoints Not Yet Public: While the method supports extended-length LoRAs, the trained weights for 4× generation haven’t been released yet. You’ll need to fine-tune them yourself using the provided tools.

These constraints mean Radial Attention is best suited for teams already working with compatible diffusion models and comfortable with moderate system-level optimization.

Summary

Radial Attention rethinks video attention through the lens of natural signal decay, delivering O(n log n) efficiency without compromising quality. By enabling 4× longer video generation at a fraction of the cost—and integrating smoothly with modern LoRA and acceleration ecosystems—it removes a major barrier to practical, long-form AI video. For engineering teams, product builders, and researchers tired of hitting computational walls, Radial Attention isn’t just an optimization—it’s a gateway to what’s next in generative video.