Large Language Models (LLMs) have opened new possibilities for recommender systems, enabling natural-language interaction, personalized explanations, and dynamic user control. Yet a critical flaw persists: generic LLMs often recommend items that do not exist in your domain, such as nonexistent products, movies outside your catalog, or irrelevant services. These out-of-domain (OOD) recommendations erode user trust, degrade system reliability, and make naive LLM recommenders impractical for real-world deployment.

RecAI, developed by Microsoft Research, directly addresses this challenge. Rather than being just another LLM wrapper, it is a cohesive, open-source toolkit for integrating LLMs into recommender systems with a focus on domain relevance, interpretability, and interactivity. Its components are modular and lightweight: some work purely through prompting and tool use, while others add targeted training or decoding constraints. Together they let teams enforce domain constraints, improve retrieval, and explain decisions without giving up the flexibility that makes LLMs powerful.

Why OOD Recommendations Are a Dealbreaker—and How RecAI Fixes Them

When deployed naively, LLMs generate recommendations from broad world knowledge rather than from your specific item catalog. In e-commerce, this might mean recommending red wireless headphones that your inventory doesn't carry. In streaming, it could mean suggesting a film that was never licensed. These hallucinated suggestions confuse users, increase support costs, and undermine business logic.

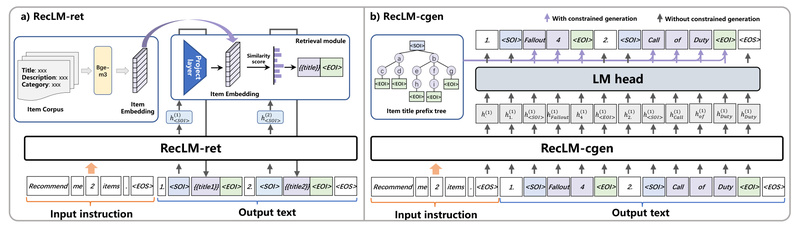

RecAI introduces RecLM-cgen, a constrained generation method that restricts an LLM to recommending items from a predefined, in-domain catalog. Unlike retrieval-based alternatives such as RecLM-ret, RecLM-cgen acts during token generation itself: vocabulary masking and constrained decoding allow only token sequences that spell out a catalog item, eliminating OOD outputs at the source. Experiments on three real-world datasets show it consistently outperforming both baseline LLM recommenders and the retrieval-based method in accuracy while producing no OOD recommendations.
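To make "vocabulary masking and constrained decoding" concrete, here is a minimal sketch of trie-based constrained decoding, a common way to implement this kind of masking. It is not RecLM-cgen's code. Assumptions: tokens are whitespace-separated words (real systems use the tokenizer's subword IDs), `toy_scores` stands in for the LLM's next-token scores, and the names `END`, `build_trie`, and `constrained_decode` are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

END = "<EOI>"  # hypothetical end-of-item marker appended to every title


def build_trie(titles: Sequence[str]) -> Dict[str, dict]:
    """Prefix tree over the token sequences of all in-catalog item titles."""
    root: Dict[str, dict] = {}
    for title in titles:
        node = root
        for tok in title.split() + [END]:  # toy tokenizer: whitespace split
            node = node.setdefault(tok, {})
    return root


def constrained_decode(
    score_fn: Callable[[List[str]], Dict[str, float]],
    trie: Dict[str, dict],
    max_len: int = 32,
) -> str:
    """Greedy decoding in which every token outside the trie is masked out."""
    prefix: List[str] = []
    node = trie
    for _ in range(max_len):
        scores = score_fn(prefix)
        # Vocabulary mask: only tokens that extend a valid catalog prefix survive;
        # tokens the model did not score default to -inf.
        allowed = {tok: scores.get(tok, float("-inf")) for tok in node}
        best = max(allowed, key=allowed.__getitem__)
        if best == END:
            return " ".join(prefix)
        prefix.append(best)
        node = node[best]
    raise RuntimeError("max_len reached before an item title was completed")


def toy_scores(prefix: List[str]) -> Dict[str, float]:
    """Stand-in for LLM next-token scores; it 'wants' an out-of-catalog title."""
    wanted = ["The", "Matrix", "Resurrections", END]
    scores = {"Reloaded": 1.0}
    if len(prefix) < len(wanted):
        scores[wanted[len(prefix)]] = 5.0
    return scores


catalog = ["The Matrix", "The Matrix Reloaded", "Blade Runner"]
print(constrained_decode(toy_scores, build_trie(catalog)))  # The Matrix Reloaded
```

The toy model strongly prefers "Resurrections", which is not in the catalog, so the mask removes it and decoding falls back to the best-scoring continuation that still completes a real item title.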

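Critically, RecLM-cgen does not require retraining a model from scratch; it is designed as a lightweight, plug-and-play module for existing decoder-only LLMs. Because token-level masking needs access to the model's next-token scores at every decoding step, it fits most naturally with models whose inference you control, such as open-weight models like Llama or Mistral; hosted chat APIs (like Azure OpenAI) generally do not expose that per-step control.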

Modular Components for Real-World Recommendation Scenarios

RecAI is not a monolithic system but a suite of interoperable tools, each tailored to a different LLM-for-recommendation (LLM4Rec) challenge:

Constrained Generative Recommendation (RecLM-cgen)

Ensures every recommended item exists in your catalog by restricting the LLM’s output vocabulary during generation. Ideal for production systems where reliability and correctness are non-negotiable.

Text-Based Item Retrieval (RecLM-emb)

Adapts LLMs to produce dense embeddings tuned for item matching, for example between user queries and product descriptions. Unlike general-purpose text embeddings, RecLM-emb is fine-tuned to capture recommendation-relevant semantics, which can improve recall in search and retrieval-augmented generation (RAG) pipelines.
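The retrieval side of this is simple once embeddings exist: embed every item description once, embed the query, and rank by cosine similarity. The sketch below shows only those mechanics. Assumption: `embed` is a hypothetical hashed bag-of-words stand-in; in a real pipeline it would be replaced by calls to a fine-tuned embedding model such as RecLM-emb, whose interface is not shown here.

```python
import math
import re
import zlib
from typing import Dict, List, Tuple


def embed(text: str, dim: int = 256) -> List[float]:
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for tok in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(tok.encode("utf-8")) % dim] += 1.0  # deterministic hashing
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def retrieve(query: str, items: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Rank items by cosine similarity between query and description embeddings."""
    index = {item_id: embed(desc) for item_id, desc in items.items()}  # built offline in practice
    q = embed(query)
    # Vectors are unit-length, so the dot product equals cosine similarity.
    scored = [(item_id, sum(a * b for a, b in zip(q, v))) for item_id, v in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


items = {
    "B01": "Wireless noise-cancelling over-ear headphones, black",
    "B02": "Stainless steel electric kettle, 1.7 litre",
    "B03": "Wired in-ear earbuds with microphone",
}
print(retrieve("noise cancelling wireless headphones", items))
```

Swapping in a better embedding model changes only `embed`; the indexing and ranking steps stay the same, which is why recommendation-tuned embeddings can slot into existing search and RAG pipelines.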

Interactive Recommender Agent (InteRecAgent)

Turns static recommenders into conversational agents by using an LLM as the “brain” and traditional recommendation models (e.g., matrix factorization) as “tools.” Users can ask follow-up questions (“Show me cheaper options”), and the agent dynamically retrieves, ranks, and explains results, all grounded in real inventory because every suggested item comes from the underlying tools.
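The brain-plus-tools pattern can be sketched as a loop in which a planner picks tools and the tools operate on a shared state. This is an illustration, not InteRecAgent's implementation. Assumptions: `plan` is a hypothetical rule-based stand-in for the LLM planner, and `retrieve`, `cheaper`, `rank`, and the toy catalog are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Item:
    item_id: str
    title: str
    price: float
    score: float  # relevance score produced by the backend recommender


CATALOG = [
    Item("g1", "Space Odyssey VR", 59.99, 0.92),
    Item("g2", "Galaxy Racer", 19.99, 0.81),
    Item("g3", "Star Miner", 9.99, 0.77),
    Item("g4", "Farm Life", 14.99, 0.30),
]

State = Dict[str, List[Item]]


def retrieve(state: State) -> None:
    """Tool: candidate generation (toy backend: keep items above a score threshold)."""
    state["candidates"] = [it for it in CATALOG if it.score >= 0.5]


def cheaper(state: State) -> None:
    """Tool: keep candidates cheaper than everything shown in the previous turn."""
    ceiling = min(it.price for it in state["shown"])
    state["candidates"] = [it for it in state["candidates"] if it.price < ceiling]


def rank(state: State) -> None:
    """Tool: order candidates by backend score and keep the top two."""
    state["shown"] = sorted(state["candidates"], key=lambda it: it.score, reverse=True)[:2]


TOOLS: Dict[str, Callable[[State], None]] = {"retrieve": retrieve, "cheaper": cheaper, "rank": rank}


def plan(utterance: str, state: State) -> List[str]:
    """Rule-based stand-in for the LLM 'brain' that decides which tools to call."""
    if "cheaper" in utterance.lower() and state.get("shown"):
        return ["cheaper", "rank"]
    return ["retrieve", "rank"]


def turn(utterance: str, state: State) -> List[str]:
    for tool_name in plan(utterance, state):
        TOOLS[tool_name](state)
    return [it.title for it in state["shown"]]


state: State = {}
print(turn("Recommend some games", state))     # ['Space Odyssey VR', 'Galaxy Racer']
print(turn("Show me cheaper options", state))  # ['Star Miner']
```

Because the LLM only chooses which tools to run, every title it can show comes from `CATALOG`; the model never has to generate item names on its own.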

Knowledge Plugin via Prompting

Injects up-to-date, domain-specific patterns (e.g., trending items or user segments) into prompts without fine-tuning. This enables rapid adaptation to shifting catalogs or user behaviors—crucial for news, fashion, or seasonal retail.
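As one illustration of injecting domain patterns through the prompt, the sketch below computes item co-occurrence from recent sessions and writes the strongest signals into the prompt as plain-language hints. The Knowledge Plugin's actual knowledge types and prompt templates may differ; `co_occurrence`, `knowledge_lines`, `build_prompt`, and the prompt wording are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import List, Sequence


def co_occurrence(sessions: Sequence[Sequence[str]]) -> Counter:
    """Count how often each unordered item pair appears in the same session."""
    pairs: Counter = Counter()
    for session in sessions:
        for a, b in combinations(sorted(set(session)), 2):
            pairs[(a, b)] += 1
    return pairs


def knowledge_lines(history: Sequence[str], sessions: Sequence[Sequence[str]], top_n: int = 3) -> List[str]:
    """Turn co-occurrence statistics into short natural-language hints."""
    seen = set(history)
    related: Counter = Counter()
    for (a, b), n in co_occurrence(sessions).items():
        if a in seen and b not in seen:
            related[b] += n
        elif b in seen and a not in seen:
            related[a] += n
    return [f"- Frequently co-engaged with the user's history: {item} (count {n})"
            for item, n in related.most_common(top_n)]


def build_prompt(history: Sequence[str], sessions: Sequence[Sequence[str]], candidates: Sequence[str]) -> str:
    lines = [
        "You are a recommender. Choose only from the candidate list.",
        "User history: " + ", ".join(history),
        "Domain knowledge (computed from recent sessions):",
        *knowledge_lines(history, sessions),
        "Candidates: " + ", ".join(candidates),
        "Answer with one candidate title.",
    ]
    return "\n".join(lines)


sessions = [["A", "B", "C"], ["A", "C"], ["B", "D"], ["C", "E"]]
print(build_prompt(["A"], sessions, ["B", "C", "D"]))
```

Refreshing `sessions` (for example, daily) updates the knowledge in the next prompt without touching the model's weights, which is the adaptation speed the paragraph above describes.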

Explainability with RecExplainer

Uses LLMs as surrogate models to interpret black-box recommenders. By mimicking the behavior of a target model, RecExplainer generates human-readable justifications (e.g., “Recommended because you liked similar sci-fi films”), boosting transparency for both users and developers.
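One ingredient of the surrogate idea is training data that pairs user histories with the target model's outputs, plus a check of how faithfully the surrogate reproduces them. The sketch below shows both under simplifying assumptions; it is not RecExplainer's training recipe. `alignment_examples`, `fidelity`, the instruction wording, and the toy `target_model` and `imperfect_surrogate` are hypothetical.

```python
import json
from typing import Callable, Dict, List, Sequence

Recommender = Callable[[Sequence[str]], List[str]]  # user history -> ranked item ids


def alignment_examples(target: Recommender, histories: Sequence[Sequence[str]], k: int = 3) -> List[Dict[str, str]]:
    """Instruction/response pairs that teach a surrogate LLM to reproduce the target's top-k."""
    return [
        {
            "instruction": f"The user interacted with {list(h)}. Predict the recommender's top-{k} items.",
            "response": json.dumps(target(h)[:k]),
        }
        for h in histories
    ]


def fidelity(target: Recommender, surrogate: Recommender, histories: Sequence[Sequence[str]], k: int = 3) -> float:
    """Mean top-k overlap between surrogate and target; 1.0 means perfect mimicry."""
    overlaps = [len(set(target(h)[:k]) & set(surrogate(h)[:k])) / k for h in histories]
    return sum(overlaps) / len(overlaps)


POPULARITY = ["i1", "i2", "i3", "i4", "i5"]


def target_model(history: Sequence[str]) -> List[str]:
    # Toy black box: most popular items the user has not seen yet.
    return [i for i in POPULARITY if i not in history]


def imperfect_surrogate(history: Sequence[str]) -> List[str]:
    # Toy surrogate that has only partly learned the target's behavior.
    return [i for i in reversed(POPULARITY) if i not in history]


histories = [["i1"], ["i2", "i5"]]
print(alignment_examples(target_model, histories)[0]["response"])  # ["i2", "i3", "i4"]
print(fidelity(target_model, imperfect_surrogate, histories))
```

A fidelity check like this matters because an explanation is only trustworthy if the surrogate actually behaves like the black-box model it claims to explain.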

Comprehensive Evaluation Suite (RecLM Evaluator)

Assesses LLM-based recommenders across multiple dimensions: retrieval accuracy, ranking quality, explanation fidelity, and general instruction-following ability. Works with both local models and hosted APIs, removing the need to build custom evaluation infrastructure.
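For reference, here are the standard binary-relevance definitions of two common retrieval and ranking metrics, Recall@k and NDCG@k. This is a sketch of the textbook formulas; the RecLM Evaluator's exact metric set and implementation may differ.

```python
import math
from typing import Sequence, Set


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of relevant items that appear in the top-k."""
    if not relevant:
        return 0.0
    return sum(1 for item in ranked[:k] if item in relevant) / len(relevant)


def ndcg_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Binary-relevance NDCG: hits near the top count more (log2 position discount)."""
    dcg = sum(1.0 / math.log2(pos + 2) for pos, item in enumerate(ranked[:k]) if item in relevant)
    ideal = sum(1.0 / math.log2(pos + 2) for pos in range(min(len(relevant), k)))
    return dcg / ideal if ideal > 0 else 0.0


ranked = ["a", "b", "c", "d"]
relevant = {"b", "d"}
print(recall_at_k(ranked, relevant, 3))           # 0.5
print(round(ndcg_at_k(ranked, relevant, 3), 4))   # 0.3869
```

Recall@k ignores order within the top-k, while NDCG@k rewards placing relevant items earlier, so reporting both separates retrieval coverage from ranking quality.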

Ideal Use Cases for RecAI

RecAI is best suited to domains where recommendations must be accurate, explainable, and drawn from a closed set of items:

- Conversational shopping assistants that answer questions and suggest real, in-stock products.

- Content platforms (news, video, music) needing dynamic, explainable suggestions without hallucinated titles.

- Enterprise recommenders requiring auditability and compliance—e.g., in finance or healthcare—where OOD outputs are unacceptable.

- Research teams prototyping LLM4Rec systems who want to avoid rebuilding evaluation or grounding logic from scratch.

Getting Started: A Practical Adoption Path

Adopting RecAI follows a clear, incremental workflow:

- Define your item catalog: A structured list of valid items (e.g., product IDs with descriptions) is required for constrained methods like RecLM-cgen.

- Choose a module: For generative recommendations, start with RecLM-cgen; for retrieval, use RecLM-emb; for interactivity, prototype with InteRecAgent.

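- Integrate with your LLM: InteRecAgent and the Knowledge Plugin work through prompting and tool calls, while components such as RecLM-emb involve fine-tuning a model for the recommendation task; none requires training an LLM from scratch.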

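- Evaluate rigorously: Use the RecLM Evaluator to benchmark retrieval accuracy, ranking quality, explanation fidelity, and general instruction-following, and check that generated recommendations stay inside your catalog.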
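Steps 1 and 4 can be tied together with a small check: represent the catalog as structured records and measure the share of generated recommendations that match no catalog item (the OOD rate). This is a minimal sketch; `CatalogItem`, its fields, and `ood_rate` are hypothetical, and matching on normalized titles is a simplifying assumption (production systems often match on item IDs or use fuzzier matching).

```python
import re
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class CatalogItem:
    item_id: str
    title: str
    description: str


def normalize(title: str) -> str:
    """Case-fold and collapse whitespace so trivial formatting differences still match."""
    return re.sub(r"\s+", " ", title.strip().lower())


def ood_rate(recommendations: Iterable[str], catalog: Iterable[CatalogItem]) -> float:
    """Fraction of recommended titles that match no catalog item."""
    valid = {normalize(item.title) for item in catalog}
    recs = list(recommendations)
    if not recs:
        return 0.0
    return sum(1 for r in recs if normalize(r) not in valid) / len(recs)


catalog = [
    CatalogItem("m1", "The Matrix", "1999 science-fiction film"),
    CatalogItem("m2", "Blade Runner", "1982 science-fiction film"),
]
print(ood_rate(["the matrix", "Blade  Runner", "The Matrix 5"], catalog))
```

Here one of three recommendations ("The Matrix 5") is out of catalog, so the OOD rate is one third; a constrained method should drive this number to zero.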

The entire toolkit is open-sourced under the permissive MIT license and designed for ease of integration with existing Python-based recommendation stacks.

Limitations and Practical Trade-offs

RecAI is practical to deploy, but it does not cover every scenario:

- RecLM-emb currently supports only the text modality; image and other multimodal embeddings are not yet available.

- Constrained generation requires a static or periodically updated catalog; real-time item additions may need pipeline adjustments.

- InteRecAgent assumes integration with a backend recommender system (e.g., collaborative filtering or two-tower models) to function as its “tool.”

These trade-offs largely reflect real-world constraints of LLM4Rec systems rather than oversights, and they keep each component focused, efficient, and easier to deploy.
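The catalog-update limitation is easier to see with a concrete constraint structure. The sketch below is a title prefix tree that counts how many live titles pass through each node, so items can be added or delisted without a full rebuild. It is an illustration under stated assumptions, not how RecAI handles updates: tokens are whitespace-separated words rather than tokenizer IDs, and `CatalogTrie`, `allowed_next`, and `END` are hypothetical names. In a live system the structure must also stay in sync with whatever decoder is reading it.

```python
from typing import Dict, List, Set, Tuple

END = "<EOI>"  # hypothetical end-of-title marker


class _Node:
    __slots__ = ("children", "count")

    def __init__(self) -> None:
        self.children: Dict[str, "_Node"] = {}
        self.count = 0  # number of live titles whose path passes through this node


class CatalogTrie:
    """Title prefix tree that supports adding and delisting items without a full rebuild."""

    def __init__(self) -> None:
        self.root = _Node()

    @staticmethod
    def _tokens(title: str) -> List[str]:
        return title.split() + [END]  # toy whitespace tokenizer

    def add(self, title: str) -> None:
        node = self.root
        for tok in self._tokens(title):
            node = node.children.setdefault(tok, _Node())
            node.count += 1

    def remove(self, title: str) -> None:
        # Validate the full path first so a missing title leaves the trie untouched.
        path: List[Tuple[_Node, str, _Node]] = []
        node = self.root
        for tok in self._tokens(title):
            child = node.children.get(tok)
            if child is None:
                raise KeyError(title)
            path.append((node, tok, child))
            node = child
        for parent, tok, child in path:
            child.count -= 1
            if child.count == 0:  # no live title uses this branch any more: prune it
                del parent.children[tok]
                break

    def allowed_next(self, prefix: List[str]) -> Set[str]:
        """Tokens a constrained decoder may emit after `prefix`."""
        node = self.root
        for tok in prefix:
            node = node.children.get(tok)
            if node is None:
                return set()
        return set(node.children)


trie = CatalogTrie()
for title in ["The Matrix", "The Matrix Reloaded", "Blade Runner"]:
    trie.add(title)
trie.remove("The Matrix Reloaded")  # item delisted
trie.add("Dune")                    # new arrival
print(sorted(trie.allowed_next([])))                 # ['Blade', 'Dune', 'The']
print(sorted(trie.allowed_next(["The", "Matrix"])))  # ['<EOI>']
```

Incremental updates like these are cheap, but adding the same title twice counts it twice, and every update must be published to the serving decoder, which is the kind of pipeline adjustment real-time catalogs require.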

Summary

RecAI targets one of the most pressing problems in applying LLMs to recommendation: preventing hallucinated, out-of-domain suggestions while preserving interactivity, explainability, and ease of use. Its modular components, from constrained generation to agent-based interaction, let engineering and research teams build more trustworthy, user-centric recommenders without starting from scratch. Developed by Microsoft Research and released under the MIT license, RecAI offers a practical bridge between the promise of LLMs and the rigor that production recommendation systems require.