Imagine being able to take a single, static video shot on your phone and instantly transform it into a cinematic sequence with smooth panning, dramatic zooms, or elegant arcing camera motions—without needing multiple camera angles, depth sensors, or 3D reconstruction. That’s exactly what ReCamMaster enables.

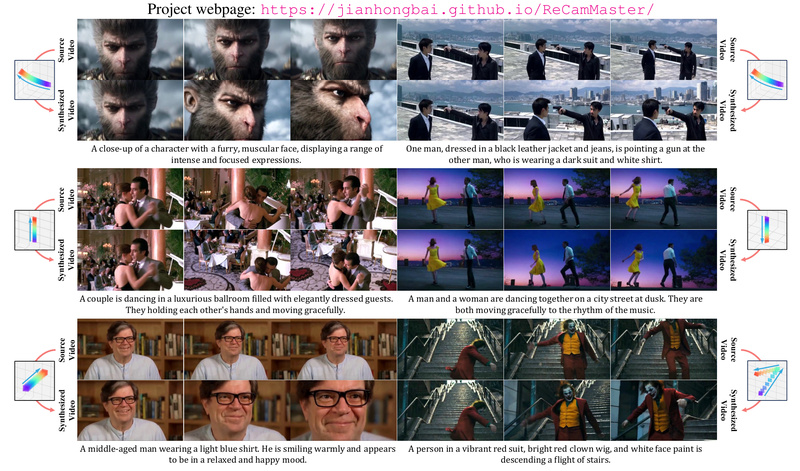

Developed by researchers from Kuaishou Technology and Zhejiang University, ReCamMaster is a novel generative framework that re-renders a given video from entirely new camera trajectories. Unlike conventional approaches that require multi-view inputs or explicit 3D scene modeling, ReCamMaster works directly from a single in-the-wild video and leverages the power of pre-trained text-to-video (T2V) diffusion models—augmented with a lightweight yet highly effective video conditioning mechanism—to generate photorealistic outputs with synchronized motion and appearance.

This capability opens up powerful new possibilities for video creators, editors, and AI researchers who want dynamic camera control without the traditional production overhead.

Why ReCamMaster Stands Out

Single-Video Input, Rich Camera Control

Most methods for altering camera motion rely on stereo footage, depth maps, or structure-from-motion pipelines, requirements that severely limit real-world applicability. ReCamMaster bypasses these constraints. It needs only one input video (with at least 81 frames) and a text caption describing its content. From there, it can generate new views along 10 predefined camera trajectories:

- Horizontal pans (left/right)

- Vertical tilts (up/down)

- Zoom in/out

- Translational moves with rotation (up/down)

- Arcing motions around the subject (left/right)

These motions mimic real cinematographic techniques, making the output suitable for professional-grade editing.
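To make the idea of a camera trajectory concrete, here is a minimal sketch of a horizontal pan expressed as one rotation matrix per frame. It is an illustration only and not ReCamMaster's trajectory format: the function names, the 30-degree sweep, the linear interpolation, and the sign convention for the yaw angle are all assumptions made for this example.

```python
import math

NUM_FRAMES = 81  # matches the minimum input length mentioned above


def yaw_rotation(angle_rad):
    """Return a 3x3 rotation matrix (nested lists) about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, 0.0, s],
        [0.0, 1.0, 0.0],
        [-s, 0.0, c],
    ]


def pan_trajectory(total_degrees, num_frames=NUM_FRAMES):
    """Interpolate the yaw linearly from 0 to total_degrees, one pose per frame.

    Hypothetical helper: which sign means "left" or "right" depends on the
    coordinate convention, which this sketch does not fix.
    """
    if num_frames < 2:
        raise ValueError("need at least two frames to define a motion")
    poses = []
    for i in range(num_frames):
        t = i / (num_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        poses.append(yaw_rotation(math.radians(total_degrees * t)))
    return poses


poses = pan_trajectory(total_degrees=30.0)
print(len(poses), "poses; final yaw cosine:", round(poses[-1][0][0], 4))
```

The other trajectory families follow the same pattern: a tilt would rotate about the horizontal axis instead, and an arc would combine a rotation with a translation around the subject.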

A Simple Yet Powerful Conditioning Strategy

The core innovation of ReCamMaster lies not in an architectural overhaul, but in how it conditions a pre-trained T2V model on temporal and spatial information from the source video. Rather than training a generative model from scratch, ReCamMaster injects video-level features in a way that preserves dynamic consistency across frames, so that objects move coherently and lighting remains stable even under novel viewpoints. This approach is computationally efficient and compatible with existing T2V backbones.
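One way such video conditioning can be realized is to join the source-video tokens and the target-video tokens along the frame axis, so the backbone attends over both sequences at once. The sketch below shows only the shape bookkeeping for that idea. Treat it as an assumption about the mechanism rather than something this article confirms; the helper names, the toy sizes, and the target-first ordering are all hypothetical.

```python
def make_tokens(frames, tokens_per_frame, channels, fill):
    """Build a toy token grid shaped [frames][tokens_per_frame][channels]."""
    return [
        [[fill] * channels for _ in range(tokens_per_frame)]
        for _ in range(frames)
    ]


def shape(tokens):
    """Return (frames, tokens_per_frame, channels) for a toy token grid."""
    return (len(tokens), len(tokens[0]), len(tokens[0][0]))


def concat_along_frames(target_tokens, source_tokens):
    """Join two token grids along the frame axis (ordering is arbitrary here)."""
    if shape(target_tokens)[1:] != shape(source_tokens)[1:]:
        raise ValueError("per-frame token count and channel width must match")
    return target_tokens + source_tokens


# Toy sizes chosen for readability, not taken from any real model.
target = make_tokens(frames=3, tokens_per_frame=4, channels=2, fill=0.0)
source = make_tokens(frames=3, tokens_per_frame=4, channels=2, fill=1.0)

joined = concat_along_frames(target, source)
print(shape(joined))  # frame count doubles; per-frame layout is unchanged
```

The design point is that the channel width stays the same, so the backbone's existing layers can process the longer sequence without changes to their input dimensions.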

Trained on a Purpose-Built Synthetic Dataset

To overcome the scarcity of real-world multi-camera synchronized video data, the team built the MultiCamVideo Dataset using Unreal Engine 5. This dataset contains 136,000 synchronized videos across 13,600 dynamic scenes, each captured from 10 different camera trajectories. The scenes combine diverse 3D environments, human characters, and animations, with camera motions calibrated to mirror real-world filming practices—including variable speeds, realistic focal lengths, and natural arc paths.

This synthetic training data allows ReCamMaster to generalize effectively to everyday videos captured on smartphones or consumer cameras.

Practical Extensions Beyond Camera Re-rendering

Beyond novel camera synthesis, ReCamMaster demonstrates surprising versatility in downstream video enhancement tasks. The same framework can be repurposed for:

- Video stabilization: Re-rendering shaky footage along a smooth trajectory.

- Super-resolution: Generating higher-resolution frames with consistent motion.

- Outpainting: Extending the scene beyond the original frame boundaries while maintaining camera-aware perspective.

This multi-functionality makes it a valuable asset not just for content creation, but also for video processing pipelines.

Who Should Use ReCamMaster?

ReCamMaster is ideal for:

- Social media creators who want to add cinematic camera moves to static clips without reshooting.

- Video editors looking to enhance archival or user-generated content with dynamic viewpoints.

- AI researchers exploring camera-aware generative modeling, video re-rendering, or 4D scene understanding.

- Platform developers building next-generation AI video tools (e.g., auto-cinematography, smart editing assistants).

It shines in scenarios where multi-view data is unavailable but high-quality, motion-consistent re-rendering is desired.

Getting Started: Try It in Minutes

The ReCamMaster team offers two easy entry points:

Option 1: Use the Online Trial (No Code Required)

Upload your video via their official trial link, select one of the 10 camera trajectories, and receive the output via email. This is the fastest way to evaluate the method on your own content.

Option 2: Run Inference Locally

For developers and researchers, the open-source implementation supports local inference using the Wan2.1 T2V model:

- Install the environment (requires Rust and Python dependencies).

- Download the Wan2.1 base model and ReCamMaster checkpoint.

- Prepare your video (≥81 frames) and a metadata.csv file with a descriptive caption.

- Run inference with a chosen cam_type (1–10).
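The preparation steps above can be sketched as a small pre-flight check that validates the inputs and writes the caption file. This is a hedged sketch, not the repository's own tooling: the column names `file_name` and `text`, the helper names, and the sample caption are assumptions, so check the repository's example data for the exact layout it expects.

```python
import csv
import io

MIN_FRAMES = 81                 # minimum input length stated above
VALID_CAM_TYPES = range(1, 11)  # the 10 preset trajectories


def validate_request(frame_count, cam_type):
    """Raise ValueError if the video is too short or cam_type is out of range."""
    if frame_count < MIN_FRAMES:
        raise ValueError(
            f"video has {frame_count} frames; at least {MIN_FRAMES} are required"
        )
    if cam_type not in VALID_CAM_TYPES:
        raise ValueError(f"cam_type must be between 1 and 10, got {cam_type}")


def build_metadata_csv(entries):
    """Return CSV text for a list of (file_name, caption) pairs.

    The header row uses assumed column names; adjust them to match the
    format the inference script actually reads.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["file_name", "text"])
    for file_name, caption in entries:
        caption = caption.strip()
        if not caption:
            raise ValueError(f"{file_name} needs a descriptive caption")
        writer.writerow([file_name, caption])
    return buffer.getvalue()


validate_request(frame_count=81, cam_type=1)
csv_text = build_metadata_csv([("clip.mp4", "A dog runs, then jumps")])
print(csv_text)
```

Writing `csv_text` to a file named metadata.csv next to the video would complete the preparation step. Captions containing commas are quoted automatically by the `csv` module.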

While training is also supported—using the MultiCamVideo dataset and VAE feature extraction—it’s not necessary for most users, as pre-trained weights are provided.

Note: The open-source version uses Wan2.1 because the internal model used in the paper is not publicly released. Performance may differ from the paper's results, but the core capabilities remain available.

Important Limitations to Consider

Before integrating ReCamMaster into your workflow, keep these constraints in mind:

- The best-performing model (used in the paper) is not publicly released due to company policy; the open-source version relies on Wan2.1.

- Input videos should contain sufficient motion and visual diversity—static or highly repetitive scenes may yield less convincing results.

- Only 10 preset trajectories are officially supported out of the box; custom paths require additional engineering.

- Inference demands substantial GPU resources (e.g., 24GB+ VRAM for 81-frame, 480p+ resolution videos).

Despite these constraints, ReCamMaster remains one of the most accessible and effective tools for single-video camera re-rendering available today.

Summary

ReCamMaster redefines what’s possible with a single video input. By intelligently conditioning generative video models and training on a large-scale synthetic dataset, it delivers high-fidelity re-rendering with realistic camera motions—no 3D assets, depth maps, or multi-camera rigs required. Whether you’re a creator seeking cinematic flair or a developer building AI-powered editing tools, ReCamMaster offers a practical, scalable solution to a long-standing challenge in video generation. With code, dataset, and trial access publicly available, now is the perfect time to explore its potential in your own projects.