Attention mechanisms lie at the heart of modern large language models (LLMs) and multimodal architectures—but their quadratic computational complexity remains a major bottleneck, especially during inference. Enter SageAttention3, the latest breakthrough from the THU-ML team that redefines what’s possible in efficient attention computation. Designed for next-generation NVIDIA Blackwell GPUs (like the RTX 5090), SageAttention3 leverages FP4 Tensor Cores to deliver up to 5× faster inference than even the fastest FlashAttention implementations—without sacrificing accuracy.

But that’s only half the story. While most low-bit attention methods stop at inference, SageAttention3 boldly extends quantization into training, introducing a novel 8-bit attention mechanism that works across both forward and backward passes. This dual focus—on ultra-fast inference and practical low-bit training—makes SageAttention3 uniquely valuable for teams deploying or fine-tuning large models under tight latency, cost, or hardware constraints.

Best of all? It’s plug-and-play. You don’t need to retrain your model or overhaul your codebase. Just swap in a single function, and you’re ready to accelerate.

Why SageAttention3 Stands Out

1. Unprecedented Speed via FP4 on Blackwell GPUs

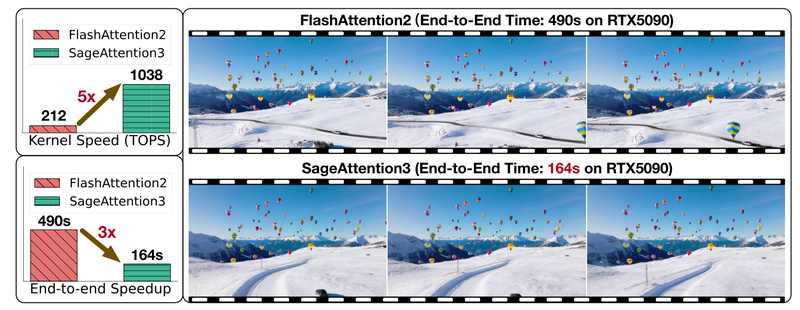

SageAttention3 is the first attention implementation to fully harness the new FP4 Tensor Cores in NVIDIA’s Blackwell architecture. On an RTX 5090, it achieves 1038 TOPS—a 5× speedup over FlashAttention, the previous gold standard. This isn’t just a marginal gain; it’s a transformative leap that enables real-time inference for models that previously struggled with latency, such as video generation systems like CogVideoX.

2. True Plug-and-Play Compatibility

Unlike many optimization techniques that require model-specific tuning or retraining, SageAttention3 works out of the box with existing FP16/BF16 models. By simply replacing torch.nn.functional.scaled_dot_product_attention with sageattn, you can accelerate inference immediately. The library supports common configurations including causal masking, group-query attention, variable sequence lengths (via sageattn_varlen), and both NHD and HND tensor layouts.

3. Pioneering 8-Bit Attention for Training

While FP4 shines in inference, SageAttention3 also explores 8-bit attention for training—a first in the field. The team designed a numerically stable 8-bit attention kernel that supports both forward and backward propagation. Experiments show lossless performance in fine-tuning tasks, making it ideal for parameter-efficient adaptation of large models. However, during full pretraining, convergence is slower—so it’s best suited for fine-tuning scenarios where speed and memory savings outweigh marginal training dynamics.

4. Accuracy Preservation Without Compromise

Despite aggressive quantization, SageAttention3 maintains high fidelity. Techniques like microscaling, outlier-aware smoothing, and two-level accumulation ensure numerical stability, especially in the critical softmax step of attention. The result? No perceptible drop in output quality—even in multimodal generation tasks.

Ideal Use Cases

SageAttention3 isn’t a one-size-fits-all tool, but it excels in specific high-impact scenarios:

- Deploying LLMs or multimodal models on Blackwell GPUs (e.g., RTX 5090, B200) where low-latency inference is critical—think real-time chatbots, video synthesis, or on-device AI.

- Accelerating fine-tuning workflows with 8-bit attention to reduce memory footprint and speed up iterations, without retraining from scratch.

- Rapid prototyping or production rollout where engineering effort must be minimized—thanks to its drop-in replacement design.

It’s particularly valuable for teams already using NVIDIA’s latest hardware and looking to squeeze maximum performance from attention-heavy architectures like Transformers, DiTs (Diffusion Transformers), or Mixture-of-Experts models.

Getting Started: Simple Integration in Minutes

Adopting SageAttention3 requires minimal setup:

-

Install via pip:

pip install sageattention==2.2.0 --no-build-isolation

Or build from source if you need the latest SageAttention3 (Blackwell-optimized) kernel.

-

Replace the attention function:

import torch.nn.functional as F from sageattention import sageattn F.scaled_dot_product_attention = sageattn

-

Run your model as usual—or try the provided examples like

cogvideox-2b.pyto see speedups firsthand.

For models where global function replacement doesn’t work (e.g., complex attention wrappers), manually swap the attention call inside the model’s attention module. The library also integrates smoothly with torch.compile (in non-CuGraph mode) and distributed inference setups.

Important Limitations and Considerations

While powerful, SageAttention3 comes with clear boundaries:

- FP4 acceleration requires Blackwell GPUs (e.g., RTX 5090). On older architectures (Hopper, Ada, Ampere), you’ll fall back to INT8/FP8 variants (SageAttention2 or 2++), which are still fast but don’t unlock the full 5× gain.

- 8-bit training is viable for fine-tuning but not pretraining—expect slower convergence in from-scratch training scenarios.

- Not all models support drop-in replacement via the global

F.scaled_dot_product_attentionoverride. Vision and video models often require targeted replacement in DiT blocks (seeexample/mochi.pyfor guidance). - For precision-sensitive applications, the authors still recommend SageAttention2, which offers higher numerical accuracy than SageAttention3’s FP4 variant.

Summary

SageAttention3 represents a significant leap in attention efficiency—combining record-breaking inference speed on next-gen GPUs with the first practical exploration of low-bit attention in training. Its plug-and-play design lowers the barrier to adoption, while its hardware-aware optimizations ensure you get the most out of NVIDIA’s latest silicon. If you’re deploying or fine-tuning large models on Blackwell hardware and need faster inference without retraining, SageAttention3 is a compelling, production-ready solution worth integrating today.