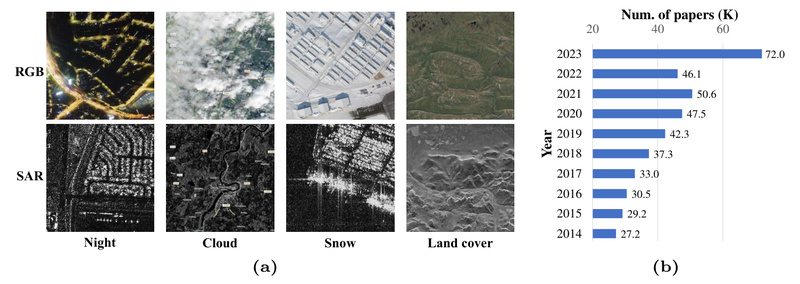

Synthetic Aperture Radar (SAR) imaging offers a unique advantage: it works reliably in all weather conditions, day or night, making it indispensable for defense, maritime surveillance, disaster response, and infrastructure monitoring. Yet, despite its strategic value, SAR-based computer vision has long been held back by two critical bottlenecks: the scarcity of large, diverse public datasets and the lack of accessible, well-documented open-source toolkits.

Enter SARDet-100K—the first COCO-scale, open-source benchmark and toolkit specifically designed for large-scale, multi-class SAR object detection. Built by unifying and standardizing 10 existing SAR datasets, SARDet-100K delivers over 116,000 images and 245,000 annotated instances across six object categories: ships, aircraft, cars, bridges, harbors, and tanks. More than just data, it comes with a full PyTorch-based detection framework built on MMDetection, pretrained models, and a novel pretraining strategy called MSFA (Multi-Stage with Filter Augmentation) that significantly boosts performance across a wide range of architectures.

For researchers and engineers—especially those without access to massive computational resources or proprietary satellite data—SARDet-100K represents a rare “blue ocean” opportunity: a well-structured, reproducible foundation to explore, innovate, and publish in a rapidly growing field.

Why SAR Object Detection Has Been So Challenging

Traditional computer vision models are pretrained on RGB imagery (like ImageNet or COCO), but SAR images are fundamentally different: they are grayscale, speckle-noisy, and encode physical scattering properties rather than visual textures. This domain gap causes standard transfer learning approaches to underperform, even when fine-tuned on SAR data.
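To make the domain gap concrete, the sketch below simulates speckle on a perfectly uniform patch. It uses the standard textbook multiplicative model for L-look intensity images: observed = reflectivity * n, with n drawn from a Gamma distribution of mean 1 and variance 1/L. This model is an illustrative assumption; SARDet-100K images are real sensor data, not simulations. The last helper shows a common workaround: replicate the single SAR channel three times so an RGB-pretrained backbone accepts it. That fixes the input shape but not the statistical mismatch.

```python
import numpy as np

def simulate_speckle(reflectivity, looks=1, seed=0):
    """Apply fully developed multiplicative speckle to an intensity image.

    Assumed model: observed = reflectivity * n, n ~ Gamma(shape=L, scale=1/L),
    so E[n] = 1 and Var[n] = 1/L.
    """
    rng = np.random.default_rng(seed)
    noise = rng.gamma(shape=looks, scale=1.0 / looks, size=reflectivity.shape)
    return reflectivity * noise

def to_three_channels(img):
    """Replicate a single-channel SAR image so an RGB-pretrained backbone accepts it."""
    return np.repeat(img[..., None], 3, axis=-1)

# A perfectly uniform scene: any pixel-to-pixel variation below is speckle, not texture.
flat = np.full((256, 256), 100.0)
single_look = simulate_speckle(flat, looks=1)
four_look = simulate_speckle(flat, looks=4)

for name, img in [("1-look", single_look), ("4-look", four_look)]:
    cv = img.std() / img.mean()  # coefficient of variation, roughly 1/sqrt(L)
    print(f"{name}: mean={img.mean():.1f}, CV={cv:.2f}")

print(to_three_channels(single_look).shape)  # (256, 256, 3)
```

On the flat patch, the single-look image has a coefficient of variation near 1 and the 4-look image near 0.5. The "texture" an ImageNet-pretrained model would react to here is pure noise.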

Prior to SARDet-100K, most public SAR datasets contained fewer than 2,000 images and focused on a single object class (usually ships). Without scale or diversity, it was nearly impossible to train robust, generalizable detectors or fairly compare methods. Worse, many papers didn’t release code, making replication and progress difficult.

SARDet-100K directly addresses these limitations by providing:

- A large-scale, multi-class benchmark comparable in scale to COCO

- Standardized annotations and image formats across heterogeneous sources

- Open code, configurations, and pretrained weights

Key Features That Make SARDet-100K Stand Out

1. Unprecedented Scale and Diversity

SARDet-100K integrates data from 10 real-world SAR datasets, spanning multiple satellites (e.g., Gaofen-3, Sentinel-1, TerraSAR-X), frequency bands (C, X, Ka, Ku), polarizations (HH, VV, HV, VH), and resolutions (from 0.1 m airborne to 25 m spaceborne). This heterogeneity pushes models trained on SARDet-100K to generalize across sensor types and imaging conditions, a critical requirement for real-world deployment.
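A quick calculation shows how wide that resolution range is in practice. The object lengths (a 4.5 m car, a 100 m ship) and the intermediate 1 m resolution are illustrative assumptions, not dataset statistics.

```python
def pixels_across(object_length_m, resolution_m):
    """Approximate number of pixels an object spans at a given ground resolution."""
    return object_length_m / resolution_m

# Illustrative object lengths in metres (assumptions, not dataset statistics).
objects = {"car": 4.5, "ship": 100.0}

for res in (0.1, 1.0, 25.0):  # metres per pixel
    spans = ", ".join(f"{name} ~{pixels_across(length, res):.1f} px"
                      for name, length in objects.items())
    print(f"{res} m/px: {spans}")
```

At 25 m per pixel the car covers less than one pixel and the ship about 4 pixels. At 0.1 m the same ship would span roughly 1,000 pixels, so object scales in a mixed-resolution dataset differ by orders of magnitude.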

The final dataset includes:

- 94,493 training images, 10,492 validation images

- 6 object categories: Ship (S), Aircraft (A), Car (C), Bridge (B), Harbour (H), Tank (T)

- 245,653 total instances, with varying densities (from 1.28 to 11.51 objects per image)
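If the annotations are distributed as COCO-style JSON (an assumption here; check the files you download), you can recompute per-class instance counts and the average number of objects per image with the standard library alone. The file path, category IDs, and toy annotations below are hypothetical.

```python
import json
from collections import Counter

def summarize_coco(coco):
    """Return per-category instance counts and mean instances per image."""
    id_to_name = {c["id"]: c["name"] for c in coco["categories"]}
    counts = Counter(id_to_name[a["category_id"]] for a in coco["annotations"])
    n_images = len(coco["images"])
    density = len(coco["annotations"]) / n_images if n_images else 0.0
    return counts, density

def load_and_summarize(path):
    # Hypothetical path such as "Annotations/train.json"; adjust to your download layout.
    with open(path, encoding="utf-8") as f:
        return summarize_coco(json.load(f))

# Tiny in-memory example with hypothetical IDs for the six category names.
toy = {
    "images": [{"id": 1}, {"id": 2}],
    "categories": [{"id": i, "name": n} for i, n in enumerate(
        ["ship", "aircraft", "car", "bridge", "harbour", "tank"])],
    "annotations": [
        {"image_id": 1, "category_id": 0},
        {"image_id": 1, "category_id": 0},
        {"image_id": 2, "category_id": 3},
    ],
}
counts, density = summarize_coco(toy)
print(counts)   # Counter({'ship': 2, 'bridge': 1})
print(density)  # 1.5
```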

2. MSFA: A Novel Pretraining Framework for SAR

One of the project’s most impactful contributions is the Multi-Stage with Filter Augmentation (MSFA) framework. MSFA tackles the RGB-to-SAR domain shift by:

- Introducing a Wavelet Scattering Transform (WST) filter that augments raw SAR pixels with multi-scale wavelet features

- Using a multi-stage pretraining pipeline that progressively adapts models from natural images (ImageNet) → aerial RGB (DIOR/DOTA) → SAR (SARDet-100K)
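Filter augmentation means giving the network handcrafted filter responses alongside raw SAR intensities, and the idea fits in a few lines of NumPy. This is a simplified stand-in, not the MSFA implementation. MSFA uses a WST filter, whereas this sketch swaps in a single-level Haar wavelet decomposition and stacks its upsampled detail bands with the raw image as extra input channels. The function names are hypothetical.

```python
import numpy as np

def haar_subbands(img):
    """One-level orthonormal 2D Haar decomposition (height and width must be even)."""
    a = img[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = img[0::2, 1::2]  # top-right
    c = img[1::2, 0::2]  # bottom-left
    d = img[1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2.0  # local average (approximation band)
    lh = (a - b + c - d) / 2.0  # left minus right: responds to vertical edges
    hl = (a + b - c - d) / 2.0  # top minus bottom: responds to horizontal edges
    hh = (a - b - c + d) / 2.0  # diagonal detail
    return ll, lh, hl, hh

def filter_augment(img):
    """Stack the raw image with upsampled Haar detail bands: output shape (4, H, W)."""
    img = np.asarray(img, dtype=np.float64)
    _, lh, hl, hh = haar_subbands(img)
    # Nearest-neighbour upsampling of each half-resolution band back to (H, W).
    details = [np.kron(band, np.ones((2, 2))) for band in (lh, hl, hh)]
    return np.stack([img, *details], axis=0)

rng = np.random.default_rng(0)
sar = rng.gamma(shape=1.0, scale=1.0, size=(64, 64))  # speckle-like toy input
x = filter_augment(sar)
print(x.shape)  # (4, 64, 64)
```

Because this Haar transform is orthonormal, the four sub-bands together preserve the image's energy. A constant image produces all-zero detail channels, so the extra channels carry only edge and texture structure.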

Experiments show MSFA consistently outperforms traditional ImageNet pretraining (IMP). For example:

- Faster R-CNN + ResNet-50: mAP improves from 49.0 → 51.1 (+2.1)

- GFL + ResNet-50: mAP jumps from 49.8 → 53.7 (+3.9)

- DETR + ResNet-50: a dramatic gain from 31.8 → 47.2 (+15.4)
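Expressing the reported gains relative to each ImageNet-pretrained (IMP) baseline makes the pattern easier to see. The figures are the ones quoted above; only the arithmetic is added.

```python
# mAP pairs (IMP baseline, MSFA) as quoted in the text.
results = {
    "Faster R-CNN + ResNet-50": (49.0, 51.1),
    "GFL + ResNet-50": (49.8, 53.7),
    "DETR + ResNet-50": (31.8, 47.2),
}

def gains(imp_map, msfa_map):
    """Absolute mAP gain and gain relative to the IMP baseline (in percent)."""
    absolute = msfa_map - imp_map
    relative = 100.0 * absolute / imp_map
    return absolute, relative

for name, (imp, msfa) in results.items():
    abs_gain, rel_gain = gains(imp, msfa)
    print(f"{name}: +{abs_gain:.1f} mAP ({rel_gain:.1f}% relative)")
```

This prints +2.1 mAP (4.3% relative) for Faster R-CNN, +3.9 (7.8%) for GFL, and +15.4 (48.4%) for DETR. The end-to-end detector benefits most from SAR-aware pretraining.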

Crucially, MSFA works across two-stage (e.g., Cascade R-CNN), single-stage (e.g., RetinaNet), and end-to-end (e.g., DETR, Deformable DETR) detectors, as well as diverse backbones (ResNet, ConvNeXt, Swin, VAN).

3. Production-Ready Open-Source Toolkit

The codebase is built on MMDetection, a widely adopted detection framework, ensuring compatibility with modern best practices. It includes:

- Dataset loader for SARDet-100K

- MSFA implementation in `MSFA/msfa/models/backbones/MSFA.py`

- Ready-to-run config files for 10+ detector architectures

- Pretrained model weights (hosted on Baidu Disk and OneDrive)

Installation is straightforward with conda and pip, and the project provides clear dependency management via mim (OpenMMLab’s package manager).

Ideal Use Cases and Target Users

SARDet-100K is especially valuable for:

- Academic researchers seeking a reproducible baseline for SAR detection papers

- Government and defense labs developing all-weather surveillance systems

- Commercial remote sensing startups building analytics for maritime or infrastructure monitoring

- Students and educators exploring domain adaptation, radar vision, or multimodal learning

Because SAR penetrates clouds, smoke, and darkness, it’s critical in scenarios where optical sensors fail—such as monitoring illegal fishing at night, assessing flood damage under storm clouds, or tracking military assets in obscured conditions. SARDet-100K lowers the entry barrier for these high-impact applications.

Getting Started Is Simple

To begin:

- Download the dataset (train/val splits available via Baidu Disk or OneDrive)

- Set up the environment using the provided conda and pip commands

- Run training or inference using one of the included config files (e.g., `faster_rcnn_r50_msfa.py`)

- Fine-tune or adapt MSFA to your own SAR data or detector architecture
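For single-image inference, MMDetection exposes `init_detector` and `inference_detector` in `mmdet.apis`. The sketch below assumes two things: that the environment runs MMDetection 3.x, where results come back as a `DetDataSample` with `pred_instances`, and that the repository's MSFA configs load like any other MMDetection config. The paths, the 0.3 score threshold, and the class-name order (taken from the category list above) are assumptions to check against the repo.

```python
import numpy as np

# Assumed label order (from the article's category list); verify against the repo's dataset config.
CLASS_NAMES = ("ship", "aircraft", "car", "bridge", "harbour", "tank")

def filter_detections(bboxes, scores, labels, score_thr=0.3):
    """Keep only detections whose confidence is at least score_thr."""
    keep = scores >= score_thr
    return bboxes[keep], scores[keep], labels[keep]

def run_inference(config_path, checkpoint_path, image_path,
                  score_thr=0.3, device="cuda:0"):
    """Run a trained detector on one image; assumes MMDetection 3.x."""
    # Imported lazily so the helpers above work without MMDetection installed.
    from mmdet.apis import inference_detector, init_detector

    model = init_detector(config_path, checkpoint_path, device=device)
    result = inference_detector(model, image_path)  # DetDataSample in 3.x
    pred = result.pred_instances
    return filter_detections(pred.bboxes.cpu().numpy(),
                             pred.scores.cpu().numpy(),
                             pred.labels.cpu().numpy(),
                             score_thr)

# Hypothetical paths; substitute the config and weights you actually downloaded:
# boxes, scores, labels = run_inference("path/to/faster_rcnn_r50_msfa.py",
#                                       "path/to/faster_rcnn_r50_msfa.pth",
#                                       "path/to/sar_image.png")

# The filtering step on dummy predictions:
boxes = np.array([[10, 10, 50, 40], [60, 20, 90, 70]], dtype=float)
kept_boxes, kept_scores, kept_labels = filter_detections(
    boxes, np.array([0.9, 0.1]), np.array([0, 3]))
print([CLASS_NAMES[i] for i in kept_labels])  # ['ship']
```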

The project’s modular design makes it easy to plug in new models or preprocessing steps. Plus, with pretrained weights available, you can skip the costly pretraining stages and start evaluating immediately.

Limitations and Practical Considerations

While SARDet-100K is a major leap forward, users should note:

- The test set is not publicly released; it is withheld to maintain benchmark integrity, a common practice for CVPR/NeurIPS benchmarks

- Annotations use axis-aligned bounding boxes; for rotated bounding boxes, the team’s follow-up work RSAR (CVPR 2025) provides an approximate rotation-labeled version of SARDet-100K

- The license is CC BY-NC 4.0, meaning non-commercial use only; commercial applications require separate permission from the authors
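Rotated boxes matter most for elongated SAR targets. The sketch below shows why: it computes the axis-aligned envelope of a rotated box and how much of that envelope the object actually fills. It is a generic geometry illustration, not the procedure RSAR used to derive its rotated labels, and the 100 x 20 px ship is a hypothetical example.

```python
import math

def rotated_to_hbb(cx, cy, w, h, angle_rad):
    """Axis-aligned envelope (x1, y1, x2, y2) of a box with center, size, and rotation."""
    cos_a, sin_a = abs(math.cos(angle_rad)), abs(math.sin(angle_rad))
    half_w = (w * cos_a + h * sin_a) / 2.0
    half_h = (w * sin_a + h * cos_a) / 2.0
    return cx - half_w, cy - half_h, cx + half_w, cy + half_h

def fill_ratio(w, h, angle_rad):
    """Fraction of the axis-aligned envelope covered by the rotated object."""
    x1, y1, x2, y2 = rotated_to_hbb(0.0, 0.0, w, h, angle_rad)
    return (w * h) / ((x2 - x1) * (y2 - y1))

# A long, thin ship (100 x 20 px) at 0 and 45 degrees.
print(round(fill_ratio(100, 20, 0.0), 3))
print(round(fill_ratio(100, 20, math.radians(45)), 3))
```

At 0 degrees the ratio is exactly 1. At 45 degrees it drops to about 0.278, meaning roughly 72% of the axis-aligned box is background.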

These constraints are transparently documented and align with standard academic dataset practices.

Summary

SARDet-100K solves the long-standing data and code scarcity problems that have hindered SAR object detection research. By delivering the first COCO-scale, multi-class SAR benchmark alongside a powerful, generalizable pretraining framework (MSFA) and a fully open toolkit, it empowers researchers and engineers to build, test, and deploy robust SAR vision systems without starting from scratch. If you’re working on all-weather remote sensing, defense analytics, or domain adaptation in computer vision, SARDet-100K is the foundation you’ve been waiting for.