In the world of explainable AI, understanding which features matter is only half the story. What if two seemingly unimportant features together drastically change a model’s prediction? Or what if a model’s behavior hinges on subtle three-way interactions that standard attribution methods completely miss? This is where shapiq steps in—a powerful, open-source Python package that moves beyond traditional Shapley values by quantifying Shapley interactions among any number of features or data points.

Built for both researchers and practitioners, shapiq enables you to uncover not just who contributes, but how they collaborate. Whether you’re debugging a complex ensemble model, interpreting a vision transformer, or benchmarking new game-theoretic algorithms, shapiq provides a unified, efficient, and application-agnostic framework to compute, analyze, and visualize high-order interactions in machine learning models.

Why Shapley Interactions Matter

Traditional feature attribution methods like SHAP assign a single importance score to each feature. While useful, these scores fold any joint effects into per-feature numbers, so they cannot show which features act together. That hides important structure in non-linear models like XGBoost, LightGBM, or neural networks, where features often exhibit synergy: their joint effect is greater (or less) than the sum of their individual contributions.
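The two-feature toy game below is hypothetical and not taken from shapiq, but it makes the problem concrete. Neither feature changes the output on its own. Shapley values still give each feature 0.5, and only the pairwise interaction term shows that the entire effect comes from the combination.

```python
from itertools import permutations


def value(coalition):
    # Toy game: the output moves by 1.0 only when features "a" and "b" are both present
    return 1.0 if {"a", "b"} <= coalition else 0.0


players = ["a", "b"]

# Individual effects: adding one feature alone to the empty coalition
solo = {p: value({p}) - value(set()) for p in players}

# Pairwise interaction (discrete derivative): joint effect minus the individual effects
interaction = value({"a", "b"}) - value({"a"}) - value({"b"}) + value(set())

# Exact Shapley values: average marginal contribution over all orderings of the players
shapley = {p: 0.0 for p in players}
orderings = list(permutations(players))
for order in orderings:
    coalition = set()
    for p in order:
        shapley[p] += value(coalition | {p}) - value(coalition)
        coalition = coalition | {p}
for p in players:
    shapley[p] /= len(orderings)

print(solo)         # {'a': 0.0, 'b': 0.0}
print(interaction)  # 1.0
print(shapley)      # {'a': 0.5, 'b': 0.5}
```

Looking only at the Shapley values, both features appear moderately important. The interaction term shows that neither feature matters without the other.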

Shapley interactions generalize the Shapley value by assigning credit to subsets of features, capturing these higher-order dependencies. For example:

- A pairwise interaction might reveal that features income and debt_ratio jointly affect loan approval in a non-additive way.

- A third-order interaction could expose how age, location, and device type collectively influence user churn in a recommendation system.

By quantifying these interactions, shapiq provides a more faithful and nuanced explanation of model behavior—especially crucial in high-stakes domains like healthcare, finance, or autonomous systems.

Key Capabilities of shapiq

Any-Order Interaction Support

Unlike tools limited to pairwise effects, shapiq computes Shapley interactions of any order (e.g., up to 4th order or beyond). You specify max_order=3, and it returns all 1st, 2nd, and 3rd-order interactions in one consistent framework.

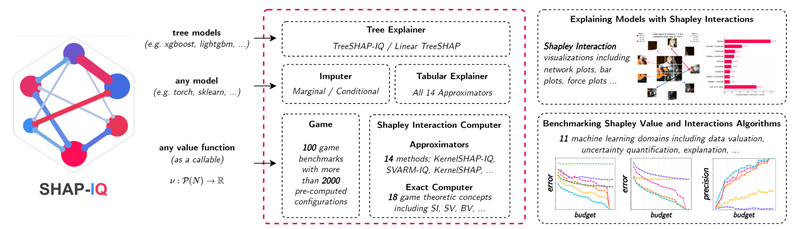
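The sketch below shows what "all interactions up to max_order" means. It brute-forces the classic Shapley Interaction Index (SII) for a tiny game using I(T) = sum over S ⊆ N \ T of (n - s - t)! s! / (n - t + 1)! * Delta_T v(S). Here Delta_T v(S) is the discrete derivative of v at S with respect to the players in T. The sketch enumerates every coalition, so it only works for a handful of players. shapiq offers several indices (the example later in this article uses k-SII) and much more efficient algorithms. None of the function names here are shapiq's API.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Tuple

Game = Callable[[FrozenSet[int]], float]


def discrete_derivative(v: Game, T: Tuple[int, ...], S: FrozenSet[int]) -> float:
    """Delta_T v(S) = sum over L subset of T of (-1)^(|T| - |L|) * v(S | L)."""
    t = len(T)
    total = 0.0
    for size in range(t + 1):
        for L in combinations(T, size):
            total += (-1) ** (t - size) * v(S | frozenset(L))
    return total


def shapley_interaction_index(v: Game, n: int, max_order: int) -> Dict[Tuple[int, ...], float]:
    """Exact SII for every subset T with 1 <= |T| <= max_order (exponential cost)."""
    result: Dict[Tuple[int, ...], float] = {}
    for t in range(1, max_order + 1):
        for T in combinations(range(n), t):
            rest = [p for p in range(n) if p not in T]
            value = 0.0
            for s in range(len(rest) + 1):
                # SII weight; for t = 1 this is the ordinary Shapley weight
                weight = factorial(n - s - t) * factorial(s) / factorial(n - t + 1)
                for S in combinations(rest, s):
                    value += weight * discrete_derivative(v, T, frozenset(S))
            result[T] = value
    return result


def game(coalition: FrozenSet[int]) -> float:
    # Hypothetical 4-player game: a three-way synergy among players 0, 1, 2
    # plus a standalone effect of 0.5 for player 0; player 3 never matters
    synergy = 1.0 if {0, 1, 2} <= coalition else 0.0
    return synergy + (0.5 if 0 in coalition else 0.0)


sii = shapley_interaction_index(game, n=4, max_order=3)
for T in [(0,), (1,), (3,), (0, 1), (0, 3), (0, 1, 2)]:
    print(T, round(sii[T], 4))
```

For this game the sketch returns 1.0 for the triple (0, 1, 2) and 0.5 for each pair inside it. Anything involving player 3 gets 0. The first-order values are the ordinary Shapley values: 0.5 + 1/3 for player 0 and 1/3 each for players 1 and 2.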

Model-Agnostic Yet Optimized Explainers

shapiq includes dedicated explainers for common model types:

- TabularExplainer for sklearn, XGBoost, and LightGBM models, plus TreeExplainer, which implements TreeSHAP-IQ for tree ensembles

- TabPFNExplainer for TabPFN, using the “remove-and-recontextualize” paradigm

- Flexible interfaces for vision transformers and language models via custom game definitions

This means you get both generality and performance—no need to reimplement the underlying cooperative game logic.
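Under the hood, explaining a model means defining a cooperative game: a value function v(S) that returns the model's output when only the features in S are "present". One common choice is marginal (interventional) imputation, sketched below. shapiq handles this step internally. The helper make_marginal_game and the toy model are hypothetical illustrations, not its API.

```python
from typing import Callable, FrozenSet, List, Sequence

Model = Callable[[Sequence[float]], float]
Game = Callable[[FrozenSet[int]], float]


def make_marginal_game(model: Model, x: Sequence[float], background: List[Sequence[float]]) -> Game:
    """Turn a model and one input x into a cooperative game v(S).

    Features in S keep their values from x; the others are replaced by each
    background row in turn, and the resulting predictions are averaged.
    """
    def value(coalition: FrozenSet[int]) -> float:
        total = 0.0
        for row in background:
            mixed = [x[i] if i in coalition else row[i] for i in range(len(x))]
            total += model(mixed)
        return total / len(background)

    return value


def toy_model(z: Sequence[float]) -> float:
    # Hypothetical model whose output is a pure product of two features
    return z[0] * z[1]


v = make_marginal_game(toy_model, x=[2.0, 3.0], background=[[0.0, 0.0], [1.0, 1.0]])
print(v(frozenset()), v(frozenset({0})), v(frozenset({1})), v(frozenset({0, 1})))
# 0.5 1.0 1.5 6.0
```

For this product model, the pairwise discrete derivative is 6.0 - 1.0 - 1.5 + 0.5 = 4.0. Averaging over independent background rows is a simplifying design choice. Conditional imputation is an alternative that respects dependence between features.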

Efficient Approximation for Real-World Use

Exact computation of Shapley interactions is exponentially costly. shapiq addresses this with state-of-the-art approximators, including:

- SPEX (SParse EXplainer): designed for high-dimensional settings with thousands of features

- Budget-controlled sampling that lets you trade accuracy for speed (e.g., budget=256 vs. budget=2000)

This makes shapiq viable even in production-like scenarios where runtime matters.
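The approximators in shapiq, SPEX among them, are considerably more sophisticated than this. Still, the basic budget trade-off appears even in the simplest estimator: permutation sampling for ordinary Shapley values, sketched below. The sketch assumes that the budget counts evaluations of the value function. permutation_shapley is a hypothetical helper, not a shapiq function.

```python
import random
from typing import Callable, FrozenSet, List

Game = Callable[[FrozenSet[int]], float]


def permutation_shapley(v: Game, n: int, budget: int, seed: int = 0) -> List[float]:
    """Monte Carlo Shapley estimate using at most `budget` evaluations of v."""
    if budget < n + 1:
        raise ValueError("budget must cover v(empty set) plus one full permutation")
    rng = random.Random(seed)
    v_empty = v(frozenset())
    evaluations = 1
    totals = [0.0] * n
    n_permutations = 0
    # Each sampled permutation costs n further evaluations
    while evaluations + n <= budget:
        order = list(range(n))
        rng.shuffle(order)
        coalition: FrozenSet[int] = frozenset()
        previous = v_empty
        for player in order:
            coalition = coalition | {player}
            current = v(coalition)
            totals[player] += current - previous  # marginal contribution
            previous = current
        evaluations += n
        n_permutations += 1
    return [total / n_permutations for total in totals]


def additive(coalition: FrozenSet[int]) -> float:
    # Additive game: every single permutation already yields the exact Shapley values
    return sum([1.0, 2.0, 3.0][p] for p in coalition)


def three_way(coalition: FrozenSet[int]) -> float:
    # Pure three-way synergy: all credit goes to whichever player arrives last
    return 1.0 if {0, 1, 2} <= coalition else 0.0


print(permutation_shapley(additive, n=3, budget=256))    # [1.0, 2.0, 3.0]
print(permutation_shapley(three_way, n=3, budget=16))    # rough: only 5 permutations fit
print(permutation_shapley(three_way, n=3, budget=2000))  # closer to 1/3 for each player
```

A larger budget buys more permutations and lower variance. On interaction-heavy games like three_way, a small budget can be noticeably off.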

Intuitive Visualizations

Understanding interactions is easier with the right visuals. shapiq provides:

- Network plots: nodes show individual feature attributions; edges show interaction strength

- Force plots: familiar to SHAP users, extended to show interaction-based explanations

- Direct plotting methods like interaction_values.plot_network() for one-line visual diagnostics

These tools help both technical and non-technical stakeholders interpret complex model behaviors.
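The encoding behind a network plot is simple enough to sketch. First-order values become nodes and pairwise values become edges. Magnitude drives size or width, and sign drives color. The helper below is hypothetical: it only prepares that data, and it ignores orders above two. The example values are made up. In shapiq itself, interaction_values.plot_network() does the drawing.

```python
from typing import Dict, List, Tuple

Interactions = Dict[Tuple[int, ...], float]


def network_plot_data(values: Interactions, feature_names: List[str]) -> Tuple[List[dict], List[dict]]:
    """Split interaction values into node and edge records for a network-style plot."""
    nodes: List[dict] = []
    edges: List[dict] = []
    for players, value in values.items():
        if len(players) == 1:
            (i,) = players
            # Node size encodes the magnitude of the feature's own attribution
            nodes.append({"name": feature_names[i], "size": abs(value), "positive": value >= 0})
        elif len(players) == 2:
            i, j = players
            # Edge width encodes the magnitude of the pairwise interaction
            edges.append({"pair": (feature_names[i], feature_names[j]), "width": abs(value), "positive": value >= 0})
        # Orders 0 and >= 3 are skipped in this simplified sketch
    # Sort edges so the strongest interactions come first
    edges.sort(key=lambda edge: edge["width"], reverse=True)
    return nodes, edges


values = {(0,): 1.7, (1,): -0.3, (2,): 0.1, (0, 1): 0.45, (1, 2): -0.6, (0, 1, 2): 0.05}
nodes, edges = network_plot_data(values, ["f0", "f1", "f2"])
print(edges[0])  # {'pair': ('f1', 'f2'), 'width': 0.6, 'positive': False}
```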

Benchmarking Suite for Research

For ML researchers, shapiq ships with a comprehensive benchmark covering 11 real-world applications—from tabular regression to NLP and computer vision—with pre-computed ground-truth games. This allows systematic evaluation of new approximation algorithms across domains, fostering reproducible research in cooperative game theory.
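Ground-truth games are what make such comparisons meaningful: once exact values are known, an approximator's output can be scored directly. The sketch below uses two common scores. One is mean squared error over all interactions; the other is precision of the top-k interactions by magnitude. The metric choice and the helper names are assumptions for illustration, not shapiq's benchmark API, and the numbers are made up.

```python
from typing import Dict, Tuple

Interactions = Dict[Tuple[int, ...], float]


def mean_squared_error(estimate: Interactions, ground_truth: Interactions) -> float:
    """Average squared error over all ground-truth interactions (missing estimates count as 0)."""
    errors = [(estimate.get(key, 0.0) - value) ** 2 for key, value in ground_truth.items()]
    return sum(errors) / len(errors)


def precision_at_k(estimate: Interactions, ground_truth: Interactions, k: int = 5) -> float:
    """Fraction of the k largest-magnitude true interactions that the estimate also ranks in its top k."""
    def top_k(values: Interactions) -> set:
        return set(sorted(values, key=lambda key: abs(values[key]), reverse=True)[:k])

    return len(top_k(estimate) & top_k(ground_truth)) / k


truth = {(0,): 1.0, (1,): 0.5, (0, 1): 0.25, (2,): 0.0}
approx = {(0,): 0.9, (1,): 0.6, (0, 1): 0.2, (2,): 0.3}
print(mean_squared_error(approx, truth))   # about 0.0281
print(precision_at_k(approx, truth, k=3))  # 2 of the 3 top interactions recovered
```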

Getting Started Is Simple

If you’re already familiar with SHAP, you’ll feel right at home. Installation is straightforward:

    pip install shapiq  # or: uv add shapiq

Here’s how to explain a Random Forest model with interactions up to order 4:

    import shapiq
    from sklearn.ensemble import RandomForestRegressor

    # Load data and train model
    X, y = shapiq.load_california_housing(to_numpy=True)
    model = RandomForestRegressor().fit(X, y)

    # Set up explainer for k-SII up to order 4
    explainer = shapiq.TabularExplainer(
        model=model,
        data=X,
        index="k-SII",
        max_order=4,
    )

    # Explain a single prediction
    interaction_values = explainer.explain(X[0], budget=256)
    print(interaction_values)

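The printed InteractionValues object lists the top interactions, such as (0, 5): 0.48, which quantifies how features 0 and 5 jointly influence this prediction. Exact values can differ between runs, since the forest is trained without a fixed random_state.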

You can also fall back to standard Shapley values by setting index="SV" with max_order=1, which makes shapiq a natural extension of existing SHAP workflows.

When Should You Use shapiq?

Consider shapiq when:

- Your model exhibits non-additive behavior (e.g., tree-based ensembles with complex splits)

- Standard feature importance gives misleading insights (e.g., low-importance features that are critical in combination)

- You need to validate or develop new game-theoretic methods in a standardized benchmark environment

- You’re working with TabPFN, transformers, or other models where interaction effects are suspected but hard to quantify

In short: if “which features matter” isn’t enough—and you need to know “how they work together”—shapiq is your tool.

Limitations and Practical Considerations

While powerful, shapiq has practical boundaries:

- Python 3.10+ required—not compatible with older versions

- Computational cost grows rapidly with interaction order and feature count. Even with SPEX, computing 5th-order interactions on 100+ features may be prohibitive

- Not all model types have built-in explainers yet, though custom games can bridge this gap

- The budget parameter controls approximation quality, so users must consciously balance speed against accuracy

That said, for many real-world tabular problems (with fewer than 50 features), orders up to 3 or 4 are often tractable with modest budgets.
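The arithmetic behind these limits is easy to check. An exact computation evaluates all 2^n coalitions, and the number of interaction terms up to order k is the sum of C(n, j) for j = 1 to k. California housing has 8 features, so 2^8 = 256, the same figure as budget=256 in the example above. The helpers below are hypothetical and simply count.

```python
from math import comb


def n_coalitions(n_features: int) -> int:
    """Number of coalitions an exact computation has to evaluate."""
    return 2 ** n_features


def n_interactions(n_features: int, max_order: int) -> int:
    """Number of interaction terms of orders 1..max_order (the empty set excluded)."""
    return sum(comb(n_features, k) for k in range(1, max_order + 1))


print(n_coalitions(8))         # 256
print(n_interactions(8, 4))    # 162
print(n_interactions(50, 4))   # 251175
print(n_interactions(100, 5))  # 79375495
```

With 100 features, order 5 means tracking roughly 79 million interaction values before a single model evaluation is counted.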

Summary

shapiq isn’t just another explainability library—it’s a paradigm shift. By unifying Shapley values and their higher-order interactions in one efficient, extensible package, it empowers both practitioners and researchers to move beyond simplistic attributions and uncover the true collaborative dynamics inside black-box models. Whether you’re debugging a critical prediction, publishing on cooperative game theory, or simply seeking deeper model insights, shapiq equips you with the tools to see not just the players, but the team.