Text-to-video generation has rapidly evolved, yet technical teams still face a persistent trade-off: high-quality outputs often come at prohibitive computational costs, while efficient models sacrifice fidelity to the input prompt. Show-1, introduced by researchers at Show Lab, National University of Singapore, directly tackles this dilemma by uniquely combining pixel-based and latent-based diffusion models into a single, streamlined pipeline. The result is a system that delivers both strong semantic alignment with text prompts and significantly reduced GPU memory usage—making it a compelling choice for practitioners who need reliable, high-fidelity video synthesis without infrastructure overhauls.

Why the Hybrid Approach Matters

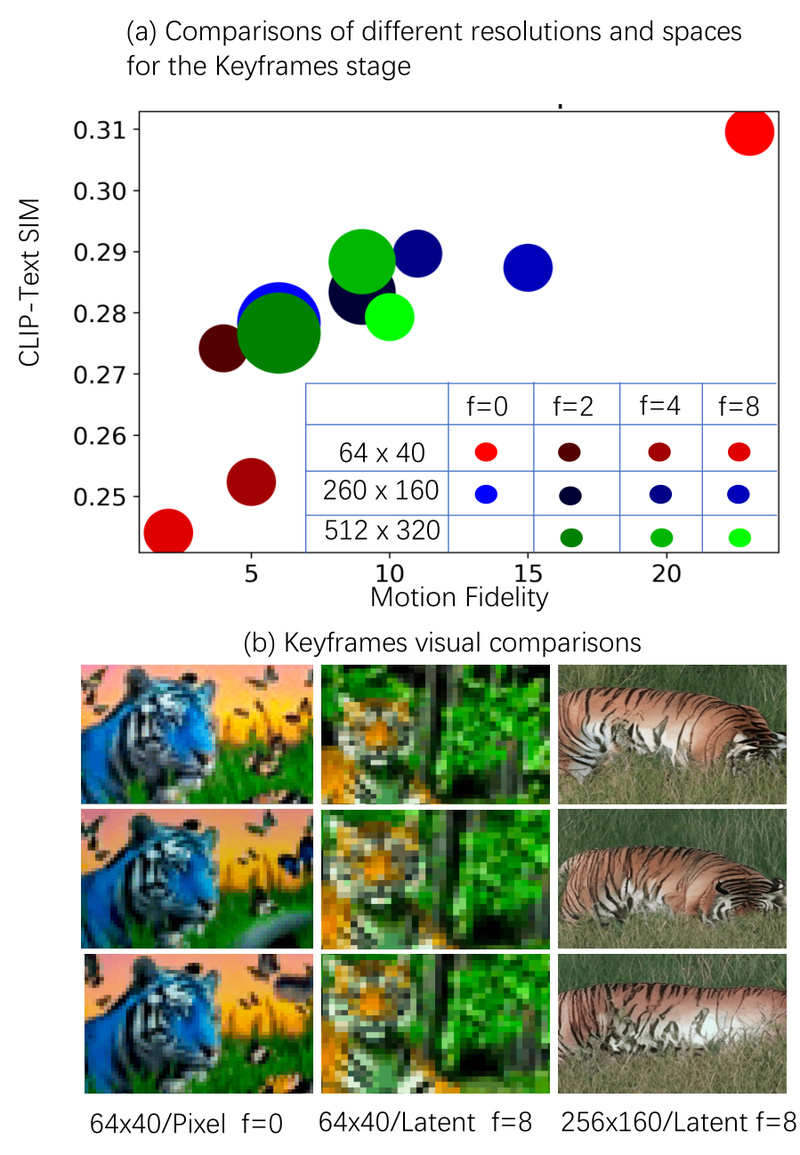

Traditional text-to-video diffusion models fall into two camps. Pixel-based models generate videos directly in pixel space, ensuring accurate alignment between the prompt and visual content—but they require massive GPU memory (often exceeding 70GB) and long inference times. On the other hand, latent-based models compress video data into a lower-dimensional latent space for efficiency, but this often leads to blurry details or semantic drift from the original prompt.

Show-1 bridges this gap. It first leverages a pixel-based diffusion model to generate a low-resolution video that tightly follows the input text. Then, instead of upscaling naively, it uses a novel expert translation mechanism powered by latent-based diffusion models to enhance resolution while preserving—and even refining—temporal and semantic coherence. This two-stage architecture ensures that the final output remains faithful to the prompt while operating at a fraction of the computational cost.

Core Technical Advantages

Show-1’s hybrid design yields several measurable benefits:

- Precise Text-to-Video Alignment: Because the initial generation happens in pixel space, high-level semantics from the prompt are captured accurately from the start.

- Dramatically Lower Memory Footprint: Inference uses only ~15GB of GPU memory compared to 72GB for comparable pixel-based models—enabling deployment on widely available hardware.

- State-of-the-Art Output Quality: Show-1 achieves top performance on standard text-to-video benchmarks, combining clarity, motion smoothness, and prompt relevance.

- Extensible for Customization: With minimal fine-tuning of temporal attention layers, Show-1 can be adapted for motion control or stylistic transformations—opening doors for creative and commercial applications.

These advantages make Show-1 not just a research novelty, but a practical tool for teams building real-world generative video systems.

Ideal Use Cases

Show-1 is particularly well-suited for scenarios where both quality and efficiency are non-negotiable:

- Rapid Content Prototyping: Marketing teams can generate draft videos from textual briefs in minutes, iterating quickly without cloud-scale compute.

- Digital Media Production: News outlets or social platforms can automate short-form video creation aligned precisely with article summaries or captions.

- Academic and Industrial Research: Researchers exploring prompt conditioning, video editing, or temporal modeling benefit from a modular, open-source baseline that balances fidelity and speed.

- Custom Motion & Style Applications: Thanks to its tunable temporal attention, Show-1 can be fine-tuned to impose specific motion patterns or artistic styles—ideal for branded content or interactive media.

Getting Started Is Straightforward

The Show-1 codebase is publicly available on GitHub and designed for ease of use:

- Install dependencies via

pip install -r requirements.txt(PyTorch 2.0+ recommended). - Run inference with a single command:

python run_inference.py. The script automatically downloads required model weights from HuggingFace. - Generated videos (in GIF format) are saved to the

outputsfolder by default. - For interactive exploration, launch the local Gradio demo with

python app.py.

The project provides three key model components—base, interpolation, and super-resolution—hosted on HuggingFace. Note that the super-resolution stage (show-1-sr1) incorporates DeepFloyd-IF’s image upscaling model, so users must separately acquire those weights following DeepFloyd’s instructions.

Limitations and Practical Considerations

While Show-1 represents a significant step forward, adopters should be aware of a few constraints:

- The dependency on DeepFloyd-IF for the first-frame super-resolution adds a manual setup step.

- Automatic model downloading requires internet access unless weights are pre-cached locally.

- Commercial licensing terms are still being finalized by the university. Teams interested in commercial deployment are encouraged to contact the authors directly to express interest and accelerate approval.

These are manageable hurdles, especially given the project’s transparency and active maintenance.

Summary

Show-1 redefines what’s possible in efficient, high-fidelity text-to-video generation. By strategically merging pixel and latent diffusion paradigms, it delivers precise prompt alignment, reduced memory usage, and adaptable architecture—all backed by open-source code and pretrained weights. For technical decision-makers evaluating generative video solutions, Show-1 offers a rare balance: research-grade quality with production-ready efficiency. Whether for prototyping, content automation, or experimental research, it stands out as a pragmatic and powerful choice in today’s evolving generative landscape.