Video generation has seen remarkable progress in recent years, yet most models remain limited to short clips—typically 5 to 10 seconds—often sacrificing motion realism, visual fidelity, or narrative coherence to meet technical constraints. Worse, they struggle to interpret cinematic language: shot composition, camera movement, actor expression, and scene continuity are frequently ignored or misrepresented.

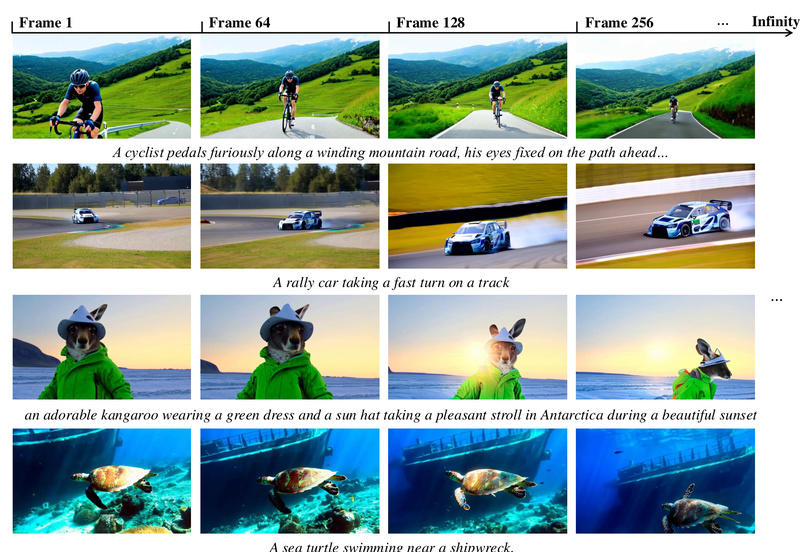

SkyReels-V2 changes this. As the first open-source infinite-length film generative model, it combines cutting-edge techniques—Diffusion Forcing, Reinforcement Learning, and a specialized video captioner—to deliver long-form, high-resolution videos (up to 720P) that respect both textual prompts and cinematic grammar. Whether you’re building a storytelling AI, pre-visualizing film scenes, or extending concept art into motion, SkyReels-V2 offers unprecedented control and quality among publicly available models.

Solving Real-World Video Generation Challenges

Current video generative models face a fundamental trade-off: longer duration often means degraded motion or visual quality. Many systems compromise realism to maintain temporal smoothness or limit output to under 10 seconds to preserve resolution. Additionally, general-purpose multimodal models lack the domain-specific understanding needed for professional-grade film generation—they can’t reliably distinguish a close-up from a wide shot, or infer that a “low-angle tracking shot” implies dynamic camera movement.

SkyReels-V2 directly tackles these pain points through four integrated innovations:

- Infinite-length generation via its autoregressive Diffusion Forcing architecture.

- Cinematic-aware prompting powered by SkyCaptioner-V1, a custom video captioner trained on shot-level annotations.

- Physically plausible motion dynamics enhanced through motion-focused Reinforcement Learning.

- High-resolution output (720P) with strong prompt adherence and visual consistency over time.

This synergy enables realistic, narratively coherent videos that can span 30 seconds, 60 seconds, or longer—without abrupt cuts, drifting subjects, or loss of detail.

Core Technical Innovations

Diffusion Forcing for Truly Long Videos

Unlike standard diffusion models that denoise all frames simultaneously under a shared noise schedule, SkyReels-V2 uses Diffusion Forcing—a framework where each frame (or latent token) is assigned an independent noise level. This allows the model to condition new frames on already-denoised (“clean”) ones, enabling autoregressive extension of videos indefinitely.

In practice, this means you can generate a 30-second clip by chaining 97-frame segments, using overlap and noise conditioning to ensure temporal continuity. The model supports both synchronous (faster) and asynchronous (higher consistency) generation modes, giving developers flexibility based on their quality and speed requirements.

SkyCaptioner-V1: Teaching AI Cinematic Language

To address the “cinematic blindness” of generic MLLMs, the SkyReels team developed SkyCaptioner-V1, a video captioning model fine-tuned on a curated dataset of 2 million balanced videos. It’s specifically trained to recognize shot type, camera motion, character expression, and spatial relationships—categories where standard models like Qwen2.5-VL fall short.

In evaluations, SkyCaptioner-V1 achieves 93.7% accuracy on shot type and 85.3% on camera motion, far outperforming even 72B-parameter baselines. This captioner isn’t just for annotation—it enables the generator to understand prompts like “a dramatic dolly zoom revealing a hidden figure” rather than treating them as generic text.

Motion Quality via Reinforcement Learning

Many generated videos suffer from unnatural motion: floating objects, impossible deformations, or physics-defying movements. SkyReels-V2 introduces a motion-specific Reinforcement Learning (RL) stage using a hybrid dataset of human-annotated and synthetically distorted video pairs. A reward model learns to distinguish physically plausible motion from artifacts, then guides Direct Preference Optimization (DPO) to refine the generator.

Critically, the RL process preserves other qualities like visual fidelity and prompt alignment—only motion is optimized—ensuring holistic improvement without side effects.

Multi-Stage Training for Peak Fidelity

SkyReels-V2’s training pipeline is staged for progressive refinement:

- Pretraining on diverse video data at multiple resolutions.

- Initial SFT to balance conceptual coverage and remove FPS-specific embeddings.

- RL fine-tuning for motion realism.

- Diffusion Forcing adaptation for long-video capability.

- Final high-resolution SFT at 720P to boost visual quality.

This staged approach ensures each capability is optimized without interference, resulting in state-of-the-art performance on both human and automated benchmarks.

Practical Applications for Developers and Creators

SkyReels-V2 isn’t just a research prototype—it’s a production-ready toolkit for real-world use cases:

- Film Pre-Visualization: Generate storyboard-to-video sequences with precise camera directions and shot transitions.

- AI-Assisted Content Creation: Turn marketing scripts or novel excerpts into coherent video narratives.

- Concept Art Animation: Use the Image-to-Video (I2V) model to animate a single illustration into a moving scene, with optional end-frame control for narrative closure.

- Video Extension: Seamlessly extend short clips (e.g., from other models) into longer sequences using the Video-to-Video pipeline.

- Prompt-Enhanced Generation: Leverage the built-in prompt enhancer (based on Qwen2.5-32B) to automatically enrich sparse prompts into cinematic descriptions.

All of this is supported through a user-friendly codebase with both script-based and diffusers-library interfaces, making integration into existing workflows straightforward.

Getting Started Without the Headache

Adopting SkyReels-V2 is designed to be simple:

- Clone the official GitHub repository.

- Install dependencies (Python 3.10+ recommended).

- Download a model variant (e.g.,

SkyReels-V2-DF-14B-720Pfor long T2V, orSkyReels-V2-I2V-14B-720Pfor image animation). - Run inference via one-line commands or

diffusersAPI calls.

The project provides clear examples for:

- Text-to-video and image-to-video generation

- Video extension from existing clips

- Start/end frame conditioning

- Multi-GPU acceleration with xDiT USP

Even advanced features like asynchronous generation or noise-conditioned continuity are well-documented with recommended parameters, reducing trial-and-error.

Limitations to Consider

While powerful, SkyReels-V2 has practical constraints:

- High VRAM usage: The 14B models require ~51GB GPU memory at 540P; 720P demands even more.

- Frame count precision: For long videos,

num_framesmust align with training configurations (e.g., 257 for 10s, 737 for 30s). - Asynchronous mode is slower: Though it improves consistency, it increases inference time.

- Not all models are public yet: The 5B series and Camera Director variants are still “coming soon.”

These are manageable with proper planning, and the team provides VRAM-saving options like --offload and reduced base_num_frames.

Why Technical Leaders Should Pay Attention

SkyReels-V2 stands out not just for its capabilities but for its open, transparent, and community-friendly approach:

- It’s the first open-source model to combine infinite-length generation, cinematic understanding, and 720P resolution.

- It outperforms leading open models on V-Bench (83.9% total score) and matches proprietary systems in human evaluations.

- It’s actively maintained, with regular model releases, diffusers integration, and multi-GPU support.

- It enables full narrative control—from shot design to motion physics—unachievable with black-box commercial APIs.

For teams building next-generation media, entertainment, or simulation tools, SkyReels-V2 offers a rare combination: cutting-edge performance, open access, and cinematic intelligence.

Summary

SkyReels-V2 redefines what’s possible in open-source video generation. By fusing Diffusion Forcing, cinematic-aware captioning, and motion-focused RL, it solves long-standing issues of duration, realism, and narrative coherence. With strong documentation, multiple inference modes, and state-of-the-art benchmarks, it’s a strategic choice for developers, researchers, and creators ready to move beyond 10-second clips and into the era of AI-generated film.