Introducing SLAM3R, an end-to-end system that reconstructs high-quality, dense 3D scenes in real time from nothing more than a monocular RGB video stream. Traditional SLAM (Simultaneous Localization and Mapping) pipelines rely on explicit camera pose estimation, bundle adjustment, or depth sensors. SLAM3R instead uses feed-forward neural networks to regress 3D pointmaps directly from video frames and align them into a globally consistent reconstruction, without explicitly solving for camera parameters.

This approach makes 3D scene reconstruction dramatically simpler, faster, and more accessible. Whether you’re building AR/VR experiences, deploying robots in unknown environments, or digitizing real-world spaces for digital twins, SLAM3R delivers state-of-the-art accuracy at more than 20 frames per second on a modern GPU, using ordinary video from a smartphone or webcam.

Why SLAM3R Stands Out

Real-Time Performance Without Compromise

SLAM3R achieves real-time dense reconstruction at 20+ FPS, a rare feat for systems that produce complete, high-fidelity 3D geometry. Most dense reconstruction methods either sacrifice speed for quality (e.g., neural radiance fields) or require multi-view optimization that prevents live feedback. SLAM3R bridges this gap by using a sliding-window approach: it processes short, overlapping video clips and progressively fuses local 3D predictions into a unified global model—all via efficient neural inference.
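To make the sliding-window idea concrete, here is a minimal Python sketch that splits a sequence of frame indices into short, overlapping clips. The helper name `sliding_windows` and its `window` and `stride` parameters are hypothetical and chosen for illustration, not taken from SLAM3R's configuration. The sketch only shows how overlap arises; it does not claim to reproduce how the repository actually schedules frames.

```python
def sliding_windows(num_frames: int, window: int, stride: int) -> list[list[int]]:
    """Split frame indices 0..num_frames-1 into overlapping clips.

    Hypothetical helper for illustration. Consecutive clips share
    (window - stride) frames whenever stride < window, and a final clip is
    shifted to end at the last frame so trailing frames are not dropped.
    """
    if window < 1 or stride < 1:
        raise ValueError("window and stride must be positive")
    if num_frames <= 0:
        return []
    if num_frames <= window:
        # The whole video fits in a single clip.
        return [list(range(num_frames))]
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        # Add one more clip flush with the end of the video.
        starts.append(num_frames - window)
    return [list(range(s, s + window)) for s in starts]


if __name__ == "__main__":
    for clip in sliding_windows(num_frames=10, window=5, stride=3):
        print(clip)
```

Running it prints three clips: `[0, 1, 2, 3, 4]`, `[3, 4, 5, 6, 7]`, and `[5, 6, 7, 8, 9]`. Keeping `stride` smaller than `window` is what guarantees that each clip shares frames with its predecessor, giving a downstream alignment step common content to register against.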

No Camera Calibration or Pose Optimization

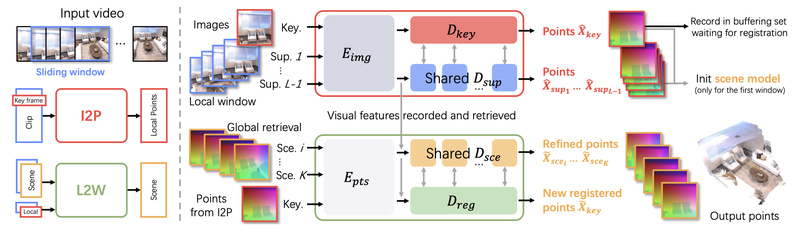

Traditional 3D reconstruction pipelines often fail when camera intrinsics are unknown or when motion is erratic. SLAM3R sidesteps these pitfalls entirely. It does not estimate camera poses or intrinsics; instead, it learns to infer consistent 3D structure directly from image sequences through two core neural modules:

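- Image-to-Points (I2P): Regresses dense 3D pointmaps for all frames in a short video clip, expressed in the coordinate system of a selected keyframe.

- Local-to-World (L2W): Incrementally registers these overlapping local pointmaps into a shared world coordinate system, yielding a globally coherent scene.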

Because no explicit pose optimization is involved, this design avoids the failure modes tied to it, such as sensitivity to initialization, and helps keep accumulated drift in check.
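The data flow between the two modules can be sketched in Python as follows. Both networks are replaced by hypothetical placeholder functions (`i2p_placeholder`, `l2w_placeholder`) with made-up signatures that return dummy arrays so the sketch runs. It also assumes that the center frame of each clip serves as the keyframe and that low-confidence points are dropped with a fixed threshold; these are illustrative choices, not details confirmed by the SLAM3R code. What the sketch does show is the shape of the idea: a pointmap is an array of shape (H, W, 3) holding one 3D point per pixel, and no pose matrix or camera intrinsics appear anywhere.

```python
import numpy as np

H, W = 4, 6  # hypothetical tiny image size to keep the example fast


def i2p_placeholder(clip: np.ndarray, key_idx: int) -> tuple[np.ndarray, np.ndarray]:
    """Stand-in for the I2P network (hypothetical signature).

    clip: (N, H, W, 3) RGB frames. Returns N pointmaps of shape (H, W, 3),
    all meant to be in the coordinate frame of clip[key_idx], plus per-pixel
    confidences of shape (N, H, W). The outputs here are dummy values.
    """
    n = clip.shape[0]
    pts = np.zeros((n, H, W, 3), dtype=np.float32)
    # Dummy confidences increasing from 0 at the left edge to 1 at the right edge.
    ramp = np.linspace(0.0, 1.0, W, dtype=np.float32)
    conf = np.broadcast_to(ramp, (n, H, W)).copy()
    return pts, conf


def l2w_placeholder(local_pts: np.ndarray, scene: list[np.ndarray]) -> np.ndarray:
    """Stand-in for the L2W network (hypothetical signature).

    Maps keyframe-relative pointmaps into the world frame, conditioned on the
    points registered so far. Identity here; the real module is a learned
    network, not an explicit rigid transform.
    """
    return local_pts.copy()


def fuse(world_pts: np.ndarray, conf: np.ndarray, thresh: float) -> np.ndarray:
    """Keep points whose confidence exceeds thresh, flattened to (M, 3)."""
    return world_pts[conf > thresh]


def reconstruct(frames: np.ndarray, clips: list[list[int]], thresh: float = 0.5) -> np.ndarray:
    """Run the placeholder I2P -> L2W loop over overlapping clips."""
    scene: list[np.ndarray] = []
    for clip_ids in clips:
        clip = frames[clip_ids]        # (N, H, W, 3)
        key_idx = len(clip_ids) // 2   # assumption: center frame is the keyframe
        local_pts, conf = i2p_placeholder(clip, key_idx)
        world_pts = l2w_placeholder(local_pts, scene)
        scene.append(fuse(world_pts, conf, thresh))
    if not scene:
        return np.empty((0, 3), dtype=np.float32)
    return np.concatenate(scene, axis=0)


if __name__ == "__main__":
    frames = np.zeros((10, H, W, 3), dtype=np.uint8)
    clips = [[0, 1, 2, 3, 4], [3, 4, 5, 6, 7], [5, 6, 7, 8, 9]]
    print(reconstruct(frames, clips).shape)
```

With these dummy inputs the fused cloud has shape `(180, 3)`: three clips of five frames, each contributing the 12 pixels whose confidence exceeds 0.5. Frames shared by overlapping clips are added more than once here; a real system has to avoid duplicate points, and how SLAM3R handles that is outside the scope of this sketch.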

End-to-End and Ready to Use

SLAM3R is designed for immediate deployment. Pre-trained models are available via Hugging Face, and the codebase includes scripts for both offline batch processing and online incremental reconstruction. With optional acceleration via xFormers and custom CUDA kernels, it runs efficiently on a single modern GPU, with no depth sensor or other specialized hardware required.

Practical Applications

SLAM3R shines in scenarios where speed, simplicity, and robustness matter more than theoretical control over every reconstruction parameter:

- Indoor 3D Mapping: Reconstruct rooms or buildings from a walk-through video (e.g., Replica-style environments).

- Outdoor Scene Digitization: Capture parks, streets, or construction sites using drone or smartphone footage.

- Robotics & Autonomous Systems: Enable robots to build real-time 3D awareness from a single RGB camera.

- AR/VR Prototyping: Rapidly generate 3D environments for immersive applications without LiDAR or depth sensors.

- Content Creation: Turn casual video clips into editable 3D assets for gaming, simulation, or digital heritage.

Because it requires only RGB input and no sensor calibration, SLAM3R lowers the barrier to entry for non-experts and resource-constrained teams.

Getting Started in Minutes

Setting up SLAM3R is straightforward:

- Clone the repository and create a Conda environment with Python 3.11 and PyTorch 2.5.

- Install the dependencies listed in `requirements.txt`, and optionally install xFormers to accelerate inference.

- Run the pre-configured demos:

  - On the Replica dataset: `bash scripts/demo_replica.sh`

  - On your own video: extract frames, then run `scripts/demo_wild.sh`

For interactive use, launch the Gradio interface:

- Offline mode: `python app.py`

- Online (incremental) mode: `python app.py --online`

The online interface integrates a Viser viewer to visualize the 3D scene as it’s being built—frame by frame. You can also save per-frame predictions and replay the reconstruction process using Open3D.

Evaluation scripts are included for quantitative benchmarking on Replica. Training code is also provided for the datasets the models were trained on (ScanNet++, Co3Dv2, and Aria Synthetic Environments), which can serve as a starting point if you wish to fine-tune on your own data.
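For readers unfamiliar with how dense reconstructions are scored, Replica benchmarks commonly report accuracy (mean distance from each reconstructed point to its nearest ground-truth point) and completion (mean distance from each ground-truth point to its nearest reconstructed point). The NumPy sketch below computes both, assuming the two point clouds are already in the same coordinate frame and units. SLAM3R's own evaluation scripts may differ, for example in how predictions are aligned to ground truth or how points are sampled, so treat them as authoritative. The brute-force nearest-neighbor search is only suitable for small clouds.

```python
import numpy as np


def nearest_distances(src: np.ndarray, dst: np.ndarray, chunk: int = 1024) -> np.ndarray:
    """Distance from each point in src (N, 3) to its nearest neighbor in dst (M, 3).

    Brute force over chunks of source points; memory grows with chunk * M,
    so use a KD-tree (e.g. scipy.spatial.cKDTree) for real scans.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    out = np.empty(len(src), dtype=np.float64)
    for start in range(0, len(src), chunk):
        block = src[start:start + chunk]                            # (c, 3)
        d2 = ((block[:, None, :] - dst[None, :, :]) ** 2).sum(-1)   # (c, M) squared distances
        out[start:start + chunk] = np.sqrt(d2.min(axis=1))
    return out


def accuracy_completion(pred: np.ndarray, gt: np.ndarray) -> tuple[float, float]:
    """Mean pred->gt distance (accuracy) and mean gt->pred distance (completion); lower is better."""
    acc = nearest_distances(pred, gt).mean()
    comp = nearest_distances(gt, pred).mean()
    return float(acc), float(comp)


if __name__ == "__main__":
    pred = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
    gt = np.array([[0.0, 0.0, 0.0]])
    print(accuracy_completion(pred, gt))  # (0.5, 0.0)
```

The toy example is deliberately asymmetric: the spurious predicted point at x = 1 hurts accuracy but not completion, which is why the two metrics are reported together.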

Limitations and Considerations

While powerful, SLAM3R has practical constraints worth noting:

- Video Quality Sensitivity: Rapid motion, motion blur, or low-texture regions can degrade reconstruction quality. Smooth, well-lit videos yield best results.

- GPU Memory Requirements: Real-time performance assumes a modern GPU (e.g., RTX 3090 or better). Low-memory devices may need to reduce input resolution or window size.

- Dependency Variability: Results may differ slightly across CUDA, PyTorch, or xFormers versions because of numerical-precision differences in neural inference and alignment, though overall reconstruction fidelity remains consistent.

- Pre-trained Model Reliance: The out-of-the-box experience depends on models trained on synthetic and indoor datasets; performance on highly novel domains (e.g., underwater or night-time scenes) may vary.

These are not dealbreakers but important factors when assessing fit for your specific use case.

Summary

SLAM3R redefines what’s possible with monocular 3D reconstruction. By replacing fragile geometric optimization with robust neural regression and alignment, it delivers real-time, dense, and globally consistent 3D scenes from ordinary video, with no calibration, no depth sensors, and no expert tuning required.

For project leads, researchers, and developers who need a fast, reliable way to turn video into 3D geometry, SLAM3R offers a compelling alternative to legacy SLAM systems. Its combination of speed, accuracy, and ease of use makes it an excellent first-choice solution for rapid prototyping, deployment in dynamic environments, or any application where simplicity and performance are non-negotiable.

If your project involves turning video into 3D—and you want it done now, not after weeks of calibration and debugging—SLAM3R is worth a serious look.