Large AI models—from language generators to video diffusion systems—are bottlenecked by the attention mechanism, whose computational cost scales quadratically with sequence length. This has led to a flurry of optimization techniques, but most require model-specific modifications, retraining, or extensive calibration. Enter SpargeAttention: a universal, training-free sparse attention method that accelerates inference across any model—language, image, or video—without altering weights, architecture, or training pipelines.

SpargeAttention stands out because it delivers speedups out of the box. You don’t need to fine-tune, distill, or even profile your model beforehand. It plugs directly into existing PyTorch code with a single line change, making it ideal for practitioners who need faster inference today, not after weeks of engineering.

Why SpargeAttention Solves a Real Pain Point

If you’ve deployed a large language model (LLM) or a multimodal generative system, you’ve likely faced one of two trade-offs:

- Accuracy vs. speed: Pruning or quantizing attention often degrades output quality.

- Generality vs. specialization: Many sparse attention methods work only on specific architectures (e.g., only on Llama or only on Vision Transformers).

SpargeAttention eliminates both dilemmas. It’s model-agnostic, training-free, and accuracy-preserving—proven across diverse tasks including text generation, image synthesis, and video creation (e.g., Mochi). This makes it uniquely suited for real-world deployment where time, hardware budgets, and model integrity are non-negotiable.

Key Features That Make SpargeAttention Practical

Works with Any Model—No Retraining Needed

Unlike methods that require sparsity-aware fine-tuning or knowledge distillation, SpargeAttention operates entirely at inference time. It dynamically identifies and skips negligible attention computations without prior assumptions about model structure or data distribution.

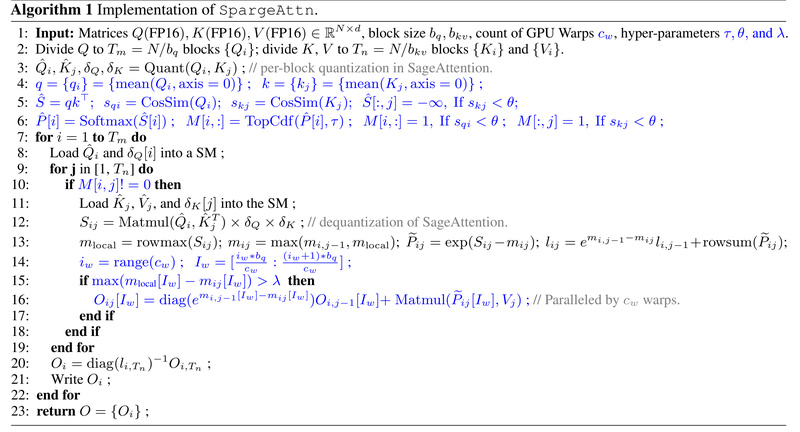

Combines Sparsity and Quantization Efficiently

SpargeAttention builds on the SageAttention family, which integrates 8-bit (and even 4-bit) quantization with outlier-aware smoothing. The “two-stage online filter” first predicts which attention scores are trivial, then skips corresponding matrix multiplications in both the QKᵀ and PV steps—reducing FLOPs without measurable quality loss.

Drop-in Replacement for PyTorch Attention

Integration is as simple as swapping one function call:

# Before attn_output = torch.nn.functional.scaled_dot_product_attention(q, k, v) # After attn_output = spas_sage2_attn_meansim_topk_cuda(q, k, v, topk=0.5)

No model surgery. No config files. Just faster inference.

Tuning-Free Defaults for Immediate Gains

The recommended API (spas_sage2_attn_meansim_topk_cuda) includes sensible defaults like simthreshd1=-0.1, pvthreshd=15, and topk=0.5. These work across a wide range of models without manual calibration—ideal for rapid prototyping or production rollouts.

Ideal Use Cases for Practitioners

SpargeAttention shines in scenarios where latency, throughput, or hardware efficiency matters most:

- LLM Serving at Scale: Reduce per-token latency in chatbots, code assistants, or RAG pipelines without recompiling or quantizing the entire model.

- Video Generation Acceleration: Speed up compute-heavy diffusion video models (e.g., Mochi) where attention dominates runtime.

- Multimodal Edge Deployment: Deploy vision-language models on resource-constrained servers using sparsity + quantization without accuracy cliffs.

- Research Prototyping: Test large models faster during experimentation cycles, especially when working with long-context or high-resolution inputs.

Because it’s training-free, it’s also perfect for closed-weight models (e.g., via APIs or compiled binaries) where you can’t modify internal layers—but can intercept attention calls.

How to Get Started in Minutes

Prerequisites

- Python ≥ 3.9

- PyTorch ≥ 2.3.0

- CUDA ≥ 12.0 (≥12.8 for Blackwell GPUs)

Install via:

pip install ninja python setup.py install

Basic Plug-and-Play Usage

Replace the standard attention call:

from spas_sage_attn import spas_sage2_attn_meansim_topk_cuda # For causal (decoder) or non-causal (encoder) attention attn_output = spas_sage2_attn_meansim_topk_cuda(q, k, v,topk=0.5, # Fraction of top elements to keep (0.0–1.0)is_causal=False # Set to True for autoregressive generation )

- Higher

topk→ more accurate, less speedup - Lower

topk→ sparser, faster, slight quality trade-off

Start with topk=0.5—it’s been validated across language, image, and video benchmarks.

Advanced: Block-Sparse Patterns (Optional)

For custom sparsity patterns (e.g., sliding window + global tokens), use:

from spas_sage_attn import block_sparse_sage2_attn_cuda output = block_sparse_sage2_attn_cuda(q, k, v,mask_id=your_block_mask, # Shape: (B, H, q_blocks, k_blocks)pvthreshd=20 # Lower = more PV sparsity )

Note: Block size is fixed at 128×64 (Q×K), aligned with GPU warp efficiency.

Limitations and Practical Considerations

While powerful, SpargeAttention has a few constraints to keep in mind:

- Hardware Requirements: Requires modern NVIDIA GPUs with CUDA ≥12.0 (Ampere or newer). Not compatible with older architectures or non-CUDA backends.

- Manual

topkTuning: There’s no auto-tuning yet. You’ll need to experiment slightly (e.g., try 0.3, 0.5, 0.7) to balance speed and quality for your use case. - Block-Sparse API Complexity: The

block_sparse_sage2_attn_cudainterface requires precomputed block masks and assumes specific tensor layouts (HNDby default). Only use this if you’re already designing sparse attention patterns. - Diminishing Returns on Short Sequences: Sparsity benefits grow with sequence length. For inputs under 256 tokens, speedups may be modest.

That said, in long-context LLMs, high-res image models, or video generators, speedups of 1.5–2.5× are common—with zero degradation in metrics like NIAH recall or video FVD scores.

Summary

SpargeAttention delivers what many sparse attention methods promise but few achieve: universal acceleration without compromise. By combining dynamic sparsity prediction, quantization, and a truly plug-and-play API, it removes the biggest barriers to inference optimization—retraining, calibration, and model lock-in. Whether you’re shipping a production LLM, experimenting with generative video, or optimizing a multimodal agent, SpargeAttention lets you go faster now, with minimal risk and zero model changes.