End-to-end autonomous driving systems promise a streamlined alternative to traditional modular pipelines—where perception, prediction, and planning are handled by separate components—by unifying these tasks into a single, differentiable framework. However, many existing end-to-end approaches still struggle with safety, computational efficiency, and real-time performance.

SparseDrive is an end-to-end autonomous driving paradigm that rethinks how driving scenes are represented and processed. Its sparse-centric design reduces computational overhead while improving safety-critical metrics such as collision rate. On the nuScenes benchmark, SparseDrive outperforms prior state-of-the-art methods across all tasks, runs at up to 9.0 FPS (frames per second), and trains up to 7x faster than UniAD.

For technical decision-makers evaluating next-generation autonomous driving architectures, SparseDrive offers a compelling blend of performance, efficiency, and safety—without sacrificing modularity in design philosophy.

Why SparseDrive Matters

Traditional autonomous driving systems suffer from error propagation: inaccuracies in perception compound through prediction and ultimately lead to unsafe planning decisions. End-to-end models aim to solve this by optimizing the entire pipeline jointly, but many still rely on dense Bird’s Eye View (BEV) representations that are computationally expensive and suboptimal for real-time deployment.

SparseDrive addresses these limitations head-on by:

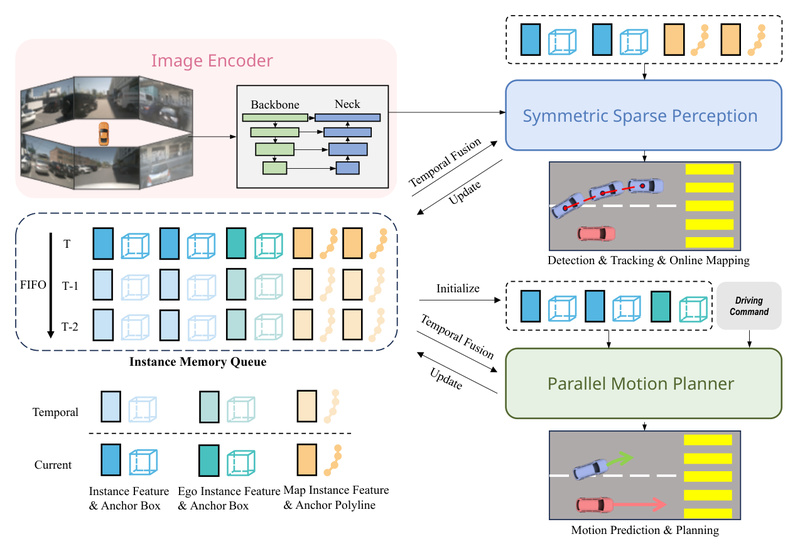

- Replacing dense BEV features with a fully sparse scene representation, drastically cutting memory and compute demands.

- Unifying detection, tracking, and online mapping into a symmetric sparse perception module, enabling consistent and efficient feature learning across time.

- Recognizing the inherent similarity between motion prediction and planning, and modeling them in parallel rather than sequentially.
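To make the first point concrete, here is a back-of-the-envelope comparison of feature storage for a dense BEV grid versus a set of sparse instances. The grid resolution, instance count, and channel width are illustrative assumptions chosen for this sketch, not values taken from SparseDrive.

```python
# Illustrative sizes only: none of these numbers come from SparseDrive itself.
BEV_HEIGHT, BEV_WIDTH = 200, 200   # assumed dense BEV grid resolution
NUM_INSTANCES = 900                # assumed number of sparse instances
FEATURE_DIM = 256                  # assumed channel width for both representations
BYTES_PER_FLOAT32 = 4


def dense_bev_bytes(height: int, width: int, channels: int) -> int:
    """Memory for one dense BEV feature map stored as float32."""
    return height * width * channels * BYTES_PER_FLOAT32


def sparse_instance_bytes(num_instances: int, channels: int) -> int:
    """Memory for one set of instance features stored as float32."""
    return num_instances * channels * BYTES_PER_FLOAT32


dense = dense_bev_bytes(BEV_HEIGHT, BEV_WIDTH, FEATURE_DIM)
sparse = sparse_instance_bytes(NUM_INSTANCES, FEATURE_DIM)
print(f"dense BEV:        {dense / 2**20:.1f} MiB")
print(f"sparse instances: {sparse / 2**20:.2f} MiB")
print(f"ratio:            {dense / sparse:.1f}x")
```

Under these assumed sizes the dense grid stores every cell whether or not anything is there, while the sparse set only pays for the instances it tracks. Real savings depend on the full model, not just this one tensor.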

This architectural shift translates into measurable gains: SparseDrive-B achieves a 0.06% average collision rate, compared to 0.61% for UniAD, roughly a 10x reduction in collision rate in open-loop planning evaluation.
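The arithmetic behind that comparison is easy to check. The sketch below uses only the two rates quoted above; the helper name and the 10,000-plan sample size are assumptions added for illustration.

```python
COLLISION_RATE_SPARSEDRIVE_B = 0.06  # percent, quoted above
COLLISION_RATE_UNIAD = 0.61          # percent, quoted above


def collisions_per(samples: int, rate_percent: float) -> float:
    """Expected number of colliding plans among `samples` evaluated plans."""
    return samples * rate_percent / 100.0


reduction = COLLISION_RATE_UNIAD / COLLISION_RATE_SPARSEDRIVE_B
uniad = collisions_per(10_000, COLLISION_RATE_UNIAD)
sparsedrive = collisions_per(10_000, COLLISION_RATE_SPARSEDRIVE_B)
print(f"relative reduction: {reduction:.1f}x")
print(f"per 10,000 plans: UniAD {uniad:.0f}, SparseDrive-B {sparsedrive:.0f}")
```

Framing the rates as counts per 10,000 planned trajectories makes it clearer that both numbers are small in absolute terms, and that the gap is about one order of magnitude.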

Key Technical Innovations

Unified Sparse Perception

Rather than building a dense intermediate grid, SparseDrive encodes multi-view camera images and represents the scene as sparse instance-level features. Detection, tracking, and online mapping share a symmetric model architecture, so the model keeps instance-centric representations of both surrounding agents and map elements throughout the pipeline. The result is a compact scene representation whose cost grows with the number of instances rather than with grid resolution.
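One way to picture an instance-level representation that supports tracking over time is a small bank that carries the most confident instances from one frame into the next. The sketch below is a simplified assumption in the spirit of query-propagation trackers; the class names, fields, and thresholds are hypothetical and are not taken from the SparseDrive codebase.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Instance:
    """One sparse scene element, an object or a map element (hypothetical layout)."""
    feature: List[float]   # learned embedding for this instance
    anchor: List[float]    # geometry, e.g. a box centre or polyline points
    confidence: float      # detection score in [0, 1]
    track_id: int = -1     # -1 means no ID has been assigned yet


@dataclass
class InstanceBank:
    """Carries the most confident instances from one frame to the next."""
    top_k: int
    next_id: int = 0
    cached: List[Instance] = field(default_factory=list)

    def update(self, current: List[Instance], threshold: float = 0.5) -> List[Instance]:
        # Give a fresh ID to confident instances that do not have one yet.
        for inst in current:
            if inst.track_id < 0 and inst.confidence >= threshold:
                inst.track_id = self.next_id
                self.next_id += 1
        # Keep only the top-k instances as temporal inputs for the next frame.
        ranked = sorted(current, key=lambda i: i.confidence, reverse=True)
        self.cached = ranked[: self.top_k]
        return self.cached


bank = InstanceBank(top_k=2)
frame = [
    Instance([0.1], [1.0, 2.0], confidence=0.9),
    Instance([0.2], [5.0, 1.0], confidence=0.3),
    Instance([0.3], [9.0, 4.0], confidence=0.7),
]
kept = bank.update(frame)
print([(inst.track_id, inst.confidence) for inst in kept])
```

Because an instance keeps its ID for as long as it stays in the bank, tracking falls out of the representation itself rather than requiring a separate association step. That is the design idea this sketch is meant to illustrate.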

Parallel Motion Planning

SparseDrive makes a crucial observation: motion prediction (forecasting other agents’ trajectories) and ego-vehicle planning (deciding where to drive) are fundamentally similar tasks—both involve reasoning about future states in dynamic environments. Instead of cascading one after the other, SparseDrive executes them in parallel using shared context.

To ensure safety, the system employs a hierarchical planning selection strategy: multiple candidate trajectories are scored not only on smoothness and progress but also on collision risk via a dedicated rescore module. This step filters out plausible-but-dangerous paths before final output, directly targeting real-world safety failures.
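The rescoring step can be sketched as follows. This is a minimal illustration under stated assumptions: the distance-based collision check, the `safety_radius` parameter, and the function names are all hypothetical, and the real module operates on learned scores and predicted agent trajectories rather than hand-written waypoints.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]  # one (x, y) waypoint per future timestep


def collides(ego: Trajectory, agent: Trajectory, safety_radius: float) -> bool:
    """True if ego and agent come within safety_radius at the same timestep."""
    return any(math.dist(e, a) < safety_radius for e, a in zip(ego, agent))


def select_plan(
    candidates: List[Trajectory],
    scores: List[float],
    predicted_agents: List[Trajectory],
    safety_radius: float = 2.0,
) -> int:
    """Return the index of the best-scoring candidate after collision rescoring."""
    rescored = []
    for traj, score in zip(candidates, scores):
        unsafe = any(collides(traj, agent, safety_radius) for agent in predicted_agents)
        rescored.append(0.0 if unsafe else score)
    # If every candidate is flagged, fall back to the original scores.
    if max(rescored) == 0.0:
        rescored = list(scores)
    return max(range(len(rescored)), key=rescored.__getitem__)


straight = [(0.0, 5.0), (0.0, 10.0), (0.0, 15.0)]
swerve = [(1.5, 5.0), (3.0, 10.0), (3.0, 15.0)]
oncoming = [(0.0, 20.0), (0.0, 15.0), (0.0, 14.0)]  # agent approaching in the ego lane
best = select_plan([straight, swerve], [0.8, 0.6], [oncoming])
print("selected candidate:", best)
```

Here the straight path has the higher raw score but comes within the safety radius of the oncoming agent at the last timestep, so its score is zeroed and the swerve is selected instead.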

Efficiency by Design

Thanks to its sparse foundation, SparseDrive-S trains in about 20 hours versus 144 hours for UniAD, a roughly 7x speedup. Inference is also fast: the smaller variant runs at 9.0 FPS, which brings it close to real-time operation and matters for both research prototyping and eventual deployment.
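For planning purposes it helps to turn those figures into a per-frame latency and a frame budget. The sketch below uses only the numbers quoted above, plus an assumed 12 Hz camera rate and a hypothetical helper name.

```python
TRAIN_HOURS_SPARSEDRIVE_S = 20.0   # quoted above
TRAIN_HOURS_UNIAD = 144.0          # quoted above
FPS_SPARSEDRIVE_S = 9.0            # quoted above
CAMERA_HZ = 12.0                   # assumed camera capture rate

speedup = TRAIN_HOURS_UNIAD / TRAIN_HOURS_SPARSEDRIVE_S
latency_ms = 1000.0 / FPS_SPARSEDRIVE_S


def frames_kept(camera_hz: float, model_fps: float) -> float:
    """Fraction of camera frames the model can process without falling behind."""
    return min(1.0, model_fps / camera_hz)


print(f"training speedup:  {speedup:.1f}x")
print(f"per-frame latency: {latency_ms:.0f} ms")
print(f"frames kept at {CAMERA_HZ:.0f} Hz: {frames_kept(CAMERA_HZ, FPS_SPARSEDRIVE_S):.0%}")
```

At 9.0 FPS each frame takes about 111 ms, so a pipeline fed at the assumed 12 Hz would process three of every four frames. Whether that counts as real time depends on the target platform and the control loop rate.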

Ideal Use Cases

SparseDrive is particularly well-suited for:

- Research teams developing next-generation end-to-end driving models who need a high-performing, open-source baseline.

- Autonomous vehicle startups prioritizing safety and computational efficiency in perception-to-planning pipelines.

- Benchmark-driven development on camera-based datasets like nuScenes, where SparseDrive sets new state-of-the-art results across detection, tracking, mapping, motion forecasting, and planning.

If your project demands low collision rates, fast iteration cycles, and scalable architecture, SparseDrive provides a strong foundation.

Practical Implementation Notes

SparseDrive is open-sourced on GitHub with released models, training configurations, and evaluation scripts. The system uses a two-stage training process:

- Stage 1: Train the sparse perception module for detection, tracking, and mapping.

- Stage 2: Train the sparse perception module and the parallel motion planner jointly, with no weights frozen, including collision-aware trajectory selection.
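The two stages can be written down as a small schedule. The sketch below is not the repository's config format: the module names are hypothetical placeholders, and whether perception weights are updated in stage 2 is exposed as a flag so it can be set to match the released training configs.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StageConfig:
    name: str
    trainable: List[str]   # module names that receive gradient updates
    frozen: List[str]      # module names kept fixed
    init_from: str = ""    # name of the stage whose weights seed this one


def build_schedule(freeze_perception: bool) -> List[StageConfig]:
    """Two-stage schedule; module names are hypothetical placeholders."""
    perception = ["detection_tracking_head", "online_mapping_head"]
    planner = ["motion_planner"]
    stage1 = StageConfig("stage1", trainable=perception, frozen=[])
    if freeze_perception:
        stage2 = StageConfig("stage2", trainable=planner, frozen=perception,
                             init_from="stage1")
    else:
        stage2 = StageConfig("stage2", trainable=perception + planner, frozen=[],
                             init_from="stage1")
    return [stage1, stage2]


for stage in build_schedule(freeze_perception=False):
    print(stage.name, "trains", stage.trainable, "freezes", stage.frozen)
```

Keeping the schedule explicit like this makes it easy to see which weights each stage touches before committing GPU hours to a run.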

The codebase builds on established frameworks like mmdet3d and draws inspiration from projects like StreamPETR and UniAD, making it accessible to teams already familiar with modern 3D vision pipelines. Evaluation metrics—including updated collision rate calculations—are included to ensure fair comparison with prior work.

Limitations and Considerations

While SparseDrive represents a significant advance, potential adopters should consider the following:

- Sensor modality: Current results are based on camera-only inputs (nuScenes). Integration with LiDAR or radar would require architectural extensions.

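- Closed-loop deployment: All reported results come from open-loop evaluation on logged nuScenes data, where the planned trajectory never affects what the vehicle observes next. Closed-loop performance, in simulation or on the road, remains to be validated.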

- Data dependence: Like all end-to-end models, SparseDrive requires large-scale, high-quality driving datasets for training—teams without access to such data may face adaptation challenges.

- Interpretability: The unified design trades modular debuggability for end-to-end optimization; teams needing granular failure analysis may need to supplement with external tools.

Nonetheless, for researchers and engineers focused on pushing the boundaries of efficient, safe end-to-end driving, SparseDrive offers a robust and well-documented starting point.

Summary

SparseDrive replaces dense, computationally expensive scene representations with a fully sparse framework for end-to-end autonomous driving. Its symmetric perception module, parallel motion planner, and collision-aware trajectory selection deliver low collision rates, fast training, and near real-time inference on the nuScenes benchmark.

For technical leaders evaluating autonomous driving stacks, SparseDrive is a substantial step toward safer, faster, and more practical end-to-end systems, with the caveat that its results are open-loop and camera-only. With open-source code, released models, and evaluation scripts, it lowers the barrier to exploring unified driving models.