Recent advances in speech language models (SLMs) have made it possible to generate highly realistic speech—often indistinguishable from human voices at first listen. Yet, realism alone doesn’t guarantee user satisfaction. Many current systems fall short when it comes to aligning output with what users actually prefer: natural rhythm, emotional tone, clarity, and contextual appropriateness. This mismatch between model capabilities and human expectations is what SpeechAlign directly addresses.

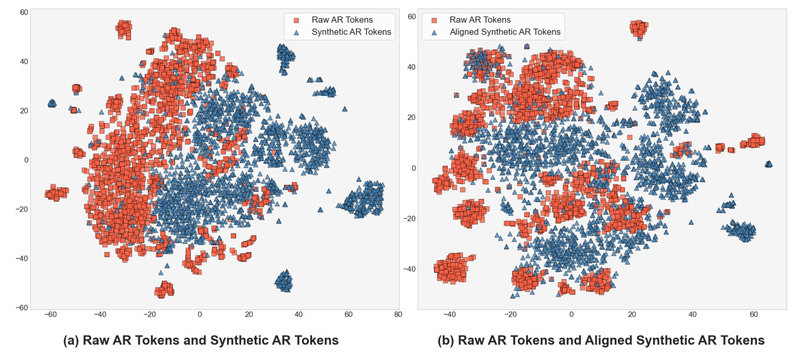

Introduced in the paper SpeechAlign: Aligning Speech Generation to Human Preferences, this method tackles a critical but often overlooked issue in speech synthesis: the distribution gap between the training and inference phases of neural codec language models. During training, the model learns from codec tokens of real speech; at inference, it must work with tokens it generated itself, which follow a different distribution. This gap degrades performance in real-world deployment, even when training metrics look strong. SpeechAlign narrows the gap by incorporating human preferences directly into the model refinement loop, making the model not just technically proficient but perceptually aligned with human listeners.

Why Human Preferences Matter in Speech Generation

Traditional speech generation systems are typically optimized for reconstruction fidelity or log-likelihood on codec token sequences. However, these objectives don’t always correlate with subjective quality. For example, a model might produce technically accurate speech that sounds robotic, unnatural, or emotionally flat—exactly the opposite of what’s needed in conversational AI or media applications.

SpeechAlign recognizes this disconnect. Instead of relying solely on automated metrics, it builds preference data that contrasts “golden” codec tokens (taken from high-quality human speech) against synthetic, lower-quality tokens generated by the model. Because the preferred side of every pair is anchored in real human speech, each round of improvement moves the model closer to what listeners value, not just what the loss function favors.
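A minimal sketch of this pairing step, assuming golden sequences come from high-quality reference speech and rejected sequences are sampled from the current model. `PreferencePair`, `build_preference_pairs`, and the `generate` callable are hypothetical names for illustration, not the SpeechGPT repository's API. Skipping pairs where the sample exactly matches the golden sequence is also an assumption of this sketch, since such a pair carries no preference signal.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class PreferencePair:
    """One preference example (hypothetical schema)."""
    prompt: str            # conditioning input, e.g. the text to be spoken
    chosen: List[int]      # golden codec tokens from reference speech
    rejected: List[int]    # synthetic codec tokens sampled from the model


def build_preference_pairs(
    prompts: Sequence[str],
    golden_tokens: Sequence[List[int]],
    generate: Callable[[str], List[int]],
) -> List[PreferencePair]:
    """Pair each golden token sequence with a model sample for the same prompt."""
    if len(prompts) != len(golden_tokens):
        raise ValueError("each prompt needs exactly one golden token sequence")
    pairs = []
    for prompt, golden in zip(prompts, golden_tokens):
        synthetic = generate(prompt)  # stand-in for sampling the codec LM
        if list(synthetic) == list(golden):
            continue  # identical sequences give no preference signal
        pairs.append(PreferencePair(prompt, list(golden), list(synthetic)))
    return pairs
```

For example, `build_preference_pairs(["hi"], [[12, 7, 7]], lambda p: [12, 3, 9])` yields one pair whose `chosen` side is the golden sequence and whose `rejected` side is the model sample.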

How SpeechAlign Works

At its core, SpeechAlign follows an iterative self-improvement cycle:

- Initial Generation: A base codec language model generates synthetic speech representations (as discrete codec tokens).

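- Preference Dataset Construction: Pairs of outputs are labeled by which version better aligns with human preferences. Golden tokens from high-quality reference speech serve as the preferred example and the model’s synthetic tokens as the dispreferred one; human evaluators can supply the same kind of judgment where available.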

- Preference Optimization: The model is fine-tuned with a preference-based objective (e.g., Direct Preference Optimization or a similar technique) that raises the likelihood of the preferred “golden” tokens relative to the dispreferred synthetic ones.

- Iteration: The refined model generates new samples, which are again evaluated and used to build an updated preference dataset—enabling continuous improvement.
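The four steps above can be sketched as a single loop, with the model, the sampler, and the optimizer passed in as placeholders. `speechalign_loop`, `generate`, and `optimize` are hypothetical names, and the fixed iteration count is an assumption; a real pipeline might instead stop once evaluation scores plateau.

```python
from typing import Any, Callable, List, Sequence, Tuple

# (prompt, chosen tokens, rejected tokens): a hypothetical pair format.
Pair = Tuple[str, List[int], List[int]]


def speechalign_loop(
    model: Any,
    prompts: Sequence[str],
    golden: Sequence[List[int]],
    generate: Callable[[Any, str], List[int]],
    optimize: Callable[[Any, List[Pair]], Any],
    num_iterations: int = 3,
) -> Any:
    """Sample, pair, optimize, repeat (a sketch of iterative self-improvement)."""
    for _ in range(num_iterations):
        # Sample synthetic tokens from the *current* model and pair each
        # sample with its golden counterpart (golden = chosen, sample = rejected).
        pairs: List[Pair] = [
            (prompt, list(gold), generate(model, prompt))
            for prompt, gold in zip(prompts, golden)
        ]
        # Fine-tune on this round's preference data (e.g. with DPO); the
        # refined model then produces the samples for the next round.
        model = optimize(model, pairs)
    return model
```

The key design point is that the rejected side is regenerated every round, so each new preference dataset reflects the weaknesses of the latest model rather than those of the original one.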

This loop allows even relatively small speech language models to converge toward high perceptual quality without requiring massive retraining from scratch.

Key Advantages of SpeechAlign

Human-Centric Quality Improvement

Unlike conventional methods that optimize for waveform similarity or token prediction accuracy, SpeechAlign explicitly optimizes toward human preferences. This makes it especially valuable in user-facing applications where listener experience is paramount.
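To make “optimizing toward human preferences” concrete, here is the standard single-pair DPO loss, DPO being one of the techniques the method description names. This is a sketch of generic DPO, assuming each argument is the summed log-probability of an entire codec token sequence under the model being trained (policy) or a frozen reference copy. The function name `dpo_loss` and the default `beta = 0.1` are illustrative choices, not values taken from the SpeechAlign paper.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Generic DPO loss for one (chosen, rejected) pair of token sequences."""
    # How much the policy has moved each sequence relative to the reference.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) == softplus(-x); this form avoids overflow in exp().
    return math.log1p(math.exp(-abs(logits))) + max(-logits, 0.0)
```

When the policy still matches the reference, the loss is log 2 (about 0.693); it falls below that as the policy shifts probability toward the chosen sequence relative to the rejected one, without ever scoring tokens against a fixed target.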

Effective for Smaller Models

SpeechAlign demonstrates strong generalization and works effectively even with compact models. This lowers the barrier to entry for teams without access to large-scale compute, enabling high-quality speech generation on more modest infrastructure.

Mitigates the Training-Inference Distribution Gap

By aligning the token distribution during inference with that of high-quality reference speech, SpeechAlign reduces the performance drop commonly observed when models move from controlled training environments to real-world usage.
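One rough way to make the gap measurable is to compare how often each codec token appears in golden versus synthetic sequences. The unigram KL divergence below is an illustrative proxy chosen for this sketch, not the analysis reported in the paper. `token_kl` and `vocab_size` are hypothetical, and token IDs are assumed to lie in `[0, vocab_size)`.

```python
import math
from collections import Counter
from typing import Iterable, List


def token_kl(golden: Iterable[List[int]], synthetic: Iterable[List[int]], vocab_size: int) -> float:
    """KL(golden || synthetic) between smoothed codec-token unigram distributions."""

    def distribution(seqs: Iterable[List[int]]) -> List[float]:
        counts = Counter(tok for seq in seqs for tok in seq)
        if any(not 0 <= tok < vocab_size for tok in counts):
            raise ValueError("token id outside [0, vocab_size)")
        # Add-one (Laplace) smoothing keeps every probability non-zero,
        # so the divergence is always finite.
        total = sum(counts.values()) + vocab_size
        return [(counts[t] + 1) / total for t in range(vocab_size)]

    p = distribution(golden)
    q = distribution(synthetic)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
```

Under this proxy, one would expect the value to shrink for samples drawn after each alignment round if the model's token distribution is moving toward that of the reference speech. A unigram view ignores ordering, so it can only flag a gap, not fully characterize one.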

Ideal Use Cases

SpeechAlign is particularly well-suited for scenarios where perceptual quality directly impacts user engagement or trust:

- Voice assistants and conversational agents that need to sound natural and responsive

- Audiobook and podcast narration requiring expressive, fatigue-free listening experiences

- Virtual avatars and digital humans in gaming, education, or customer service

- Accessibility tools that convert text to emotionally appropriate speech for diverse audiences

In all these contexts, technical fluency isn’t enough—speech must feel human. SpeechAlign provides a practical pathway to achieve that.

Practical Implementation

To adopt SpeechAlign, you’ll need:

- A neural codec-based speech language model (e.g., one built on SpeechTokenizer)

- A mechanism to collect or simulate human preference data (e.g., A/B listening tests)

- Tools for preference optimization (the SpeechGPT repository includes reference implementations)
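If preferences come from A/B listening tests, the raw votes have to be reduced to (chosen, rejected) pairs before optimization. The sketch below uses a simple majority rule with a minimum vote margin. `ab_votes_to_pairs`, the `(sample_a, sample_b, winner)` vote format, and the margin rule are all assumptions for illustration, not part of the SpeechGPT tooling.

```python
from collections import Counter, defaultdict
from typing import List, Tuple


def ab_votes_to_pairs(
    votes: List[Tuple[str, str, str]],
    min_margin: int = 1,
) -> List[Tuple[str, str]]:
    """Reduce A/B votes to (chosen, rejected) sample-ID pairs.

    Each vote is (sample_a, sample_b, winner). A comparison is kept only if
    one side leads by at least `min_margin` votes, so ties are always dropped.
    """
    if min_margin < 1:
        raise ValueError("min_margin must be at least 1")
    tallies = defaultdict(Counter)
    for a, b, winner in votes:
        if winner not in (a, b):
            raise ValueError(f"winner {winner!r} is not {a!r} or {b!r}")
        key = tuple(sorted((a, b)))  # (a, b) and (b, a) are the same comparison
        tallies[key][winner] += 1

    pairs = []
    for (x, y), counts in tallies.items():
        margin = counts[x] - counts[y]
        if margin >= min_margin:
            pairs.append((x, y))
        elif -margin >= min_margin:
            pairs.append((y, x))
    return pairs
```

Raising `min_margin` trades dataset size for cleaner labels, since split verdicts are more likely to reflect listener noise than a real quality difference.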

The official code and models are available at the SpeechGPT GitHub repository, which also hosts related assets like the SpeechInstruct dataset and SpeechTokenizer—key components that support the SpeechAlign pipeline.

Note that SpeechAlign is not a standalone speech generator. It’s a refinement framework designed to enhance existing codec language models through preference-guided iteration.

Limitations and Considerations

While powerful, SpeechAlign does come with practical constraints:

- It relies on access to meaningful preference signals—either from human annotators or high-fidelity reference data.

- Its effectiveness depends on the quality of the initial model and the representativeness of the preference dataset.

- It assumes a discrete codec representation of speech, so integration requires a compatible tokenizer (like SpeechTokenizer).

Teams should assess their capacity for data curation and evaluation before adopting this approach. However, for those prioritizing user experience over raw synthesis speed or minimal resource usage, the investment can pay off in perceptual quality and model robustness.

Summary

SpeechAlign offers a principled, iterative solution to a persistent challenge in speech generation: aligning synthetic output with human preferences. By closing the distribution gap between training and inference and enabling continuous self-improvement through preference learning, it empowers developers to build speech systems that don’t just sound real—but feel right. For project leads and technical decision-makers focused on user-centric AI, SpeechAlign represents a significant step toward truly human-aligned speech synthesis.