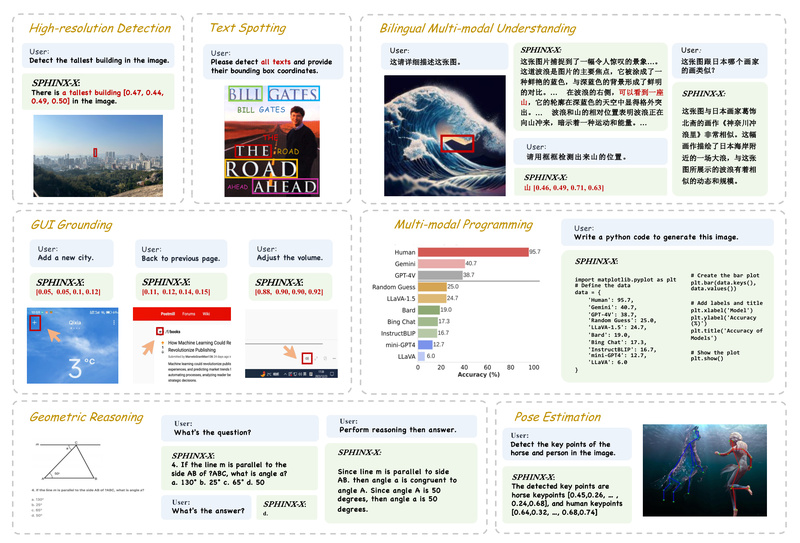

SPHINX-X is a next-generation family of Multimodal Large Language Models (MLLMs) designed to streamline the development, training, and deployment of vision-language systems. Built within the open-source LLaMA2-Accessory toolkit, SPHINX-X addresses critical bottlenecks that engineering and research teams face when working with multimodal AI—such as fragmented training pipelines, redundant architectures, and limited data diversity. By unifying training into a single stage, eliminating inefficiencies in visual processing, and supporting a range of base language models from 1.1B to 56B+ parameters, SPHINX-X enables teams to rapidly prototype, scale, and deploy robust multimodal applications without reinventing the wheel.

Developed as an evolution of the original SPHINX architecture, SPHINX-X is backed by a carefully curated, multi-domain dataset that spans public vision-language resources and novel in-house collections—especially rich in OCR-intensive content and structured visual reasoning tasks like Set-of-Mark. This data foundation, combined with architectural refinements, results in strong empirical performance across benchmarks like CMMMU, MMVP, and AesBench—often rivaling or surpassing proprietary systems like GPT-4V.

Why SPHINX-X Solves Real Engineering Challenges

Eliminates Redundant and Inefficient Components

Traditional MLLM pipelines often rely on multiple visual encoders or complex multi-stage training—first pretraining visual features, then aligning them with language, and finally instruction-tuning. SPHINX-X simplifies this by removing redundant visual encoders and collapsing the entire process into a one-stage, all-in-one training paradigm. This not only reduces engineering overhead but also cuts computation time and infrastructure costs.

A key innovation is the use of skip tokens to bypass fully padded sub-images during visual processing. This avoids wasting computation on empty regions—common in document or UI screenshot analysis—making inference and training significantly more efficient without sacrificing accuracy.

Scales Seamlessly Across Model Sizes and Languages

SPHINX-X isn’t a single model—it’s a family of models built on different base LLMs:

- TinyLlama-1.1B → SPHINX-Tiny (ideal for experimentation or edge deployment)

- InternLM2-7B → Balanced performance for multilingual tasks

- LLaMA2-13B → Strong generalist capabilities

- Mixtral-8x7B (MoE) → SPHINX-MoE, delivering state-of-the-art results on benchmarks like MMVP (49.33%)

This spectrum allows teams to choose the right trade-off between performance, latency, and cost—whether you’re building a research prototype or a production-grade vision assistant.

Powered by High-Diversity, Real-World Multimodal Data

Beyond standard image-text pairs from LAION or COYO, SPHINX-X leverages two novel datasets:

- OCR-intensive data: Critical for document understanding, invoice parsing, or multilingual signage analysis.

- Set-of-Mark: A structured visual reasoning dataset that challenges models to interpret diagrams, charts, and annotated images—key for technical, educational, or scientific applications.

This data diversity directly translates to broader generalization and stronger performance on real-world tasks where off-the-shelf models often fail.

Ideal Use Cases for SPHINX-X

Vision-Language Assistants with Bounding Box & Mask Support

SPHINX-X can generate precise bounding boxes and segmentation masks (via integration with SAM) in response to natural language prompts—e.g., “Highlight all traffic signs in this image”. This makes it ideal for robotics, content moderation, or accessibility tools.

Document Intelligence Systems

With its OCR-rich training, SPHINX-X excels at analyzing complex documents containing mixed text, tables, figures, and handwritten notes—useful in legal, financial, or academic automation workflows.

Rapid Prototyping of Multimodal Products

Thanks to pre-trained checkpoints like SPHINX-Tiny (1.1B) and SPHINX-MoE, teams can validate multimodal ideas in hours, not months. The LLaMA2-Accessory toolkit provides fine-tuning scripts for custom image-text instruction data, enabling adaptation to domain-specific needs.

Multilingual Multimodal Applications

Models built on InternLM2 or Mixtral support strong multilingual capabilities, making SPHINX-X suitable for global applications—e.g., travel assistants that interpret foreign menus or signage.

Getting Started Without Heavy Lifting

The LLaMA2-Accessory toolkit lowers the barrier to entry:

- Pretrained models are publicly available on Hugging Face and GitHub.

- Fine-tuning support includes QLoRA, FSDP, and FlashAttention 2 for memory-efficient training.

- Flexible visual encoders: Plug in CLIP, DINOv2, ImageBind, or Q-Former based on your task.

- Evaluation-ready: Full compatibility with OpenCompass for standardized benchmarking.

You don’t need a massive GPU cluster to start—SPHINX-Tiny runs comfortably on consumer hardware, while larger variants scale gracefully with distributed training.

Limitations and Practical Considerations

While SPHINX-X offers remarkable flexibility, adopters should note:

- Licensing: Base models like LLaMA2 require Meta’s license approval for commercial use.

- Hardware demands: Running SPHINX-MoE (Mixtral-8x7B) efficiently typically requires multiple high-end GPUs.

- Data infrastructure: To fine-tune on custom multimodal data, you’ll need pipelines for pairing images with rich text annotations—though the toolkit provides templates to accelerate this.

These constraints are manageable for most technical teams and are outweighed by the project’s open, modular, and performance-optimized design.

Summary

SPHINX-X redefines what’s possible in open-source multimodal AI by combining architectural efficiency, data richness, and model scalability in a single, accessible framework. Whether you’re a startup building a visual chatbot, a researcher exploring cross-modal reasoning, or an enterprise automating document workflows, SPHINX-X offers a fast, reliable path from idea to deployment—without the usual complexity tax of multimodal systems. With all code, models, and documentation openly released, it’s one of the most practical MLLM toolkits available today for real-world adoption.