Recommender systems are foundational to modern digital experiences—from streaming platforms to e-commerce—but they face a persistent challenge: user interaction data is often sparse, noisy, and incomplete. Traditional supervised approaches struggle under these conditions, requiring extensive labeled data that’s rarely available at scale. Enter self-supervised learning (SSL): a paradigm that leverages the inherent structure of user-item interactions to generate supervisory signals without external labels.

While dozens of SSL-enhanced recommendation models have emerged in recent years, researchers and engineers have been hindered by fragmented implementations, inconsistent evaluation protocols, and scenario-specific codebases that make fair comparison or rapid prototyping nearly impossible.

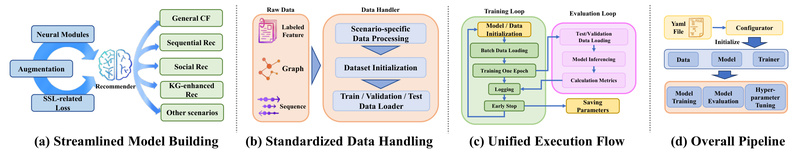

SSLRec directly addresses these pain points. Developed by the HKU Data Science team and accepted as an oral presentation at WSDM 2024, SSLRec is an open-source, PyTorch-based framework that unifies self-supervised recommendation research across five major domains: General Collaborative Filtering, Sequential Recommendation, Multi-Behavior Recommendation, Social Recommendation, and Knowledge Graph-enhanced Recommendation. With its modular design, standardized pipelines, and pre-integrated state-of-the-art models, SSLRec lowers the barrier to entry for practitioners while enabling rigorous, reproducible research.

Why Self-Supervised Learning Matters for Recommenders

At the heart of recommendation lies a data sparsity problem: most users interact with only a tiny fraction of available items. This leads to unreliable user and item representations, especially for new or infrequently active users and items (the “cold-start” problem).
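To make “tiny fraction” concrete, sparsity is often summarized as density: the number of observed interactions divided by the number of possible user-item pairs. A minimal sketch on a toy log; the function names and data are illustrative and not taken from SSLRec:

```python
def interaction_density(interactions, num_users, num_items):
    """Fraction of the user-item matrix that is observed.

    `interactions` is an iterable of (user_id, item_id) pairs;
    duplicate pairs are counted once.
    """
    observed = len(set(interactions))
    return observed / (num_users * num_items)


def user_degrees(interactions, num_users):
    """Number of distinct items each user has interacted with."""
    degrees = [0] * num_users
    for user, _ in set(interactions):
        degrees[user] += 1
    return degrees


# Toy log: 4 users, 5 items, 6 distinct interactions (plus one duplicate).
log = [(0, 0), (0, 1), (0, 2), (1, 0), (2, 3), (3, 4), (0, 1)]
print(interaction_density(log, num_users=4, num_items=5))  # 6 / 20 -> 0.3
print(user_degrees(log, num_users=4))                      # [3, 1, 1, 1]
```

Real datasets are far sparser than this toy example, and users with only one or two interactions (like users 1 to 3 here) are the ones whose embeddings are hardest to learn.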

Self-supervised learning tackles this by creating auxiliary tasks, such as contrasting augmented views of the same interaction graph or predicting masked items in a user’s interaction sequence, that encourage models to learn robust, generalizable representations from the data itself. No extra labels are needed; the supervision comes from the data’s internal structure.
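The contrastive variant is commonly trained with an InfoNCE-style objective: the embeddings of the same node under two augmentations form a positive pair, and the other nodes in the batch act as negatives. The pure-Python sketch below shows that generic formulation (cosine similarity, with an arbitrarily chosen temperature of 0.2); it is an illustration of the technique, not SSLRec’s own loss code:

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def info_nce(view1, view2, temperature=0.2):
    """InfoNCE loss averaged over nodes.

    view1[i] and view2[i] embed the same node under two different
    augmentations (the positive pair); view2[j] for j != i are negatives.
    """
    losses = []
    for i, z1 in enumerate(view1):
        logits = [cosine(z1, z2) / temperature for z2 in view2]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the positive pair as the "correct class".
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Views that agree node-by-node give a lower loss than mismatched views.
aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]])
print(aligned < swapped)  # True
```

Minimizing this loss pulls each node’s two views together while pushing it away from other nodes, which is what makes the learned embeddings less dependent on the few observed interactions.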

SSLRec was built to make this powerful approach accessible, consistent, and extensible—whether you’re benchmarking existing methods or designing the next breakthrough algorithm.

A Unified Framework Across Recommendation Scenarios

Before SSLRec, implementing an SSL-based recommender often meant stitching together disparate codebases tailored to one scenario—say, graph-based collaborative filtering—while ignoring others like sequential or knowledge-aware settings. This fragmentation made cross-scenario innovation difficult.

SSLRec solves this by offering a single, coherent architecture that supports five major recommendation paradigms:

- General Collaborative Filtering (e.g., top-K item recommendation from implicit user-item interactions)

- Sequential Recommendation (e.g., next-item prediction from user histories)

- Multi-Behavior Recommendation (e.g., modeling clicks, purchases, and likes jointly)

- Social Recommendation (e.g., leveraging friend networks)

- Knowledge Graph-enhanced Recommendation (e.g., using entity relationships to enrich item semantics)

Each category shares the same data pipeline, training loop, and evaluation protocol—ensuring that improvements or comparisons aren’t skewed by implementation differences.

Plug-and-Play Access to 20+ State-of-the-Art Models

SSLRec ships with more than 20 recent, peer-reviewed models, most of them SSL-enhanced, fully implemented and ready to run. Examples include:

- LightGCN (a widely used non-SSL graph baseline), SimGCL, and SGL for collaborative filtering

- BERT4Rec, CL4SRec, and DuoRec for sequential tasks

- KGCL, KGIN, and DiffKG for knowledge-aware settings

- MHCN and DSL for social recommendation

- MBGMN and CML for multi-behavior modeling

Instead of spending weeks reimplementing baselines from scratch, you can run them directly and compare them under identical settings. Want to evaluate LightGCN on the Gowalla dataset? Just run:

python main.py --model LightGCN

Configuration lives in simple YAML files (e.g., lightgcn.yml), so tuning hyperparameters or swapping datasets requires no code changes.
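In config-driven setups like this, a YAML file is parsed into a nested dictionary, and an experiment differs from the defaults only in a few overridden values. The sketch below illustrates that pattern with a plain dict standing in for the parsed YAML; every key name is hypothetical and is not copied from SSLRec’s actual lightgcn.yml:

```python
import copy


def merge_config(base, overrides):
    """Recursively merge `overrides` into a copy of `base`.

    Nested dicts are merged key by key; any other value in `overrides`
    replaces the one in `base`. Neither input dict is modified.
    """
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical keys, for illustration only.
defaults = {
    "data": {"name": "gowalla"},
    "model": {"name": "lightgcn", "layer_num": 3, "embedding_size": 64},
    "train": {"lr": 1e-3, "epoch": 100},
}

# Swap the dataset and lower the learning rate without touching model code.
experiment = merge_config(defaults, {"data": {"name": "yelp"}, "train": {"lr": 5e-4}})
print(experiment["data"]["name"], experiment["train"])  # yelp {'lr': 0.0005, 'epoch': 100}
```

Keeping these values out of the model code is what lets hyperparameter sweeps and dataset swaps stay pure configuration changes.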

Modular Design for Rapid Prototyping

SSLRec’s architecture is deliberately modular. Core components—encoders, data augmentations, contrastive losses, and training strategies—are decoupled and interchangeable.

This means you can:

- Swap a GNN encoder for a Transformer in a sequential model

- Try a new graph augmentation (e.g., edge dropout vs. node masking)

- Plug in a custom contrastive loss without rewriting the training loop

For researchers exploring novel SSL objectives or engineers adapting recommenders to niche domains, this eliminates boilerplate and accelerates iteration.
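As a rough illustration of this kind of interchangeability, the sketch below treats graph augmentations as functions with one shared signature, so switching from edge dropout to node masking is a one-word change. The registry, function names, and signature are hypothetical and do not reflect SSLRec’s actual module layout; users and items are assumed to share a single node index space:

```python
import random


def edge_dropout(edges, num_nodes, rate, rng):
    """Drop each edge independently with probability `rate`.

    `num_nodes` is unused here; it is kept so that every augmentation
    shares the same signature.
    """
    return [e for e in edges if rng.random() >= rate]


def node_masking(edges, num_nodes, rate, rng):
    """Mask each node with probability `rate` and drop every edge touching it."""
    masked = {n for n in range(num_nodes) if rng.random() < rate}
    return [(u, v) for u, v in edges if u not in masked and v not in masked]


# Augmentations are looked up by name; the training step never changes.
AUGMENTATIONS = {"edge_dropout": edge_dropout, "node_masking": node_masking}


def make_views(edges, num_nodes, aug_name, rate=0.1, seed=0):
    """Produce two independently augmented views of the same graph."""
    rng = random.Random(seed)
    augment = AUGMENTATIONS[aug_name]
    return augment(edges, num_nodes, rate, rng), augment(edges, num_nodes, rate, rng)


# Users 0-2 and items 3-4 in one index space.
graph = [(0, 3), (0, 4), (1, 3), (2, 4)]
view_a, view_b = make_views(graph, num_nodes=5, aug_name="edge_dropout", rate=0.5, seed=42)
```

The two views would then be encoded by the same GNN and fed to a contrastive loss; adding a new augmentation only means registering one more function with the same signature.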

Fair, Reproducible Evaluation Out of the Box

Recommender system research has long suffered from inconsistent evaluation: different data splits, metrics, or preprocessing steps make it hard to trust published results.

SSLRec enforces standardized protocols across all models and datasets:

- Public datasets (e.g., Gowalla, Yelp, Amazon) are processed uniformly

- Train/validation/test splits follow community best practices

- Metrics like Recall@K and NDCG@K are computed consistently

- Early stopping is supported to prevent overfitting

This ensures that when you compare SimGCL to LightGCL, you’re measuring algorithmic differences—not implementation quirks.
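For reference, the two ranking metrics can be written in a few lines for a single user under binary relevance; the per-user scores are then averaged over all test users. This is a sketch of the standard definitions, not SSLRec’s evaluator, and conventions vary between codebases (for example, some normalize Recall@K by min(K, number of relevant items) instead of the number of relevant items):

```python
import math


def recall_at_k(ranked_items, relevant, k):
    """Share of a user's held-out relevant items that appear in the top-k."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked_items[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked_items, relevant, k):
    """Binary-relevance NDCG: DCG of the top-k divided by the ideal DCG."""
    # `rank` is 0-based, so the first position is discounted by log2(2) = 1.
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, item in enumerate(ranked_items[:k])
        if item in relevant
    )
    # Ideal ranking: all relevant items (up to k) placed at the top.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


ranking = [7, 2, 9, 4, 1]  # a model's top-5 for one user
held_out = {2, 4, 8}       # that user's test items
print(recall_at_k(ranking, held_out, k=5))  # 2 of 3 held-out items retrieved
print(ndcg_at_k(ranking, held_out, k=5))
```

Recall@K ignores where in the top-K a hit lands, while NDCG@K rewards placing hits near the top, which is why papers usually report both.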

Getting Started in Minutes

SSLRec is designed for simplicity. Requirements are minimal:

- Python 3.10.4

- PyTorch 1.11.0

- DGL 1.1.1

- NumPy, SciPy

After cloning the repository, you can launch any implemented model with a single command. The framework handles data loading, model instantiation, training, and evaluation automatically.

For deeper customization—such as adding your own dataset or model—the included User Guide walks you through extending every layer of the system, from data feeders to loss functions.

Practical Limitations to Consider

While powerful for research and prototyping, SSLRec has boundaries to keep in mind:

- It targets academic and public datasets, not proprietary or industrial-scale data pipelines.

- It depends on specific versions of PyTorch and DGL (e.g., torch==1.11.0), so a dedicated virtual environment (venv or conda) is advisable to avoid conflicts with other projects.

- It does not include production deployment tools like model serving, A/B testing, or real-time inference APIs.

Thus, SSLRec excels in the experimentation and validation phases, but live systems need a separate production serving stack (e.g., TorchServe, since SSLRec models are PyTorch modules).
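For the version-pinning limitation in particular, it can help to confirm which versions are actually installed in the active environment before running experiments. A small standard-library sketch using `importlib.metadata`; the pins mirror the versions listed under Getting Started, and the helper name `check_pins` is hypothetical:

```python
from importlib import metadata

# Pins as listed in this article's requirements; adjust to the repository's own list.
PINNED = {"torch": "1.11.0", "dgl": "1.1.1"}


def check_pins(pins, get_version=metadata.version):
    """Return {package: (expected, found)} for every package that does not match.

    `found` is None when the package is not installed. Local version labels
    such as "+cu113" (common in CUDA builds of PyTorch) are ignored.
    """
    mismatches = {}
    for package, expected in pins.items():
        try:
            found = get_version(package).split("+")[0]
        except metadata.PackageNotFoundError:
            found = None
        if found != expected:
            mismatches[package] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for pkg, (want, have) in check_pins(PINNED).items():
        print(f"{pkg}: expected {want}, found {have or 'not installed'}")
```

Running this inside a freshly created environment gives a quick signal that the pinned stack is in place before any model code is imported.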

Summary

SSLRec is more than a model zoo—it’s a cohesive ecosystem for self-supervised recommendation. By unifying diverse scenarios under one framework, standardizing evaluation, and offering plug-and-play access to cutting-edge models, it empowers:

- Practitioners to prototype and validate ideas quickly,

- Researchers to benchmark fairly and innovate systematically,

- Students to learn SSL concepts through runnable, well-documented code.

If you’re working on recommendation systems and want to harness the power of self-supervised learning without reinventing the wheel, SSLRec is a strategic starting point. The code is open, the design is extensible, and the community is growing.