Evaluating large language models (LLMs) on synthetic coding benchmarks often fails to reflect their real-world utility. SWE-Lancer addresses this gap: it is a rigorously constructed benchmark that captures the economic and technical reality of freelance software engineering. Built from over 1,400 real tasks sourced from Upwork, SWE-Lancer represents $1 million in actual freelance payouts, offering a direct bridge between AI capability and market-driven engineering performance.

Unlike academic datasets that prioritize volume or abstract correctness, SWE-Lancer grounds evaluation in tasks that real clients paid real engineers to complete, ranging from $50 bug fixes to $32,000 feature implementations. This benchmark doesn’t just ask whether an LLM can write code; it asks whether that code would have earned money in a competitive freelance marketplace.

What Makes SWE-Lancer Unique

Real Tasks, Real Money

SWE-Lancer’s core innovation lies in its origin: every task was posted by a real client on Upwork and compensated at market rates. Because each task carries the price a client actually paid, success on SWE-Lancer can be expressed in dollars earned, tying benchmark scores to commercial value in a way few AI benchmarks do.
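Because every task carries a price, a model’s results can be reported as dollars earned alongside the usual pass rate. The sketch below is a minimal illustration, assuming a hypothetical `TaskResult` record holding a payout and a pass/fail flag; the payouts are toy values chosen for illustration, not SWE-Lancer data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """Hypothetical graded task: what the client paid and whether the attempt passed."""
    payout_usd: float
    passed: bool


def pass_rate(results: list[TaskResult]) -> float:
    """Fraction of tasks solved, with every task weighted equally."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def earnings(results: list[TaskResult]) -> float:
    """Total payout collected: only passed tasks earn money."""
    return sum(r.payout_usd for r in results if r.passed)


def earn_rate(results: list[TaskResult]) -> float:
    """Share of the total available payout that was earned."""
    total = sum(r.payout_usd for r in results)
    return earnings(results) / total if total else 0.0


if __name__ == "__main__":
    # Toy values for illustration only; these are not benchmark results.
    results = [
        TaskResult(50, True),
        TaskResult(250, True),
        TaskResult(1_000, False),
        TaskResult(8_000, False),
    ]
    print(f"pass rate: {pass_rate(results):.0%}")  # pass rate: 50%
    print(f"earned: ${earnings(results):,.0f}")    # earned: $300
    print(f"earn rate: {earn_rate(results):.1%}")  # earn rate: 3.2%
```

In this toy case the model solves half the tasks but earns only about 3% of the available money, because the unsolved tasks are the expensive ones. A dollar-weighted score makes that gap visible where a plain pass rate hides it.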

Dual Task Structure: Coding and Management

The benchmark includes two distinct categories:

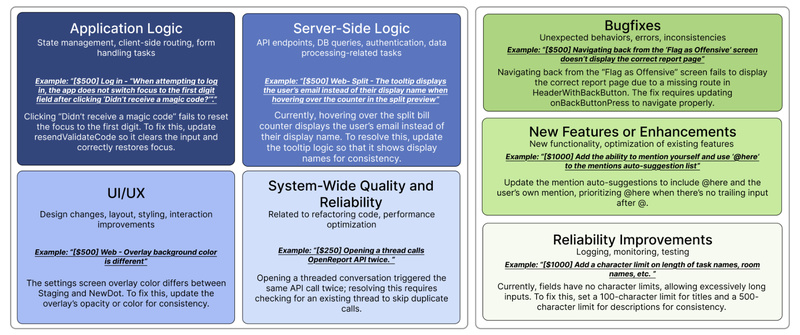

- Independent engineering tasks: Full-stack coding assignments requiring end-to-end implementation.

- Managerial decision tasks: Scenarios where models must evaluate competing technical proposals and choose one, mirroring the decisions engineering leads make in practice.

This dual structure tests not only code generation but also architectural judgment and cost-aware planning.
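One way to picture the two categories is as a tagged union: an independent engineering task asks for a code change, while a managerial task carries a set of competing proposals and expects one to be chosen. The class names, field names, and sample tasks below are hypothetical and chosen for illustration; they are not the schema of the SWE-Lancer dataset.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class EngineeringTask:
    """Hypothetical independent task: the model must produce a working code change."""
    title: str
    payout_usd: float


@dataclass(frozen=True)
class ManagerialTask:
    """Hypothetical managerial task: the model must pick one of several proposals."""
    title: str
    payout_usd: float
    proposals: tuple[str, ...]


Task = Union[EngineeringTask, ManagerialTask]


def summarize(tasks: list[Task]) -> dict[str, tuple[int, float]]:
    """Count tasks and sum their payouts for each category."""
    summary: dict[str, tuple[int, float]] = {}
    for task in tasks:
        kind = "engineering" if isinstance(task, EngineeringTask) else "managerial"
        count, total = summary.get(kind, (0, 0.0))
        summary[kind] = (count + 1, total + task.payout_usd)
    return summary


if __name__ == "__main__":
    # Made-up tasks for illustration only.
    tasks = [
        EngineeringTask("Fix crash on logout", 50),
        EngineeringTask("Add CSV export", 250),
        ManagerialTask("Choose a caching fix", 1_000, ("Proposal A", "Proposal B", "Proposal C")),
    ]
    print(summarize(tasks))
    # {'engineering': (2, 300.0), 'managerial': (1, 1000.0)}
```

Keeping the categories as distinct types makes it explicit that they need different inputs and different grading logic, even though both carry a payout.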

Triple-Verified Evaluation Rigor

All independent tasks are validated using end-to-end tests that have been reviewed and confirmed by three experienced software engineers. For managerial tasks, model choices are scored against the decisions made by the actual engineering managers who were hired for those jobs. This layered verification makes grading more reliable and reduces subjectivity.
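The two grading rules can be stated compactly. In this minimal sketch, an engineering attempt counts as solved only if every end-to-end test passes, and a managerial answer counts as correct only if it matches the proposal the original hiring manager chose. The function names, the test names, and the shape of the test-result dictionary are assumptions for illustration, not the grading harness in the preparedness repository.

```python
def grade_engineering(test_results: dict[str, bool]) -> bool:
    """Solved only if at least one end-to-end test ran and every one of them passed."""
    return bool(test_results) and all(test_results.values())


def grade_managerial(model_choice: int, manager_choice: int) -> bool:
    """Correct only if the model picked the same proposal as the original manager."""
    return model_choice == manager_choice


if __name__ == "__main__":
    # Hypothetical test names; one failing test means the task is not solved.
    print(grade_engineering({"login_flow": True, "logout_flow": False}))  # False
    print(grade_managerial(model_choice=2, manager_choice=2))  # True
```

The all-or-nothing rule for engineering tasks is a design choice in this sketch: a client pays for a fix that works, not for one that passes most of its tests. An empty result set is treated as unsolved so that a run with no tests cannot count as a pass.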

Who Should Use SWE-Lancer—and Why

SWE-Lancer is designed for technical decision-makers who need honest signals about LLM capabilities:

- AI researchers seeking benchmarks that reflect production-level software engineering challenges.

- Engineering leads evaluating whether to integrate LLMs into development workflows.

- Product teams assessing the ROI of AI-assisted coding tools.

- Technical evaluators comparing frontier models beyond toy problems or competitive programming.

By mapping model performance to dollar-value outcomes, SWE-Lancer helps stakeholders move beyond hype and make data-driven adoption decisions.

Solving Real Pain Points in LLM Evaluation

Traditional coding benchmarks often suffer from three critical gaps:

- Lack of economic context: Passing LeetCode-style tests doesn’t translate to shipping maintainable features.

- Synthetic or simplified tasks: Many datasets omit real-world complexities like legacy codebases, ambiguous requirements, or integration constraints.

- No manager-level reasoning: Few benchmarks assess high-level technical judgment, a key skill in professional software roles.

SWE-Lancer directly addresses these issues by embedding tasks in authentic freelance contexts, complete with client expectations, budget constraints, and delivery requirements.

Getting Started with SWE-Lancer

The SWE-Lancer team has open-sourced a public evaluation subset called SWE-Lancer Diamond, along with a unified Docker image to ensure reproducible runs.

Note: The original repository (github.com/openai/SWELancer-Benchmark) has been merged into the openai/preparedness repository. To run evaluations, visit https://github.com/openai/preparedness.

This streamlined setup lowers the barrier to entry, allowing researchers and practitioners to evaluate models consistently without wrestling with environment configuration or data preprocessing.

Current Limitations and Realistic Expectations

Despite its rigor, SWE-Lancer has important boundaries:

- Current performance is low: Even state-of-the-art models fail to solve the majority of tasks, highlighting the significant gap between lab results and real-world readiness.

- Freelance-centric scope: Tasks reflect Upwork-style engagements, which may underrepresent enterprise-scale systems, regulated domains (e.g., healthcare or finance), or long-term maintenance work.

- Repository migration: Evaluations must be run from the updated preparedness repository; the deprecated SWELancer-Benchmark repository contains outdated or incomplete tooling.

These limitations don’t diminish SWE-Lancer’s value; instead, they provide honest guardrails for responsible interpretation.

Summary

SWE-Lancer redefines how we measure LLMs in software engineering—not by academic metrics alone, but by their ability to deliver economically validated solutions in real freelance scenarios. By combining authentic tasks, managerial reasoning, and rigorous verification, it offers a rare window into the practical utility of AI in professional coding. For anyone deciding whether—and how—to deploy LLMs in engineering pipelines, SWE-Lancer provides the grounding that synthetic benchmarks cannot.