If you’ve ever struggled to compare BART, T5, and a custom Chinese language model on summarization, translation, or dialogue generation—only to find yourself wrestling with inconsistent APIs, scattered datasets, or incompatible evaluation scripts—you’re not alone. TextBox 2.0 was built to solve exactly this problem. It’s a modern, PyTorch-based Python library that provides a unified, standardized, and extensible framework for text generation using pre-trained language models (PLMs). Whether you’re a researcher prototyping a new controllable generation method or an engineer deploying a lightweight summarizer in production, TextBox 2.0 streamlines the entire workflow—from data loading to training, evaluation, and even pre-training—without forcing you to reinvent the pipeline each time.

Unlike ad-hoc scripts or fragmented toolkits, TextBox 2.0 treats text generation as a cohesive discipline. It standardizes interfaces across models, tasks, and metrics, so your experiment today can seamlessly scale tomorrow. And thanks to its one-command execution and sensible defaults, you can go from cloning the repo to running meaningful experiments in under five minutes.

Broad Task and Dataset Coverage for Real-World Applications

TextBox 2.0 supports 13 common text generation tasks, including machine translation, abstractive summarization, story generation, style transfer, dialogue response generation, and more. Each task is backed by real-world, widely used datasets—83 in total—so you can validate your approach on benchmarks that matter.

For example, need to test a model on meeting summarization? SAMSum is ready to go. Want to explore narrative coherence in long-form story generation? Try WritingPrompts or ROCStories. Working on Chinese-to-English translation? TextBox includes appropriate datasets with proper tokenization and preprocessing handled automatically.

This breadth eliminates the need to stitch together separate data loaders or rewrite evaluation logic for each new use case. Instead, you declare your task and dataset once, and the library handles the rest consistently—ensuring reproducibility and reducing debugging time.

Extensive Model Support Out of the Box

One of the biggest friction points in text generation research is model integration. Different PLMs come with different tokenizers, input formats, and generation quirks. TextBox 2.0 abstracts this complexity away by providing built-in support for 47 pre-trained language models across eight categories:

- General-purpose (e.g., BART, T5, GPT-2)

- Translation-specific (e.g., mBART, M2M100)

- Chinese-optimized (e.g., Chinese-BERT-wwm, CPMA)

- Dialogue models (e.g., DialoGPT, BlenderBot)

- Controllable generation (e.g., CTRL)

- Distilled or lightweight variants (e.g., DistilBART)

- Prompting-friendly architectures

- Custom modules for extensibility

Switching from BART to a distilled Chinese summarization model requires only changing the --model and --model_path flags—no code rewrite needed. This plug-and-play design dramatically accelerates comparative studies and deployment across language domains.

Streamlined End-to-End Workflow

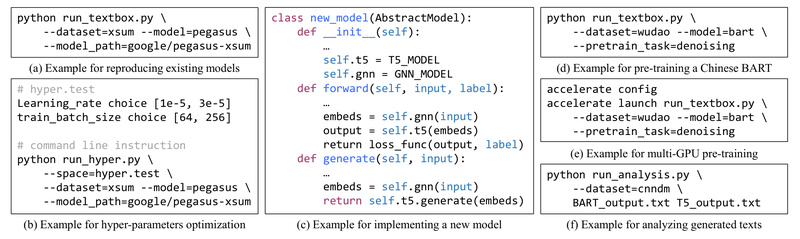

TextBox 2.0’s greatest strength is its unified interface. The entire pipeline—data loading, model instantiation, training, and evaluation—follows a consistent structure. You can run experiments via a simple command-line interface or a clean Python API.

For instance, to fine-tune Facebook’s BART-base on the SAMSum dataset:

python run_textbox.py --model=BART --dataset=samsum --model_path=facebook/bart-base

That’s it. The library automatically downloads (or locates) the dataset, configures the tokenizer, sets up the optimizer, and begins training with sensible defaults. Advanced users can override any parameter via config files or command-line arguments, but beginners aren’t overwhelmed by boilerplate.

This standardization also ensures reproducibility: TextBox 2.0 has been validated to faithfully reproduce results from original papers across multiple models and tasks—a rare and valuable trait in open-source NLP libraries.

Flexible Training and Evaluation for Rapid Experimentation

Beyond fine-tuning, TextBox 2.0 supports pre-training from scratch with four objectives:

- Language modeling

- Masked sequence-to-sequence modeling

- Denoising auto-encoding

- Masked span prediction

It also includes four efficient training strategies:

- Distributed data parallel (for multi-GPU training)

- Efficient decoding (to speed up inference)

- Hyperparameter optimization support

- Repeated experiment utilities (for statistical reliability)

On the evaluation side, the library integrates 17 automatic metrics across four categories—including ROUGE, BLEU, METEOR, BERTScore, and more—plus built-in tools for qualitative analysis and visualization of generated outputs. This lets you assess not just how well a model performs, but why—a critical capability for debugging and iteration.

Limitations and Considerations

While powerful, TextBox 2.0 isn’t a one-size-fits-all solution. A few practical considerations:

- It uses a modified version of Hugging Face Transformers, so installation requires a dedicated conda environment to avoid conflicts.

- Custom datasets must be placed in the

dataset/folder in a specific format (though documentation guides you through this). - It focuses on standard text generation architectures; highly specialized or non-Transformer-based models may require custom integration.

That said, for mainstream PLM-based generation tasks, TextBox 2.0 offers unmatched cohesion and ease of use.

Getting Started in Under 5 Minutes

Ready to try it? Here’s the minimal setup:

- Create a new conda environment:

conda create -n TextBox python=3.8 conda activate TextBox

- Clone and install:

git clone https://github.com/RUCAIBox/TextBox.git cd TextBox bash install.sh

- Run your first experiment:

python run_textbox.py --model=BART --dataset=samsum --model_path=facebook/bart-base

No deep NLP expertise required—just a clear task, a dataset, and a model name.

Summary

TextBox 2.0 eliminates the fragmentation that plagues text generation research and development. By unifying tasks, models, training strategies, and evaluation under a single, well-documented interface, it empowers users to move faster, compare fairly, and reproduce reliably. If your work involves generating text—whether in English, Chinese, or multilingual settings—TextBox 2.0 is a compelling foundation that turns weeks of pipeline engineering into minutes of configuration.