As Large Vision-Language Models (LVLMs) grow increasingly capable—and increasingly complex—evaluating their multimodal reasoning, perception, and reliability has become a significant bottleneck for both researchers and practitioners. Full-scale benchmarks often require thousands of samples, extensive compute, and time-consuming human annotation. Enter TinyLVLM-eHub, a streamlined evaluation framework that delivers comprehensive, human-aligned assessment of LVLMs using just 2.1K image-text pairs.

Developed as a lightweight variant of the broader LVLM-eHub benchmark by OpenGVLab, TinyLVLM-eHub enables rapid offline evaluation of models like Google Bard, LLaVA, Qwen-VL, and others—without needing cloud-scale infrastructure. It’s designed specifically for teams that need to validate, compare, or iterate on LVLMs quickly and efficiently.

Why TinyLVLM-eHub Stands Out

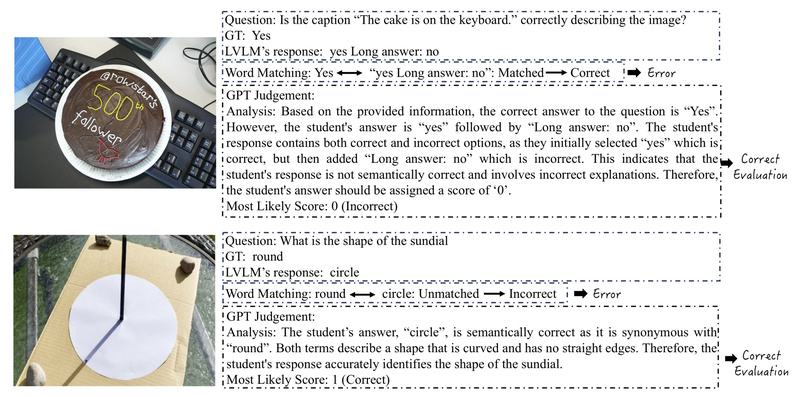

TinyLVLM-eHub isn’t just “smaller”—it’s smarter in how it evaluates. Unlike naive accuracy metrics based on exact word matching, it incorporates the ChatGPT Ensemble Evaluation (CEE) method, which aligns far more closely with human judgment. This means evaluation scores better reflect real-world model quality, especially for open-ended or reasoning-intensive visual questions.

Moreover, the benchmark systematically covers six core multimodal capabilities:

- Visual perception (e.g., object detection, scene understanding)

- Visual reasoning (e.g., spatial or logical inference from images)

- Visual commonsense (e.g., understanding everyday contexts)

- Visual knowledge acquisition (e.g., factual recall from images)

- Object hallucination (measuring when models “see” things not present)

- Embodied intelligence (e.g., navigation or instruction-following in visual environments)

Each capability is probed through a curated subset of 42 standard text-related visual benchmarks, with only 50 samples per dataset—just enough to provide signal without the noise of scale.

Ideal Use Cases for Teams and Practitioners

TinyLVLM-eHub shines in real-world scenarios where speed, reproducibility, and interpretability matter most:

- Model development cycles: Quickly assess whether a fine-tuned LVLM improves over its base version across multiple reasoning dimensions.

- Open-source model selection: Before deploying an LVLM in production, compare its performance against peers using a consistent, public benchmark.

- Academic prototyping: Graduate students or small labs can evaluate novel architectures without access to massive GPU clusters.

- Regression testing: Ensure that model updates don’t degrade performance on core multimodal tasks.

- Fair comparison: All evaluation code, inference outputs, and dataset splits are publicly available, enabling full reproducibility.

Because the dataset is tiny by design, you can run a full evaluation on a single consumer-grade GPU in under an hour—something impossible with full benchmarks like LVLM-eHub (which includes 47 datasets).

Getting Started: Practical Usage

The TinyLVLM-eHub evaluation suite is part of the Multi-Modality Arena GitHub repository. To use it:

-

Set up the environment:

conda create -n arena python=3.10 conda activate arena pip install numpy gradio uvicorn fastapi

-

Access the evaluation materials: The Tiny LVLM-eHub dataset, along with precomputed model predictions and evaluation scripts, is available in the

tiny_lvlm_evaluationdirectory of the repo. -

Run evaluations: Use the provided scripts to score your model on the 2.1K sample benchmark. Results can be directly compared to the public leaderboard, which ranks models like InternVL, Bard, Qwen-VL, and LLaVA-1.5 across the six capability categories.

-

Interpret results: The leaderboard breaks down performance not just by overall score, but by skill type—helping you understand where your model excels or struggles.

For teams integrating custom LVLMs, the repo also includes clear contribution guidelines, including a ModelTester interface for plug-and-play evaluation.

Limitations and Practical Considerations

While TinyLVLM-eHub offers remarkable efficiency, it’s important to understand its scope:

- It’s a diagnostic tool, not a final validator: The “tiny” dataset is ideal for rapid iteration but shouldn’t replace full-scale evaluation before high-stakes deployment.

- Text-related visual tasks only: The benchmark focuses on image + text QA. It does not cover audio, video, 3D, or other multimodal inputs.

- CEE depends on external APIs: The ChatGPT Ensemble Evaluation requires access to an LLM API (like OpenAI’s), which may incur costs or latency in automated pipelines. However, results and evaluation logic are fully documented for offline analysis.

That said, these trade-offs are intentional—TinyLVLM-eHub prioritizes accessibility and alignment over exhaustiveness.

How TinyLVLM-eHub Solves Real Evaluation Pain Points

Traditional LVLM evaluation suffers from three major issues:

- Scale fatigue: Running full benchmarks takes days and terabytes of data.

- Poor alignment with human judgment: Word-overlap metrics often miss nuanced reasoning failures.

- Lack of transparency: Many leaderboards don’t publish inference outputs or evaluation code.

TinyLVLM-eHub directly addresses all three:

- By reducing the benchmark to 2.1K samples, it cuts evaluation time from days to hours.

- By using ChatGPT Ensemble Evaluation, it produces scores that better reflect how humans would judge responses.

- By open-sourcing everything—data, code, model outputs—it enables full reproducibility and trust.

This makes it uniquely suited for individual developers, startups, and research labs that need reliable feedback without enterprise-grade resources.

Summary

TinyLVLM-eHub democratizes high-quality evaluation of Large Vision-Language Models. It offers a fast, reproducible, and human-aligned way to assess multimodal capabilities across six critical dimensions—using less than 0.1% of the data in full benchmarks. Whether you’re comparing open-source models, validating a fine-tuned checkpoint, or prototyping a new architecture, TinyLVLM-eHub provides the signal you need without the computational burden.

With its public leaderboard, transparent methodology, and minimal footprint, it’s an essential tool for any team working with vision-language models in 2026 and beyond.