Video generation using diffusion models has long suffered from a crippling bottleneck: speed. Even the most advanced models can take tens of minutes, or even hours, to produce a short clip, which makes them impractical for real-time applications, rapid iteration, or deployment on consumer hardware. TurboDiffusion tackles this problem directly by accelerating diffusion-based video generation by roughly 100 to 200 times. On a single RTX 5090 GPU, it reduces generation time from 184 seconds to just 1.9 seconds while keeping visual quality competitive.

Developed by researchers at THU-ML, TurboDiffusion isn’t just another optimization trick. It’s a cohesive framework that combines attention acceleration, step distillation, and quantization to deliver large speedups while keeping fidelity competitive. Whether you’re building AI-powered creative tools, prototyping multimodal systems, or deploying video generation at the edge, TurboDiffusion makes high-speed, high-quality video synthesis feasible on a single GPU.

Why Speed Matters in AI Video Generation

Traditional diffusion-based video models are computationally monstrous. Generating a 5-second, 480p clip with a 1.3B-parameter model like Wan2.1-T2V can take over three minutes on high-end hardware. For a 14B-parameter model at 720p (Wan2.2-I2V), that jumps to roughly 76 minutes. This latency kills interactivity, hampers creative workflows, and inflates cloud inference costs, making real-world adoption difficult.

TurboDiffusion changes the game. By compressing the diffusion process into just 1–4 sampling steps and making each step cheaper with faster attention and quantized layers, it brings generation time down from minutes to seconds. For context:

- Wan2.1-T2V-1.3B-480P: 184s → 1.9s

- Wan2.1-T2V-14B-480P: 1676s → 9.9s

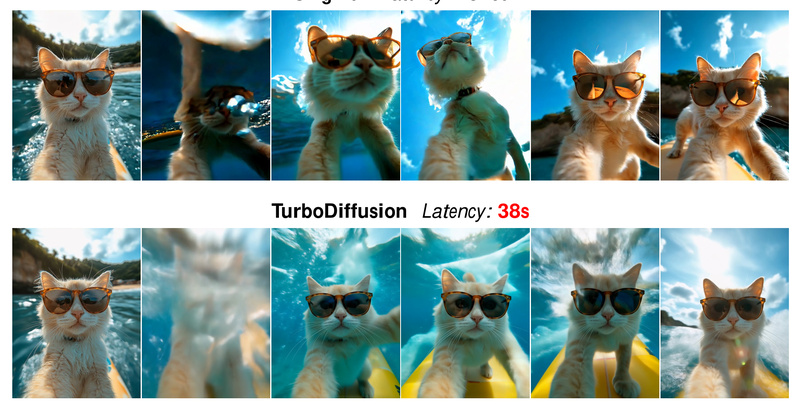

- Wan2.2-I2V-14B-720P: 4549s → 38s
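The headline factors follow directly from the reported times. A few lines of Python make the arithmetic explicit; the dictionary simply restates the three benchmarks listed above.

```python
# Reported generation times in seconds (baseline, TurboDiffusion), restated from the list above.
BENCHMARKS = {
    "Wan2.1-T2V-1.3B-480P": (184.0, 1.9),
    "Wan2.1-T2V-14B-480P": (1676.0, 9.9),
    "Wan2.2-I2V-14B-720P": (4549.0, 38.0),
}


def speedups(benchmarks):
    """Return the baseline/accelerated time ratio for each benchmark."""
    return {name: base / fast for name, (base, fast) in benchmarks.items()}


if __name__ == "__main__":
    for name, factor in speedups(BENCHMARKS).items():
        base_min = BENCHMARKS[name][0] / 60
        print(f"{name}: {factor:.1f}x (baseline {base_min:.1f} min)")
```

The ratios come out to about 97×, 169×, and 120×, and the 720p baseline of 4549 s is roughly 76 minutes.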

This isn’t a marginal improvement; it unlocks interactive and scalable AI video applications that were previously impractical.

Core Acceleration Techniques

TurboDiffusion achieves its dramatic speedups through three synergistic innovations:

1. Attention Acceleration with SageAttention and SLA

The attention mechanism in diffusion transformers is a major computational hotspot. TurboDiffusion replaces standard attention with two optimized alternatives:

- SageAttention: An 8-bit, plug-and-play attention kernel. Rather than quantizing model weights, it quantizes the query and key inputs of the attention-score product (QKᵀ) and smooths K to keep channel-wise outliers from degrading accuracy.

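- Sparse-Linear Attention (SLA): A trainable attention variant that computes exact softmax attention only for the most important blocks of the attention map (e.g., the top 10–15%) and handles the remaining blocks with a much cheaper linear-attention branch. This cuts attention FLOPs sharply with little reported loss in quality.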
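The core idea behind SageAttention’s INT8 score computation can be illustrated with a minimal NumPy sketch. It is not SageAttention’s API or kernel. The function names are hypothetical, the scales are per token rather than the finer-grained per-block scales a real kernel uses, and the sketch stops at the attention probabilities instead of also handling the P·V product. It shows why smoothing K matters when every key carries a shared channel offset.

```python
import numpy as np


def quantize_int8_rows(x):
    """Symmetric INT8 quantization with one scale per row (token)."""
    scale = np.abs(x).max(axis=-1, keepdims=True) / 127.0
    scale = np.where(scale == 0, 1.0, scale)
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def softmax(s):
    s = s - s.max(axis=-1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)


def int8_attention_probs(Q, K, smooth_k=True):
    """Attention probabilities with QK^T computed from INT8 Q and K."""
    d = Q.shape[-1]
    if smooth_k:
        # Subtracting the mean key (over tokens) shifts each score row by the
        # constant q_i . mean(K), which softmax cancels, but it removes shared
        # channel offsets that would otherwise inflate K's quantization scale.
        K = K - K.mean(axis=0, keepdims=True)
    q8, sq = quantize_int8_rows(Q)
    k8, sk = quantize_int8_rows(K)
    # Integer matmul with int32 accumulation, then dequantize with both scales.
    scores = (q8.astype(np.int32) @ k8.astype(np.int32).T) * sq * sk.T
    return softmax(scores / np.sqrt(d))


rng = np.random.default_rng(0)
Q = rng.standard_normal((128, 64))
K = rng.standard_normal((128, 64))
K[:, 0] += 50.0  # a channel-wise outlier shared by every key
ref = softmax(Q @ K.T / np.sqrt(64))
err_smooth = np.abs(int8_attention_probs(Q, K) - ref).max()
err_plain = np.abs(int8_attention_probs(Q, K, smooth_k=False) - ref).max()
print(f"max error with smoothing {err_smooth:.4f}, without {err_plain:.4f}")
```

Without smoothing, the outlier channel stretches every key’s INT8 scale, so the remaining channels lose most of their precision; with smoothing the error stays small.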

When SageAttention and SLA are combined as SageSLA, attention computation is accelerated further while coherence in motion and detail is maintained.
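A toy NumPy version of the sparse-plus-linear split can make SLA concrete. This is a hypothetical sketch, not the SLA implementation. It scores blocks with mean-pooled queries and keys, treats every non-critical block as “marginal” (SLA also skips negligible blocks entirely), and uses an elu+1 feature map for the linear branch. It also simply adds the two branch outputs, whereas SLA learns a projection on the linear branch and is fine-tuned so the combination tracks full attention. Scores are computed densely and then masked, so the sketch demonstrates the math rather than the savings.

```python
import numpy as np


def softmax(s):
    s = s - s.max(axis=-1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)


def elu1(x):
    # Positive feature map elu(x) + 1 for the linear branch (an assumption).
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def sla_like_attention(Q, K, V, block=16, topk=0.125):
    """Exact softmax on the top-k key blocks per query block, linear attention elsewhere."""
    n, d = Q.shape
    nb = n // block
    assert nb * block == n, "sequence length must be a multiple of block"
    # 1) Score blocks cheaply with mean-pooled queries and keys.
    qp = Q.reshape(nb, block, d).mean(axis=1)
    kp = K.reshape(nb, block, d).mean(axis=1)
    p_blk = softmax(qp @ kp.T / np.sqrt(d))
    # 2) Mark the top-k fraction of key blocks in each row as critical.
    k_keep = max(1, int(round(topk * nb)))
    top = np.argsort(-p_blk, axis=1)[:, :k_keep]
    critical = np.zeros((nb, nb), dtype=bool)
    np.put_along_axis(critical, top, True, axis=1)
    # 3) Sparse branch: softmax attention restricted to critical blocks.
    mask = np.kron(critical.astype(int), np.ones((block, block), dtype=int)).astype(bool)
    scores = np.where(mask, Q @ K.T / np.sqrt(d), -np.inf)
    o_sparse = softmax(scores) @ V
    # 4) Linear branch over the remaining blocks: per key block, precompute
    #    phi(K)^T V and the sum of phi(K), then aggregate per query block.
    fk = elu1(K).reshape(nb, block, d)
    kv = np.einsum("jbd,jbe->jde", fk, V.reshape(nb, block, -1))
    ksum = fk.sum(axis=1)
    marginal = (~critical).astype(Q.dtype)
    h = np.einsum("ij,jde->ide", marginal, kv)
    z = marginal @ ksum
    fq = elu1(Q).reshape(nb, block, d)
    num = np.einsum("ibd,ide->ibe", fq, h)
    den = np.einsum("ibd,id->ib", fq, z)[..., None] + 1e-6
    o_linear = (num / den).reshape(n, -1)
    return o_sparse + o_linear


rng = np.random.default_rng(0)
Q, K, V = rng.standard_normal((3, 128, 32))
full = softmax(Q @ K.T / np.sqrt(32)) @ V
out = sla_like_attention(Q, K, V, block=16, topk=0.125)
print(out.shape, np.abs(out - full).max())
```

With `topk=1.0` every block is critical, the linear branch contributes nothing, and the result matches full attention; smaller `topk` values trade exactness for less softmax work.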

2. Step Distillation via rCM

Instead of running dozens of denoising steps, TurboDiffusion uses rCM (score-regularized continuous-time consistency models) to distill the full diffusion process into just 1–4 steps. This step distillation preserves the model’s ability to generate diverse, high-fidelity outputs while cutting the number of network evaluations, and therefore inference time, by roughly an order of magnitude or more.
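Few-step sampling with a consistency-distilled model follows a simple loop: jump from noise to a clean estimate in one network call, re-noise to a smaller noise level, and repeat. The sketch below assumes a variance-exploding noise model, x = x₀ + σ·ε, and a hypothetical four-level σ schedule starting at the default sigma_max of 80. rCM’s actual parameterization and schedule may differ, and `toy_denoiser` merely stands in for the distilled network.

```python
import numpy as np


def few_step_consistency_sample(denoise, shape, sigmas, seed=0):
    """Multistep consistency sampling: each step maps a noisy sample straight
    to a clean estimate, then re-noises it to the next (smaller) sigma."""
    rng = np.random.default_rng(seed)
    x = sigmas[0] * rng.standard_normal(shape)  # pure noise at sigma_max
    x0 = x
    for i, sigma in enumerate(sigmas):
        x0 = denoise(x, sigma)  # one network evaluation per step
        if i + 1 < len(sigmas):
            x = x0 + sigmas[i + 1] * rng.standard_normal(shape)
    return x0


calls = []


def toy_denoiser(x, sigma):
    # Hypothetical stand-in for a distilled network whose "data" is the
    # constant 0.5, so the ideal consistency function always returns 0.5.
    calls.append(sigma)
    return np.full_like(x, 0.5)


video = few_step_consistency_sample(toy_denoiser, (2, 3, 4), sigmas=[80.0, 10.0, 1.0, 0.1])
print(len(calls), "network evaluations")
```

The cost of sampling is the number of `denoise` calls, so going from dozens of steps to 4 shrinks it proportionally.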

3. W8A8 Quantization

All linear layers in TurboDiffusion are quantized to 8-bit weights and activations (W8A8). This reduces memory bandwidth pressure, shrinks model size, and accelerates matrix multiplication—particularly effective on modern GPUs with hardware support for INT8 operations. Quantized checkpoints are provided for consumer GPUs like the RTX 4090/5090, while unquantized versions remain available for datacenter-class hardware (e.g., H100).
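The W8A8 scheme for a single linear layer can be sketched in NumPy. Assumptions: symmetric quantization with per-output-channel weight scales and per-token activation scales computed on the fly. TurboDiffusion’s actual scale granularity and fused INT8 GPU kernels may differ, and the helper names are hypothetical. The integer product is accumulated in int32 and rescaled to floating point by the two scales.

```python
import numpy as np


def quantize_sym_int8(x, axis):
    """Symmetric INT8 quantization with one scale per slice along `axis`."""
    scale = np.abs(x).max(axis=axis, keepdims=True) / 127.0
    scale = np.where(scale == 0, 1.0, scale)
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def w8a8_linear(x, w_q, w_scale, bias=None):
    """y = x @ W^T using INT8 weights (scale per output channel) and
    INT8 activations (scale per token), accumulated in int32."""
    x_q, x_scale = quantize_sym_int8(x, axis=1)           # x_scale: (tokens, 1)
    acc = x_q.astype(np.int32) @ w_q.astype(np.int32).T  # (tokens, out), int32
    y = acc * x_scale * w_scale.T                         # w_scale: (out, 1)
    return y if bias is None else y + bias


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 256))
w = rng.standard_normal((128, 256)) * 0.05
w_q, w_scale = quantize_sym_int8(w, axis=1)  # done once, offline
y = w8a8_linear(x, w_q, w_scale)
y_ref = x @ w.T
rel_err = np.linalg.norm(y - y_ref) / np.linalg.norm(y_ref)
print(f"relative error {rel_err:.4f}; weights {w_q.nbytes} B as INT8 vs {w.size * 2} B as BF16")
```

Weight storage halves relative to a 16-bit format, and the heavy matrix multiply runs entirely in integer arithmetic.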

Practical Use Cases

TurboDiffusion excels in scenarios where speed, accessibility, and single-GPU feasibility are critical:

- Creative prototyping: Generate multiple video variations in seconds for ad campaigns, concept art, or film pre-visualization.

- AI content platforms: Power real-time video generation features in apps without relying on costly multi-GPU clusters.

- Research & development: Rapidly iterate on prompt engineering, motion control, or multimodal alignment without waiting hours per sample.

- Edge deployment: Run high-quality video synthesis on workstations or local servers, avoiding cloud dependency.

The framework supports both text-to-video (T2V) and image-to-video (I2V), enabling rich creative control—from cinematic prompts to animating still images with dynamic motion.

Getting Started in Minutes

TurboDiffusion is designed for immediate usability:

- Install via pip or from source (requires Python ≥3.9, PyTorch ≥2.7.0):

pip install turbodiffusion --no-build-isolation

- Download checkpoints:

  - For RTX 4090/5090: use quantized models (e.g., TurboWan2.1-T2V-1.3B-480P-quant.pth).

  - For H100: use unquantized models (omit the -quant suffix).

  Also download the shared components: the VAE and the umT5 text encoder.

- Run inference with a single command:

python turbodiffusion/inference/wan2.1_t2v_infer.py --model Wan2.1-1.3B --dit_path checkpoints/TurboWan2.1-T2V-1.3B-480P-quant.pth --prompt "A stylish woman walks down a Tokyo street..." --resolution 480p --num_steps 4 --quant_linear --attention_type sagesla
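Before downloading multi-gigabyte checkpoints, a short Python preflight check can confirm the stated requirements and suggest which checkpoint variant to fetch. This is a hypothetical helper, not part of TurboDiffusion. The 40 GB cut-off between quantized (RTX 4090/5090) and unquantized (H100-class) checkpoints is an assumption, and the file name follows the naming pattern shown above.

```python
import re
import sys

MIN_PYTHON = (3, 9)
MIN_TORCH = (2, 7, 0)
# Assumption: GPUs with at least this much memory (e.g., H100) use unquantized checkpoints.
UNQUANTIZED_MIN_GB = 40


def parse_version(v):
    """Parse strings like '2.7.0+cu128' or '2.8' into a 3-tuple of ints."""
    m = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", v)
    if m is None:
        raise ValueError(f"unrecognized version string: {v!r}")
    return tuple(int(g or 0) for g in m.groups())


def checkpoint_name(base, vram_gb):
    """Pick the '-quant' variant for consumer-class memory sizes."""
    suffix = "" if vram_gb >= UNQUANTIZED_MIN_GB else "-quant"
    return f"{base}{suffix}.pth"


def preflight(base="TurboWan2.1-T2V-1.3B-480P"):
    if sys.version_info[:2] < MIN_PYTHON:
        return "Python 3.9+ required"
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if parse_version(torch.__version__) < MIN_TORCH:
        return f"PyTorch >= 2.7.0 required, found {torch.__version__}"
    if not torch.cuda.is_available():
        return "No CUDA GPU detected"
    vram_gb = torch.cuda.get_device_properties(0).total_memory / 1024**3
    return f"Suggested checkpoint: {checkpoint_name(base, vram_gb)}"


if __name__ == "__main__":
    print(preflight())
```

If the suggestion is a `-quant` file, keep `--quant_linear` in the inference command, as in the example above.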

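Options such as resolution (480p/720p), aspect ratio, and the number of sampling steps are set through command-line flags. Keep the flags consistent with your checkpoint and hardware: the example above pairs a quantized (-quant) checkpoint with --quant_linear and the SageSLA attention kernel (--attention_type sagesla).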
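When scripting batch runs, building the command as an argument list avoids shell-quoting problems with long prompts. The helper below is a hypothetical sketch that uses only the flags shown in the example command. Its 1–4 step check and its rule of adding `--quant_linear` for `-quant` checkpoints are assumptions drawn from this article, not documented behavior of the script.

```python
import shlex
import sys

SCRIPT = "turbodiffusion/inference/wan2.1_t2v_infer.py"


def build_t2v_command(prompt, dit_path, model="Wan2.1-1.3B", resolution="480p",
                      num_steps=4, attention_type="sagesla"):
    """Return an argv list for the T2V inference script (hypothetical helper)."""
    if not 1 <= num_steps <= 4:
        raise ValueError("distilled checkpoints target 1-4 sampling steps")
    if resolution not in ("480p", "720p"):
        raise ValueError(f"unsupported resolution: {resolution}")
    cmd = [
        sys.executable, SCRIPT,
        "--model", model,
        "--dit_path", dit_path,
        "--prompt", prompt,
        "--resolution", resolution,
        "--num_steps", str(num_steps),
        "--attention_type", attention_type,
    ]
    # Quantized checkpoints are paired with --quant_linear, as in the example command.
    if dit_path.endswith("-quant.pth"):
        cmd.append("--quant_linear")
    return cmd


cmd = build_t2v_command(
    "A stylish woman walks down a Tokyo street",
    "checkpoints/TurboWan2.1-T2V-1.3B-480P-quant.pth",
)
print(shlex.join(cmd))
# To launch from the repository root: subprocess.run(cmd, check=True)
```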

Limitations and Considerations

While TurboDiffusion dramatically improves speed, users should note:

- Hardware: RTX 4090/5090 (24GB+ VRAM) recommended for quantized models; H100 for unquantized.

- Checkpoint maturity: The team notes that models and documentation are still being refined—quality may improve in future releases.

- End-to-end timing: Reported speedups exclude text encoding and VAE decoding, which add modest overhead.

- Quality trade-offs: Using a higher sigma_max (e.g., 1600 instead of the default 80) can enhance visual fidelity but reduces motion diversity.
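Because text encoding and VAE decoding are excluded from the reported timings, the speedup a user actually observes is smaller once that fixed overhead T_o is added back to both sides: (T_base + T_o) / (T_turbo + T_o). The overhead values in the loop below are hypothetical placeholders, not measurements.

```python
def end_to_end_speedup(base_s, turbo_s, overhead_s):
    """Speedup after adding the same unaccelerated overhead (e.g., text
    encoding plus VAE decoding) to both the baseline and accelerated times."""
    return (base_s + overhead_s) / (turbo_s + overhead_s)


if __name__ == "__main__":
    for overhead in (0.0, 0.5, 2.0):  # hypothetical overheads in seconds
        factor = end_to_end_speedup(184.0, 1.9, overhead)
        print(f"{overhead:>4}s overhead -> {factor:.1f}x")
```

The shorter the accelerated diffusion stage becomes, the larger the share of total latency taken by the stages it does not accelerate.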

Despite these nuances, TurboDiffusion sets a new standard for practical, high-performance AI video generation.

Summary

TurboDiffusion solves the most pressing barrier to real-world adoption of diffusion-based video models: unbearable inference latency. By integrating SageAttention, SLA, rCM distillation, and W8A8 quantization into a unified, easy-to-use framework, it delivers 100–200× speedups on a single consumer GPU while keeping visual quality competitive. For developers, researchers, and creators seeking to build responsive, scalable, or locally deployable AI video systems, TurboDiffusion is a breakthrough worth adopting today.