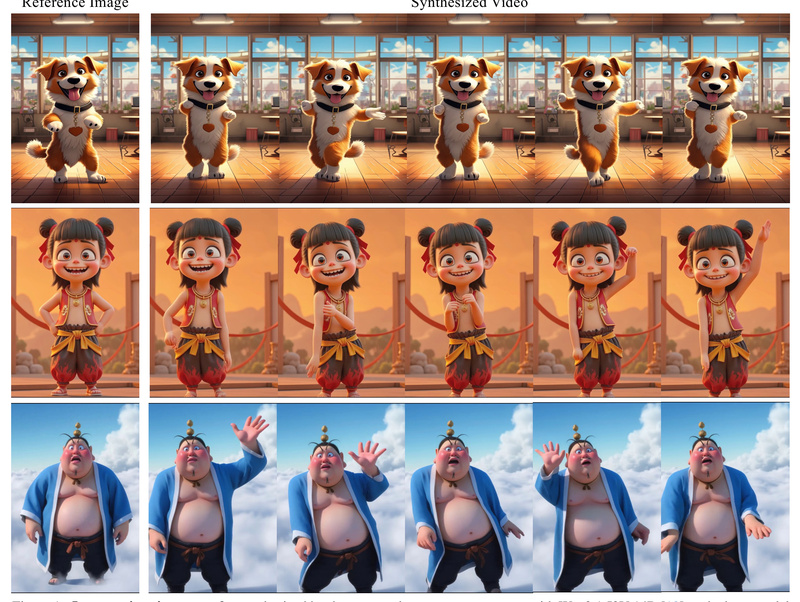

Animating a static human image into a realistic, temporally coherent video used to require massive datasets, complex pipelines, or retraining large generative models from scratch. UniAnimate-DiT changes that. Built on the powerful Wan2.1-14B-I2V video diffusion transformer, UniAnimate-DiT enables high-quality human image animation from just one reference image and a sequence of driving poses—without modifying the core foundation model.

What makes this approach practical for real-world teams is its balance of visual fidelity, computational efficiency, and deployment flexibility. Whether you’re building virtual avatars, interactive characters, or personalized video content, UniAnimate-DiT delivers professional-grade results while keeping training costs low and inference viable on a single high-end GPU.

How It Works: Leveraging a Pretrained Video Diffusion Transformer

At its core, UniAnimate-DiT is an extension of the Wan2.1-14B-I2V model—a state-of-the-art DiT (Diffusion Transformer)-based image-to-video generator. Rather than discarding or retraining this massive 14-billion-parameter model, UniAnimate-DiT preserves its original generative capabilities and instead introduces minimal, targeted adaptations.

This is achieved through Low-Rank Adaptation (LoRA), which fine-tunes only a small subset of parameters. The result? You retain the foundation model’s ability to generate rich textures, realistic lighting, and natural motion while reducing memory overhead and training time dramatically.
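The low-rank idea can be sketched in a few lines. This is a minimal, dependency-free illustration of a generic LoRA layer, not the project's actual code: the toy sizes, the `matmul` helper, and the `lora_forward` name are all assumptions made for the example.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, used only to keep the sketch dependency-free."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x: Matrix, w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Compute x @ W + (alpha / r) * (x @ A) @ B, where W stays frozen."""
    rank = len(b)  # A is (d_in, r), B is (r, d_out)
    base = matmul(x, w)
    update = matmul(matmul(x, a), b)
    scale = alpha / rank
    return [[bv + scale * uv for bv, uv in zip(b_row, u_row)]
            for b_row, u_row in zip(base, update)]


# Toy sizes: d_in=3, d_out=2, rank=1 (illustrative only).
x = [[1.0, 2.0, 3.0]]
w = [[0.5, -1.0], [0.0, 2.0], [1.0, 1.0]]  # frozen base weight
a = [[0.1], [0.2], [0.3]]                  # trainable down-projection
b_zero = [[0.0, 0.0]]                      # trainable up-projection, zero-initialized
b_trained = [[1.0, -1.0]]                  # made-up values standing in for a trained B

print(lora_forward(x, w, a, b_zero, alpha=1.0))     # same as the frozen layer's output
print(lora_forward(x, w, a, b_trained, alpha=1.0))  # frozen output plus a low-rank shift
```

Because the up-projection starts at zero, the adapted layer initially reproduces the frozen model exactly, which is why the foundation model's generative behavior is preserved at the start of fine-tuning.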

To inject motion, UniAnimate-DiT uses a lightweight 3D convolutional pose encoder that processes the driving pose sequence (e.g., extracted from a reference video). Crucially, the system also encodes the pose of the reference image itself, enabling better alignment between the static input and the animated output—leading to fewer artifacts and smoother transitions.
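To get a feel for what a 3D convolutional encoder does to a pose sequence, the sketch below applies the standard convolution output-size formula along the time, height, and width axes. The layer stack, the 81-frame input, and the target grid are hypothetical choices for illustration; the text above does not specify the real encoder's layers.

```python
from typing import Dict, List, Tuple

Shape3D = Tuple[int, int, int]  # (frames, height, width)


def conv3d_out_shape(in_shape: Shape3D, kernel: Shape3D, stride: Shape3D,
                     padding: Shape3D) -> Shape3D:
    """Standard conv output size per axis (dilation 1): (n + 2p - k) // s + 1."""
    t, h, w = (
        (n + 2 * p - k) // s + 1
        for n, k, s, p in zip(in_shape, kernel, stride, padding)
    )
    return (t, h, w)


# Hypothetical stack: every layer uses a 3x3x3 kernel with padding 1.
# Strides are picked so time shrinks ~4x and space shrinks 16x overall.
HYPOTHETICAL_STRIDES: List[Shape3D] = [(1, 2, 2), (2, 2, 2), (2, 2, 2), (1, 2, 2)]


def trace_shapes(in_shape: Shape3D) -> List[Shape3D]:
    """Return the feature-map shape after each hypothetical layer."""
    shapes = [in_shape]
    for stride in HYPOTHETICAL_STRIDES:
        shapes.append(conv3d_out_shape(shapes[-1], (3, 3, 3), stride, (1, 1, 1)))
    return shapes


# Assumed input: 81 pose frames rendered at 480 x 832 (height x width).
for shape in trace_shapes((81, 480, 832)):
    print(shape)
```

The point of the sketch is only that strided 3D convolutions compress the pose sequence in time and space at once, so the pose features can be brought onto the same grid as the video model's internal representation.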

Key Advantages for Technical Decision-Makers

Drastically Reduced Training Cost with LoRA

Full fine-tuning of a 14B-parameter video diffusion model is prohibitively expensive. UniAnimate-DiT sidesteps this by using LoRA, which adds only a few million trainable parameters. This allows teams to adapt the model to custom motion styles or domains using modest datasets (e.g., ~1,000 videos) and a single A100/A800 GPU.
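The saving comes from simple arithmetic: a rank-r adapter on a d_in × d_out weight trains r × (d_in + d_out) values instead of d_in × d_out. The sketch below works through that count; the layer size and rank are illustrative assumptions, not the model's actual configuration.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable values when the whole weight matrix is fine-tuned."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable values for a rank-`rank` adapter: A is (d_in, r), B is (r, d_out)."""
    return rank * (d_in + d_out)


# Illustrative numbers only: a square 1024 x 1024 projection with rank 8.
d_in, d_out, rank = 1024, 1024, 8
full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full fine-tuning: {full:,} values")
print(f"LoRA adapter:     {lora:,} values ({lora / full:.2%} of full)")
```

The fraction shrinks further as layers get wider, since the full count grows quadratically with width while the adapter count grows linearly.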

Efficient Inference with Real-World Speed Optimizations

Generating high-res video is often slow, but UniAnimate-DiT includes multiple practical speedups:

- Teacache: Enables ~4× faster inference by caching intermediate features. While it may slightly affect visual quality in edge cases, it’s ideal for rapid prototyping or seed selection.

- Configurable VRAM usage: Adjust `num_persistent_param_in_dit` to trade speed for memory (e.g., 14 GB instead of 23 GB for 480p).

- Optional classifier-free guidance: Setting `cfg_scale=1.0` disables guidance for a 2× speedup with minimal quality loss.
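The 2× figure for disabling guidance follows from how classifier-free guidance is computed. A minimal sketch, assuming the usual formulation `uncond + scale * (cond - uncond)`: at a scale of 1.0 the result equals the conditional prediction, so the unconditional forward pass can be skipped. `ToyModel` and `denoise_step` are hypothetical stand-ins, not names from the codebase.

```python
from typing import List

Vector = List[float]


class ToyModel:
    """Stand-in for the denoiser; counts how many forward passes are made."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, latent: Vector, condition: float) -> Vector:
        self.calls += 1
        return [value + condition for value in latent]


def denoise_step(model: ToyModel, latent: Vector, condition: float,
                 null_condition: float, cfg_scale: float) -> Vector:
    """One guided prediction; skips the unconditional pass when cfg_scale is 1.0."""
    cond = model(latent, condition)
    if cfg_scale == 1.0:
        return cond  # uncond + 1.0 * (cond - uncond) is just cond
    uncond = model(latent, null_condition)
    return [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]


guided_model = ToyModel()
denoise_step(guided_model, [1.0, 2.0], 0.5, 0.0, cfg_scale=5.0)
unguided_model = ToyModel()
denoise_step(unguided_model, [1.0, 2.0], 0.5, 0.0, cfg_scale=1.0)
print(guided_model.calls, unguided_model.calls)
```

Halving the number of forward passes per denoising step is where the speedup comes from; the cost is losing the extra push toward the conditioning signal that guidance provides.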

On a single A800 GPU, you can generate 5-second 480p videos in ~3 minutes and 720p videos in ~13 minutes—remarkable for a model of this scale.

Seamless 480p-to-720p Generalization—No Extra Upscaling Needed

The model was trained exclusively on 832×480 video clips. Yet, it generalizes robustly to 1280×720 resolution during inference without any architectural changes or post-processing. This eliminates the need for separate super-resolution modules and ensures consistent visual quality at higher resolutions.
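Running at 720p is not free even without an upscaler, because the transformer sees more tokens per frame. The rough count below assumes 8× spatial downsampling in the VAE and 2×2 patching in the transformer; both factors are assumptions for illustration and are not stated above.

```python
def tokens_per_frame(width: int, height: int, vae_stride: int = 8, patch: int = 2) -> int:
    """Spatial tokens per latent frame under the assumed downsampling factors."""
    cell = vae_stride * patch  # pixels covered by one token along each axis
    if width % cell or height % cell:
        raise ValueError(f"resolution must be a multiple of {cell} in both dimensions")
    return (width // cell) * (height // cell)


low = tokens_per_frame(832, 480)
high = tokens_per_frame(1280, 720)
ratio = high / low
print(f"832x480:  {low} tokens per frame")
print(f"1280x720: {high} tokens per frame")
print(f"token ratio: {ratio:.2f}x, pairwise-attention ratio: {ratio ** 2:.2f}x")
```

Under these assumptions 720p carries a bit more than twice the tokens of 480p, and attention cost grows faster than linearly in token count, which is consistent with 720p needing noticeably more memory and time.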

Practical Use Cases

UniAnimate-DiT is ideal for applications where you have a character image and want to bring it to life with expressive motion:

- Animated avatars for customer service bots or virtual assistants

- Dynamic game NPCs driven by motion capture or predefined poses

- Personalized marketing videos using customer-uploaded photos

- Content creation tools for social media influencers or digital artists

All that’s required is a single reference image and a pose sequence—making it far more accessible than methods requiring 3D reconstruction, multi-view inputs, or full-body scans.

Getting Started: A Straightforward Workflow

Setting up UniAnimate-DiT involves four clear steps:

- Install dependencies via Conda and pip, including PyTorch 2.5+ and the UniAnimate-DiT package.

- Download two model components:

  - The base Wan2.1-14B-I2V-720P model (from Hugging Face or ModelScope)

  - The UniAnimate-DiT LoRA weights (lightweight adapter)

- Align poses between your reference image and driving video using the provided `run_align_pose.py` script, which normalizes scale and orientation.

- Run inference with preconfigured scripts for 480p, 720p, or long videos, with easy toggles for speed/memory trade-offs.

The codebase is built on DiffSynth-Studio, ensuring modularity and ease of integration into existing pipelines.

Scalability for Production Environments

While usable on a single GPU, UniAnimate-DiT also supports multi-GPU inference via Unified Sequence Parallel (USP), leveraging the xfuser framework for distributed generation. This is useful for batch processing and for workloads where per-clip latency matters.
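The general idea behind sequence parallelism is that each GPU holds only a slice of the token sequence. The sketch below shows just that partitioning step; it is not the xfuser API, `shard_bounds` is a hypothetical helper, and real implementations also exchange data between GPUs so attention can still see the whole sequence.

```python
from typing import List, Tuple


def shard_bounds(num_tokens: int, world_size: int) -> List[Tuple[int, int]]:
    """Split [0, num_tokens) into `world_size` contiguous, near-equal slices."""
    if world_size < 1:
        raise ValueError("world_size must be at least 1")
    base, extra = divmod(num_tokens, world_size)
    bounds = []
    start = 0
    for rank in range(world_size):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks take one more
        bounds.append((start, start + size))
        start += size
    return bounds


# Illustrative sequence length; the real value depends on resolution and clip length.
for rank, (lo, hi) in enumerate(shard_bounds(75600, 4)):
    print(f"rank {rank}: tokens [{lo}, {hi}) -> {hi - lo} tokens")
```

Splitting the sequence this way reduces per-GPU activation memory and compute roughly in proportion to the number of GPUs, at the price of extra communication.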

Long-video generation is also supported, though note that teacache acceleration for 720p long videos may cause background inconsistencies—a known limitation the team is actively addressing.
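Why caching can introduce inconsistencies is easier to see in a toy version. The sketch below is a simplified illustration of the caching idea, assuming a scalar "signal" that measures how much the model input changed between denoising steps; the class name, threshold, and signal are assumptions, and the actual teacache heuristic is more involved.

```python
from typing import Callable, Optional


class StepCache:
    """Reuse the last computed residual while accumulated input change stays small."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.accumulated = 0.0
        self.prev_signal: Optional[float] = None
        self.cached_residual: Optional[float] = None
        self.computed_steps = 0

    def step(self, x: float, signal: float, block: Callable[[float], float]) -> float:
        if self.prev_signal is None or self.cached_residual is None:
            self.accumulated = float("inf")  # nothing cached yet: force a real pass
        else:
            rel_change = abs(signal - self.prev_signal) / (abs(self.prev_signal) + 1e-8)
            self.accumulated += rel_change
        self.prev_signal = signal

        if self.accumulated < self.threshold:
            return x + self.cached_residual  # cheap path: approximate the output

        out = block(x)  # expensive path: run the real model block
        self.cached_residual = out - x
        self.accumulated = 0.0
        self.computed_steps += 1
        return out


cache = StepCache(threshold=0.05)
signals = [1.0, 1.01, 1.02, 1.5, 1.51]
outputs = [cache.step(1.0, s, lambda v: v * 2.0) for s in signals]
print(f"real passes: {cache.computed_steps} of {len(signals)} steps")
```

Every reused residual is an approximation, so errors can build up over many steps; a longer, higher-resolution generation gives those errors more room to show up as visible drift.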

Current Limitations and Realistic Expectations

UniAnimate-DiT is powerful but not magic. Keep these constraints in mind:

- GPU memory: Requires 23 GB VRAM for 480p and 36 GB for 720p under default settings (reducible with parameter tuning).

- Teacache trade-offs: Acceleration may slightly degrade visual consistency, especially in long 720p sequences.

- Research focus: The project is released for academic and research use, with a clear disclaimer against misuse.

- Motion dependency: Animation quality hinges on clean, aligned pose inputs—garbage in, garbage out.
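The memory figures above can be turned into a quick pre-flight check. This sketch uses only the default-setting numbers quoted in the list (23 GB for 480p, 36 GB for 720p); the function name and the headroom parameter are assumptions, and reduced-memory settings would lower the thresholds.

```python
from typing import Dict, List

# Default-setting VRAM requirements quoted above, in GB.
DEFAULT_VRAM_GB: Dict[str, float] = {"480p": 23.0, "720p": 36.0}


def runnable_resolutions(available_gb: float, headroom_gb: float = 0.0) -> List[str]:
    """List the resolutions whose default requirement fits in the usable VRAM."""
    usable = available_gb - headroom_gb
    return [name for name, need in DEFAULT_VRAM_GB.items() if usable >= need]


for gpu_gb in (16, 24, 48, 80):
    print(f"{gpu_gb} GB GPU -> {runnable_resolutions(gpu_gb) or 'needs reduced-memory settings'}")
```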

Summary

UniAnimate-DiT represents a pragmatic advance in human image animation. By combining the generative power of Wan2.1 with efficient LoRA adaptation and smart inference optimizations, it delivers high-fidelity, temporally consistent animations with minimal training overhead and practical inference speeds. For teams exploring character animation, virtual humans, or personalized video generation, it offers a compelling balance of quality, efficiency, and accessibility—making it a strong candidate for integration into research prototypes and production pipelines alike.