Autonomous driving systems must balance accuracy, safety, and real-time performance. Traditional approaches often rely on dense rasterized representations of the environment—such as occupancy grids or semantic maps—which are computationally expensive and lose critical instance-level structural details. Enter VAD (Vectorized Autonomous Driving): a state-of-the-art, end-to-end framework that reimagines scene understanding through a fully vectorized representation, enabling both faster inference and safer trajectory planning.

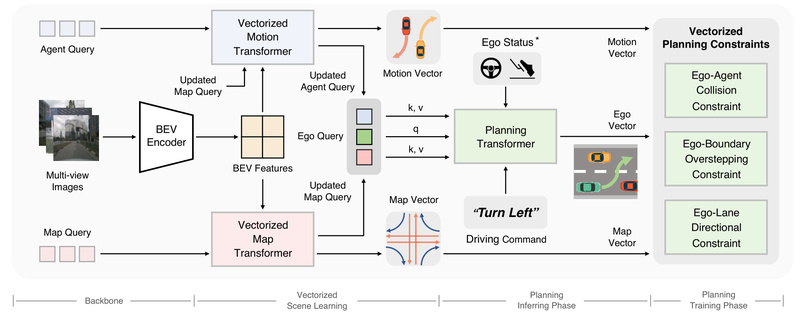

Developed by researchers from Huazhong University of Science and Technology and Horizon Robotics, VAD replaces pixel-based maps with explicit vectors for agents (vehicles, pedestrians) and map elements (lanes, road boundaries). This shift not only streamlines the planning pipeline but also introduces interpretable, instance-aware constraints directly into the decision-making process—without requiring hand-crafted post-processing logic.

Published at ICCV 2023 and open-sourced on GitHub, VAD reports strong results on the nuScenes benchmark and in the CARLA simulator, making it a practical option for practitioners focused on deployable, efficient autonomous driving solutions.

Why Vectorization Matters in Autonomous Driving

Most end-to-end driving models encode the environment as high-resolution images or BEV (Bird’s Eye View) grids. While effective in capturing spatial context, these rasterized formats suffer from two key drawbacks:

- High computational cost: Dense feature maps demand significant memory and processing power.

- Loss of semantic granularity: Individual objects and lane segments are flattened into pixels, obscuring their identity and relationships.

VAD addresses both by modeling the world as a set of vector primitives—each representing a dynamic agent’s trajectory or a static map feature. These vectors preserve geometry, semantics, and topology, enabling the planner to reason about interactions at the object level.
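To make the idea of vector primitives concrete, here is a minimal sketch of what a vectorized scene could look like as plain data. The class names (`MapVector`, `AgentVector`, `VectorScene`), the field layout, and the grid dimensions are assumptions chosen for illustration, not VAD's actual data structures. The comparison counts raw coordinates against raw grid cells, so it only hints at the size gap between the two kinds of representation.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres, ego-centred top-down frame


@dataclass
class MapVector:
    """One static map element stored as an ordered polyline (hypothetical layout)."""
    kind: str              # e.g. "lane_divider", "road_boundary"
    points: List[Point]


@dataclass
class AgentVector:
    """One dynamic agent plus its predicted future waypoints (hypothetical layout)."""
    kind: str              # e.g. "vehicle", "pedestrian"
    position: Point
    future: List[Point]    # one predicted waypoint per future timestep


@dataclass
class VectorScene:
    map_elements: List[MapVector]
    agents: List[AgentVector]

    def num_floats(self) -> int:
        """Count the coordinates needed to store the whole scene."""
        n = sum(2 * len(m.points) for m in self.map_elements)
        n += sum(2 + 2 * len(a.future) for a in self.agents)
        return n


def raster_cells(width_m: float, height_m: float, res_m: float, channels: int) -> int:
    """Number of cells in a dense top-down grid covering the given area."""
    return round(width_m / res_m) * round(height_m / res_m) * channels


scene = VectorScene(
    map_elements=[
        MapVector("road_boundary", [(-2.0, 0.0), (-2.0, 10.0), (-2.0, 20.0)]),
        MapVector("lane_divider", [(2.0, 0.0), (2.0, 10.0), (2.0, 20.0)]),
    ],
    agents=[AgentVector("vehicle", (0.0, 15.0), [(0.0, 16.0), (0.0, 17.0)])],
)

vector_size = scene.num_floats()
grid_size = raster_cells(60.0, 30.0, 0.5, channels=3)  # assumed grid settings
print(f"vector scene: {vector_size} floats, raster grid: {grid_size} cells")
```

Each element keeps its own identity and type, which is what lets a planner ask questions such as "how far is the ego path from this particular boundary?" instead of scanning pixels.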

This design allows VAD to implicitly learn scene dynamics through cross-attention while explicitly enforcing vectorized constraints during trajectory generation—leading to more interpretable and collision-averse behaviors.
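One way to picture an explicit vectorized constraint is as a penalty that activates only when the planned ego path comes too close to another vector. The sketch below uses a hinge-style penalty on ego-to-agent and ego-to-boundary distances. The function names, the thresholds, and the exact form of the penalty are assumptions for illustration and should not be read as VAD's loss implementation.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def hinge(distance: float, threshold: float) -> float:
    """Zero beyond the threshold, growing linearly as the distance shrinks below it."""
    return max(0.0, threshold - distance)


def collision_penalty(ego: Sequence[Point], agent: Sequence[Point], safe_dist: float) -> float:
    """Mean hinge penalty, comparing ego and agent waypoints at the same timestep."""
    steps = min(len(ego), len(agent))
    total = sum(hinge(math.dist(ego[t], agent[t]), safe_dist) for t in range(steps))
    return total / steps


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.dist(p, a)
    # Project p onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.dist(p, (ax + t * dx, ay + t * dy))


def boundary_penalty(ego: Sequence[Point], boundary: Sequence[Point], safe_dist: float) -> float:
    """Mean hinge penalty on each ego waypoint's distance to a boundary polyline."""
    total = 0.0
    for p in ego:
        nearest = min(
            point_to_segment(p, boundary[i], boundary[i + 1])
            for i in range(len(boundary) - 1)
        )
        total += hinge(nearest, safe_dist)
    return total / len(ego)


# Toy inputs: the agent drifts toward the ego lane over three timesteps.
ego_plan = [(0.0, 0.0), (0.0, 2.0), (0.0, 4.0)]
agent_future = [(3.0, 0.0), (2.0, 2.0), (1.0, 4.0)]
road_edge = [(-1.5, -5.0), (-1.5, 10.0)]

col = collision_penalty(ego_plan, agent_future, safe_dist=2.0)
bd = boundary_penalty(ego_plan, road_edge, safe_dist=2.0)
print(f"collision penalty: {col:.3f}, boundary penalty: {bd:.3f}")
```

Because both penalties are computed per instance, a large value can be traced back to the specific agent or boundary that caused it, which is the interpretability benefit the vectorized formulation is after.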

Key Advantages for Real-World Deployment

VAD isn’t just theoretically elegant—it delivers measurable gains that matter in production:

- 29% lower collision rate: VAD-Base reduces the average collision rate on nuScenes by 29.0% compared with the previous best method.

- Dramatic speedup: VAD-Tiny achieves up to 9.3× faster inference than previous end-to-end planners while maintaining competitive planning accuracy.

- No post-processing needed: The entire pipeline—from perception to planning—is unified in a single neural network, eliminating brittle rule-based modules.

- Strong simulation performance: In closed-loop CARLA evaluations, VAD-Base is competitive with or better than established methods like Transfuser and ST-P3 in urban driving scenarios.

These improvements make VAD well suited for real-time systems where latency and safety are non-negotiable.
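Figures such as "29% lower" and "9.3× faster" are ratios against a baseline, so it helps to be explicit about how they are derived when reproducing them on your own hardware. The sketch below assumes the percentage is a relative reduction and the speedup is a ratio of per-frame latencies. The input numbers are placeholders for illustration, not measurements from the VAD paper.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Fractional drop from baseline to improved (0.25 means 25% lower)."""
    return (baseline - improved) / baseline


def speedup(baseline_latency_ms: float, improved_latency_ms: float) -> float:
    """How many times faster the improved model runs, from per-frame latencies."""
    return baseline_latency_ms / improved_latency_ms


# Placeholder measurements for illustration only; not figures from the VAD paper.
baseline_collision_pct, improved_collision_pct = 0.40, 0.30
baseline_latency_ms, improved_latency_ms = 500.0, 100.0

reduction = relative_reduction(baseline_collision_pct, improved_collision_pct)
factor = speedup(baseline_latency_ms, improved_latency_ms)
print(f"collision rate reduced by {reduction:.1%}, inference {factor:.1f}x faster")
```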

Ideal Applications and Use Cases

VAD shines in contexts where efficiency, safety, and end-to-end simplicity are priorities:

- Real-time autonomous driving stacks: Its lightweight variants (e.g., VAD-Tiny) enable deployment on edge hardware with constrained compute.

- End-to-end planning research: Researchers can build upon VAD’s vectorized paradigm to explore probabilistic planning (as in VADv2) or integration with vision-language models (e.g., Senna).

- Simulation-based validation: With official support for CARLA via the Bench2Drive framework, VAD is ready for closed-loop testing in photorealistic environments.

- Urban driving scenarios: Trained on nuScenes—a dataset focused on complex city driving—VAD is optimized for multi-agent, lane-rich environments.

If your project involves perception-to-control integration in structured urban settings, VAD offers a streamlined, high-performance foundation.

Getting Started with VAD

The VAD codebase is publicly available on GitHub and includes pre-trained models, training configurations, and evaluation scripts. Here’s how to begin:

- Clone the repository: Access the official code at https://github.com/hustvl/VAD.

- Prepare data: Download and organize the nuScenes dataset following the provided instructions.

- Choose a model variant: Start with VAD-Base for best performance or VAD-Tiny for speed-critical applications.

- Run evaluation: Use the provided configs to reproduce reported results or adapt the model to your own data pipeline.

- Visualize outputs: The repo includes tools to render predicted trajectories and vectorized scene representations for qualitative analysis.
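Before running evaluation, a quick sanity check on the dataset folder can save a failed run. The sketch below looks for the top-level folders of a standard nuScenes trainval download. The helper name `missing_nuscenes_dirs` is hypothetical, and any additional files the VAD repository expects (for example, generated annotation files) are not covered; follow the repository's own instructions for those. The demo uses a temporary directory so it runs anywhere.

```python
import tempfile
from pathlib import Path
from typing import List

# Top-level folders of a standard nuScenes trainval download (assumed layout).
EXPECTED_DIRS = ("maps", "samples", "sweeps", "v1.0-trainval")


def missing_nuscenes_dirs(root: Path) -> List[str]:
    """Return the expected folders that are absent under root (hypothetical helper)."""
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


# Demo on a temporary directory that deliberately lacks two of the folders.
with tempfile.TemporaryDirectory() as tmp:
    demo_root = Path(tmp)
    (demo_root / "maps").mkdir()
    (demo_root / "samples").mkdir()
    missing = missing_nuscenes_dirs(demo_root)

print("missing folders:", missing)
```

To check a real download, call `missing_nuscenes_dirs(Path("path/to/your/nuscenes"))` and confirm that it returns an empty list.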

Both VADv1 and VADv2 (which introduces probabilistic planning) are supported, with modular components that simplify experimentation.

Limitations and Practical Considerations

While VAD represents a significant step forward, users should be aware of its current scope:

- Dataset dependency: Training and validation are centered on nuScenes, which was collected in Boston and Singapore and captures specific urban driving conditions. Performance may vary in rural, highway, or other regional traffic contexts without fine-tuning.

- Backbone constraints: Official models use ResNet-50 as the image encoder; integrating alternative backbones (e.g., Vision Transformers) requires non-trivial modifications.

- Real-world readiness: Although VAD excels in simulation and open-loop benchmarks, full real-world deployment demands additional safety layers, sensor calibration, and regulatory validation beyond the scope of the research codebase.

That said, VAD’s clean architecture and strong baseline make it an excellent starting point for both academic exploration and industrial prototyping.

Summary

VAD redefines end-to-end autonomous driving by replacing inefficient rasterized maps with a compact, interpretable vectorized scene representation. It delivers faster inference, lower collision rates, and a simplified pipeline—all critical for real-world adoption. Whether you’re a researcher prototyping next-gen planners or an engineer optimizing for embedded deployment, VAD offers a robust, open-source foundation backed by state-of-the-art results on nuScenes and CARLA. With its balance of performance, safety, and efficiency, VAD is a compelling choice for anyone building the future of autonomous mobility.