Video generation remains one of the harder problems in generative AI, particularly when it comes to realistic motion, visual fidelity, and flexible content creation. Many existing solutions specialize narrowly (e.g., text-to-image only), struggle with dynamic movement, or require complex pipelines to reach acceptable quality. Waver is a foundation model that unifies text-to-video (T2V), image-to-video (I2V), and text-to-image (T2I) generation in a single architecture.

Developed by FoundationVision, Waver generates 1080p videos of 2 to 10 seconds with strong motion realism and temporal consistency. It handles high-amplitude motion, from a tennis swing to a dancer’s pirouette, with a fluidity that sets it apart from most open-source alternatives and some commercial ones. For technical decision-makers, creators, and researchers looking for a single model for short-form video generation, Waver offers a combination of quality, flexibility, and benchmark-backed performance.

Unified Generation Without Compromise

One of Waver’s standout features is its all-in-one design. Instead of maintaining separate models for image synthesis, video-from-text, and video-from-image tasks, Waver handles all three within a single DiT (Diffusion Transformer) framework. This integration simplifies deployment, reduces maintenance overhead, and ensures consistent visual style across modalities.

The model achieves this through a clever input-channel strategy: video tokens, image tokens (as the first frame), and a task-specific mask are fed into the same architecture. During training, image conditioning is included 20% of the time, enabling seamless switching between T2I, T2V, and I2V at inference—no retraining or model swapping required.
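The input-channel idea can be illustrated with a minimal NumPy sketch. The exact channel layout, tensor shapes, and the function names `build_model_input` and `sample_use_image` are assumptions made for illustration; the source only states that video tokens, first-frame image tokens, and a task mask share one architecture and that image conditioning is kept 20% of the time during training.

```python
import numpy as np

def build_model_input(noisy_latent, first_frame_latent=None):
    """Stack noisy video latent, conditioning latent, and task mask on the channel axis.

    noisy_latent:       array of shape [C, T, H, W]
    first_frame_latent: array of shape [C, H, W], or None for text-only tasks
    (Layout is hypothetical, not taken from the Waver code base.)
    """
    c, t, h, w = noisy_latent.shape
    cond = np.zeros_like(noisy_latent)                        # zeros when no image is given
    mask = np.zeros((1, t, h, w), dtype=noisy_latent.dtype)   # 1 marks a provided frame
    if first_frame_latent is not None:
        cond[:, 0] = first_frame_latent                       # image fills the first frame slot
        mask[:, 0] = 1.0
    return np.concatenate([noisy_latent, cond, mask], axis=0)  # [2C + 1, T, H, W]

def sample_use_image(rng, p_image=0.2):
    """During training, keep image conditioning with probability p_image."""
    return rng.random() < p_image

rng = np.random.default_rng(0)
video = rng.standard_normal((4, 5, 8, 8))
image = rng.standard_normal((4, 8, 8))

t2v_input = build_model_input(video)            # text-to-video: no image, mask all zero
i2v_input = build_model_input(video, image)     # image-to-video: first frame given
t2i_input = build_model_input(video[:, :1])     # text-to-image: a one-frame "video"
```

Under this layout the same network sees the same input shape for every task, and only the mask and conditioning channels tell it which task it is solving.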

High-Fidelity Output with Smart Upscaling

Waver generates videos natively at 720p resolution (2–10 seconds in length) and then upscales them to crisp 1080p using a dedicated Cascade Refiner. This two-stage approach isn’t just about resolution—it’s about efficiency and quality.

The refiner, built on the same rectified flow Transformer architecture, uses windowed attention and flow matching to cut inference time by up to 60% compared to end-to-end 1080p generation. This means faster turnaround without sacrificing detail, motion smoothness, or visual coherence—critical for iterative creative workflows or batch processing in production environments.

Motion That Moves Realistically

Perhaps Waver’s most significant technical achievement is its superior motion modeling. While many video generators produce sluggish, repetitive, or physically implausible movements, Waver excels at high-amplitude, complex actions—jumping athletes, swirling leaves, rotating machinery—thanks to its training strategy and architecture.

The team behind Waver invested heavily in low-resolution (192p) pre-training to capture motion dynamics before scaling up to 480p and 720p. They also adopted a mode-based timestep sampling strategy (instead of uniform sampling) during T2V/I2V training, which empirically enhances motion intensity. Additionally, the model is evaluated on custom benchmarks like the Hermes Motion Testset (96 prompts covering 32 sports), where it consistently outperforms competitors in motion realism.
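To make the difference between uniform and mode-based timestep sampling concrete, here is a small sketch. It assumes the mode-style mapping commonly used in rectified flow training, f(u) = 1 - u - s(cos²(πu/2) - 1 + u); whether Waver uses exactly this form is an assumption, and the scale value 1.29 is an illustrative placeholder rather than a setting from the source.

```python
import math
import numpy as np

def mode_transform(u, scale=1.29):
    """Map uniform samples u in [0, 1] to timesteps that cluster near 0.5.

    f(u) = 1 - u - scale * (cos^2(pi * u / 2) - 1 + u)
    With scale = 0 this reduces to 1 - u, which is still uniform.
    """
    u = np.asarray(u, dtype=np.float64)
    return 1.0 - u - scale * (np.cos(math.pi * u / 2.0) ** 2 - 1.0 + u)

def sample_timesteps(rng, n, scale=1.29):
    """Draw n training timesteps; scale is a hypothetical hyperparameter."""
    return mode_transform(rng.random(n), scale)

rng = np.random.default_rng(0)
t_mode = sample_timesteps(rng, 10_000)
t_uniform = sample_timesteps(rng, 10_000, scale=0.0)

# Fraction of samples that land in the middle of the noise schedule.
mid_mode = np.mean((t_mode > 0.4) & (t_mode < 0.6))
mid_uniform = np.mean((t_uniform > 0.4) & (t_uniform < 0.6))
print(f"mode: {mid_mode:.2f}, uniform: {mid_uniform:.2f}")
```

With a positive scale, more training steps fall at intermediate noise levels than under uniform sampling. The source ties this sampling choice to stronger motion only as an empirical observation.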

Flexible, Style-Aware Generation

Waver supports variable video lengths (2–10 seconds), multiple aspect ratios, and a wide range of visual styles—from photorealistic footage to Ghibli-inspired animation, Disney-style cartoons, or voxel-based 3D renderings. This versatility stems from a technique called prompt tagging.

During training, captions are augmented with style descriptors (prepended) and quality indicators (appended). At inference, users can invoke specific aesthetics by including style tags (e.g., “in the style of a 2D cartoon picture book”), while negative prompts can suppress artifacts like “slow motion” or “low definition.” This gives creators fine-grained control without requiring model fine-tuning.
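Prompt tagging amounts to simple string assembly, sketched below. The helper names, the request dictionary keys, and the quality phrase are hypothetical; only the prepend/append ordering, the example style tag, and the negative terms come from the description above.

```python
def tag_caption(caption, style=None, quality=None):
    """Prepend a style descriptor and append a quality indicator to a caption."""
    parts = []
    if style:
        parts.append(style.strip())
    parts.append(caption.strip())
    if quality:
        parts.append(quality.strip())
    return " ".join(parts)

def build_request(prompt, style=None, negative_terms=()):
    """Assemble a hypothetical inference request with a style tag and negative prompt."""
    return {
        "prompt": tag_caption(prompt, style=style),
        "negative_prompt": ", ".join(negative_terms),
    }

# Training-time augmentation: style in front, quality at the end.
train_caption = tag_caption(
    "A fox runs through the snow.",
    style="In the style of a 2D cartoon picture book.",
    quality="High definition.",  # illustrative quality indicator
)

# Inference-time use: pick a style and suppress unwanted traits.
request = build_request(
    "A fox runs through the snow.",
    style="In the style of a 2D cartoon picture book.",
    negative_terms=("slow motion", "low definition"),
)
```

Because the tags are ordinary text, switching styles is a matter of changing the prefix. No model weights change.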

Optimized Inference for Real-World Use

Generating high-quality video isn’t just about the model—it’s about the inference pipeline. Waver integrates Adaptive Projected Guidance (APG), a technique that decomposes the classifier-free guidance update into parallel and orthogonal components. By down-weighting the parallel component, APG reduces oversaturation and visual artifacts while preserving realism.

The team also found that normalizing latents along the [C, H, W] dimensions, that is, per frame, rather than along [C, T, H, W] further reduces temporal flickering. The reported settings are a guidance scale of 8 and a normalization threshold of 27, which balance fidelity and stability for predictable, production-ready outputs.
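The guidance step described above can be sketched in NumPy as follows. This is a simplified illustration, not Waver’s implementation: the function name, the `parallel_weight` default, and the [C, T, H, W] array layout are assumptions, and the momentum term from the original APG formulation is omitted. The guidance scale of 8 and threshold of 27 are the values quoted in the text.

```python
import numpy as np

def apg_guidance(pred_cond, pred_uncond, guidance_scale=8.0,
                 norm_threshold=27.0, parallel_weight=0.0, eps=1e-8):
    """Projected guidance on predictions of shape [C, T, H, W].

    Norms and projections are taken over [C, H, W], so each frame
    is normalized independently of the others.
    """
    axes = (0, 2, 3)
    diff = pred_cond - pred_uncond

    # Rescale the update so its per-frame norm does not exceed the threshold.
    diff_norm = np.sqrt((diff ** 2).sum(axis=axes, keepdims=True))
    diff = diff * np.minimum(1.0, norm_threshold / (diff_norm + eps))

    # Split the update into parts parallel and orthogonal to the conditional prediction.
    cond_norm = np.sqrt((pred_cond ** 2).sum(axis=axes, keepdims=True))
    unit = pred_cond / (cond_norm + eps)
    parallel = (diff * unit).sum(axis=axes, keepdims=True) * unit
    orthogonal = diff - parallel

    # Down-weight the parallel part; parallel_weight = 1 with a very large
    # threshold recovers ordinary classifier-free guidance.
    update = orthogonal + parallel_weight * parallel
    return pred_cond + (guidance_scale - 1.0) * update

rng = np.random.default_rng(0)
cond = rng.standard_normal((4, 3, 8, 8))
uncond = rng.standard_normal((4, 3, 8, 8))
guided = apg_guidance(cond, uncond)
```

Computing the norms per frame means one unusually large frame cannot shrink the guidance applied to every other frame. That is one plausible reading of why the [C, H, W] choice reduces flicker.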

Benchmark Leadership and Open Validation

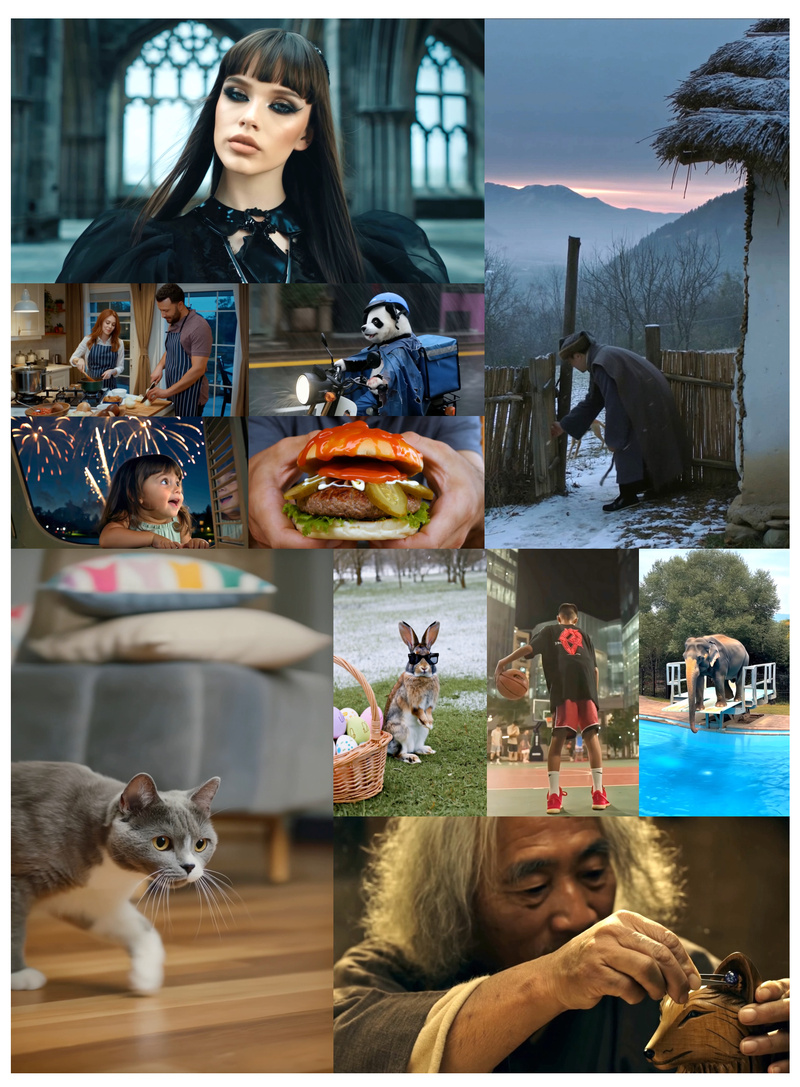

As of mid-2025, Waver ranks Top 3 on both the Text-to-Video and Image-to-Video leaderboards at Artificial Analysis, outperforming nearly all open-source models and matching or exceeding leading commercial systems. These results are validated not just on generic benchmarks but on Waver-Bench 1.0—a 304-sample test covering sports, animals, landscapes, surreal scenes, and more—and the motion-focused Hermes Testset.

The third-party leaderboard ranking, together with the published benchmark details, gives technical evaluators evidence of Waver’s real-world capabilities, not just its theoretical potential.

Ideal for Creators, Developers, and Researchers

Waver is particularly well-suited for:

- Content creators needing dynamic, stylized short videos for social media or ads.

- Game developers prototyping cinematic cutscenes or animated assets.

- Marketing teams generating personalized video content from product images or text briefs.

- AI researchers studying multimodal generation, motion modeling, or scalable video synthesis.

Its open technical report and detailed training/inference recipes lower the barrier to adoption, while its unified architecture streamlines integration into existing pipelines.

Current Limitations to Consider

Despite its strengths, Waver isn’t a panacea. Key limitations include:

- Maximum video length of 10 seconds, making it unsuitable for long-form narrative content.

- High GPU memory requirements, as with most high-resolution diffusion video models.

- No frame-level temporal control, meaning users can’t specify exact actions at precise timestamps—generation remains prompt-driven and holistic.

These constraints make Waver best suited for short, high-impact visual storytelling rather than cinematic editing or interactive video applications.

Summary

Waver represents a significant leap in unified, high-motion video generation. By combining T2I, T2V, and I2V in one model, delivering 1080p output with intelligent upscaling, and excelling at complex motion realism, it addresses core pain points that have plagued earlier systems. Backed by rigorous benchmarks, practical inference optimizations, and flexible style control, Waver empowers technical teams to generate professional-grade video content efficiently and consistently. For anyone evaluating next-generation video foundation models, Waver deserves serious consideration.