YOLOv9 advances real-time object detection by directly confronting a long-standing but often overlooked problem in deep learning: information loss during feature extraction. As data passes through successive layers of a neural network, critical details can degrade or vanish, especially in deep architectures, which leads to unreliable gradients and weaker predictions.

What sets YOLOv9 apart is its novel Programmable Gradient Information (PGI) mechanism, which ensures that full input information is preserved and utilized when computing gradients for weight updates. This innovation enables models trained from scratch to outperform state-of-the-art detectors that rely on large-scale pretraining. Coupled with a new lightweight backbone called Generalized Efficient Layer Aggregation Network (GELAN), YOLOv9 achieves superior accuracy with better parameter efficiency—using only standard convolutions, not depthwise ones.

Whether you’re building autonomous drones, industrial inspection systems, or privacy-sensitive vision pipelines, YOLOv9 offers a compelling combination of accuracy, flexibility, and independence from pretrained weights—making it a strong candidate for both research and production.

Core Innovations: Solving Information Loss in Deep Networks

Programmable Gradient Information (PGI)

Traditional object detectors optimize a loss function based on predictions made from increasingly abstract feature maps. However, the deeper the network, the more likely it is that fine-grained spatial and semantic details are lost—creating an information bottleneck. YOLOv9 introduces PGI to circumvent this issue.

PGI doesn’t just pass gradients backward through the main prediction path. Instead, it creates auxiliary pathways that preserve and deliver complete input-level information to the loss computation. This ensures that the gradients used to update early-layer weights remain informative and aligned with the original input, even after dozens of transformation layers.
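To make the idea of an auxiliary gradient path concrete, here is a deliberately tiny sketch in plain Python. It is not YOLOv9's PGI implementation: the functions `early_layer`, `main_branch`, and `aux_branch` and the two-feature setup are assumptions invented for illustration. The "deep" main path discards one feature (a stand-in for an information bottleneck), so the early-layer weight tied to that feature gets no gradient unless a training-only auxiliary path also contributes to the loss.

```python
def early_layer(x, w):
    # Early layer: one learnable weight per input feature.
    return [wi * xi for wi, xi in zip(w, x)]

def main_branch(features):
    # Lossy main path (toy bottleneck): only the first feature survives.
    return features[0]

def aux_branch(features):
    # Hypothetical training-only auxiliary path: sees every feature.
    return sum(features)

def loss(w, x, target, use_aux):
    f = early_layer(x, w)
    total = (main_branch(f) - target) ** 2
    if use_aux:
        # The auxiliary prediction adds its own supervised term.
        total += (aux_branch(f) - target) ** 2
    return total

def numeric_grad(w, x, target, use_aux, eps=1e-6):
    # Central finite differences, to keep the sketch dependency-free.
    grads = []
    for i in range(len(w)):
        up, down = list(w), list(w)
        up[i] += eps
        down[i] -= eps
        diff = loss(up, x, target, use_aux) - loss(down, x, target, use_aux)
        grads.append(diff / (2 * eps))
    return grads

x, w, target = [1.0, 2.0], [0.5, 0.5], 2.0
grad_main_only = numeric_grad(w, x, target, use_aux=False)
grad_with_aux = numeric_grad(w, x, target, use_aux=True)
print("main path only:", grad_main_only)
print("with auxiliary path:", grad_with_aux)
```

With the main path alone, the second weight receives a zero gradient because its feature never reaches the loss. Once the auxiliary term is added, that weight gets a usable update signal, which is the intuition behind supplying more complete information to the gradient computation.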

The result? Models that learn more effectively from limited or domain-specific data—without needing ImageNet pretraining. In fact, YOLOv9 demonstrates that train-from-scratch performance can surpass pretrained baselines, a rare and valuable property for teams working under data privacy constraints or in novel domains.

GELAN: A Lightweight, Efficient Architecture

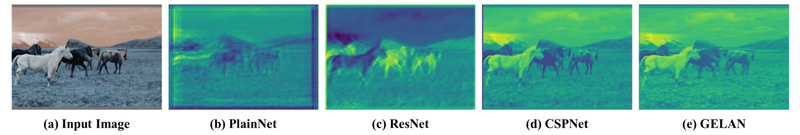

YOLOv9 also debuts GELAN (Generalized Efficient Layer Aggregation Network), a new backbone designed through gradient path planning. Unlike many modern detectors that rely on depthwise convolutions to reduce parameters, GELAN uses only standard convolutions yet achieves better parameter utilization and higher accuracy.

This makes GELAN not only more hardware-friendly (standard convolutions are better optimized on most GPUs and accelerators) but also more stable during training. The architecture scales from the tiny YOLOv9-T to the large YOLOv9-E, with accuracy rising at every step up in model size.
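For context on why the convolution choice matters, the following sketch compares weight counts for a single layer built from a standard convolution versus a depthwise separable one. The layer shape (64 to 128 channels, 3x3 kernel) is an arbitrary example rather than a GELAN layer, and biases are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    # Every output channel has a k x k filter over every input channel.
    return c_in * c_out * k * k

def depthwise_separable_params(c_in, c_out, k):
    # Depthwise step: one k x k filter per input channel.
    # Pointwise step: a 1 x 1 convolution mixing channels.
    return c_in * k * k + c_in * c_out

std = standard_conv_params(64, 128, 3)
dws = depthwise_separable_params(64, 128, 3)
print(f"standard: {std} weights, depthwise separable: {dws} weights")
```

Depthwise separable layers need far fewer weights per layer, which is why many lightweight detectors use them. That GELAN reaches better parameter utilization while staying with standard convolutions is what makes the claim notable.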

Performance Across Use Cases

YOLOv9 isn’t just a theoretical advance—it delivers state-of-the-art results on the MS COCO benchmark across multiple model sizes:

| Model | AP<sup>val</sup> | Parameters | FLOPs |
|---|---|---|---|
| YOLOv9-T | 38.3% | 2.0M | 7.7G |
| YOLOv9-S | 46.8% | 7.1M | 26.4G |
| YOLOv9-M | 51.4% | 20.0M | 76.3G |
| YOLOv9-C | 53.0% | 25.3M | 102.1G |
| YOLOv9-E | 55.6% | 57.3M | 189.0G |

Notably, YOLOv9-C achieves 53.0% AP with only 25.3M parameters, outperforming many heavier models that depend on complex operators or pretrained checkpoints.
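One way to read the table when choosing a variant is to look at what each step up in size buys. The sketch below uses only the numbers from the table above; the helper name `marginal_gains` is made up for this example.

```python
# (AP val in %, parameters in millions, FLOPs in G), copied from the table.
MODELS = [
    ("YOLOv9-T", 38.3, 2.0, 7.7),
    ("YOLOv9-S", 46.8, 7.1, 26.4),
    ("YOLOv9-M", 51.4, 20.0, 76.3),
    ("YOLOv9-C", 53.0, 25.3, 102.1),
    ("YOLOv9-E", 55.6, 57.3, 189.0),
]

def marginal_gains(models):
    # For each step to the next larger model, report the AP gained
    # and the extra parameters (in millions) it costs.
    steps = []
    for smaller, larger in zip(models, models[1:]):
        delta_ap = round(larger[1] - smaller[1], 1)
        delta_params = round(larger[2] - smaller[2], 1)
        steps.append((smaller[0], larger[0], delta_ap, delta_params))
    return steps

for src, dst, d_ap, d_params in marginal_gains(MODELS):
    print(f"{src} -> {dst}: +{d_ap} AP for +{d_params}M parameters")
```

The step from T to S adds the most accuracy for the fewest extra parameters, while the step from C to E costs the most parameters per point of AP. That is worth weighing against your latency and memory budget.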

Beyond detection, YOLOv9’s framework supports instance segmentation, panoptic segmentation, and (in future releases) image captioning—all using the same core architecture and training methodology. This multi-task readiness makes it ideal for unified perception systems in robotics or autonomous vehicles.

Practical Deployment and Integration

YOLOv9 is built with real-world deployment in mind. The official repository provides robust tooling for:

- Inference with pre-trained or custom-trained models

- Fine-tuning on your own datasets using the provided train_dual.py script

- Model conversion via re-parameterization (critical for optimal inference speed)

- Export to industry-standard formats: ONNX, TensorRT, OpenVINO, TFLite, and more

- Integration with tracking pipelines: StrongSORT, ByteTrack, and DeepSORT are already supported

- Deployment on edge devices: ROS wrappers, AX650N support, and OpenCV compatibility

For quick experimentation, Colab and Hugging Face demos are available. Tools like AnyLabeling also simplify annotation and model validation.

Training is straightforward: prepare your dataset along with a data YAML file that describes it (the example uses data/coco.yaml), then run:

python train_dual.py --data data/coco.yaml --cfg models/detect/yolov9-c.yaml --weights '' --batch 16 --img 640 --epochs 500

The --weights '' flag explicitly indicates training from scratch, showcasing PGI’s power without external dependencies.
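If you launch training from a script rather than a shell, a small wrapper can assemble the same command. This is a sketch: the helper `build_train_command` is hypothetical, and it only uses the flags that appear in the command above.

```python
import shlex

def build_train_command(data, cfg, weights="", batch=16, img=640, epochs=500):
    # Assemble the argument list; an empty `weights` string means
    # training from scratch, as in the command shown above.
    return [
        "python", "train_dual.py",
        "--data", data,
        "--cfg", cfg,
        "--weights", weights,
        "--batch", str(batch),
        "--img", str(img),
        "--epochs", str(epochs),
    ]

cmd = build_train_command("data/coco.yaml", "models/detect/yolov9-c.yaml")
# shlex.join quotes the empty --weights value so it survives a shell.
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` from the repository root would start training without going through a shell, which avoids quoting problems with the empty `--weights` value.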

Limitations and Considerations

While YOLOv9 offers compelling advantages, several practical factors should be considered:

- Larger variants (e.g., YOLOv9-E) demand significant compute (189 GFLOPs), making them less suitable for ultra-low-power edge devices.

- Re-parameterization is required before deployment: it converts the trained model into its inference form by dropping the auxiliary branches that are only needed during training. Skipping this step leaves the model slower and larger than necessary.

- Image captioning and some advanced multi-task features are mentioned in the paper but not yet publicly released.

- Training memory usage may be higher than standard YOLO models due to PGI’s auxiliary pathways—plan accordingly for GPU memory.

Despite these, the trade-offs are often justified by the gains in accuracy and training flexibility—especially when pretraining data is unavailable or undesirable.
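The re-parameterization point above can be pictured with a toy example. This is only a conceptual sketch: the weight names and the `aux.` prefix are invented, and the repository's own conversion tooling, not this function, is what you should use on real checkpoints.

```python
def strip_training_only_weights(weights, aux_prefix="aux."):
    # Keep every entry except those belonging to the (hypothetical)
    # training-only auxiliary branch.
    return {
        name: value
        for name, value in weights.items()
        if not name.startswith(aux_prefix)
    }

# Toy "checkpoint": names and values are made up for illustration.
trained = {
    "backbone.conv1": [0.1, 0.2],
    "head.detect": [0.3],
    "aux.branch.conv": [0.4, 0.5],
    "aux.head.detect": [0.6],
}

deploy = strip_training_only_weights(trained)
print(sorted(deploy))
```

The deployed dictionary keeps only the main-path entries, which mirrors why a converted model is smaller and faster than the training-time model.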

Summary

YOLOv9 redefines what’s possible in object detection by tackling information loss head-on. With Programmable Gradient Information and the GELAN architecture, it delivers high accuracy even when trained from scratch—eliminating reliance on large pretrained models while maintaining hardware efficiency.

Its support for detection, segmentation, and future multi-task vision, combined with mature deployment tooling, makes YOLOv9 a versatile choice for researchers, engineers, and product teams building next-generation vision systems. If you need a detector that’s both powerful and principled—without the baggage of external pretraining—YOLOv9 deserves serious consideration.