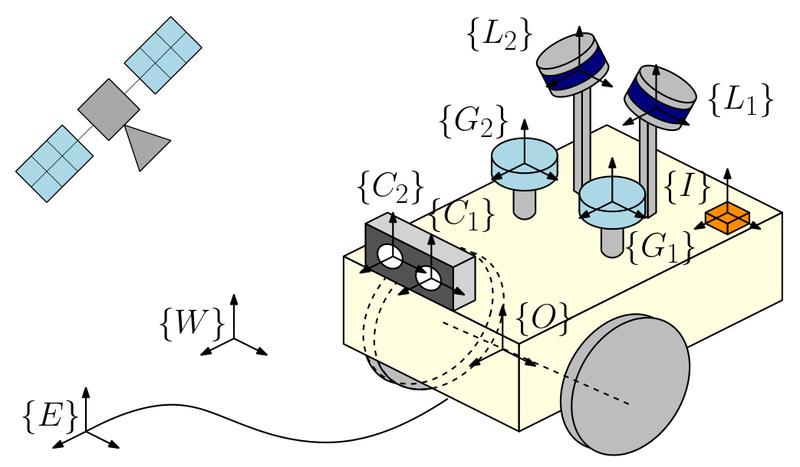

In the world of autonomous systems—whether robots, drones, or self-driving vehicles—accurate and reliable state estimation is non-negotiable. Yet real-world deployments are plagued by sensor failures, poor calibration, noisy environments, and computational bottlenecks. Enter MINS (Multisensor-aided Inertial Navigation System): an open-source, tightly-coupled navigation framework that fuses data from up to five sensor modalities—IMU, wheel encoders, cameras, LiDAR, and GNSS—into a single, consistent, and computationally efficient estimate of pose and motion.

Unlike loosely coupled approaches, which run a separate estimator per sensor and fuse only their outputs, MINS feeds all measurements directly into a single state estimator, preserving statistical consistency while maximizing robustness. Developed by the Robot Perception and Navigation Group (RPNG) at the University of Delaware, MINS addresses long-standing challenges in multisensor fusion: asynchronous data streams, calibration drift, and scalability across sensor combinations. For engineers and researchers building resilient autonomy stacks, MINS offers an open-source estimator that balances accuracy, robustness, and computational cost.

Why Multisensor Fusion Matters—and Why MINS Stands Out

Autonomous systems rarely rely on a single sensor. IMUs provide high-frequency motion data but drift over time. Cameras offer rich visual features but fail in darkness or textureless scenes. LiDAR gives precise geometry but is expensive and sensitive to weather. GNSS works outdoors but vanishes in tunnels or urban canyons. Wheel encoders are great for ground robots—but only if the wheels don’t slip.

The key insight behind MINS is redundancy through fusion: by intelligently combining complementary sensors, the system becomes more than the sum of its parts. But doing this tightly and efficiently is hard. Many existing frameworks either sacrifice consistency for speed or become computationally intractable as sensor counts grow.

MINS solves this with three core innovations:

1. Consistent High-Order On-Manifold Interpolation

Sensors don’t fire in sync. Cameras might run at 30 Hz, LiDAR at 10 Hz, and IMUs at 200 Hz. Traditional filters struggle to align these streams without either introducing error or cloning a new state for every measurement, which quickly becomes expensive. MINS introduces a high-order interpolation scheme on the manifold of poses (i.e., respecting the geometry of SE(3)), so the pose at any measurement timestamp can be expressed in terms of a small set of stored pose clones, even when sensors arrive asynchronously. Each measurement is therefore fused at its own timestamp, and the scheme is designed to keep the resulting estimate statistically consistent.
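To make the idea concrete, here is a minimal sketch of one common way to build higher-order interpolation on SO(3), assuming rotations are stored as 3×3 matrices: express each stored rotation relative to a reference clone as a tangent vector (via the matrix logarithm), fit a Lagrange polynomial through those vectors, and map the result back with the exponential map. This is an illustrative scheme, not necessarily the exact formulation used in MINS; all function names are hypothetical, and positions, being Euclidean, could be blended with the same Lagrange weights directly.

```python
import math

def hat(w):
    """Skew-symmetric (cross-product) matrix of a 3-vector."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]

def so3_exp(w):
    """Rodrigues' formula: rotation vector -> rotation matrix."""
    theta = math.sqrt(sum(c * c for c in w))
    K = hat(w)
    K2 = matmul(K, K)
    if theta < 1e-9:
        a, b = 1.0, 0.5                      # small-angle limits of the coefficients
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j]
             for j in range(3)] for i in range(3)]

def so3_log(R):
    """Rotation matrix -> rotation vector (valid for angles below pi)."""
    cos_t = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    theta = math.acos(cos_t)
    v = [R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1]]
    scale = 0.5 if theta < 1e-9 else theta / (2.0 * math.sin(theta))
    return [scale * c for c in v]

def lagrange_weights(times, t):
    """Lagrange basis polynomials L_i(t) through the clone timestamps."""
    weights = []
    for i, ti in enumerate(times):
        w = 1.0
        for j, tj in enumerate(times):
            if j != i:
                w *= (t - tj) / (ti - tj)
        weights.append(w)
    return weights

def interpolate_rotation(times, rotations, t):
    """Polynomial interpolation on SO(3) around the first clone R_0.

    Each clone becomes a tangent vector tau_i = Log(R_0^T R_i); the vectors are
    blended with Lagrange weights and mapped back as R_0 Exp(sum_i L_i(t) tau_i).
    """
    R0 = rotations[0]
    R0T = transpose(R0)
    tangents = [so3_log(matmul(R0T, R)) for R in rotations]
    weights = lagrange_weights(times, t)
    delta = [sum(w * tau[k] for w, tau in zip(weights, tangents)) for k in range(3)]
    return matmul(R0, so3_exp(delta))

# Example: three clones of a rotation about z whose angle is 0.3 t + 0.1 t^2
times = [0.0, 1.0, 2.0]
clones = [so3_exp([0.0, 0.0, 0.3 * t + 0.1 * t * t]) for t in times]
R = interpolate_rotation(times, clones, 1.5)
print(so3_log(R)[2])  # close to 0.675 rad, the true angle at t = 1.5
```

With three clones the fit is quadratic. For comparison, linear interpolation between the clones at t = 1 and t = 2 would give 0.7 rad at t = 1.5, an error of 0.025 rad that the quadratic fit avoids for this trajectory.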

2. Dynamic Cloning for Adaptive Complexity

Not all motion requires the same level of state representation. MINS uses dynamic cloning, a state-management strategy that uses motion-induced information to decide how many state clones (historical pose estimates) to keep and which interpolation order to use. During high-dynamic maneuvers, more clones are kept to capture trajectory curvature; during steady motion, clones are pruned to reduce computation. This keeps the estimator lightweight while keeping the trajectory representation error small.
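A minimal sketch of the general idea, assuming a purely hypothetical rule in which the desired spacing between clones shrinks as the gyroscope's angular-rate magnitude grows. The rule and its parameters (`base_dt`, `min_dt`, `rate_scale`) are illustrative assumptions; the criterion MINS actually uses is more involved.

```python
def clone_interval(omega_norm, base_dt=0.2, min_dt=0.05, rate_scale=1.0):
    """Hypothetical rule: desired time between clones shrinks as the angular rate grows."""
    return max(min_dt, base_dt / (1.0 + omega_norm / rate_scale))

def select_clone_times(imu_samples, **rule_params):
    """Decide clone timestamps from (timestamp, |omega|) IMU samples.

    A new clone is created whenever the time since the last clone reaches the
    interval allowed by the current motion, so slow motion yields sparse clones
    and fast rotation yields dense ones.
    """
    clones = [imu_samples[0][0]]
    for t, omega_norm in imu_samples[1:]:
        # the small tolerance absorbs floating-point error in the timestamps
        if t - clones[-1] >= clone_interval(omega_norm, **rule_params) - 1e-12:
            clones.append(t)
    return clones

# Example: 1 s stationary, then 1 s rotating at 3 rad/s, sampled at 100 Hz
samples = [(k * 0.01, 0.0 if k < 100 else 3.0) for k in range(200)]
clones = select_clone_times(samples)
print(len(clones))  # 25: 5 clones while stationary, 20 while rotating
```

Bounding the interval from below with `min_dt` keeps the state size capped even during violent motion, which is the computational trade-off the paragraph above describes.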

3. Full Online Spatiotemporal Calibration

Poor extrinsic (position/orientation) or temporal (time offset) calibration between sensors is a silent killer of fusion performance. MINS calibrates all onboard sensors online, continuously refining intrinsics (e.g., camera focal length and distortion), extrinsics (e.g., the LiDAR-to-IMU transform), and time offsets while the system runs. This compensates for mechanical wear, thermal drift, or an imperfect initial setup.
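The temporal part of this calibration can be illustrated with a toy example. A common way to calibrate a time offset online is to add it to the filter state and linearize the measurement with respect to it; for a position measurement, the Jacobian with respect to the offset is simply the platform's velocity. The sketch below is a scalar EKF under assumed conditions (a known 1-D trajectory, a single unknown offset `t_d`, Gaussian noise); it is not MINS's implementation, and all numbers are hypothetical.

```python
import random

# Hypothetical 1-D trajectory, assumed known from IMU integration:
# p(t) = V0 * t + 0.5 * A * t^2, so the velocity V0 + A * t never vanishes for t >= 0.
V0, A = 5.0, 1.0

def position(t):
    return V0 * t + 0.5 * A * t * t

def velocity(t):
    return V0 + A * t

def estimate_time_offset(true_td=0.03, n=200, dt=0.05, noise=0.005, seed=0):
    """Scalar EKF whose only state is the sensor-to-IMU time offset t_d.

    The sensor stamps a position reading with t_s, but the reading was really
    taken at t_s + t_d. Linearizing p(t_s + t_d) in t_d gives the Jacobian
    dp/dt, i.e. the velocity at the corrected time.
    """
    rng = random.Random(seed)
    td_est, var = 0.0, 0.1 ** 2          # prior: zero offset, 100 ms standard deviation
    r = noise ** 2                       # position-measurement noise variance [m^2]
    for k in range(1, n + 1):
        t_s = k * dt                                          # timestamp attached by the sensor
        z = position(t_s + true_td) + rng.gauss(0.0, noise)   # simulated reading
        H = velocity(t_s + td_est)                            # Jacobian dz/dt_d
        S = H * var * H + r                                   # innovation variance
        K = var * H / S                                       # Kalman gain
        td_est += K * (z - position(t_s + td_est))            # update with the innovation
        var = (1.0 - K * H) * var
    return td_est, var

td, var = estimate_time_offset()
print(f"estimated offset {td * 1000:.2f} ms (true 30 ms), std {var ** 0.5 * 1000:.3f} ms")
```

Because the Jacobian is the velocity, the offset is observable only while the platform moves; at standstill H would be zero and this filter would learn nothing about `t_d`.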

Initialization Without External Cues

Most visual-inertial systems initialize from exteroceptive data, for example by tracking enough visual features in a static scene. But what if you’re in a moving elevator, a dense forest, or a featureless warehouse? MINS instead introduces a proprioceptive-only initialization method that uses just IMU and wheel encoder data. By leveraging motion dynamics (e.g., non-holonomic constraints from wheels), it bootstraps the system without needing visual landmarks or GNSS fixes, which makes initialization less dependent on the environment and more robust in highly dynamic scenarios.
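One reason wheel encoders help is that they let the estimator separate gravity from the vehicle's own acceleration while it is moving. The sketch below, under the assumptions stated in its docstring (forward-pointing body x-axis, no lateral or vertical slip, z-up world, gravity 9.81 m/s²), recovers roll and pitch from a single IMU sample plus wheel speed. It illustrates only this one ingredient; MINS's initialization estimates more than attitude, and its actual procedure differs.

```python
import math

G = 9.81  # assumed gravity magnitude [m/s^2]; world z-axis points up

def roll_pitch_from_imu_wheel(accel, gyro, wheel_speed, wheel_accel):
    """Estimate roll and pitch of a moving ground vehicle from one IMU sample.

    Assumptions: body x-axis points forward, no lateral or vertical slip
    (body-frame velocity is [v, 0, 0]), orientation R = Rz(yaw) Ry(pitch) Rx(roll).
    accel: accelerometer specific force [fx, fy, fz] in the body frame [m/s^2]
    gyro: body angular rate [wx, wy, wz] [rad/s]
    wheel_speed, wheel_accel: forward speed v and dv/dt from the wheel encoders
    """
    _, wy, wz = gyro
    v = wheel_speed
    # Kinematic acceleration in the body frame: dv/dt + omega x [v, 0, 0]
    a_body = [wheel_accel, wz * v, -wy * v]
    # Specific force f = a_body + G * R^T e_z, so R^T e_z = (f - a_body) / G,
    # which equals [-sin(pitch), sin(roll) cos(pitch), cos(roll) cos(pitch)].
    u = [(f - a) / G for f, a in zip(accel, a_body)]
    roll = math.atan2(u[1], u[2])
    pitch = math.atan2(-u[0], math.hypot(u[1], u[2]))
    return roll, pitch
```

Without the wheel terms, a vehicle driving at 10 m/s while turning at 0.3 rad/s experiences 3 m/s² of centripetal acceleration, which a gravity-only assumption would misread as roughly 17° of roll.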

Flexible Sensor Configurations for Real-World Needs

MINS isn’t locked into one sensor combo. It supports arbitrary combinations, including:

- VINS: mono, stereo, or multi-camera + IMU

- GPS-IMU: single or multiple GNSS receivers

- LiDAR-IMU: for 3D map alignment

- Wheel-IMU: ideal for ground robots

- Full-stack: Camera + LiDAR + GNSS + wheel + IMU

This flexibility makes MINS a universal backend for research platforms, commercial autonomy stacks, and edge robotics—where sensor suites evolve over time.
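As a hypothetical illustration of what "arbitrary combinations" means in practice, the sketch below describes a platform by its counts of aiding sensors, with the IMU always present, and names the resulting configuration in the style of the list above. `SensorSuite` and its fields are invented for this example; MINS's real configuration format is not reproduced here.

```python
from dataclasses import dataclass

@dataclass
class SensorSuite:
    """Hypothetical summary of a platform's aiding sensors; the IMU is implicit."""
    cameras: int = 0
    lidars: int = 0
    gnss: int = 0
    wheel: bool = False

    def label(self) -> str:
        """Name the configuration, e.g. 'Camera + LiDAR + IMU'."""
        aiding = []
        if self.cameras:
            aiding.append("Camera" if self.cameras == 1 else f"{self.cameras} Cameras")
        if self.lidars:
            aiding.append("LiDAR" if self.lidars == 1 else f"{self.lidars} LiDARs")
        if self.gnss:
            aiding.append("GNSS" if self.gnss == 1 else f"{self.gnss} GNSS")
        if self.wheel:
            aiding.append("Wheel")
        if not aiding:
            # an unaided IMU drifts without bound, so require at least one aiding sensor
            raise ValueError("enable at least one aiding sensor besides the IMU")
        return " + ".join(aiding + ["IMU"])

print(SensorSuite(cameras=2).label())  # 2 Cameras + IMU
```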

Proven Performance on Challenging Real-World Data

MINS has been validated on large-scale datasets like KAIST Urban, which features complex city driving with frequent GNSS outages, rapid motion, and multimodal sensor noise. Benchmarks show MINS outperforms state-of-the-art methods in localization accuracy, estimation consistency (via NEES tests), and computational efficiency—even under sensor dropouts or calibration errors.
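For readers unfamiliar with NEES: the normalized estimation error squared for an error vector e and the filter's reported covariance P is eᵀP⁻¹e. For a consistent estimator it follows a chi-square distribution whose mean equals the dimension of e, so averaging NEES over Monte Carlo runs and comparing the result with that dimension is a standard consistency check. Below is a minimal, dependency-free sketch; it is not the MINS evaluation toolbox's code.

```python
def solve(P, e):
    """Solve P x = e by Gaussian elimination with partial pivoting."""
    n = len(e)
    A = [list(row) + [e[i]] for i, row in enumerate(P)]   # augmented matrix [P | e]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= factor * A[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (A[i][n] - sum(A[i][j] * x[j] for j in range(i + 1, n))) / A[i][i]
    return x

def nees(error, cov):
    """Normalized estimation error squared e^T P^-1 e for one error vector."""
    return sum(ei * xi for ei, xi in zip(error, solve(cov, error)))

def average_nees(errors, covs):
    """Mean NEES over runs or time steps; expected to be near len(error) if consistent."""
    return sum(nees(e, P) for e, P in zip(errors, covs)) / len(errors)

P = [[4.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(nees([2.0, 1.0, 0.0], P))  # 2.0
```

An average well above the state dimension means the filter is overconfident (its covariance is too small); one well below means it is overly conservative.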

Getting Started: Simulate, Evaluate, Deploy

MINS is built on ROS (Melodic/Noetic) and runs on Ubuntu 18.04/20.04. Setup is straightforward:

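```shell
# Assumes MINS_WORKSPACE is set to the directory that will hold the catkin workspace
mkdir -p "$MINS_WORKSPACE/catkin_ws/src/" && cd "$MINS_WORKSPACE/catkin_ws/src/"
git clone https://github.com/rpng/MINS
cd .. && catkin build
source devel/setup.bash
```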

You can immediately:

- Simulate multisensor scenarios:

  ```shell
  roslaunch mins simulation.launch cam_enabled:=true lidar_enabled:=true
  ```

- Run real datasets: Process KAIST or EuRoC bags with preconfigured launch files

- Visualize trajectories, sensor poses, and point clouds in RViz

- Evaluate consistency, accuracy, and timing using the included toolbox
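Accuracy is commonly reported as the root-mean-square error (RMSE) of position after aligning the estimate to ground truth. Because yaw and global position are unobservable in visual-inertial odometry, a 4-DoF (position and yaw) alignment is a common choice. The sketch below implements that closed-form alignment for trajectories that are already time-associated; it is an illustrative stand-in, not the toolbox shipped with MINS.

```python
import math

def align_position_yaw(est, gt):
    """Yaw psi and translation t minimising sum ||Rz(psi) e_i + t - g_i||^2."""
    n = len(est)
    me = [sum(p[k] for p in est) / n for k in range(3)]   # centroid of the estimate
    mg = [sum(p[k] for p in gt) / n for k in range(3)]    # centroid of the ground truth
    s_cos = s_sin = 0.0
    for e, g in zip(est, gt):
        ex, ey = e[0] - me[0], e[1] - me[1]
        gx, gy = g[0] - mg[0], g[1] - mg[1]
        s_cos += gx * ex + gy * ey
        s_sin += gy * ex - gx * ey
    psi = math.atan2(s_sin, s_cos)                        # closed-form optimal yaw
    c, s = math.cos(psi), math.sin(psi)
    t = [mg[0] - (c * me[0] - s * me[1]),                 # t = mg - Rz(psi) me
         mg[1] - (s * me[0] + c * me[1]),
         mg[2] - me[2]]
    return psi, t

def aligned_rmse(est, gt):
    """Position RMSE after 4-DoF alignment of the estimate onto the ground truth."""
    psi, t = align_position_yaw(est, gt)
    c, s = math.cos(psi), math.sin(psi)
    sq = 0.0
    for e, g in zip(est, gt):
        x = c * e[0] - s * e[1] + t[0]
        y = s * e[0] + c * e[1] + t[1]
        z = e[2] + t[2]
        sq += (x - g[0]) ** 2 + (y - g[1]) ** 2 + (z - g[2]) ** 2
    return math.sqrt(sq / len(est))
```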

The codebase also includes a multisensor simulator (extending OpenVINS) to generate synthetic IMU, camera, LiDAR, GNSS, and wheel data—ideal for stress-testing your fusion pipeline before real-world deployment.
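Simulators like this typically corrupt ground-truth signals with a standard discrete-time noise model. A minimal sketch for the gyroscope, assuming continuous-time parameters given as a white-noise density σ_g and a bias random-walk density σ_b: each sample receives white noise with standard deviation σ_g/√Δt, and the bias drifts by a random step with standard deviation σ_b√Δt. The default values are illustrative only, and this is not the MINS simulator's code.

```python
import math
import random

def simulate_gyro(true_rates, dt, gyro_noise_density=1.7e-4, gyro_random_walk=2e-5, seed=0):
    """Corrupt true angular rates [rad/s] with white noise and a random-walk bias.

    true_rates: list of [wx, wy, wz] samples spaced dt seconds apart.
    Returns the noisy measurements and the final bias.
    """
    rng = random.Random(seed)
    sigma_w = gyro_noise_density / math.sqrt(dt)   # discrete white-noise std [rad/s]
    sigma_b = gyro_random_walk * math.sqrt(dt)     # bias increment std per sample [rad/s]
    bias = [0.0, 0.0, 0.0]
    measurements = []
    for w_true in true_rates:
        measurements.append([w + b + rng.gauss(0.0, sigma_w) for w, b in zip(w_true, bias)])
        bias = [b + rng.gauss(0.0, sigma_b) for b in bias]   # bias evolves as a random walk
    return measurements, bias
```

Stress-testing a fusion pipeline then amounts to sweeping these densities (or adding dropouts and time offsets) and checking whether accuracy and NEES degrade gracefully.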

Limitations and Practical Considerations

While powerful, MINS has a few constraints to consider:

- ROS dependency: Designed for ROS 1 (Melodic/Noetic); not compatible with ROS 2 or non-ROS systems without significant porting.

- Eigen version sensitivity: Requires Eigen 3.3.7 or lower due to compatibility with libpointmatcher (used for LiDAR processing). Newer Eigen versions may cause build failures.

- Linux-only: Tested exclusively on Ubuntu 18.04 and 20.04. Support for other OSes isn’t provided.

If your stack aligns with ROS and Ubuntu, these are minor trade-offs for a battle-tested, open-source navigation core.

Summary

MINS delivers what many multisensor navigation systems promise but few achieve: robustness through tight fusion, efficiency through adaptive state management, and reliability through continuous self-calibration. Whether you’re building an autonomous delivery robot that must navigate downtown alleys, a research platform comparing sensor configurations, or a safety-critical system that can’t afford localization failure, MINS provides a scalable, transparent, and high-performance foundation. With its open-source release—including simulation, evaluation, and real-world examples—it lowers the barrier to deploying professional-grade sensor fusion in your next project.