In clinical radiology, interpreting chest X-rays (CXRs) demands more than just identifying abnormalities—it requires synthesizing visual findings, clinical context, patient history, and diagnostic logic into coherent reasoning. Yet, most AI tools today operate in isolation: one model detects pneumonia, another segments the lungs, a third generates reports. This fragmentation creates a “siloed model” problem that hinders real-world clinical adoption.

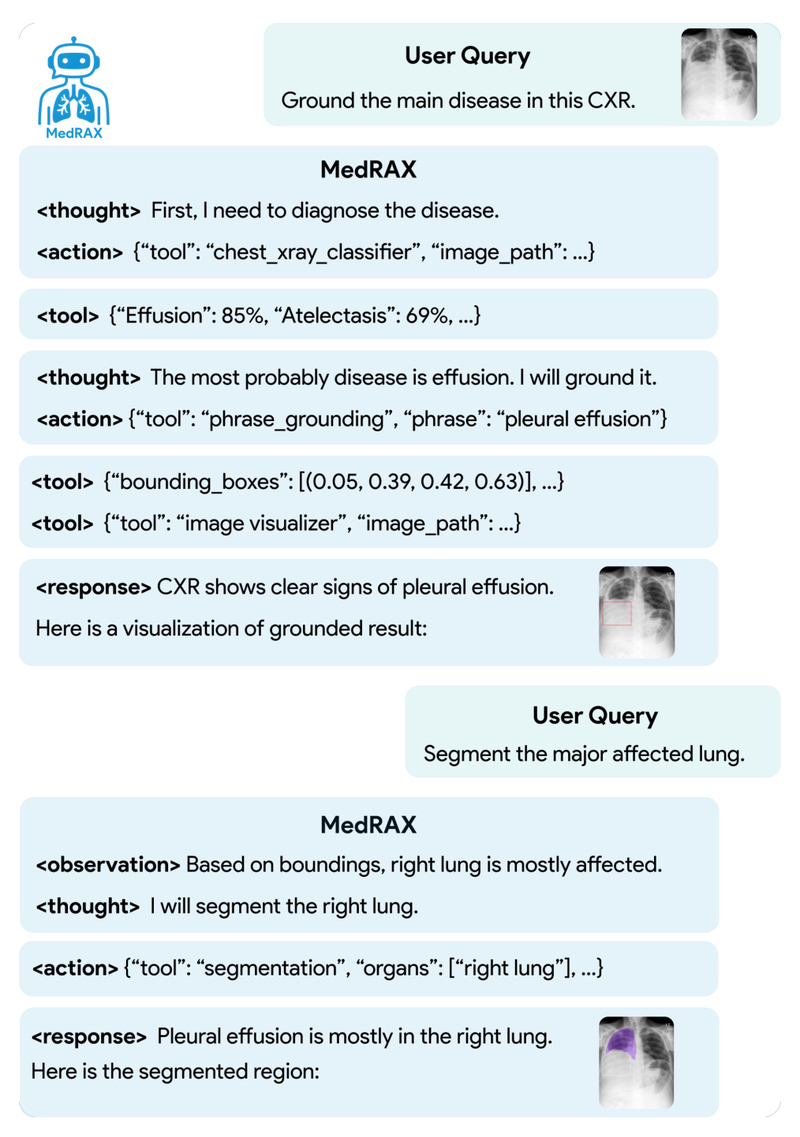

Enter MedRAX: the first versatile medical reasoning agent designed specifically for chest X-ray interpretation. Rather than training a single monolithic model, MedRAX intelligently orchestrates multiple state-of-the-art CXR analysis tools—ranging from disease classification to anatomical segmentation and visual question answering—within a unified framework powered by a vision-capable multimodal large language model (LLM). Crucially, it does all this without requiring any additional training, making it both adaptable and immediately deployable.

Built on LangChain and LangGraph, MedRAX leverages GPT-4o (or any OpenAI-compatible vision LLM) as its reasoning backbone, dynamically selecting and combining specialized tools to answer complex, multi-step medical queries. Whether you’re comparing findings across timepoints, localizing lesions, or generating differential diagnoses, MedRAX treats CXR interpretation as a reasoning process, not just a prediction task.

Why the Medical AI Community Needs MedRAX

Traditional medical AI systems excel at narrow tasks but fail when faced with real clinical complexity. A radiologist doesn’t just say “pneumonia present”; they assess location, severity, associated findings, and implications for treatment. Existing models can’t chain these insights together.

MedRAX solves this by acting as a coordinating intelligence that:

- Understands natural language queries involving visual and clinical reasoning (e.g., “Compare these two X-rays and explain if the effusion has resolved”)

- Automatically routes subtasks to the right expert tool (classification → segmentation → report synthesis)

- Maintains contextual awareness across steps, ensuring coherent final answers

This architecture mirrors how human experts think—modular yet integrated—making MedRAX uniquely suited for real clinical workflows, education, and AI prototyping.
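The routing pattern described above can be sketched in a few lines of Python. This is an illustrative toy, not MedRAX's implementation: the `Tool` and `Agent` classes, the tool names, and the keyword-based `plan` method are all hypothetical stand-ins. In the real system the vision LLM decides which tools to call, whereas here a simple rule plays that role so the example runs without any model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Tool:
    """A named callable that reads shared context and returns a finding."""
    name: str
    run: Callable[[dict], str]


@dataclass
class Agent:
    tools: Dict[str, Tool]
    context: dict = field(default_factory=dict)  # state carried across steps

    def plan(self, query: str) -> List[str]:
        # Hypothetical stand-in for the LLM planner: a keyword rule.
        steps = ["classify"]
        lowered = query.lower()
        if "where" in lowered or "locali" in lowered:
            steps.append("segment")
        steps.append("report")
        return steps

    def answer(self, query: str) -> str:
        self.context["query"] = query
        for step in self.plan(query):
            # Each tool sees earlier outputs through the shared context.
            self.context[step] = self.tools[step].run(self.context)
        return self.context["report"]


# Stub tools returning canned strings; real tools would run models.
TOOLS = {
    "classify": Tool("classify", lambda ctx: "effusion"),
    "segment": Tool("segment", lambda ctx: f"{ctx['classify']} in left lower zone"),
    "report": Tool(
        "report",
        lambda ctx: "Finding: " + ctx.get("segment", ctx["classify"]),
    ),
}

agent = Agent(tools=TOOLS)
print(agent.answer("Where is the abnormality?"))
```

The shared `context` dictionary is what lets a later step (the report) build on an earlier one (the segmentation), which is the "contextual awareness across steps" idea in miniature.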

Key Features That Enable Practical Deployment

Modular, Tool-Agnostic Architecture

MedRAX doesn’t lock you into specific models. Its design allows seamless integration of new or custom tools. Currently supported capabilities include:

- Disease Classification: Using DenseNet-121 (TorchXRayVision) for 18 pathologies

- Anatomical Segmentation: PSPNet trained on ChestX-Det (MedSAM is listed but not yet implemented)

- Visual Grounding: MAIRA-2 for precise localization of findings

- Report Generation: SwinV2 Transformer trained on CheXpert Plus

- Visual QA: CheXagent and LLaVA-Med for complex reasoning

- Synthetic X-ray Generation: RoentGen (manual setup required)

- DICOM Processing & Visualization: Built-in utility tools

You can initialize only the tools you need, reducing resource demands and simplifying deployment.
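One way to picture selective initialization is a registry of factories in which only the requested entries are ever constructed. The sketch below is a hypothetical illustration: the registry keys and the `load_tools` helper are made up for this example and are not MedRAX's actual identifiers. The factories return placeholder strings where real code would load model weights.

```python
from typing import Callable, Dict, Iterable

# Hypothetical registry: each factory would load a model in real code.
# Names here are illustrative, not MedRAX's actual tool identifiers.
TOOL_FACTORIES: Dict[str, Callable[[], object]] = {
    "classifier": lambda: "DenseNet-121 classifier (placeholder)",
    "segmenter": lambda: "PSPNet segmenter (placeholder)",
    "report_generator": lambda: "SwinV2 report generator (placeholder)",
    "vqa": lambda: "CheXagent VQA (placeholder)",
}


def load_tools(selected: Iterable[str]) -> Dict[str, object]:
    """Construct only the requested tools; fail early on unknown names."""
    selected = list(selected)
    unknown = [name for name in selected if name not in TOOL_FACTORIES]
    if unknown:
        raise KeyError(f"Unknown tools: {unknown}")
    # Factories for unselected tools are never called, so their
    # (potentially large) weights are never loaded.
    return {name: TOOL_FACTORIES[name]() for name in selected}


tools = load_tools(["classifier", "segmenter"])
print(sorted(tools))
```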

Production-Ready Interface with Gradio

With a single command (python main.py), you get a fully interactive web interface for testing queries, uploading images, and inspecting results—ideal for demos, clinical validation, or internal tooling.

Flexible Inference Backends

While optimized for GPT-4o with vision, MedRAX supports any OpenAI-compatible API. This includes local LLMs (e.g., via Ollama or LM Studio) or cloud providers like Alibaba Cloud’s Qwen3-VL, giving teams control over cost, latency, and data privacy.
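To make "OpenAI-compatible" concrete, here is a minimal sketch of the request body such a backend expects for a question about one image. It uses only the standard library and sends nothing over the network. The environment variable name `OPENAI_BASE_URL`, the helper `build_chat_request`, and the placeholder image bytes are assumptions for illustration, not details confirmed by the MedRAX source.

```python
import base64
import json
import os


def build_chat_request(image_bytes: bytes, question: str, model: str) -> dict:
    """Build a Chat Completions payload with one image and one question."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Assumed environment variable name; check your deployment's own settings.
base_url = os.environ.get("OPENAI_BASE_URL", "https://api.openai.com/v1")
endpoint = base_url.rstrip("/") + "/chat/completions"

# Placeholder bytes stand in for a real PNG export of the X-ray.
payload = build_chat_request(b"placeholder image bytes", "Is there an effusion?", "gpt-4o")
print(endpoint)
print(json.dumps(payload)[:80])
```

Because only the base URL and model name change between providers, pointing the base URL at a local server (Ollama's OpenAI-compatible endpoint is typically `http://localhost:11434/v1`) switches backends without touching the payload logic.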

Cloud or Local Deployment

Whether you’re running on a GPU workstation or in a secure hospital cloud, MedRAX adapts. Model weights are auto-downloaded from Hugging Face (except RoentGen), and quantization options (8-bit/4-bit) help manage memory on constrained devices.
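A rough back-of-the-envelope calculation shows why quantization matters on constrained devices. The sketch below estimates memory for the weights alone as parameters times bits per parameter; the 7-billion-parameter size is an illustrative assumption, not a figure from MedRAX, and the helper name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


# 7e9 parameters is an illustrative size, not a figure from MedRAX.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gib(7e9, bits):.1f} GiB")
```

For this hypothetical model the weights shrink from about 13 GiB at 16-bit to about 6.5 GiB at 8-bit and 3.3 GiB at 4-bit. Activations, caches, and quantization metadata add to these numbers, so treat them as a lower bound.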

Real-World Use Cases

MedRAX is designed for stakeholders who need complex, explainable, and actionable CXR insights:

- Radiology Teams: Use it as a second reader for multi-step queries (e.g., “Is the nodule new? Measure its size and compare to prior”).

- AI Researchers: Rapidly prototype new reasoning pipelines by plugging in custom tools without retraining the entire system.

- Medical Educators: Demonstrate how AI integrates visual analysis with clinical logic for teaching diagnostic reasoning.

- Healthcare Startups: Accelerate product development with a ready-made, modular CXR reasoning engine.

Because it requires no fine-tuning, MedRAX removes the model-training step from deployment: setup comes down to installing the package, setting an API key, and letting the tool weights download.

Getting Started Is Simple

- Clone the repo and install:

git clone https://github.com/bowang-lab/MedRAX.git
cd MedRAX
pip install -e .

- Set your OpenAI API key in a .env file.

- Optionally specify which tools to load in main.py (skip heavy ones like LLaVA-Med if needed).

- Launch the Gradio app or run batch evaluations using the provided quickstart.py.
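Before launching, it can help to confirm that the API key is actually present. The following is a minimal sketch of such a check, assuming the key is stored under the name `OPENAI_API_KEY` in simple `KEY=VALUE` form. The `parse_env` helper and the example file contents are hypothetical and parse an inline string rather than reading a real file.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Placeholder contents; a real .env file holds your actual key.
example = """
# API credentials
OPENAI_API_KEY="sk-your-key-here"
"""

env = parse_env(example)
if not env.get("OPENAI_API_KEY"):
    raise SystemExit("OPENAI_API_KEY is missing from .env")
print("Found keys:", sorted(env))
```

In practice a library such as python-dotenv handles quoting and edge cases more thoroughly; the hand-rolled parser here only keeps the example dependency-free.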

Model weights for most tools are downloaded automatically. Only RoentGen requires manual setup, and it’s optional.

Rigorous Evaluation with ChestAgentBench

To evaluate its capabilities, the MedRAX team introduced ChestAgentBench, a new benchmark with 2,500 expert-curated, multi-step queries across 7 reasoning categories:

- Detection

- Classification

- Localization

- Comparison

- Relationship inference

- Diagnosis

- Characterization

Built from 675 real clinical cases, this benchmark tests medical reasoning rather than pattern recognition alone. The authors report that MedRAX outperforms both open-source and proprietary models on it, an encouraging sign for real-world use.
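Results on a categorized benchmark like this are usually reported per category as well as overall. The sketch below shows one way to compute per-category accuracy, assuming each query reduces to a category label, a gold answer, and a predicted answer. That record layout, the `accuracy_by_category` helper, and the toy records are assumptions for illustration; they are not ChestAgentBench data or its official scoring code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, str]  # (category, gold answer, predicted answer)


def accuracy_by_category(records: Iterable[Record]) -> Dict[str, float]:
    """Return the fraction of correct predictions within each category."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, gold, predicted in records:
        total[category] += 1
        correct[category] += int(gold == predicted)
    return {c: correct[c] / total[c] for c in total}


# Placeholder records for illustration only; not ChestAgentBench data.
toy = [
    ("Detection", "A", "A"),
    ("Detection", "B", "C"),
    ("Comparison", "D", "D"),
]
for category, acc in accuracy_by_category(toy).items():
    print(f"{category}: {acc:.2f}")
```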

Limitations and Practical Notes

- LLM Dependency: Best performance requires GPT-4o or a capable vision-language model. Local alternatives may reduce accuracy.

- Hardware: GPU recommended; some tools (LLaVA-Med, grounding) are memory-intensive.

- MedSAM Support: Listed but not yet implemented (per official docs).

- RoentGen: Requires manual download and placement of weights.

These trade-offs are clearly documented, allowing teams to assess fit for their infrastructure and compliance policies.

Summary

MedRAX represents a paradigm shift in medical AI: instead of chasing ever-larger models, it composes existing expert tools through intelligent reasoning. By unifying classification, segmentation, reporting, and visual QA into a single agent that understands clinical context, it bridges the gap between academic AI and practical radiology workflows. With easy installation, modular design, and state-of-the-art performance on a rigorous clinical benchmark, MedRAX is not just another model—it’s a scalable framework for the next generation of medical reasoning systems.

For developers, researchers, and clinical AI teams working with chest X-rays, MedRAX offers a rare combination: cutting-edge capability, immediate usability, and architectural flexibility—all without the need for retraining.