Reinforcement learning (RL) holds immense promise for solving complex decision-making problems—from robotics and game playing to resource optimization and autonomous systems. Yet, despite rapid algorithmic advances, RL research and development have long been hampered by a fundamental inconsistency: the lack of a unified interface for environments. Without standardization, comparing algorithms becomes unreliable, reproducing results is difficult, and integrating new ideas with existing codebases is unnecessarily time-consuming.

Enter Gymnasium, an open-source Python library that provides a clean, consistent, and well-maintained API for RL environments. Gymnasium is a fork of OpenAI's Gym and is maintained by the Farama Foundation. It establishes a common language between learning agents and environments, enabling researchers, educators, and engineers to focus on innovation rather than wrestling with incompatible implementations.

By offering a standard set of environments, strict versioning for reproducibility, and tools for easy customization, Gymnasium has become the de facto foundation for modern RL experimentation and education.

Why Standardization Matters in Reinforcement Learning

In many machine learning domains—like supervised learning—datasets and evaluation protocols are well-defined and widely shared. RL lacks this by default: every new environment could theoretically implement its own observation format, action space, reward structure, and termination logic. This fragmentation slows progress.

Gymnasium solves this by enforcing a simple, predictable interface:

- Every environment exposes an action_space and observation_space.

- Interaction follows a universal loop: reset() → step(action) → repeat until termination.

- Step outputs always include observation, reward, terminated, truncated, and info.

This consistency means an RL algorithm written for one Gymnasium-compatible environment, say CartPole, can often run on another, like LunarLander, with zero code changes, provided it reads observation and action dimensions from the environment's spaces rather than hard-coding them. That interoperability dramatically accelerates prototyping, benchmarking, and knowledge transfer across the RL community.

Core Features That Enable Reliable RL Development

A Unified API for Seamless Integration

At its heart, Gymnasium defines a minimal but powerful contract between agents and environments. Whether you’re using a textbook tabular Q-learning implementation or a state-of-the-art deep RL library like CleanRL, the environment interaction remains identical. This decoupling of algorithm and environment is essential for modular, reusable code.

Diverse Built-in Environment Families

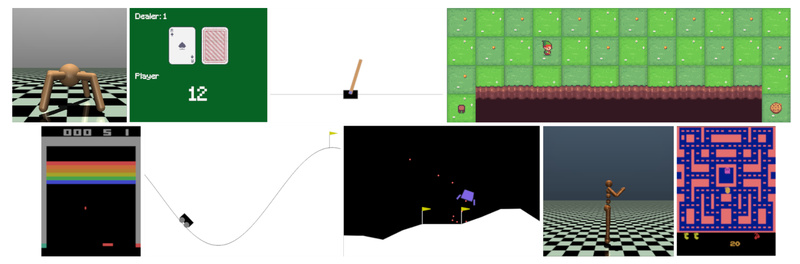

Gymnasium ships with a curated collection of environments spanning multiple complexity levels:

- Toy Text: Extremely simple (e.g., FrozenLake), ideal for debugging and teaching core concepts.

- Classic Control: Physics-based problems like CartPole and MountainCar, perfect for testing control algorithms.

- Box2D & MuJoCo: More advanced continuous-control tasks involving simulated physics.

- Atari: High-dimensional pixel-based environments from the Atari 2600, used in deep RL benchmarks.

Each family can be installed on-demand (e.g., pip install "gymnasium[atari]"), avoiding bloated dependencies.

Reproducibility Through Strict Versioning

Every environment includes a version suffix (e.g., CartPole-v1). If a change could affect learning dynamics—such as reward scaling or observation representation—the version number increments. This ensures that results reported in papers or internal experiments remain reproducible over time, a critical requirement for scientific rigor.

Tools for Customization and Compatibility

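Need to build your own environment? Gymnasium provides clear abstractions to define observation spaces, action spaces, and transition logic while staying compliant with the standard API. For legacy or third-party environments not built for Gymnasium, a compatibility layer can often bridge the gap. Gymnasium releases before 1.0 exposed this as the apply_api_compatibility flag in gymnasium.make(); the flag is not part of gymnasium.make() in 1.0 and later, so check the compatibility documentation for your installed version.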

Ideal Use Cases

Gymnasium excels in several key scenarios:

- Algorithm Prototyping: Quickly test new RL ideas against standardized baselines without writing environment boilerplate.

- Benchmarking: Compare your agent’s performance against published results using identical environment versions.

- Education: Teach RL fundamentals using simple, well-documented environments like Taxi or CliffWalking.

- Production Integration: Leverage robust, tested environments as components in larger systems (e.g., simulating user behavior in recommendation engines).

Its widespread adoption also means extensive community support and integration with leading RL libraries, including CleanRL, Stable Baselines3, and RLlib.

Getting Started Is Effortless

After installing with pip install gymnasium, using it takes just a few lines of code:

    import gymnasium as gym

    env = gym.make("CartPole-v1")
    observation, info = env.reset(seed=42)

    for _ in range(1000):
        action = env.action_space.sample()  # Random policy
        observation, reward, terminated, truncated, info = env.step(action)
        if terminated or truncated:
            observation, info = env.reset()

    env.close()

This minimal example demonstrates the full interaction loop. Advanced users can replace random actions with learned policies, wrap environments with monitoring tools, or chain multiple environments together—all while relying on the same underlying API.

Limitations and Practical Considerations

While Gymnasium provides a robust foundation, users should note a few practical points:

- Optional dependencies: Core installation (pip install gymnasium) includes only basic environments. Families like Atari, MuJoCo, or Box2D require extra install flags.

- Platform support: Official testing and support target Linux and macOS (Python 3.10–3.13). Windows is community-supported.

- Third-party environments: While many external environments adopt the Gymnasium API, version mismatches may occur. Gymnasium releases before 1.0 accepted gymnasium.make(..., apply_api_compatibility=True) to wrap environments written against the old Gym step API; the flag is not available in 1.0 and later, so check the compatibility documentation for your installed version.

These considerations are minor trade-offs for the immense gains in standardization and reproducibility.

Summary

Gymnasium addresses a critical bottleneck in reinforcement learning: the absence of a reliable, standardized interface between agents and environments. By providing a consistent API, a rich suite of benchmark environments, strict version control, and easy extensibility, it removes infrastructure friction and lets practitioners focus on what matters—designing better learning algorithms, teaching core concepts, or deploying RL in real applications. For anyone entering or advancing in the RL space, Gymnasium isn’t just a tool—it’s the foundation upon which modern RL is built.