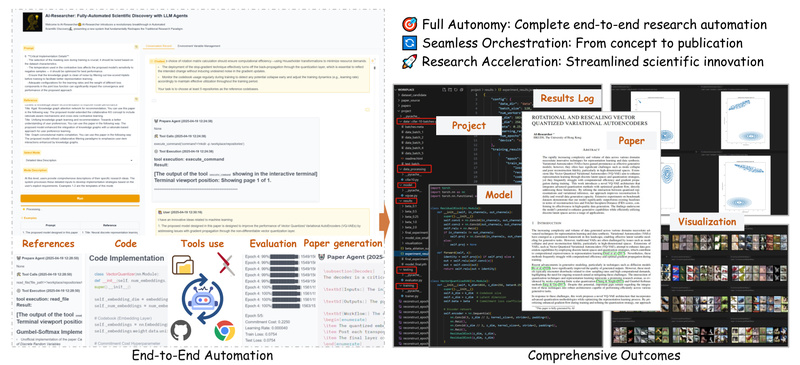

Scientific research in artificial intelligence is increasingly complex, time-consuming, and resource-intensive. From synthesizing hundreds of papers to prototyping novel algorithms and writing reproducible manuscripts, even small AI/ML projects can demand weeks or months of effort. Enter AI-Researcher—a fully autonomous system that executes the entire scientific research pipeline with minimal human intervention.

Developed by HKUDS and accepted as a NeurIPS 2025 Spotlight paper, AI-Researcher transforms how AI-driven discovery is conducted. It doesn’t just assist—it independently performs literature review, generates hypotheses, implements and validates algorithms, and produces publication-ready academic papers. For project leads, technical decision-makers, and solo researchers, this means faster iteration, reduced R&D overhead, and consistent, high-quality outputs without scaling engineering or research teams.

What AI-Researcher Does—and Why It’s Different

Unlike code-generation tools or static literature summarizers, AI-Researcher operates as an autonomous research agent. It orchestrates a closed-loop workflow that mimics how expert researchers think, build, and write—but at machine speed.

At its core, the system supports two intuitive input modes:

- Level 1: Detailed Idea Description – You provide a precise research plan (e.g., “Improve VQ-VAE gradient flow via rotation-based quantization”), and AI-Researcher implements, tests, and documents it.

- Level 2: Reference-Based Ideation – You submit a list of relevant papers (e.g., 5–10 foundational works on vector quantization), and AI-Researcher analyzes them to propose, develop, and validate a novel extension.

This dual-mode flexibility makes it equally valuable whether you have a clear hypothesis or just a research direction.
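To make the two modes concrete, here is a minimal Python sketch of how a task request could be modeled. The `ResearchTask` class, its field names, and the rule that a task supplies exactly one of the two inputs are illustrative assumptions. They are not AI-Researcher's actual input schema; the real instance files ship with the project's benchmark suite.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ResearchTask:
    """Hypothetical container for one research request (not the project's real schema)."""
    category: str                                        # e.g. "vq", "gnn", "recommendation"
    idea: Optional[str] = None                           # Level 1: a detailed idea description
    references: List[str] = field(default_factory=list)  # Level 2: reference paper titles

    def level(self) -> int:
        """Return 1 for idea-driven tasks, 2 for reference-driven tasks."""
        if self.idea and not self.references:
            return 1
        if self.references and not self.idea:
            return 2
        raise ValueError("provide either an idea (Level 1) or references (Level 2), not both or neither")


plan_task = ResearchTask(
    category="vq",
    idea="Improve VQ-VAE gradient flow via rotation-based quantization",
)
idea_task = ResearchTask(
    category="vq",
    references=["Neural Discrete Representation Learning"],  # placeholder reference list
)
print(plan_task.level(), idea_task.level())  # prints: 1 2
```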

Key Capabilities That Accelerate AI Innovation

AI-Researcher’s architecture integrates seven core functions into a seamless pipeline:

- Automated Literature Review: Aggregates and synthesizes papers from arXiv, GitHub, and major academic databases, filtering by impact and relevance.

- Novel Idea Generation: Identifies gaps, limitations, and emerging trends to formulate testable hypotheses.

- Algorithm Design & Implementation: Translates abstract ideas into functional, modular code with proper engineering practices.

- Validation & Refinement: Runs experiments, evaluates metrics, and iteratively improves the implementation based on performance feedback.

- Result Analysis: Interprets experimental outcomes with statistical rigor and contextual awareness.

- Manuscript Creation: Generates complete academic papers—including abstracts, methodology sections, results, and references—in publication-ready format.

- Reproducible Workspaces: All code, logs, and artifacts are organized in containerized environments for full reproducibility.
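Reproducibility is easier to reason about with a concrete mechanism. One common technique, shown here as an assumption rather than a description of how AI-Researcher organizes its workspaces, is to record a SHA-256 digest for every file in a run directory so that later changes can be detected. The directory layout (`code/`, `logs/`) and the helper names are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def build_manifest(workspace: Path) -> dict:
    """Map each file's path (relative to the workspace) to its SHA-256 digest."""
    manifest = {}
    for path in sorted(workspace.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(workspace).as_posix()] = digest
    return manifest


def verify_manifest(workspace: Path, manifest: dict) -> list:
    """Return recorded files whose contents changed or that are now missing."""
    current = build_manifest(workspace)
    return sorted(name for name, digest in manifest.items() if current.get(name) != digest)


with tempfile.TemporaryDirectory() as tmp:
    ws = Path(tmp)
    (ws / "code").mkdir()
    (ws / "logs").mkdir()
    (ws / "code" / "model.py").write_text("print('train')\n")
    (ws / "logs" / "run.log").write_text("example log line\n")
    manifest = build_manifest(ws)
    print(json.dumps(manifest, indent=2))
    # Simulate an untracked edit to a logged artifact.
    (ws / "logs" / "run.log").write_text("edited\n")
    changed = verify_manifest(ws, manifest)
    print(changed)  # prints: ['logs/run.log']
```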

Critically, these stages are not siloed. AI-Researcher continuously refines its approach: if validation fails, it diagnoses issues, adjusts hyperparameters, or even rethinks the algorithmic design—mirroring real-world research iteration.
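The closed loop can be sketched as an evaluate-and-adjust cycle. This is a simplified illustration under stated assumptions: `evaluate` and `adjust` are hypothetical callbacks standing in for running an experiment and for the system's diagnosis step, and the toy objective and learning-rate halving rule exist only to make the example runnable. AI-Researcher's actual refinement is LLM-driven and can also revise the algorithm itself, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Attempt:
    config: Dict[str, float]
    score: float


def refine(
    evaluate: Callable[[Dict[str, float]], float],
    adjust: Callable[[Dict[str, float], float], Dict[str, float]],
    config: Dict[str, float],
    target: float,
    max_rounds: int = 5,
) -> List[Attempt]:
    """Evaluate; if the score misses the target, adjust the config and retry."""
    history: List[Attempt] = []
    for _ in range(max_rounds):
        score = evaluate(config)
        history.append(Attempt(dict(config), score))
        if score >= target:
            break  # validation passed, so stop iterating
        config = adjust(config, score)  # the "diagnose and adjust" step
    return history


# Toy stand-ins: the score peaks when the learning rate is 0.01.
def toy_evaluate(cfg: Dict[str, float]) -> float:
    return 1.0 - abs(cfg["lr"] - 0.01) * 10


def halve_lr(cfg: Dict[str, float], score: float) -> Dict[str, float]:
    return {**cfg, "lr": cfg["lr"] / 2}


history = refine(toy_evaluate, halve_lr, {"lr": 0.08}, target=0.95)
for attempt in history:
    print(attempt.config["lr"], round(attempt.score, 3))
```

Capping the loop with `max_rounds` keeps a failing idea from consuming unbounded compute, which matters when each evaluation is a full training run.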

Real Problems It Solves for Technical Teams

AI-Researcher directly addresses four persistent bottlenecks in applied AI R&D:

- Slow literature synthesis: Manually reviewing dozens of papers across CV, NLP, or recommender systems delays ideation. AI-Researcher compresses this into minutes.

- Prototyping friction: Turning a conceptual improvement (e.g., “a new GNN attention mechanism”) into runnable code often stalls due to engineering complexity. AI-Researcher handles the full stack.

- Inconsistent validation: Without standardized evaluation, experiments may lack rigor. The system enforces structured testing against benchmarks.

- Technical writing overhead: Documenting results consumes significant time. AI-Researcher auto-generates clear, well-structured papers that meet academic standards.

For lean teams or individual contributors, this transforms capacity: what once required a multi-person research sprint can now be explored autonomously overnight.
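Structured validation usually means repeating each experiment over several random seeds and comparing against a baseline under identical conditions. The sketch below illustrates that idea in plain Python. `toy_run` is a hypothetical stand-in for a real training job, and the 0.80 base value and 0.02 improvement are arbitrary toy numbers, not AI-Researcher results.

```python
import random
import statistics
from typing import Callable, Dict, List


def evaluate_over_seeds(run: Callable[[int], float], seeds: List[int]) -> Dict[str, float]:
    """Run one experiment per seed and summarize the metric as mean and sample std."""
    scores = [run(seed) for seed in seeds]
    return {"mean": statistics.mean(scores), "std": statistics.stdev(scores)}


def toy_run(method_bonus: float) -> Callable[[int], float]:
    """Hypothetical experiment: a noisy accuracy-like metric, seeded for repeatability."""
    def run(seed: int) -> float:
        rng = random.Random(seed)
        return 0.80 + method_bonus + rng.gauss(0, 0.01)
    return run


seeds = [0, 1, 2, 3, 4]
baseline = evaluate_over_seeds(toy_run(0.00), seeds)
candidate = evaluate_over_seeds(toy_run(0.02), seeds)
for name, summary in [("baseline", baseline), ("candidate", candidate)]:
    print(f"{name}: {summary['mean']:.3f} ± {summary['std']:.3f}")
```

Sharing the same seed list between baseline and candidate means both see identical noise, so the measured gap reflects the method rather than luck.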

Ideal Use Cases and Target Domains

AI-Researcher excels in code-based, data-driven AI research within four core domains covered by its benchmark suite (Scientist-Bench):

- Vector Quantization (VQ): e.g., enhancing VQ-VAEs with novel gradient propagation or codebook management strategies.

- Graph Neural Networks (GNNs): e.g., designing scalable node-classification models for heterophilous graphs.

- Recommendation Systems: e.g., integrating contrastive learning with meta-networks for personalized user-item interaction modeling.

- Diffusion and Flow Models: e.g., prototyping rectified flows or consistency-based generative architectures.

It’s particularly powerful when you need to rapidly validate a hypothesis, reproduce and extend a recent paper, or systematically explore variations of an algorithm (e.g., testing multiple quantization strategies across datasets).
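Systematic exploration of algorithm variants typically starts from an explicit experiment grid. A minimal sketch, assuming hypothetical quantizer and dataset names, of how such a grid could be enumerated with `itertools.product` before each configuration is handed to an experiment runner:

```python
from itertools import product
from typing import Dict, List

# Hypothetical variant and dataset names, chosen only for illustration.
QUANTIZERS = ["nearest", "rotation", "residual"]
DATASETS = ["dataset_a", "dataset_b"]


def plan_sweep(quantizers: List[str], datasets: List[str], seeds: int) -> List[Dict[str, object]]:
    """Enumerate every (quantizer, dataset, seed) combination as one experiment config."""
    return [
        {"quantizer": q, "dataset": d, "seed": s}
        for q, d, s in product(quantizers, datasets, range(seeds))
    ]


configs = plan_sweep(QUANTIZERS, DATASETS, seeds=3)
print(len(configs))  # prints: 18
print(configs[0])    # prints: {'quantizer': 'nearest', 'dataset': 'dataset_a', 'seed': 0}
```

Enumerating the grid up front makes the sweep's size, and therefore its compute cost, visible before anything runs.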

Note: The system is not suited for non-AI domains (e.g., biology wet labs) or tasks requiring physical sensors/hardware—its strength lies in computational, simulation-based research.

Getting Started Without Deep Technical Overhead

Despite its sophistication, AI-Researcher prioritizes accessibility:

- Installation: Choose between a lightweight `uv` environment setup or Docker containerization; both are documented with one-command workflows.

- Configuration: Provide API keys for any supported LLM provider (e.g., OpenRouter, Anthropic) via a `.env` file. GPU access is configurable but optional for lightweight tasks.

- Execution: Launch research via simple CLI commands:

  - For Level 1 (idea-driven): `python run_infer_plan.py --instance_path ./benchmark/final/vq/rotated_vq.json ...`

  - For Level 2 (paper-driven): `python run_infer_idea.py --instance_path ./benchmark/final/gnn/scalable_gnn.json ...`

- Interaction: Use the included Gradio-based Web GUI for visual monitoring, parameter tuning, and result inspection, with no terminal required.
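For scripted or batch use, the two entry points can be wrapped in a small helper. This is a hedged sketch: only the script names and the `--instance_path` flag come from the commands in this article. The remaining arguments, elided there as `...`, are not reproduced, so a real run will likely need them added from the project's documentation. `build_command` and `launch` are hypothetical helpers, and `launch` defaults to a dry run that only prints the command.

```python
import shlex
import subprocess
from typing import List

# Script names taken from the article's CLI examples; the elided extra
# flags ("...") are deliberately not guessed here.
SCRIPTS = {1: "run_infer_plan.py", 2: "run_infer_idea.py"}


def build_command(level: int, instance_path: str) -> List[str]:
    """Return the argv for a Level 1 (idea-driven) or Level 2 (paper-driven) run."""
    if level not in SCRIPTS:
        raise ValueError(f"unknown level: {level}")
    return ["python", SCRIPTS[level], "--instance_path", instance_path]


def launch(level: int, instance_path: str, dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    cmd = build_command(level, instance_path)
    print(shlex.join(cmd))
    if not dry_run:
        # Assumes the repository checkout is the current working directory.
        subprocess.run(cmd, check=True)


launch(1, "./benchmark/final/vq/rotated_vq.json")
launch(2, "./benchmark/final/gnn/scalable_gnn.json")
```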

The benchmark suite (./benchmark/final/) provides ready-to-run templates across all supported categories, enabling immediate experimentation.
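Because the example templates follow a `category/instance.json` layout (as in `./benchmark/final/vq/rotated_vq.json`), a short script can inventory what is available before launching runs. This sketch assumes every instance is a `.json` file exactly one directory below `./benchmark/final/`; `list_instances` is a hypothetical helper.

```python
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def list_instances(root: Path) -> Dict[str, List[str]]:
    """Group benchmark instance files by their category directory (e.g. vq, gnn)."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for path in sorted(root.glob("*/*.json")):
        groups[path.parent.name].append(path.name)
    return dict(groups)


if __name__ == "__main__":
    root = Path("./benchmark/final")
    if root.is_dir():
        for category, files in list_instances(root).items():
            print(f"{category}: {len(files)} instance(s)")
    else:
        print(f"{root} not found; run this from the repository root")
```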

Current Limitations and Practical Considerations

While powerful, AI-Researcher has boundaries to acknowledge:

- LLM dependency: Quality scales with the reasoning capability of the underlying language model (e.g., Claude 3.5, Gemini 2.5). Costs and rate limits apply.

- Domain scope: Tasks must align with pre-defined research categories (e.g., `vq`, `gnn`, `recommendation`). Custom domains require benchmark extension.

- Hardware requirements: GPU acceleration is recommended for training-intensive tasks (configurable via the `GPUS` env var), though CPU-only execution is possible for lightweight validation.

- Input quality matters: While domain expertise isn’t mandatory, well-curated reference papers or clear prompts significantly improve output novelty and correctness.
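The configuration described in this article (API keys in a `.env` file, GPUs selected through the `GPUS` variable) can be illustrated with a small stdlib-only parser. This is a sketch under assumptions: `LLM_API_KEY` is a placeholder name, and `GPUS` is assumed to hold comma-separated device indices, which may not match the project's actual format, so check its `.env` template first. In practice a library such as python-dotenv is the more robust choice.

```python
from typing import Dict, List


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]  # drop one layer of matching quotes
        values[key.strip()] = value
    return values


def gpu_devices(env: Dict[str, str]) -> List[int]:
    """Assumed format: GPUS is comma-separated device indices; empty or unset means CPU-only."""
    raw = env.get("GPUS", "").strip()
    return [int(part) for part in raw.split(",") if part.strip()]


example = """
# Hypothetical .env contents; key names other than GPUS are placeholders.
LLM_API_KEY=sk-placeholder
GPUS="0,1"
"""
env = parse_env(example)
devices = gpu_devices(env)
print("GPUs:", devices if devices else "none (CPU-only)")  # prints: GPUs: [0, 1]
```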

Most of these are design trade-offs rather than flaws: the system favors depth over breadth in high-impact AI subfields.

Evidence of Performance: Built for Research-Grade Output

AI-Researcher isn’t just another demo—it’s rigorously evaluated. Its companion benchmark, Scientist-Bench, uses human-written papers as ground truth across four AI domains. Evaluation metrics include:

- Novelty: How meaningfully the work extends prior art

- Experimental rigor: Completeness of ablation studies, baselines, and metrics

- Theoretical soundness: Correctness of mathematical formulation

- Writing quality: Clarity, structure, and academic tone
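These evaluation dimensions can be pictured as a per-dimension score table aggregated across reviewers. The sketch below is an illustration built on assumptions: the 1 to 10 scale, the unweighted averaging, and the sample scores are all invented for the example and do not reflect how Scientist-Bench actually scores papers.

```python
import statistics
from typing import Dict, List

DIMENSIONS = ["novelty", "experimental_rigor", "theoretical_soundness", "writing_quality"]


def summarize(reviews: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each dimension across reviewers, plus an unweighted overall mean."""
    summary = {dim: statistics.mean(r[dim] for r in reviews) for dim in DIMENSIONS}
    summary["overall"] = statistics.mean(summary[dim] for dim in DIMENSIONS)
    return summary


# Made-up scores on an assumed 1-10 scale, for illustration only.
reviews = [
    {"novelty": 6, "experimental_rigor": 7, "theoretical_soundness": 8, "writing_quality": 7},
    {"novelty": 8, "experimental_rigor": 5, "theoretical_soundness": 6, "writing_quality": 9},
]
result = summarize(reviews)
print(result)
```

Keeping the per-dimension averages alongside the overall mean preserves the information that a single aggregate would hide, such as strong writing masking weak experimental rigor.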

In the authors’ own evaluations, AI-Researcher achieved high implementation success rates and produced manuscripts that expert reviewers rated as approaching human quality, evidence that it can deliver research-grade output within these domains.

Summary

AI-Researcher redefines what’s possible in autonomous scientific discovery. By automating the full research lifecycle, from insight to implementation to publication, it empowers technical teams to explore more ideas, validate them faster, and document results consistently. If your work involves iterative algorithm development in AI/ML, especially in vector quantization, graph neural networks, recommender systems, or diffusion and flow models, AI-Researcher offers a compelling force multiplier.

With open-source code, a user-friendly interface, and a NeurIPS 2025 Spotlight paper behind it, AI-Researcher is not just a tool for the future. It’s ready to accelerate your research today.