In many real-world scenarios—whether you’re analyzing patient outcomes in healthcare, consumer behavior in economics, or system failures in engineering—you can’t run controlled experiments. Yet, you still need to understand not just what is correlated, but what causes what. This is where causal discovery becomes essential.

Causal-learn is a modern, open-source Python library designed to uncover causal relationships from purely observational data. Built for both researchers and practitioners, it brings together a wide array of classical and cutting-edge causal discovery algorithms—all within a native Python environment. Unlike older tools written in Java or R, Causal-learn integrates seamlessly into today’s data science and machine learning workflows, requiring no external runtime or complex setup.

If your work depends on moving beyond correlation to identify actionable drivers, Causal-learn offers a practical, flexible, and well-documented path forward.

Why Causal Discovery Matters

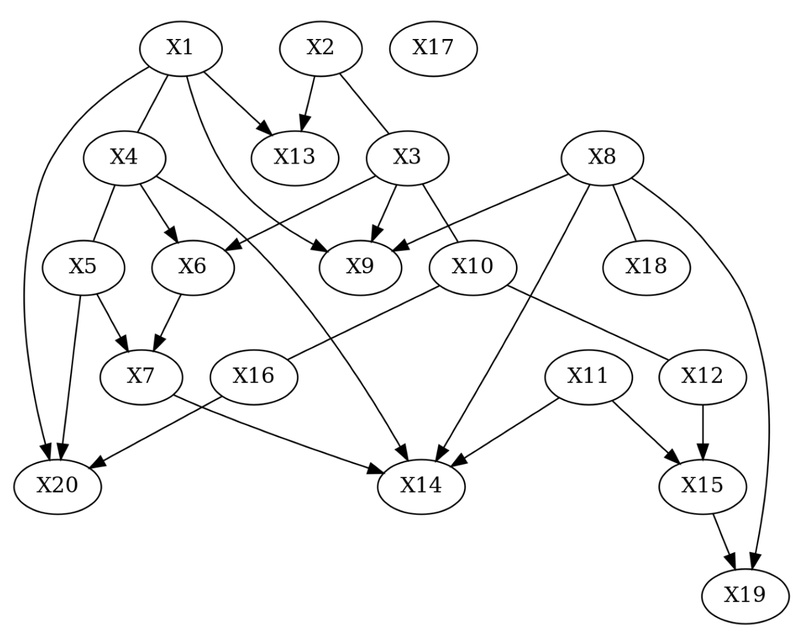

Traditional machine learning excels at prediction but often falls short when it comes to explanation or intervention. For example, knowing that ice cream sales and drowning incidents are correlated doesn’t tell you whether one causes the other—or if a hidden factor (like hot weather) explains both. Causal discovery methods aim to infer the underlying causal structure—typically represented as a directed acyclic graph (DAG)—from data alone, under certain assumptions.

This capability is invaluable for:

- Generating scientific hypotheses

- Designing robust policies or interventions

- Debugging complex systems by identifying root causes

- Building interpretable, generalizable models

Causal-learn provides the algorithmic toolkit to make this possible without requiring randomized trials.

Key Features That Set Causal-Learn Apart

Comprehensive Algorithm Support

Causal-learn implements multiple families of causal discovery methods:

- Constraint-based algorithms (e.g., PC, FCI) that use conditional independence tests

- Score-based methods (e.g., GES) that optimize a scoring criterion over graph structures

- Functional causal models (e.g., LiNGAM, ANM) that impose structural assumptions on data-generating mechanisms

- Granger causality for time-series data

- Permutation-based and hidden causal representation learning approaches for more advanced use cases

This breadth ensures you can select the right method for your data type and assumptions.

Native Python Experience

Unlike the widely used Tetrad (a Java-based causal inference suite), Causal-learn is written entirely in Python. This means:

- No Java Virtual Machine (JVM) dependencies

- Easy installation via

pip - Natural integration with pandas, NumPy, scikit-learn, and other staples of the Python data stack

For users who still need access to Tetrad’s extensive legacy algorithms, the project maintains guidance on using py-tetrad to bridge the two ecosystems.

Developer-Friendly and Modular Design

The library isn’t just for running off-the-shelf algorithms. It exposes reusable building blocks:

- A suite of independence tests (e.g., Fisher-Z, kernel-based, discrete)

- Score functions for structure learning

- Graph utilities for manipulation and evaluation

- Evaluation metrics to compare inferred graphs against ground truth

This modularity empowers developers to prototype new causal discovery methods or customize existing ones.

Accessible for Non-Experts

With clear APIs and thorough documentation, Causal-learn lowers the barrier to entry. Running a basic PC algorithm requires just a few lines of code:

from causallearn.search.ConstraintBased.PC import pc cg = pc(data) # data is a NumPy array or pandas DataFrame cg.draw_pydot_graph() # visualize the causal graph

Tutorials and runnable examples (e.g., TestPC.py, TestGES.py in the tests/ directory) further accelerate onboarding.

Ideal Use Cases

Causal-learn shines in domains where experimentation is impractical, unethical, or too costly:

- Healthcare: Uncover potential causal pathways between treatments, biomarkers, and outcomes from electronic health records.

- Economics & Social Science: Infer policy effects or behavioral drivers from survey or observational datasets.

- Operations & Engineering: Diagnose root causes of system anomalies using logs or sensor data.

- Scientific Research: Generate testable hypotheses about gene regulation, climate dynamics, or ecological interactions.

In all these settings, Causal-learn helps turn passive observations into structured causal knowledge.

Getting Started Is Simple

Installation is straightforward:

pip install causal-learn

Prerequisites include standard scientific Python packages (NumPy, pandas, scikit-learn, etc.), and optional visualization tools like Graphviz and matplotlib.

Once installed, users can:

- Load observational data (as a pandas DataFrame or NumPy array)

- Choose a causal discovery method (e.g., PC, GES, LiNGAM)

- Run the algorithm

- Visualize or export the resulting causal graph

- Use utility functions for further analysis or validation

The official documentation and example scripts provide step-by-step guidance for common workflows.

Limitations and Considerations

While powerful, Causal-learn is not a magic bullet. Users should be aware of several important caveats:

- Assumptions matter: Most methods assume causal sufficiency (no unmeasured confounders), faithfulness, and correct model specification. Violations can lead to incorrect graphs.

- Data quality is critical: Causal discovery is sensitive to noise, sample size, and measurement error. Poor data often yields unreliable results.

- Interpretation requires care: The output is a hypothesized causal structure—not proven truth. Domain expertise is essential to validate findings.

- Active development: As a rapidly evolving project, APIs may change between versions. Check release notes when upgrading.

The library is best used as part of an iterative, hypothesis-driven process—not as a one-shot black box.

How It Compares to Alternatives

The most prominent alternative is Tetrad, a mature Java-based platform with decades of algorithm development. While Tetrad offers more legacy methods, its Java foundation creates friction in modern Python-centric pipelines.

Causal-learn addresses this by:

- Providing native Python implementations of key algorithms

- Offering cleaner, more intuitive APIs for Python users

- Enabling easier integration with ML libraries and cloud infrastructure

For cases where a specific Tetrad algorithm isn’t yet ported, the Causal-learn team recommends using py-tetrad to call Java code from Python—giving users the best of both worlds.

Summary

Causal-learn fills a critical gap in the Python data science ecosystem: reliable, accessible, and extensible tools for causal discovery from observational data. By combining methodological breadth, ease of use, and developer flexibility, it empowers teams to move beyond correlation and toward actionable causal understanding. Whether you’re exploring new scientific questions or optimizing real-world systems, Causal-learn provides a solid foundation for evidence-based decision-making—without the need for controlled experiments.

With active development, comprehensive documentation, and strong academic backing, it’s a compelling choice for any project where understanding why matters as much as predicting what.