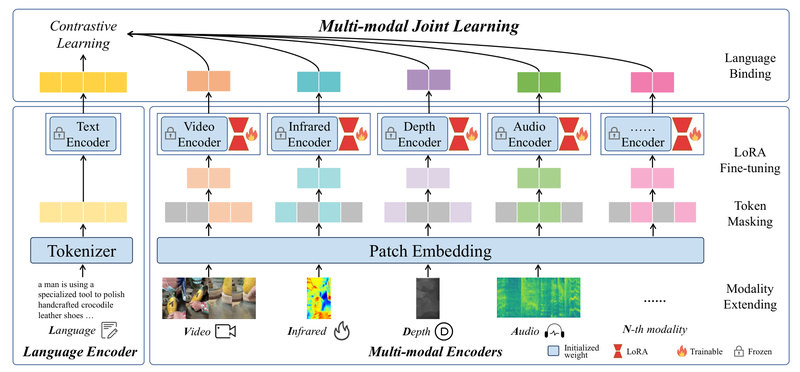

Imagine building an AI system that understands not just images and text, but also video, audio, infrared (thermal), and depth data, all…

Cross-Modal Retrieval

Chinese CLIP: Enable Zero-Shot Chinese Vision-Language AI Without Custom Training

Multimodal AI models like OpenAI’s CLIP have transformed how developers build systems that understand both images and text. But there’s…

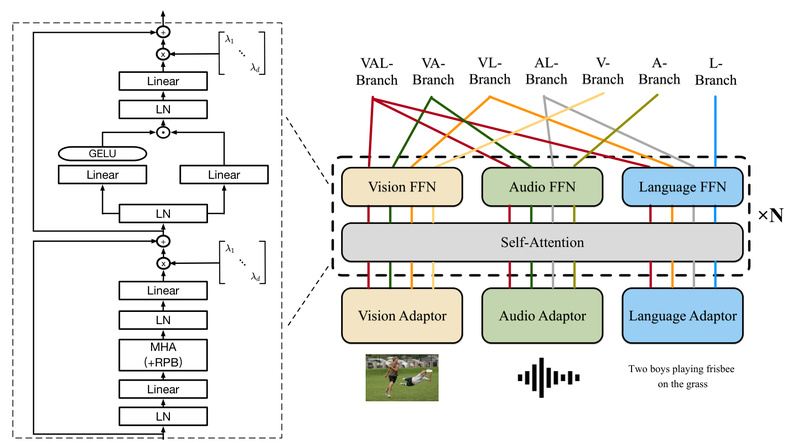

ONE-PEACE: A Single Model for Vision, Audio, and Language with Zero Pretraining Dependencies

In today’s AI landscape, most multimodal systems are built by stitching together specialized models: separate vision encoders, audio processors, and language…

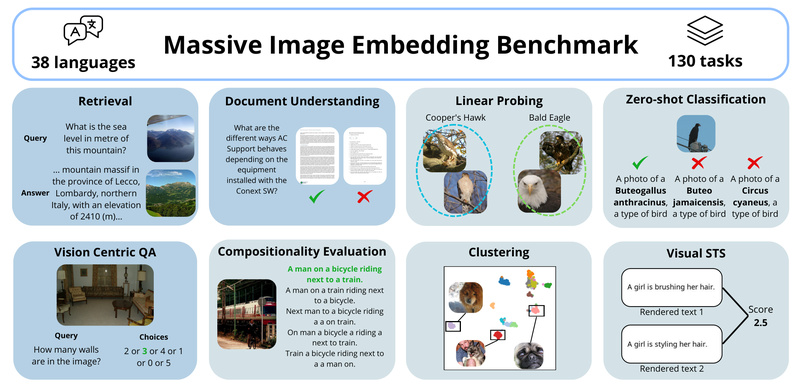

MIEB: Benchmark 130 Image & Image-Text Tasks Across 38 Languages for Reliable Model Evaluation

Evaluating image embedding models has long been a fragmented and inconsistent process. Researchers and engineers often test models on narrow,…