Large language models (LLMs) like ChatGPT are transforming how we interact with AI—but they often “make things up.” These fabricated,…

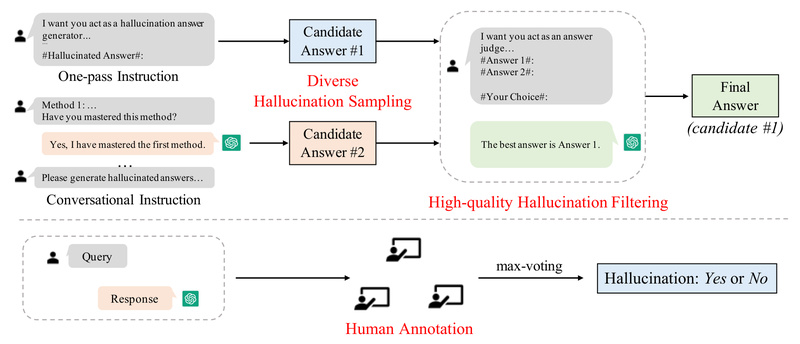

Hallucination Detection

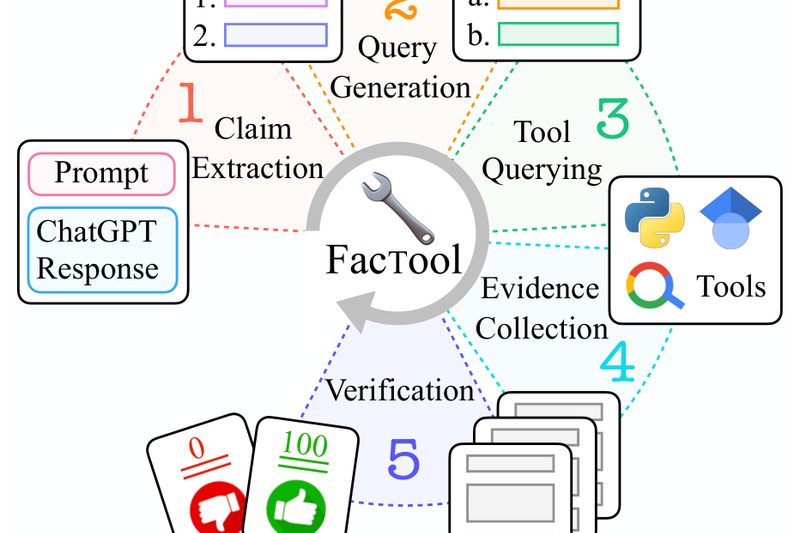

FacTool: Automatically Detect Factual Errors in LLM Outputs Across Code, Math, QA, and Scientific Writing

Large language models (LLMs) like ChatGPT and GPT-4 have transformed how we generate text, write code, solve math problems, and…

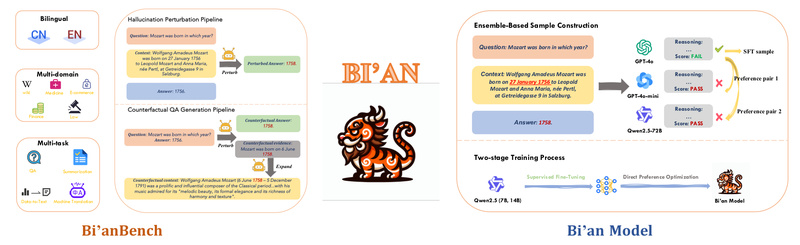

Bi’an: Detect RAG Hallucinations Accurately with a Bilingual Benchmark and Lightweight Judge Models

Retrieval-Augmented Generation (RAG) has become a go-to strategy for grounding large language model (LLM) responses in real-world knowledge. By pulling…

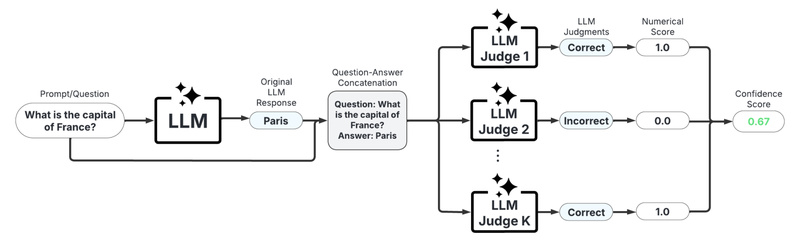

UQLM: Detect LLM Hallucinations with Uncertainty Quantification—Confidence Scoring Made Practical

Large Language Models (LLMs) are transforming how we build intelligent applications—from customer service bots to clinical decision support tools. Yet…