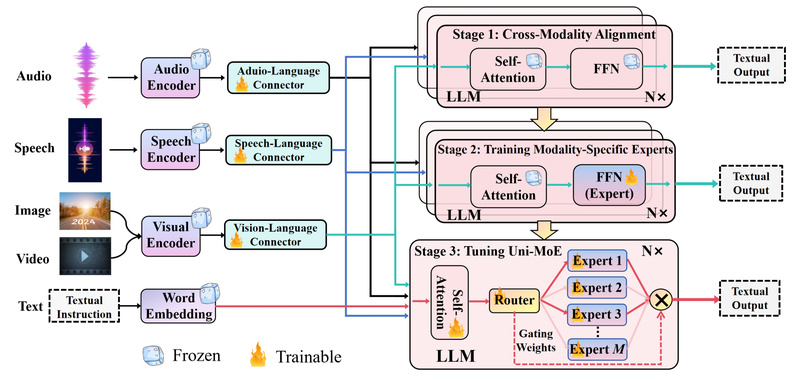

Imagine managing a project that needs to understand speech, analyze images, interpret video frames, and respond to written prompts—all within…

Instruction Tuning

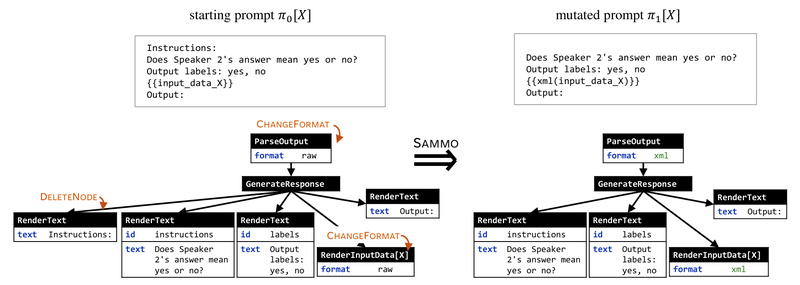

SAMMO: Optimize LLM Prompt Programs Like Code with Structure-Aware, Compile-Time Tuning for RAG, Instruction Refinement, and Prompt Compression

Modern LLM applications increasingly rely on complex, structured prompts—especially in scenarios like Retrieval-Augmented Generation (RAG), instruction-based tasks, and data labeling…

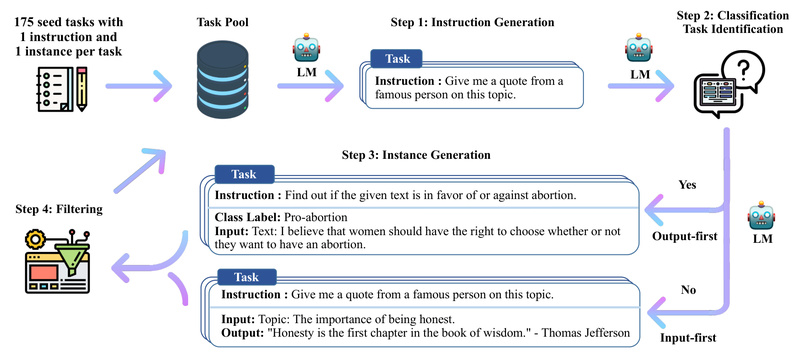

Self-Instruct: Bootstrap High-Quality Instruction Data Without Human Annotations

For teams building or fine-tuning large language models (LLMs), one of the biggest bottlenecks is the scarcity of high-quality, diverse…

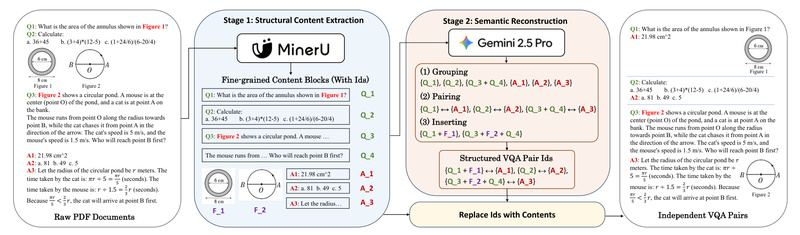

FlipVQA-Miner: Automatically Extract High-Quality Visual QA Pairs from Textbooks for Reliable LLM Training

Large Language Models (LLMs) and multimodal systems increasingly demand high-quality, human-authored supervision data—especially for tasks requiring reasoning, visual understanding, and…

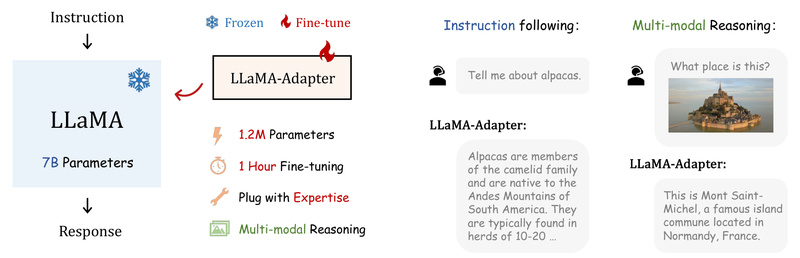

LLaMA-Adapter: Efficiently Transform LLaMA into Instruction-Following or Multimodal AI with Just 1.2M Parameters

If you’re working on a project that requires a capable language model—but lack the GPU budget, time, or infrastructure for…

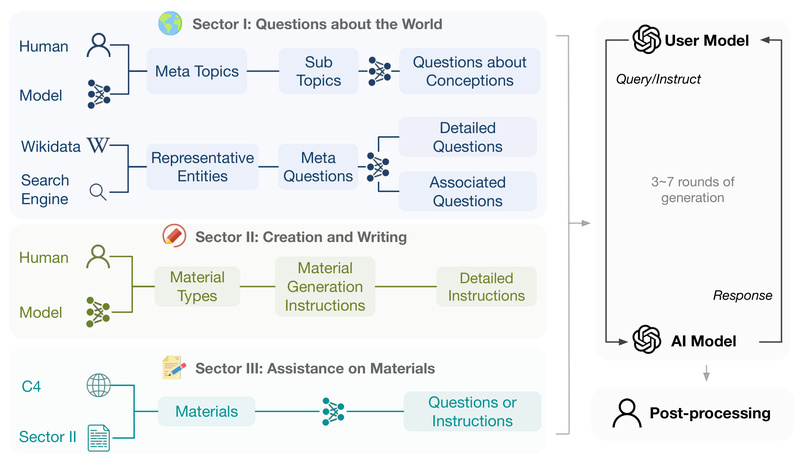

UltraChat: Train Powerful Open-Source Chat Models with 1.5M High-Quality, Privacy-Safe AI Dialogues

If you’re a technical decision-maker evaluating options for building or fine-tuning a conversational AI system, you know that high-quality instruction-following…

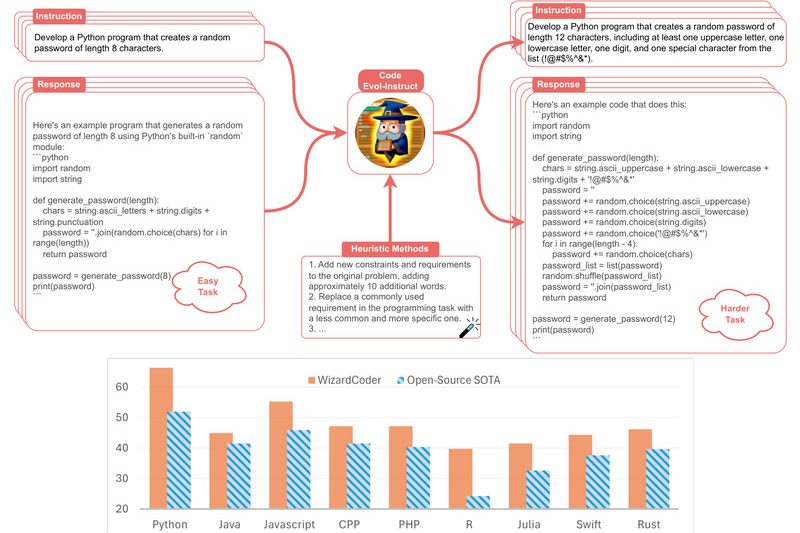

WizardCoder: Open-Source Code LLM That Outperforms ChatGPT and Gemini in Code Generation

WizardCoder is a state-of-the-art open-source Code Large Language Model (Code LLM) that delivers exceptional performance on code generation tasks—often surpassing…

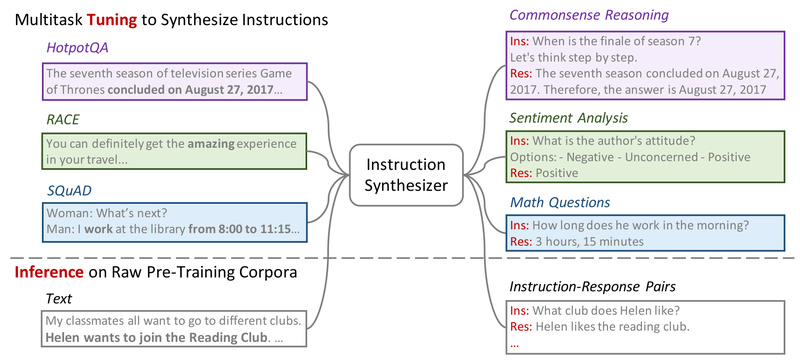

Instruction Pre-Training: Boost Language Model Performance from Day One with Supervised Multitask Pre-Training

Traditional language model (LM) development follows a two-stage process: unsupervised pre-training on massive raw text corpora, followed by instruction tuning…

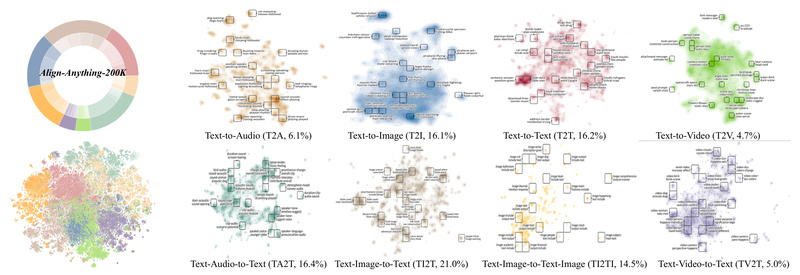

Align Anything: The First Open Framework for Aligning Any-to-Any Multimodal Models with Human Intent

As AI systems grow more capable across diverse data types—text, images, audio, and video—the challenge of aligning them with human…