For teams building real-world AI applications that combine vision and language—whether it’s parsing scanned documents, analyzing instructional videos, or creating…

vision-language modeling

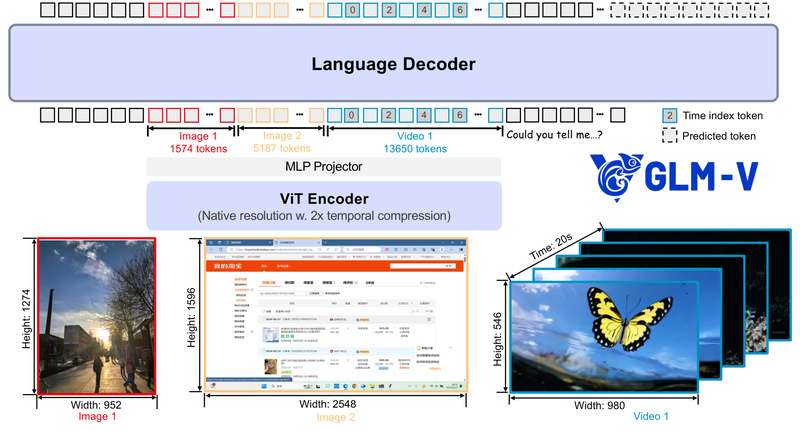

GLM-V: Open-Source Vision-Language Models for Real-World Multimodal Reasoning, GUI Agents, and Long-Context Document Understanding 1899

If your team is building AI applications that need to see, reason, and act—like desktop assistants that interpret screenshots, UI…

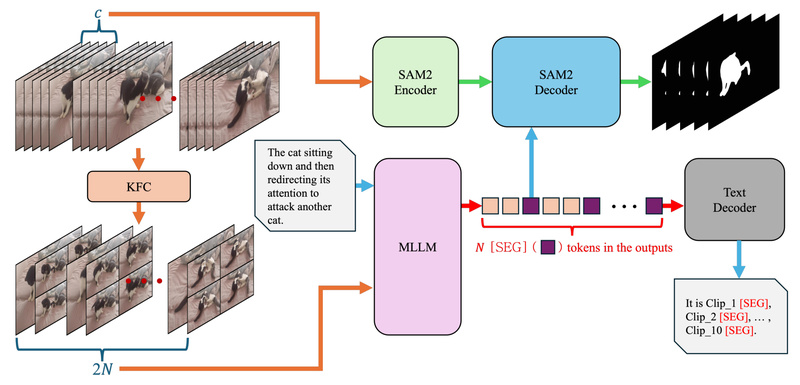

Sa2VA: Unified Vision-Language Model for Accurate Referring Video Object Segmentation from Natural Language 1455

Sa2VA represents a significant leap forward in multimodal AI by seamlessly integrating the strengths of SAM2—Meta’s state-of-the-art video object segmentation…

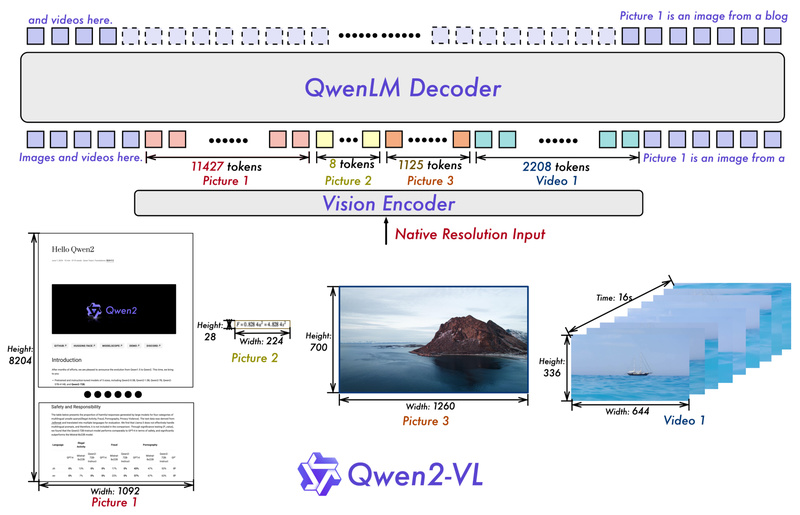

Qwen2-VL: Process Any-Resolution Images and Videos with Human-Like Visual Understanding 17241

Vision-language models (VLMs) are increasingly essential for tasks that require joint understanding of images, videos, and text—ranging from document parsing…

MME: The First Comprehensive Benchmark to Objectively Evaluate Multimodal Large Language Models 17004

Multimodal Large Language Models (MLLMs) have captured the imagination of researchers and developers alike—promising capabilities like generating poetry from images,…

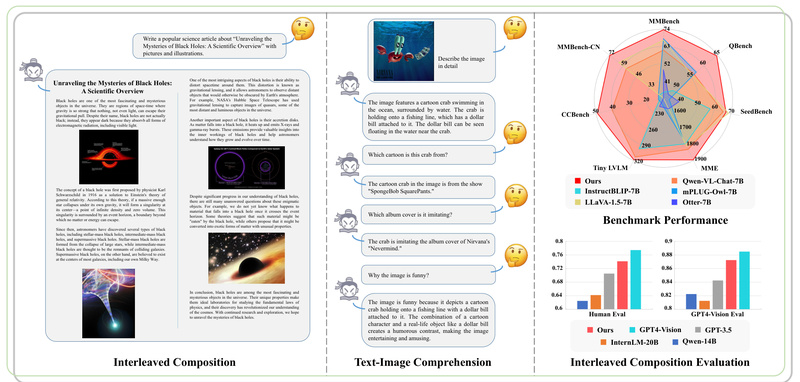

InternLM-XComposer: Generate Rich Text-Image Content and Understand High-Res Visuals with Open, Commercially Free AI 2909

For technical decision-makers evaluating multimodal AI, choosing between closed-source APIs and open alternatives often means trading off control,…

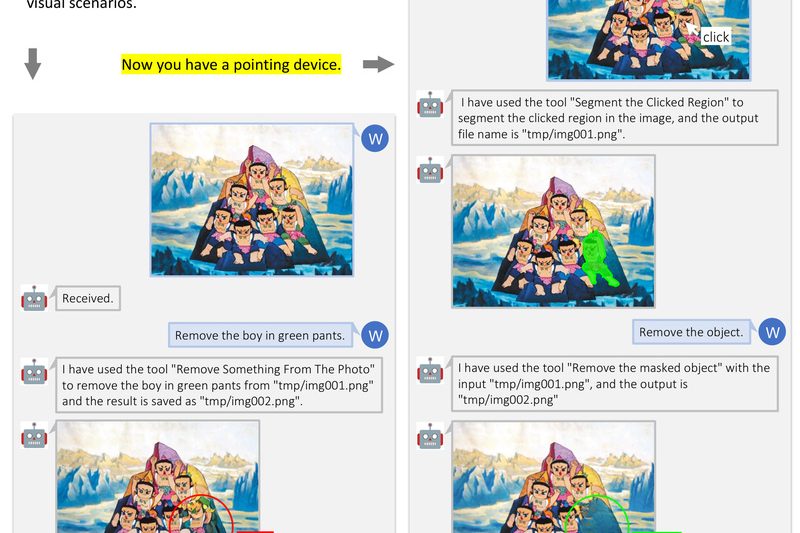

InternGPT: Solve Vision-Centric Tasks with Clicks, Scribbles, and ChatGPT-Level Reasoning 3221

In today’s AI landscape, large language models (LLMs) like ChatGPT have transformed how we interact with software—through natural language. But…

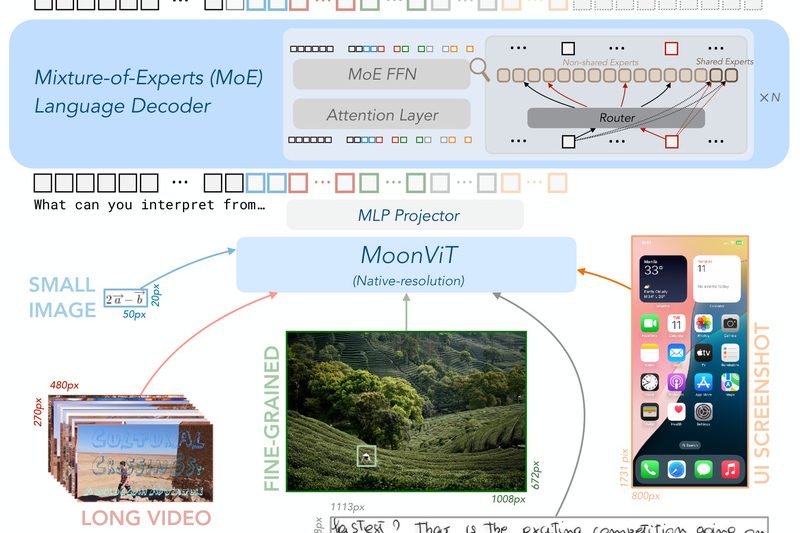

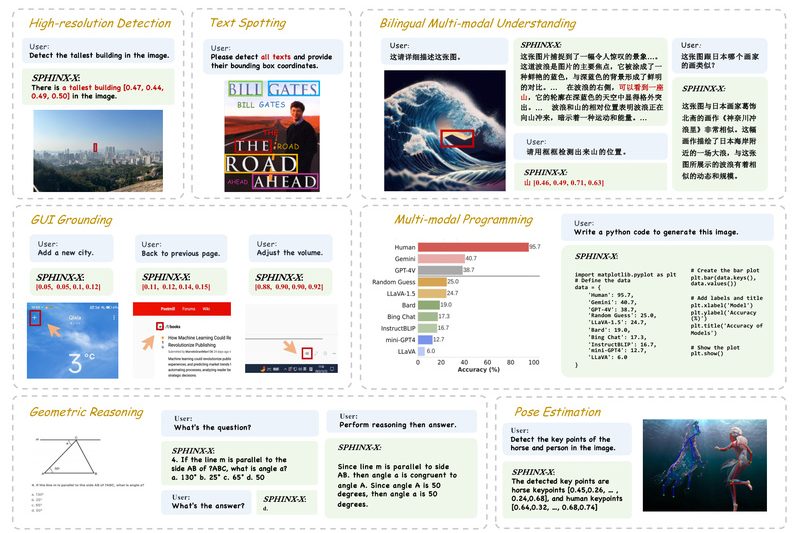

SPHINX-X: Build Scalable Multimodal AI Faster with Unified Training, Diverse Data, and Flexible Model Sizes 2794

SPHINX-X is a next-generation family of Multimodal Large Language Models (MLLMs) designed to streamline the development, training, and deployment of…

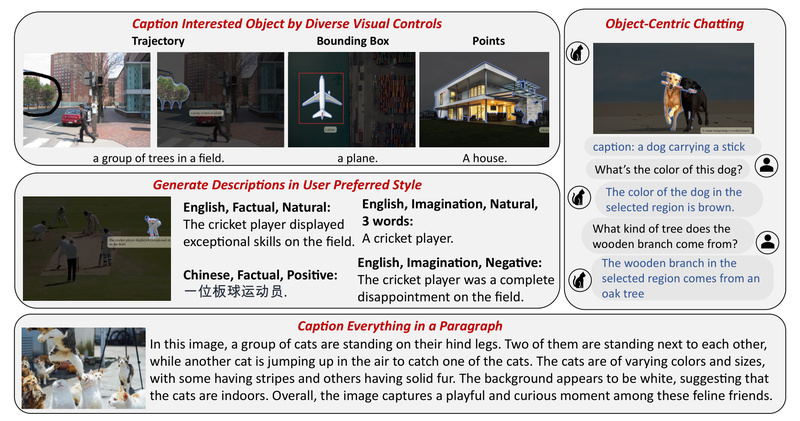

Caption Anything: Interactive, Multimodal Image Captioning Controlled by You 1770

Traditional image captioning systems produce static, one-size-fits-all descriptions—often generic, inflexible, and disconnected from actual user intent. What if you could…

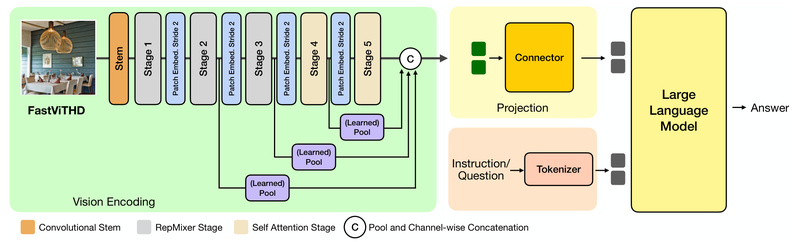

FastVLM: High-Resolution Vision-Language Inference with 85× Faster Time-to-First-Token and Minimal Compute Overhead 7052

Vision Language Models (VLMs) are increasingly central to real-world applications—from mobile assistants that read documents to AI systems that interpret…