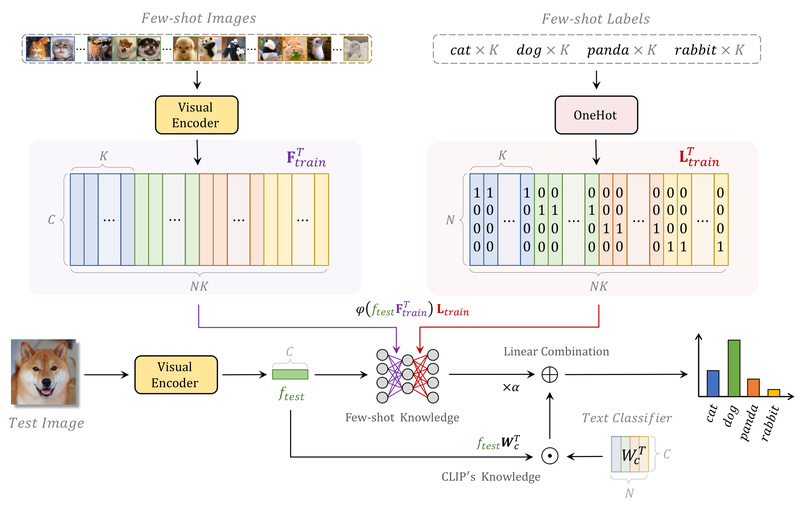

In the era of foundation models, CLIP (Contrastive Language–Image Pretraining) has revolutionized how we approach vision-language tasks—especially zero-shot image classification.…

Zero-shot Learning

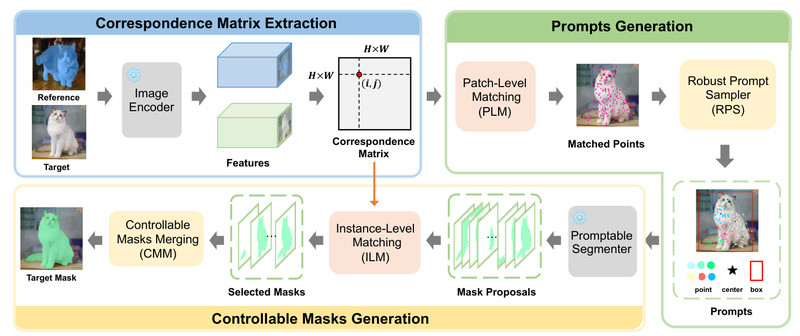

Matcher: One-Shot Segmentation Without Training—Unlock Flexible, Label-Free Perception for Real-World Applications

In modern computer vision workflows, deploying accurate segmentation models often demands large annotated datasets, task-specific architectures, and costly retraining—barriers that…

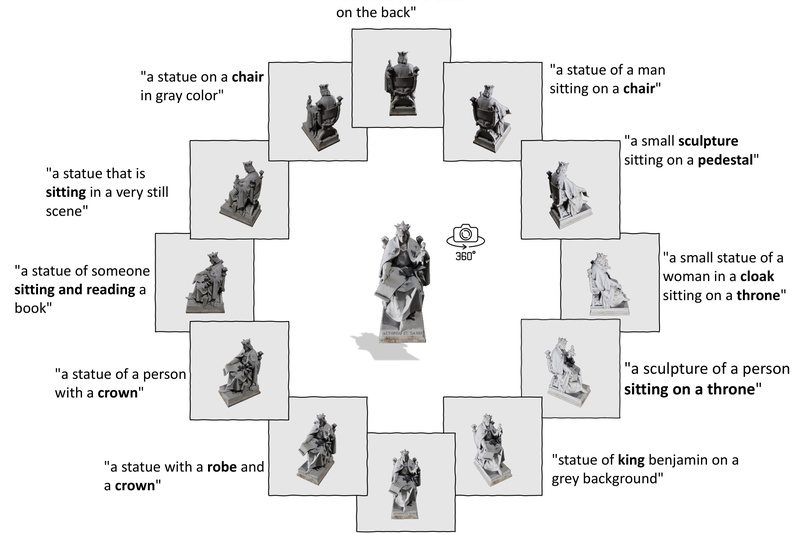

ULIP-2: Scalable Multimodal 3D Understanding Without Manual Annotations

Imagine building a system that can understand 3D objects as intuitively as humans do—recognizing a chair from its point cloud,…