Most modern Vision-Language Models (VLMs) treat images as static inputs—processed once, then reasoned about using purely text-based logic. But humans don’t think that way. When solving a complex diagram, inspecting a medical scan, or verifying a geometric proof, we constantly loop between looking, interpreting, zooming in, comparing regions, and revising our conclusions. This active, iterative visual reasoning is what DeepEyes aims to replicate in AI.

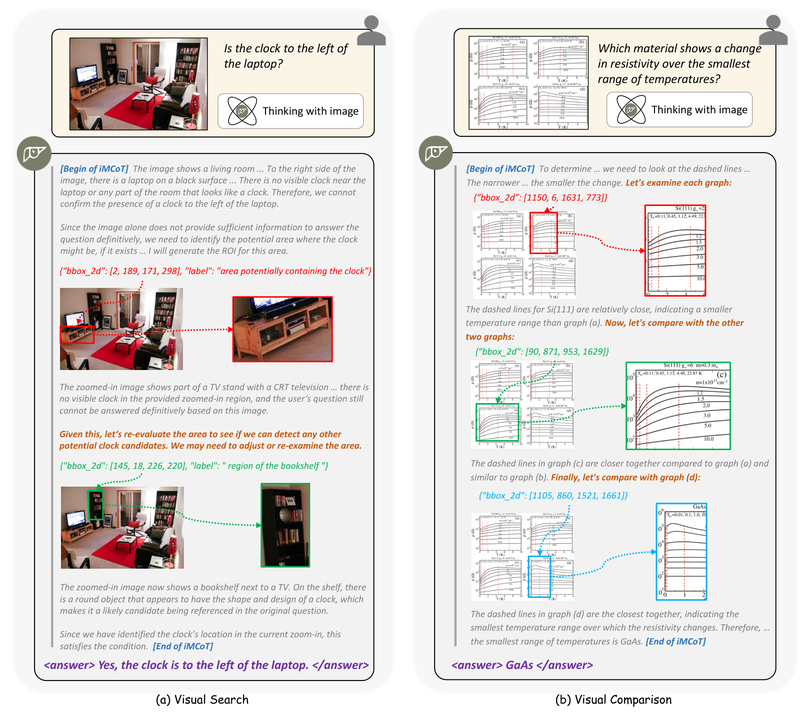

DeepEyes is an open-source project that equips VLMs with the ability to “think with images” through end-to-end reinforcement learning (RL). Unlike conventional approaches that rely on supervised fine-tuning or external vision tools, DeepEyes learns to dynamically interact with visual content as part of its reasoning chain—natively and autonomously. The result? Stronger performance on fine-grained perception, reduced hallucinations, better mathematical reasoning, and emergent behaviors that mirror human visual cognition, such as targeted zooming or cross-region comparison.

For technical decision-makers in product, research, or engineering teams, DeepEyes offers a pathway to build AI systems that don’t just “see” but truly reason with visual evidence—making it especially valuable in domains where accuracy, grounding, and interpretability are non-negotiable.

How DeepEyes Rethinks Visual Reasoning

From Passive Perception to Active “Thinking with Images”

Traditional VLMs encode an image once and generate a response based on a fixed visual embedding. In contrast, DeepEyes treats reasoning as an interactive trajectory: the model can invoke visual tools (like zooming into a region of interest) mid-reasoning, observe the updated visual input, and continue refining its answer. This capability—called “thinking with images”—is not hard-coded but emerges during RL training.

Crucially, this behavior is learned without any supervised fine-tuning (SFT) or cold-start data. Instead, DeepEyes uses outcome-based rewards to guide the model toward successful reasoning paths, allowing tool-use strategies to evolve organically.

Reinforcement Learning Without External Dependencies

DeepEyes builds on the VeRL framework and uses a foundation model like Qwen-2.5-VL-7B as its base. Through RL, it optimizes both language generation and visual tool invocation in a unified loop. The system includes:

- A tool-use-oriented data selection mechanism that prioritizes samples where visual interaction leads to better outcomes.

- A reward strategy based on a “judge” LLM (e.g., Qwen-2.5-72B served via vLLM) that evaluates final answer correctness.

- Native support for interleaved multimodal reasoning, where images and text alternate dynamically within a single response.

Importantly, DeepEyes does not depend on external, specialized vision models for tool execution. The visual grounding and zooming capabilities are handled internally, leveraging the VLM’s own perception strengths.

Key Strengths for Technical Teams

1. No Supervised Fine-Tuning Required

DeepEyes skips the costly and brittle SFT phase. Instead, it learns effective visual reasoning directly from reward signals—a significant advantage when high-quality reasoning traces are scarce.

2. Proven Gains Across Multiple Benchmarks

Experiments show consistent improvements on:

- High-resolution visual QA (e.g., V*, ArxivQA)

- Visual grounding (measured by IoU increases during training)

- Hallucination mitigation (answers better anchored in visual evidence)

- Mathematical reasoning (especially geometry and diagram-based problems)

3. Emergent Human-Like Reasoning Patterns

During training, DeepEyes develops sophisticated strategies such as:

- Scanning for small or partially occluded objects

- Comparing visual features across distant image regions

- Using

image_zoom_in_toolto verify preliminary conclusions

These behaviors aren’t programmed—they emerge as the model learns to maximize reward, offering a glimpse into scalable artificial visual cognition.

4. Modular and Extensible Architecture

Built as a plugin atop VeRL, DeepEyes supports:

- Multiple RL algorithms (PPO, GRPO, Reinforce++)

- Custom multimodal tools via a simple

ToolBaseinterface - Hybrid training that mixes agentic and non-agentic data

This makes it adaptable to new domains without rewriting core infrastructure.

Ideal Use Cases

DeepEyes shines in scenarios where passive image understanding falls short:

- Technical Document Analysis: Extracting structured insights from engineering schematics, research papers with complex figures, or financial reports with charts.

- AI Tutoring Systems: Explaining visual math problems by “showing work” through zoomed-in steps and region comparisons.

- Medical or Industrial Inspection: Assisting experts by grounding diagnostic or quality-control answers in specific image regions, reducing ambiguous or fabricated responses.

- Enterprise Knowledge Bases: Enabling RAG systems that can cite visual evidence from internal documents—not just text passages.

In all these cases, DeepEyes doesn’t just answer questions—it builds traceable, visually grounded reasoning chains that users can audit and trust.

Getting Started: Practical Workflow for Engineering Teams

Despite its advanced capabilities, DeepEyes provides a structured onboarding path:

- Environment Setup: Run the provided installation script (

scripts/install_deepeyes.sh) after installing VeRL. - Launch a Judge LLM Server: Serve a large model like Qwen-2.5-72B via vLLM to evaluate answer quality during RL.

- Prepare Data: Use the provided high-resolution datasets (available on Hugging Face), ensuring each sample includes an

env_namefield (e.g.,visual_toolbox_v2) to enable tool use. - Start Distributed Training: Use the example scripts (

final_merged_v1v8_thinklite.sh) with a Ray cluster across multiple nodes.

For teams wanting to add custom tools (e.g., OCR, measurement rulers), they only need to:

- Subclass

ToolBaseand implementexecute()andreset() - Register the tool in the agent module

- Annotate training data with the new

env_name

This design ensures that DeepEyes remains both powerful and engineer-friendly.

Important Limitations and Resource Requirements

While promising, DeepEyes isn’t a drop-in solution for all teams:

- Hardware Demands: Training the 7B variant requires at least 32 GPUs (e.g., 4 nodes × 8 GPUs) and 1200+ GB CPU RAM per node due to high-resolution image processing. The 32B version needs double the GPU count.

- Dependency on VeRL: DeepEyes is tightly integrated with the VeRL framework; teams must adopt its ecosystem.

- Predefined Tool Scope: Tool usage is controlled per sample via the

env_namemetadata—dynamic tool discovery isn’t supported out of the box. - Custom Evaluation Pipeline: Final assessment involves automatic bounding box processing (detailed in

EVALUATION.md), which adds complexity to benchmarking.

These constraints mean DeepEyes is best suited for well-resourced research labs or product teams building mission-critical visual reasoning systems—not for lightweight prototyping.

Summary

DeepEyes represents a meaningful shift in how AI systems handle visual information: from passive observation to active, iterative reasoning. By enabling VLMs to “think with images” through pure reinforcement learning, it delivers measurable gains in accuracy, grounding, and reasoning fidelity—without relying on supervised data or external models.

For technical leaders evaluating next-generation multimodal systems, DeepEyes offers a compelling blueprint for building AI that doesn’t just describe images but uses them as tools for thought. If your use case involves high-stakes visual analysis, complex diagrams, or the need to eliminate ungrounded hallucinations, DeepEyes is worth serious consideration.

With its modular design, open-source availability, and strong empirical results, it stands as one of the most promising approaches to human-like visual reasoning in modern AI.