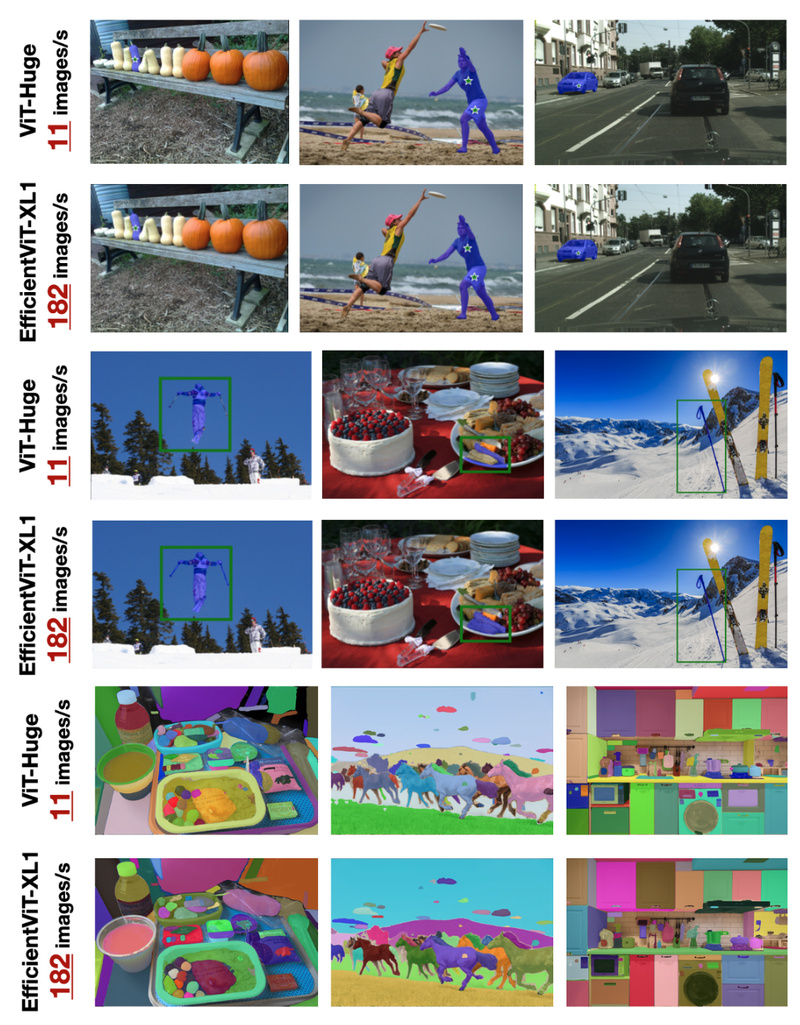

If you’ve worked with Meta’s Segment Anything Model (SAM), you know its power—and its pain points. While SAM delivers state-of-the-art zero-shot segmentation across diverse domains, its computational demands make real-time or edge deployment impractical. Enter EfficientViT-SAM: a re-engineered version of SAM that achieves 48.9× faster inference on an A100 GPU while preserving (and in some cases, even exceeding) the original model’s accuracy.

Developed by the MIT HAN Lab, EfficientViT-SAM replaces SAM’s heavyweight Vision Transformer (ViT-H) image encoder with the highly optimized EfficientViT backbone. The prompt encoder and mask decoder remain untouched—ensuring full compatibility with existing SAM workflows and prompting interfaces (points, boxes, or masks). This means you can drop EfficientViT-SAM into your pipeline with minimal refactoring and immediately reap massive speed gains.

For technical decision-makers, researchers, and engineers building applications that depend on fast, reliable segmentation—especially under hardware or latency constraints—EfficientViT-SAM offers a rare combination: no accuracy trade-off, dramatically lower latency, and seamless SAM-style usability.

Blazing Speed Without Sacrificing Quality

The standout achievement of EfficientViT-SAM isn’t just speed—it’s speed without compromise.

The original SAM-ViT-H, while accurate, requires substantial GPU memory and compute time, making it unsuitable for real-time systems or resource-constrained devices. EfficientViT-SAM addresses this bottleneck by leveraging the EfficientViT architecture, which was explicitly designed for high-resolution dense prediction tasks with minimal computational overhead.

Through a two-stage training process—first knowledge distillation from SAM-ViT-H to EfficientViT, followed by end-to-end fine-tuning on the SA-1B dataset—EfficientViT-SAM retains SAM’s zero-shot generalization capabilities while slashing inference time.

Benchmarked with TensorRT on an NVIDIA A100 GPU, EfficientViT-SAM delivers a 48.9× measured speedup over SAM-ViT-H. This isn’t theoretical: it translates directly into lower cloud costs, faster user feedback loops, and the ability to run segmentation on laptops or edge hardware that previously couldn’t support SAM.

Where EfficientViT-SAM Shines: Real-World Applications

EfficientViT-SAM isn’t just a lab curiosity—it’s already proving its value in demanding real-world settings:

- Medical Imaging: The MedficientSAM system, built on EfficientViT-SAM, won 1st place in the CVPR 2024 Segment Anything in Medical Images on Laptop Challenge. This demonstrates its viability in clinical environments where both accuracy and portability are critical.

- Edge AI & Robotics: Integrated into Grounding DINO 1.5 Edge, EfficientViT-SAM enables fast, open-set object detection and segmentation on edge devices—ideal for drones, autonomous systems, or industrial inspection.

- Interactive Tools: With an official online demo available, developers can test segmentation interactively, making it easier to prototype user-facing applications like photo editors, annotation tools, or AR experiences.

- Resource-Constrained Deployments: Whether running on a laptop, embedded GPU, or cost-sensitive cloud instance, EfficientViT-SAM dramatically reduces the barrier to deploying high-quality segmentation at scale.

How to Get Started Quickly

Adopting EfficientViT-SAM is straightforward, especially if you’re already familiar with SAM:

- Access the Code & Models: The official repository (https://github.com/mit-han-lab/efficientvit) provides pre-trained EfficientViT-SAM models, along with scripts for inference, evaluation, and visualization.

- Use Standard SAM Prompts: Since the prompt encoder and mask decoder are unchanged, you can use the same point, box, or mask inputs you’d use with vanilla SAM.

- Try the Online Demo: A live demo at https://evitsam.hanlab.ai/ lets you experiment without any setup.

- Deploy with TensorRT: To achieve the best performance (e.g., the reported 48.9× speedup), optimize your model with TensorRT—a key step for production deployments.

The codebase includes clear instructions for setting up a Conda environment, installing dependencies, and running inference—making it accessible even for teams without deep systems optimization expertise.

Key Limitations and Considerations

While EfficientViT-SAM delivers impressive gains, technical decision-makers should be aware of a few constraints:

- Codebase Maintenance: As of September 2025, the EfficientViT repository is no longer actively maintained. Future developments from the MIT HAN Lab will focus on DC-Gen, a newer framework. However, the existing EfficientViT-SAM models and code remain fully functional and production-ready.

- Hardware Optimization Dependency: The headline 48.9× speedup assumes TensorRT acceleration on high-end GPUs like the A100. On CPUs or non-optimized GPUs, speedups will be less dramatic—though still significant compared to SAM-ViT-H.

- Architecture Lock-In: EfficientViT-SAM retains SAM’s original prompt encoder and mask decoder. While this ensures compatibility, it also means you can’t easily modify those components without retraining or risking performance degradation.

These limitations don’t diminish its value—they simply define the scope where it excels: drop-in SAM replacement for speed-critical applications.

Making the Right Choice: When to Pick EfficientViT-SAM

Choose EfficientViT-SAM if your project requires:

- SAM-level segmentation quality with zero-shot generalization,

- Dramatically faster inference (especially on GPU-accelerated systems),

- Deployment on laptops, edge devices, or cost-sensitive cloud infrastructure,

- Minimal refactoring of existing SAM-based pipelines.

It’s particularly well-suited for applications in telemedicine, mobile vision apps, real-time video analysis, and any scenario where latency or compute budget is a hard constraint—but where cutting corners on segmentation accuracy isn’t an option.

Summary

EfficientViT-SAM redefines what’s possible with the Segment Anything Model. By swapping in a purpose-built EfficientViT backbone, it achieves unprecedented speed without sacrificing the accuracy that made SAM a landmark in computer vision. For technical teams looking to deploy high-quality segmentation in real-world, resource-aware environments, EfficientViT-SAM isn’t just an alternative—it’s the pragmatic, high-performance upgrade path SAM always needed.