Creating photorealistic 3D models of real-world objects typically demands dozens—or even hundreds—of input images captured from carefully calibrated viewpoints. This requirement poses a major bottleneck in practical applications like e-commerce product digitization, augmented reality content creation, or rapid prototyping in design workflows, where collecting dense, high-quality multi-view data is often infeasible.

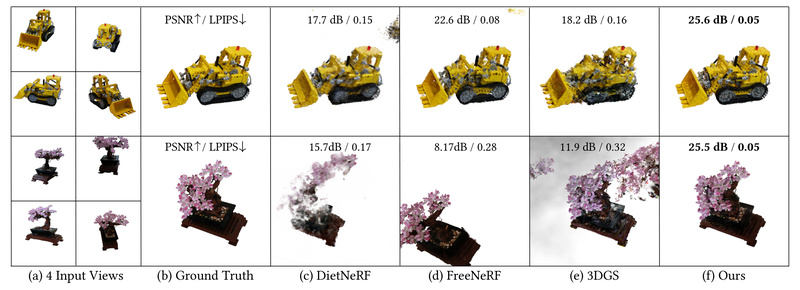

GaussianObject changes this paradigm. Introduced at SIGGRAPH Asia 2024, it enables high-fidelity 3D object reconstruction from only four casually captured images, even when precise camera poses are unavailable. By combining geometric priors, Gaussian splatting, and diffusion-based inpainting, GaussianObject delivers results that significantly outperform prior state-of-the-art methods—while lowering the barrier to entry for real-world deployment.

Whether you’re building a 3D asset pipeline for an online store or enabling users to scan physical objects with their smartphones, GaussianObject offers a compelling blend of quality, minimal data requirements, and robustness under sparse-view constraints.

Why Four Views Are Enough—And Why That Matters

Reconstructing 3D geometry from sparse views has long been fraught with two core challenges:

- Insufficient multi-view consistency: With only a handful of images, traditional structure-from-motion (SfM) pipelines struggle to establish reliable correspondences.

- Missing geometry and texture: Critical parts of the object—especially those occluded in all input views—simply cannot be observed, leading to holes, distortions, or implausible shapes.

GaussianObject tackles both problems head-on through a two-stage architecture that first builds a geometrically coherent foundation, then intelligently hallucinates plausible missing details using learned priors.

This approach unlocks scenarios previously deemed too data-constrained:

- A user snaps four photos of a coffee mug from different angles with their phone and instantly gets a rotatable 3D model.

- An e-commerce platform auto-generates 3D previews for new inventory using only existing product catalog images.

- A museum digitizes fragile artifacts without requiring complex multi-camera rigs.

The ability to work with such minimal input transforms 3D reconstruction from a specialist task into a scalable, user-friendly capability.

Core Innovations That Deliver Quality and Flexibility

Structure-Aware Initialization with Visual Hulls

Rather than initializing 3D Gaussians randomly or from noisy depth maps, GaussianObject constructs a visual hull—a coarse geometric proxy derived from object masks and estimated (or known) camera poses. This hull enforces basic shape plausibility early in optimization, dramatically improving multi-view consistency from the outset.

Crucially, it also employs floater elimination, a refinement step that removes stray Gaussians floating in empty space—common artifacts in sparse-view settings. The result is a clean, structured coarse representation that serves as a solid base for subsequent enhancement.

Diffusion-Based Gaussian Repair

Even with a good initial shape, four views can’t capture every surface. GaussianObject addresses this with a novel Gaussian repair model built on Stable Diffusion and ControlNet.

Here’s how it works:

- The system generates “corrupted” renderings by intentionally occluding parts of the current Gaussian model (via a “leave-one-out” strategy).

- These synthetic incomplete views are paired with full-reference images to train a diffusion model that learns to restore missing geometry and texture.

- Once trained, the repair model is applied selectively—only to viewpoints where the 3D representation is likely incomplete—providing targeted refinement without over-smoothing observed regions.

This fusion of explicit geometry and generative priors enables reconstruction quality previously unattainable with so few inputs.

COLMAP-Free Operation for Real-World Usability

Many 3D reconstruction systems rely on COLMAP or similar SfM tools to compute accurate camera poses—a step that often fails with only four casually captured images.

GaussianObject introduces a COLMAP-free variant that leverages DUSt3R or MASt3R—recent foundation models for monocular and multi-view depth and pose estimation. By integrating these pose predictors directly into the pipeline, GaussianObject can reconstruct objects from unposed, casually captured images without manual calibration.

This makes it uniquely suited for consumer-facing applications where users lack technical expertise or controlled capture environments.

Practical Workflow: From Four Photos to a Polished 3D Model

Using GaussianObject is structured but accessible. The typical workflow for a new dataset includes:

- Data Preparation: Organize four RGB images in a folder. Optionally provide object masks (automatically generated via SAM if not available) and camera poses (or let DUSt3R estimate them).

- Visual Hull Construction: Run

visual_hull.pyto generate an initial point cloud constrained by object silhouettes. - Coarse Gaussian Splatting: Optimize an initial 3D Gaussian model using the visual hull as initialization.

- Leave-One-Out Analysis: Simulate missing views to identify regions needing repair.

- LoRA Fine-Tuning: Adapt a ControlNet-based diffusion model to the specific object using synthetic training pairs.

- Gaussian Repair & Final Optimization: Apply the trained repair model and refine the 3D Gaussians to produce the final high-quality output.

For COLMAP-free usage, pose estimation via pred_poses.py replaces manual calibration, and the pipeline uses the estimated poses throughout—demonstrating end-to-end usability with minimal human intervention.

All steps are scripted and modular, enabling both out-of-the-box usage and customization for specialized needs.

Limitations and Practical Considerations

While powerful, GaussianObject isn’t a magic bullet. Users should be aware of the following constraints:

- Object masks are required, though they can be auto-generated using Segment Anything (SAM)—adding a preprocessing step but removing manual labeling.

- View diversity matters: The four input images should cover the object from sufficiently distinct angles. Front-only views will still yield incomplete reconstructions.

- GPU memory intensive: Training the repair model and optimizing Gaussians requires a capable GPU (ideally ≥16GB VRAM). A Colab notebook is provided for lower-resource testing.

- Preprocessing overhead: Running SAM for masks, DUSt3R for poses, and ZoeDepth for depth maps adds setup complexity—but scripts automate most of this.

These trade-offs are reasonable given the problem’s difficulty, and the team provides extensive tooling to mitigate them.

Summary

GaussianObject sets a new standard for sparse-view 3D object reconstruction by delivering high-quality, view-consistent results from just four images—with or without accurate camera poses. Its integration of geometric initialization, floater-aware optimization, and diffusion-based repair creates a robust pipeline that bridges the gap between academic research and real-world applicability.

For technical teams building 3D digitization tools, AR/VR experiences, or generative design systems, GaussianObject offers a rare combination: minimal data requirements, production-ready flexibility, and state-of-the-art visual fidelity. With open-source code, pre-trained models, and clear documentation, it’s ready for integration into next-generation 3D workflows today.