Creating personalized, visually consistent characters is a common need across gaming, animation, virtual avatars, and digital storytelling—but until recently, doing so efficiently and reliably has been a major challenge. Traditional approaches either require extensive per-character fine-tuning (which breaks text control) or rely on older U-Net-based diffusion models that struggle with generalization and image quality.

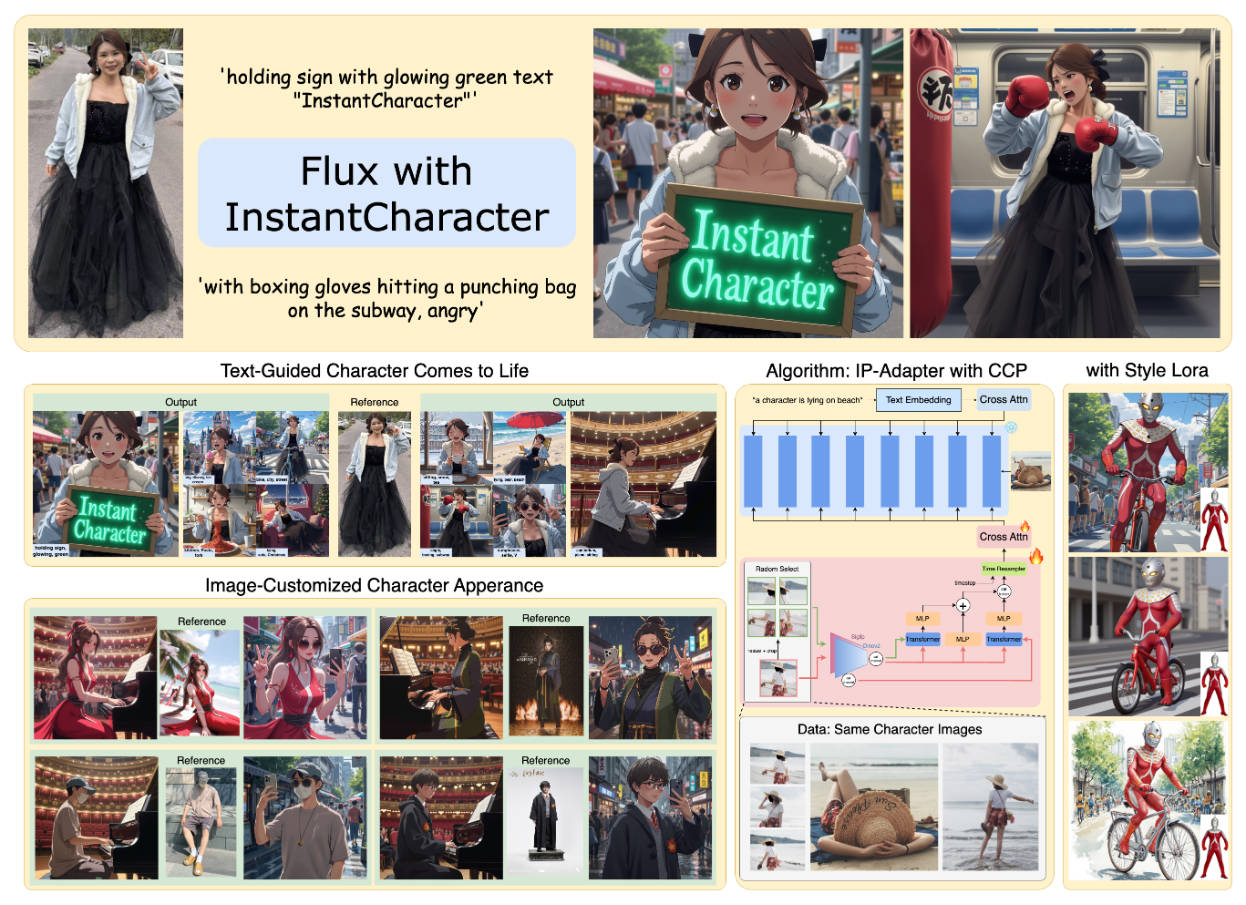

Enter InstantCharacter: a breakthrough, tuning-free framework that lets you generate high-fidelity, pose- and style-diverse images of a character from just a single reference photo. Built on a modern diffusion transformer architecture, InstantCharacter preserves identity consistency while fully respecting textual prompts—no retraining, no model collapse, and no loss of creative control.

Whether you’re a developer building an avatar generator, a designer exploring character variations, or a researcher pushing the limits of personalized image synthesis, InstantCharacter offers a production-ready solution that’s both powerful and surprisingly easy to use.

Why InstantCharacter Stands Out

Solves Core Problems in Character Personalization

Most existing character customization methods fall into two camps—both flawed:

- Learning-based (e.g., U-Net adapters): Often overfit to training data, failing on unseen poses, outfits, or art styles.

- Optimization-based (e.g., textual inversion, DreamBooth): Require per-subject fine-tuning, which degrades prompt fidelity and isn’t scalable.

InstantCharacter bridges this gap by eliminating fine-tuning entirely while delivering superior visual quality and generalization.

Three Technical Advantages Backed by Design

- Open-Domain Personalization with High Fidelity

InstantCharacter works across wildly different appearances, clothing, poses, and artistic styles—all while keeping the character’s identity intact. This isn’t limited to human faces; it extends to full-body characters in dynamic scenes. - Scalable Adapter for Diffusion Transformers

Instead of modifying the base model, InstantCharacter introduces a lightweight, stackable transformer-based adapter. This module processes rich character features from dual vision encoders (SigLIP and DINOv2) and injects them into the latent space of FLUX.1, a state-of-the-art diffusion transformer. The result? Seamless integration without architectural overhaul. - Dual-Structure Training Data for Balanced Learning

The method is trained on a massive, curated dataset of over 10 million samples, split into:- Paired data: Multi-view images of the same character (enforces identity consistency)

- Unpaired data: Diverse text-image pairs (preserves textual controllability)

This dual-path training ensures the model doesn’t “forget” how to follow prompts while staying faithful to the subject.

Practical Use Cases

InstantCharacter isn’t just a research prototype—it’s built for real-world workflows:

- Game Development: Generate hundreds of in-game poses or outfits for a hero character from one concept art image.

- Digital Storytelling: Create consistent illustrations of a protagonist across scenes (“a girl playing guitar in the rain,” “the same girl reading in a café”).

- Style Transfer with LoRAs: Instantly adapt your character into iconic animation styles—like Studio Ghibli or Makoto Shinkai—using pre-trained style LoRAs, all without retraining.

- AI-Powered Design Tools: Enable creators to sketch a character once and generate variations on demand, accelerating concept iteration.

Because it’s tuning-free, you can onboard new characters instantly—ideal for apps serving thousands of users, each with their own avatar.

Getting Started Is Surprisingly Simple

Despite its advanced architecture, using InstantCharacter requires only a few lines of Python:

- Load the base FLUX.1 diffusion model.

- Initialize the InstantCharacter adapter with pre-trained weights and dual image encoders.

- Provide a single reference image (even on a white background).

- Generate new images using natural language prompts.

Optional: Apply a style LoRA by specifying a path and a trigger phrase like “ghibli style.” The framework handles the rest.

Minimal ML expertise is needed—just basic Python and access to a GPU with ~22GB VRAM (or less, thanks to offload inference support).

Limitations and Considerations

While powerful, InstantCharacter isn’t magic:

- Animal characters may show reduced stability compared to human figures, as noted by the authors.

- Hardware requirements: Full inference runs best on 22GB+ VRAM GPUs, though recent optimizations enable offloaded execution on lower-memory systems.

- Image quality depends on the reference photo—clean, well-lit, front-facing images yield the most consistent results.

These are practical constraints, not dealbreakers, and the team provides clear guidance for mitigation.

Summary

InstantCharacter redefines what’s possible in character-driven image generation. By combining a scalable adapter architecture, dual-encoder feature fusion, and a massive balanced dataset, it delivers tuning-free personalization that’s both controllable and visually stunning. For teams tired of trading off identity consistency for prompt flexibility—or vice versa—this framework offers a compelling, production-ready path forward.

With open-source code